1. Introduction

Tropical cyclones (TCs) are among the most devastating natural hazards globally, often causing heavy loss of life, widespread infrastructure damage, and long-term socioeconomic disruption in coastal regions. These impacts are especially pronounced in the tropical Americas and along the U.S. Gulf Coast, where high population density, rapid coastal development, and limited evacuation flexibility heighten vulnerability to extreme storm events [10,26]. As coastal populations expand and anthropogenic climate change intensifies the thermodynamic environment, TCs are projected to become stronger, wetter, and potentially more destructive [1,17,18]. These trends underscore the increasing need for reliable seasonal hurricane outlooks, which serve as foundational information for preparedness planning, emergency management, insurance and reinsurance decisions, and resource allocation across government and private sectors.

Seasonal TC activity is strongly modulated by large-scale climate variability, and decades of research have established robust relationships between storm frequency and indicators such as the El Niño–Southern Oscillation (ENSO), Atlantic sea-surface temperature (SST) anomalies, vertical wind shear, tropospheric humidity, and the meridional overturning circulation [2,7,11]. ENSO in particular is among the most influential predictors: El Niño events suppress Atlantic TCs through enhanced vertical wind shear and subsidence, whereas La Niña events tend to enhance development by reducing shear and moistening the Atlantic Main Development Region (MDR) [14,32]. Atlantic multidecadal variability, such as the Atlantic Multidecadal Oscillation (AMO), also contributes substantially to long-term TC modulation through changes in SSTs, thermodynamic structure, and Sahel rainfall [8,31]. These well-documented climate–TC linkages have motivated the widespread use of statistical forecasting systems grounded in large-scale climate indices.

Operational seasonal TC forecasts by NOAA, CSU, and other research groups typically rely on linear or generalized linear regression models using indicators such as MDR SST anomalies, ENSO indices, vertical wind shear, sea-level pressure anomalies, and SST gradient patterns [15,16,27]. These approaches benefit from transparency, physical interpretability, and computational simplicity, and have demonstrated considerable skill relative to climatology. More recent statistical advances, including Lasso regularization, ridge regression, and systematic predictor screening, have further improved preseason forecast performance [5,29]. Nonetheless, the overwhelming majority of these methods focus on basin-wide activity.

Despite substantial progress, key gaps remain in our understanding and prediction of regional TC activity. The Gulf of Mexico, Caribbean Sea, and wider Atlantic basin each respond differently to ENSO, the Atlantic Meridional Mode (AMM), the AMO, and MDR thermodynamics [19,32,34]. For instance, Caribbean TC activity is especially sensitive to ENSO-induced vertical wind shear, whereas Gulf hurricanes are more strongly influenced by local SST anomalies, the Loop Current, and mesoscale oceanic features [22,28]. These spatial variations imply that basin-wide predictors do not necessarily translate into accurate regional forecasts. Only a limited number of studies have attempted regional or landfall-focused predictions using logistic regression or analog methods [4,33], and these typically employ small predictor sets and inconsistent validation frameworks.

A second major gap concerns prediction across different intensity thresholds. Although tropical storms, hurricanes, and major hurricanes share some common environmental drivers, the development of major hurricanes is especially sensitive to thermodynamic structure, potential intensity, inner-core processes, and favorable upper-ocean conditions [6,35]. Few seasonal forecast systems evaluate skill simultaneously across intensity categories, limiting our understanding of why prediction skill varies with storm strength and what role climate predictors play in modulating major hurricane counts.

The rise of machine-learning (ML) methods has introduced new opportunities for capturing nonlinear climate–TC relationships that traditional linear models cannot resolve. Neural networks, random forests, gradient-boosted trees, and analog-style k-nearest neighbors have all been applied in preliminary or experimental forms to seasonal TC prediction [3,13,23]. These techniques in principle allow more flexible modeling of predictor interactions, such as combined ENSO–SST–shear influences or compound thermodynamic effects. Yet existing ML studies vary widely in predictor selection, typically test only one algorithm, and rarely employ operationally realistic cross-validation designs. Reported skill is often comparable to or marginally better than traditional regression models, but the lack of standardized experimental frameworks has prevented clear scientific conclusions regarding whether ML methods reliably outperform simpler, well-regularized statistical techniques, especially under small-sample constraints.

Collectively, these gaps motivate a unified, systematic evaluation of multiple forecast models, regional domains, intensity thresholds, and predictor sets under consistent data and validation conditions. This study addresses these needs by developing and testing four forecasting approaches—Lasso regression, k-nearest neighbors (KNN), artificial neural networks (ANN), and XGBoost—across nine region–intensity combinations within the Atlantic basin. We draw on HURDAT2 best-track data and National Hurricane Center (NHC) seasonal maps for target definitions, and pair these with 34 monthly climate predictors from NOAA and NASA GISS datasets, including MDR latent heat flux (LHF) empirical orthogonal function (EOF) patterns, ENSO indices, global SST fields, and large-scale circulation metrics. A 30-year sliding cross-validation window approximates realistic operational constraints and allows consistent comparison of linear and nonlinear climate–TC relationships.

The goals of this work are to:

1) quantify how seasonal predictive skill varies across regions and intensity thresholds;

2) evaluate the relative contributions and stability of large-scale climate predictors; and

3) determine whether ML approaches offer robust, repeatable improvements over traditional statistical models for seasonal TC forecasting.

By integrating regional, intensity-specific, and multi-model analyses within a unified methodological framework, this study contributes new insight into the structure of climate predictability for Atlantic TCs and provides a comparative foundation for improving regional seasonal hurricane outlooks in a warming climate.

2. Data and Methods

2.1. Data

Historical tropical cyclone (TC) activity from 1950–2024 was compiled using the HURDAT2 best-track archive [12], supplemented by seasonal TC track maps produced by the National Hurricane Center (NHC). For each season and sub-basin, the number of TCs was manually tabulated and stratified by maximum lifetime intensity following the Saffir–Simpson Hurricane Wind Scale. Three intensity-based target variables were defined:

- Tropical cyclone (TC): tropical storms, category 1–2 hurricanes, and major hurricanes

- Hurricane (HU): category 1–5 hurricanes

- Major hurricane (MH): category 3–5 hurricanes
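Because the three categories are nested, a single major hurricane contributes to all three counts. The sketch below illustrates this stratification, assuming each storm is summarized by its lifetime maximum sustained wind in knots and using the standard thresholds of 34 kt (tropical storm), 64 kt (Category 1) and 96 kt (Category 3). It also assumes tropical depressions (below 34 kt) are excluded. The helper names are hypothetical and do not reproduce the manual tabulation used in this study.

```python
# Lifetime-maximum wind thresholds in knots (tropical storm, hurricane, major hurricane).
TS_KT, HU_KT, MH_KT = 34, 64, 96


def intensity_flags(max_wind_kt: float) -> dict:
    """Nested TC/HU/MH membership for one storm (hypothetical helper)."""
    return {
        "TC": max_wind_kt >= TS_KT,  # tropical storm strength or stronger
        "HU": max_wind_kt >= HU_KT,  # Category 1-5 hurricane
        "MH": max_wind_kt >= MH_KT,  # Category 3-5 major hurricane
    }


def season_counts(storm_max_winds) -> dict:
    """Count the storms of one season and region by nested intensity category."""
    counts = {"TC": 0, "HU": 0, "MH": 0}
    for wind in storm_max_winds:
        for category, reached in intensity_flags(wind).items():
            counts[category] += int(reached)
    return counts


# A depression (30 kt), a tropical storm (40 kt), a Category 1 (70 kt), a Category 3 (100 kt).
print(season_counts([30, 40, 70, 100]))  # {'TC': 3, 'HU': 2, 'MH': 1}
```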

Counts for each category were assembled for three subregions of the Greater Atlantic Basin—the Gulf of Mexico, the Caribbean Sea, and the full North Atlantic Basin—resulting in nine region–intensity target variables (Figure 1).

To ensure consistency and comparability across all modeling approaches, a unified set of predictor variables was adopted following established practice in seasonal hurricane prediction [30]. The predictors include a suite of large-scale oceanic and atmospheric climate indices archived by the NOAA Physical Sciences Laboratory. These indices encompass sea surface temperature (SST) anomalies in the Atlantic and Pacific, a variety of El Niño–Southern Oscillation (ENSO) indicators, and multiple teleconnection and synoptic-scale circulation indices.

To account for regional climate dynamics most relevant to Atlantic TC genesis, additional predictors were derived for the Main Development Region (MDR; 10°–20° N, 80°–20° W) using NCEP–NOAA Reanalysis data. MDR-based fields included SST, outgoing longwave radiation (OLR), sea-level pressure (SLP), upper- and lower-tropospheric zonal and meridional winds, and vertical wind shear. Surface latent heat flux (LHF) fields were also extracted for boreal winter, and Empirical Orthogonal Function (EOF) analysis was applied to these fields to identify dominant modes of variability linked to TC-favorable conditions.
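The EOF step can be illustrated with a singular value decomposition of the year-by-gridpoint anomaly matrix. This is a sketch rather than the study's processing code. It assumes one winter-mean LHF value per year at each grid point and optionally applies square-root-of-cosine-latitude area weighting, which is an assumption because the text does not specify a weighting. The returned principal-component time series would play the role of the LHF-EOF predictors.

```python
import numpy as np


def eof_analysis(field, lats=None, n_modes=3):
    """EOF decomposition of a (n_years, n_points) field via SVD.

    Returns spatial patterns (n_modes, n_points), principal-component
    time series (n_years, n_modes), and the fraction of variance per mode.
    """
    anom = field - field.mean(axis=0)  # remove the time mean at each grid point
    if lats is not None:
        anom = anom * np.sqrt(np.cos(np.deg2rad(lats)))  # optional area weighting (assumed)
    u, s, vt = np.linalg.svd(anom, full_matrices=False)
    var_frac = s**2 / np.sum(s**2)
    eofs = vt[:n_modes]                 # spatial patterns (unit norm)
    pcs = u[:, :n_modes] * s[:n_modes]  # candidate predictor time series
    return eofs, pcs, var_frac[:n_modes]


# Synthetic check: one spatial pattern with a random yearly amplitude, plus an offset.
rng = np.random.default_rng(0)
pattern = rng.standard_normal(60)
amplitude = rng.standard_normal(40)
field = np.outer(amplitude, pattern) + 5.0
eofs, pcs, var_frac = eof_analysis(field)
print(pcs.shape, round(float(var_frac[0]), 3))  # (40, 3) 1.0
```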

Broader global forcing was represented by mean land–ocean temperature indices for the globe (GGST), Northern Hemisphere (NGST), and Southern Hemisphere (SGST), derived from the GISS Surface Temperature Analysis (Version 4).

In total, 34 monthly indices—capturing SST anomalies, ENSO behavior, teleconnection patterns, and regional flux dynamics—were evaluated as candidate predictors (Table 1).

To evaluate the stability and performance of forecasting models under differing levels of predictor availability, nine predictor-set configurations were constructed. These configurations cross three temporal availability scenarios—(1) January–February, (2) January–April, and (3) January–February combined with a July–September Niño-region SST forecast—with three data-scope scenarios: (a) Core indices only, (b) Core + Niño indices, and (c) Core + Niño + LHF-EOF predictors. When cross-classified with the nine region–intensity target variables, these design choices generated 81 predictor–target combinations for model training and evaluation (Table 2).
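The experimental grid is a full cross product, which the sketch below enumerates. The scenario labels are illustrative, and the target codes are built hypothetically as a region prefix plus an intensity suffix (for example ATTC or GUMH).

```python
from itertools import product

# Illustrative labels mirroring the scenarios described in the text.
TEMPORAL = ["Jan-Feb", "Jan-Apr", "Jan-Feb + Nino JAS forecast"]
SCOPE = ["Core", "Core + Nino", "Core + Nino + LHF-EOF"]
REGIONS = {"AT": "Atlantic Basin", "CA": "Caribbean Sea", "GU": "Gulf of Mexico"}
INTENSITIES = ["TC", "HU", "MH"]

predictor_sets = list(product(TEMPORAL, SCOPE))               # 3 x 3 = 9 configurations
targets = [r + i for r, i in product(REGIONS, INTENSITIES)]  # 3 x 3 = 9 targets
experiments = list(product(predictor_sets, targets))          # 9 x 9 = 81 combinations

print(len(predictor_sets), len(targets), len(experiments))  # 9 9 81
```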

2.2. Methods

This study evaluates four statistical and machine learning approaches for seasonal hurricane prediction: a generalized linear model with Lasso regularization (Lasso), a K-nearest neighbors model (KNN), a three-layer artificial neural network (ANN), and an Extreme Gradient Boosting model (XGBoost). The central hypothesis is that hurricane activity across different Atlantic subregions is systematically related to large-scale climate predictor variables. The models are tested to determine which approach most effectively predicts future-year hurricane activity using a moving 30-year window of historical data.

1) Statistical and Machine Learning Models

a) Lasso Model

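The Lasso model follows the formulation introduced in Sun et al. (2021), assuming that the logarithm of the expected hurricane count in a given region is a linear function of the predictor set. Coefficients are estimated by minimizing a penalized negative log-likelihood with an L₁ penalty, enabling concurrent parameter shrinkage and variable selection. The optimization criterion is:

$$(\hat{\beta}_0, \hat{\boldsymbol{\beta}}) = \arg\min_{\beta_0,\,\boldsymbol{\beta}} \left\{ -\sum_{t=1}^{N} \left[ y_t \left(\beta_0 + \mathbf{x}_t^{\top}\boldsymbol{\beta}\right) - \exp\!\left(\beta_0 + \mathbf{x}_t^{\top}\boldsymbol{\beta}\right) \right] + \lambda \lVert \boldsymbol{\beta} \rVert_1 \right\}$$

where $y_t$ is the observed hurricane count in year $t$, $N$ is the number of training years, $\mathbf{x}_t$ is the predictor vector, $\beta_0$ is the intercept, $\boldsymbol{\beta}$ is the coefficient vector, and $\lambda$ is the regularization parameter.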
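One standard way to minimize an L₁-penalized Poisson criterion is proximal gradient descent (ISTA) with soft-thresholding, sketched below. It is not necessarily the solver used by Sun et al. (2021). The sketch minimizes the Poisson negative log-likelihood of log E[y] = b0 + x·b plus lam·‖b‖₁, drops the constant ln(y!) term, and averages the likelihood over the N years, so `lam` here corresponds to λ/N for a summed criterion. It also assumes standardized predictors and an unpenalized intercept; the step size and iteration count are illustrative.

```python
import numpy as np


def soft_threshold(z, thr):
    """Proximal operator of thr * ||.||_1 (elementwise soft-thresholding)."""
    return np.sign(z) * np.maximum(np.abs(z) - thr, 0.0)


def poisson_lasso(X, y, lam, step=0.01, n_iter=5000):
    """L1-penalized Poisson regression by proximal gradient descent.

    Minimizes (1/N) * sum[exp(eta) - y * eta] + lam * ||b||_1 with eta = b0 + X @ b.
    """
    n, p = X.shape
    b0 = np.log(max(y.mean(), 1e-8))  # start from the climatological log-rate
    b = np.zeros(p)
    for _ in range(n_iter):
        mu = np.exp(b0 + X @ b)  # Poisson mean for each year
        resid = mu - y           # derivative of the per-year NLL with respect to eta
        b0 -= step * resid.mean()                                   # unpenalized intercept
        b = soft_threshold(b - step * (X.T @ resid) / n, step * lam)  # gradient + L1 prox
    return b0, b


# Synthetic example: only predictors 0 and 3 matter.
rng = np.random.default_rng(0)
X = rng.standard_normal((400, 5))
y = rng.poisson(np.exp(np.log(10.0) + X @ np.array([0.4, 0.0, 0.0, -0.3, 0.0])))
b0, b = poisson_lasso(X, y, lam=0.8)
print(np.round(b, 2))
```

Larger `lam` drives more coefficients exactly to zero, which is the variable-selection behavior described above; λ would typically be chosen by cross-validation within each training window.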

Because many climate indices exhibit substantial multicollinearity, predictors are first grouped using hierarchical clustering based on pairwise correlation. Within each cluster, the variable most strongly associated with the target is retained for modeling. This preprocessing step mitigates redundancy and preserves degrees of freedom.
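The screening step can be sketched as average-linkage agglomerative clustering on the correlation distance 1 − |r|, cut at a distance threshold, followed by retaining the member of each cluster with the largest absolute correlation with the target. The linkage rule, distance measure, threshold value, and use of absolute Pearson correlation as the measure of association are assumptions for illustration. In practice a library routine (for example SciPy's hierarchical clustering) would replace the explicit merge loop.

```python
import numpy as np


def cluster_and_select(X, y, names, max_dist=0.3):
    """Group correlated predictors, then keep the best target correlate per group."""
    n_pred = X.shape[1]
    dist = 1.0 - np.abs(np.corrcoef(X, rowvar=False))  # correlation distance
    clusters = [[j] for j in range(n_pred)]
    while len(clusters) > 1:
        best = None
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                link = dist[np.ix_(clusters[a], clusters[b])].mean()  # average linkage
                if best is None or link < best[0]:
                    best = (link, a, b)
        link, a, b = best
        if link > max_dist:  # equivalent to cutting the dendrogram at max_dist
            break
        clusters[a] = clusters[a] + clusters[b]
        del clusters[b]  # b > a, so index a is unaffected
    assoc = np.array([abs(np.corrcoef(X[:, j], y)[0, 1]) for j in range(n_pred)])
    return sorted(names[max(c, key=lambda j: assoc[j])] for c in clusters)


# "A_noisy" is a degraded copy of "A"; only one of them should survive.
rng = np.random.default_rng(42)
base, noise, other = rng.standard_normal((3, 500))
X = np.column_stack([base, base + 0.7 * noise, other])
y = base + other
print(cluster_and_select(X, y, ["A", "A_noisy", "B"]))
```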

Model predictions assume a Poisson distribution for hurricane counts, and predictive skill is evaluated using a log-likelihood skill score relative to climatology. As an interpretable statistical benchmark, the Lasso model highlights dominant linear relationships between climate conditions and hurricane variability.

b) KNN Model

The K-nearest neighbors model provides a non-parametric, instance-based approach that estimates hurricane outcomes from historical seasons with climate conditions similar to those of the target year. All predictors are standardized to zero mean and unit variance, and similarity is measured using Euclidean distance.

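For a target year with predictor vector $\mathbf{x}_0$, the forecast is computed as:

$$\hat{y}(\mathbf{x}_0) = \frac{1}{k} \sum_{i \in \mathcal{N}_k(\mathbf{x}_0)} y_i$$

where $\mathcal{N}_k(\mathbf{x}_0)$ is the set of the $k$ closest analog years.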

The hyperparameter $k$ is selected via cross-validation to minimize mean squared prediction error. Smaller values of $k$ emphasize localized analogs but increase noise sensitivity, while larger values provide smoother predictions. Because the KNN model imposes no parametric form, it naturally captures nonlinear and multimodal relationships among climate predictors—such as interactions between ENSO phases, Atlantic SST anomalies, and wind-shear variations. The analog-based framework also facilitates physically interpretable comparison between more recent and historical seasons.
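A minimal version of this analog forecast is sketched below. It assumes that standardization uses the training-window mean and standard deviation and that k is chosen by leave-one-out cross-validation; the text states only that k is chosen by cross-validation to minimize mean squared error. Function names are hypothetical.

```python
import numpy as np


def knn_forecast(X_train, y_train, x_new, k):
    """Average the counts of the k training years closest to x_new (Euclidean, standardized)."""
    mu, sd = X_train.mean(axis=0), X_train.std(axis=0)
    sd = np.where(sd > 0, sd, 1.0)  # guard against constant predictors
    Z = (X_train - mu) / sd
    z = (x_new - mu) / sd
    dist = np.sqrt(((Z - z) ** 2).sum(axis=1))
    nearest = np.argsort(dist)[:k]  # indices of the k closest analog years
    return y_train[nearest].mean()


def select_k_loo(X, y, k_values):
    """Pick k minimizing leave-one-out mean squared error (assumed CV scheme)."""
    best_k, best_mse = None, np.inf
    for k in k_values:
        errors = []
        for i in range(len(y)):
            keep = np.arange(len(y)) != i
            errors.append((knn_forecast(X[keep], y[keep], X[i], k) - y[i]) ** 2)
        mse = np.mean(errors)
        if mse < best_mse:
            best_k, best_mse = k, mse
    return best_k


X_train = np.array([[0.0], [1.0], [2.0], [10.0]])
y_train = np.array([1.0, 2.0, 3.0, 20.0])
print(knn_forecast(X_train, y_train, np.array([0.9]), k=2))  # 1.5
```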

c) ANN Model

The artificial neural network is designed to capture nonlinear, high-order relationships among climatic predictors that may not be fully represented by linear or distance-based models. A feed-forward multilayer perceptron architecture is implemented with two hidden layers, rectified linear unit (ReLU) activations, and an output layer producing continuous hurricane-count predictions.

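For an input $\mathbf{x}$, the network output is:

$$\hat{y} = \mathbf{W}_3\,\sigma\!\left(\mathbf{W}_2\,\sigma\!\left(\mathbf{W}_1\mathbf{x} + \mathbf{b}_1\right) + \mathbf{b}_2\right) + b_3, \qquad \sigma(z) = \max(0, z),$$

where $\mathbf{W}_i$ and $\mathbf{b}_i$ denote trainable weights and biases.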

The first hidden layer comprises 100 neurons, and the second contains 50 neurons, allowing the model to expand and then compress the predictor space. Training is performed for 50 epochs using the Adam optimizer (learning rate ) and a StepLR scheduler with a decay factor of 0.1 every 10 epochs. Mini-batches of size 8 balance computational efficiency and gradient stability.
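The NumPy sketch below makes the layer sizes and the learning-rate schedule concrete. It covers only the forward pass and the step decay (factor 0.1 every 10 epochs). Training with Adam and mini-batches of 8 would normally be delegated to a deep-learning library such as PyTorch, whose `StepLR` scheduler applies the same decay rule. The initial learning rate `lr0` is a placeholder argument, the He-style weight initialization is an assumption, and 34 inputs are used only as an example. Inputs are stored as rows, so each layer computes `h @ W + b`, the transpose of the column-vector convention W x + b.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


def init_mlp(n_in, hidden=(100, 50), seed=0):
    """Weights and biases for n_in -> 100 -> 50 -> 1 (He-style init is an assumption)."""
    rng = np.random.default_rng(seed)
    dims = [n_in, *hidden, 1]
    return [
        (rng.normal(0.0, np.sqrt(2.0 / d_in), size=(d_in, d_out)), np.zeros(d_out))
        for d_in, d_out in zip(dims[:-1], dims[1:])
    ]


def mlp_forward(params, X):
    """Forward pass for X of shape (n_samples, n_in); returns shape (n_samples,)."""
    h = X
    for W, b in params[:-1]:
        h = relu(h @ W + b)  # hidden layers with ReLU activations
    W_out, b_out = params[-1]
    return (h @ W_out + b_out).ravel()  # linear output layer (continuous counts)


def step_lr(lr0, epoch, step_size=10, gamma=0.1):
    """Learning rate after `epoch` epochs under step decay."""
    return lr0 * gamma ** (epoch // step_size)


params = init_mlp(n_in=34)
print([W.shape for W, _ in params])  # [(34, 100), (100, 50), (50, 1)]
batch = np.random.default_rng(1).standard_normal((8, 34))  # one mini-batch of 8 years
print(mlp_forward(params, batch).shape)  # (8,)
```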

The ANN autonomously learns nonlinear mappings among predictors—such as multi-variable interactions between SST anomalies, vertical wind shear, and ENSO metrics—and provides a representative neural network benchmark for this prediction task.

d) XGBoost Model

The Extreme Gradient Boosting (XGBoost) model captures nonlinear relationships and complex feature interactions through an ensemble of regression trees trained sequentially. Each tree reduces the residuals of the ensemble built thus far, while built-in regularization controls overfitting—an essential feature given the limited number of annual observations.

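The model minimizes the regularized objective:

$$\mathcal{L}^{(t)} = \sum_{i=1}^{n} l\!\left(y_i,\ \hat{y}_i^{(t-1)} + f_t(\mathbf{x}_i)\right) + \Omega(f_t), \qquad \Omega(f) = \gamma T + \frac{1}{2}\lambda \lVert \mathbf{w} \rVert^2,$$

where $l$ is the loss function, $f_t$ is the tree added at iteration $t$, and $\Omega$ penalizes model complexity based on the number of leaves $T$ and leaf weights $\mathbf{w}$.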
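With a complexity penalty of the form Ω(f) = γT + ½λ‖w‖², XGBoost's second-order approximation gives closed-form leaf weights and split gains in terms of per-sample gradients g and Hessians h, and this is where γ and λ act. The sketch below evaluates those formulas for the squared-error loss ½(y − ŷ)², for which g = ŷ − y and h = 1. It illustrates the regularization mechanism only and is not a full tree learner.

```python
import numpy as np


def leaf_weight(g, h, lam):
    """Optimal leaf weight w* = -G / (H + lambda)."""
    return -g.sum() / (h.sum() + lam)


def split_gain(g, h, left, lam, gamma):
    """Objective reduction from splitting a leaf into left/right children, minus gamma."""
    def score(gs, hs):
        return gs.sum() ** 2 / (hs.sum() + lam)
    return 0.5 * (score(g[left], h[left]) + score(g[~left], h[~left]) - score(g, h)) - gamma


y = np.array([1.0, 2.0, 3.0, 4.0])
pred = np.zeros_like(y)              # current ensemble prediction
g, h = pred - y, np.ones_like(y)     # squared-error gradients and Hessians
left = np.array([True, True, False, False])
print(leaf_weight(g, h, lam=0.0))    # 2.5 (the mean residual)
print(leaf_weight(g, h, lam=1.0))    # 2.0 (shrunk toward zero by lambda)
print(split_gain(g, h, left, lam=0.0, gamma=0.0))  # 2.0
```

A split is worthwhile only when its gain stays positive after subtracting γ, so larger γ prunes weak splits and larger λ shrinks leaf weights, which is how the penalty limits overfitting on short annual records.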

A grid search with five-fold cross-validation is used to optimize tree depth, number of boosting rounds, and minimum child weight, with the parameter space restricted to reduce overfitting. XGBoost’s ability to uncover nonlinear interactions among teleconnections, SST anomalies, and dynamical predictors makes it a competitive baseline for this study.

2) Performance Evaluation

All models are evaluated using a Sliding-Window Cross-Validation (SWCV) design that emulates operational preseason forecasting. For each target year $t$, models are trained using the preceding 30 years of data and evaluated on year $t$. The window then advances by one year, yielding a full series of out-of-sample forecasts across 1951–2024.

This approach ensures:

1) Temporal integrity, preventing information leakage from future years into the training data.

2) Robust validation, producing an empirical distribution of model errors across many out-of-sample forecasts.
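The sliding-window design can be sketched as a generator of training/target index pairs. This assumes annual rows ordered by year and a full 30-year training window for every forecast. It also defines climatology as the mean count over the same training window, which is an assumption because the text does not define the climatological forecast explicitly. `fit_predict` is a hypothetical callable that would wrap any of the four models.

```python
import numpy as np


def sliding_windows(n_years, window=30):
    """Yield (training indices, target index); training years always precede the target."""
    for t in range(window, n_years):
        yield np.arange(t - window, t), t


def swcv(X, y, fit_predict, window=30):
    """Collect observations, out-of-sample forecasts, and training-window climatology."""
    obs, pred, clim = [], [], []
    for train, t in sliding_windows(len(y), window):
        pred.append(fit_predict(X[train], y[train], X[t]))
        clim.append(y[train].mean())  # climatological benchmark (assumed definition)
        obs.append(y[t])
    return np.array(obs), np.array(pred), np.array(clim)


# A "model" that always forecasts the training mean reproduces climatology exactly.
y = np.arange(35, dtype=float)
X = np.zeros((35, 2))
obs, pred, clim = swcv(X, y, lambda X_tr, y_tr, x_new: y_tr.mean())
print(len(obs), clim[0])  # 5 14.5
```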

Forecasts for each model are generated for all three intensity categories (TC, HU, MH) and all three subregions (Atlantic Basin, Caribbean Sea, Gulf of Mexico).

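Model performance is assessed using mean squared error (MSE) and a log-likelihood skill score $H$, defined as:

$$H = 1 - \frac{\mathrm{NLL}_{\mathrm{model}}}{\mathrm{NLL}_{\mathrm{clim}}}$$

where the negative log-likelihood for Poisson-distributed counts is:

$$\mathrm{NLL} = -\sum_{t} \ln P\!\left(y_t \mid \hat{\mu}_t\right) = \sum_{t} \left[ \hat{\mu}_t - y_t \ln \hat{\mu}_t + \ln\!\left(y_t!\right) \right]$$

and $\hat{\mu}_t$ is the forecast (model or climatological) expected count for year $t$.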

Positive values indicate improvement over climatology. Averaging across all SWCV windows yields an overall cross-validated skill score for each model, serving as the primary basis for comparing the generalization ability of Lasso, KNN, ANN, and XGBoost across decades of climate variability.
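A minimal sketch of the skill computation follows. It assumes H = 1 − NLL_model / NLL_clim, so that H = 0 for a forecast identical to climatology and H > 0 when the model's Poisson negative log-likelihood is lower. Function names are hypothetical, and forecast means must be strictly positive.

```python
import math

import numpy as np


def poisson_nll(y, mu):
    """Poisson negative log-likelihood, including the ln(y!) term."""
    y = np.asarray(y, dtype=float)
    mu = np.asarray(mu, dtype=float)
    log_fact = np.array([math.lgamma(v + 1.0) for v in y])  # ln(y!)
    return float(np.sum(mu - y * np.log(mu) + log_fact))


def skill_score_h(y, mu_model, mu_clim):
    """Log-likelihood skill relative to climatology (assumed ratio form)."""
    return 1.0 - poisson_nll(y, mu_model) / poisson_nll(y, mu_clim)


y = np.array([3, 5, 8])
clim = np.full(3, y.mean())
print(skill_score_h(y, clim, clim))  # 0.0
```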

3. Results

This section presents the predictive performance of the four models across all forecasting experiments. We first evaluate temporal accuracy and stability for each individual model and then assess how performance varies with different predictor-variable configurations and region–intensity target combinations.

3.1. Individual Model Performance

a) Lasso Model

Figure 1 compares observed hurricane counts with climatological and Lasso forecasts across all nine region–intensity combinations. The Lasso model performs reliably for total tropical cyclone counts (ATTC, CATC, GUTC), reproducing both long-term trends and substantial interannual variability. For hurricanes (HU) and major hurricanes (MH), the model remains responsive to year-to-year fluctuations, outperforming the nearly constant climatological baseline.

Although extreme peaks are somewhat damped—a consequence of regularization—the model retains the correct timing and relative magnitude of active and inactive periods across all basins. Overall, Lasso exhibits strong and stable skill for frequently occurring storm categories and provides meaningful improvements over climatology even for more intense hurricane classes.

b) KNN Model

The KNN framework yields favorable skill across most region–intensity combinations (Figure 2). By identifying past seasons with predictor profiles similar to the target year, the model effectively captures multidecadal variability and broad transitions between active and quiet phases. Forecasts generally smooth extreme peaks but maintain accurate temporal phasing, resulting in consistently better performance than climatology for TC and HU categories.

Skill diminishes for major hurricanes, reflecting the scarcity of suitable analog years and the difficulty of matching rare climatic configurations. Nevertheless, the KNN approach offers a robust non-parametric benchmark that performs competitively for more common storm categories.

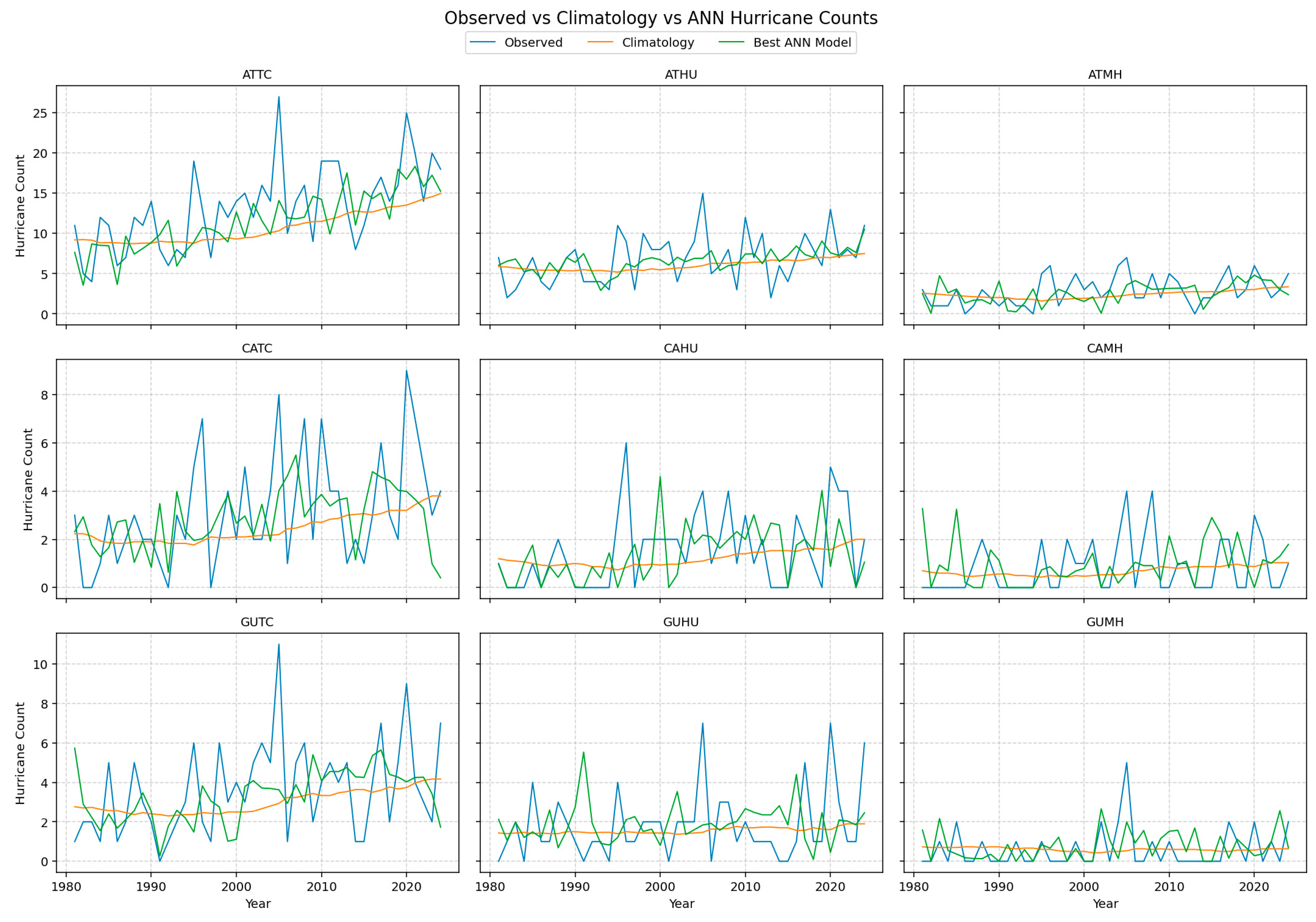

c) ANN Model

The ANN model captures large-scale fluctuations in hurricane activity but struggles to align the timing of peaks and troughs with observations (Figure 3). While the model learns the general amplitude and low-frequency variability of TC counts, its predictions frequently lag or lead observed extreme events, producing substantial phase errors.

Performance is adequate for total TCs but deteriorates for HU and MH categories, where nonlinear interactions and small sample size challenge the network’s ability to generalize. These results suggest that the ANN recognizes broad climatic patterns but cannot reliably translate them into year-specific activity levels given the limited training data.

Figure 3. Temporal comparison of observed hurricane activity, climatology predictions, and ANN model predictions.

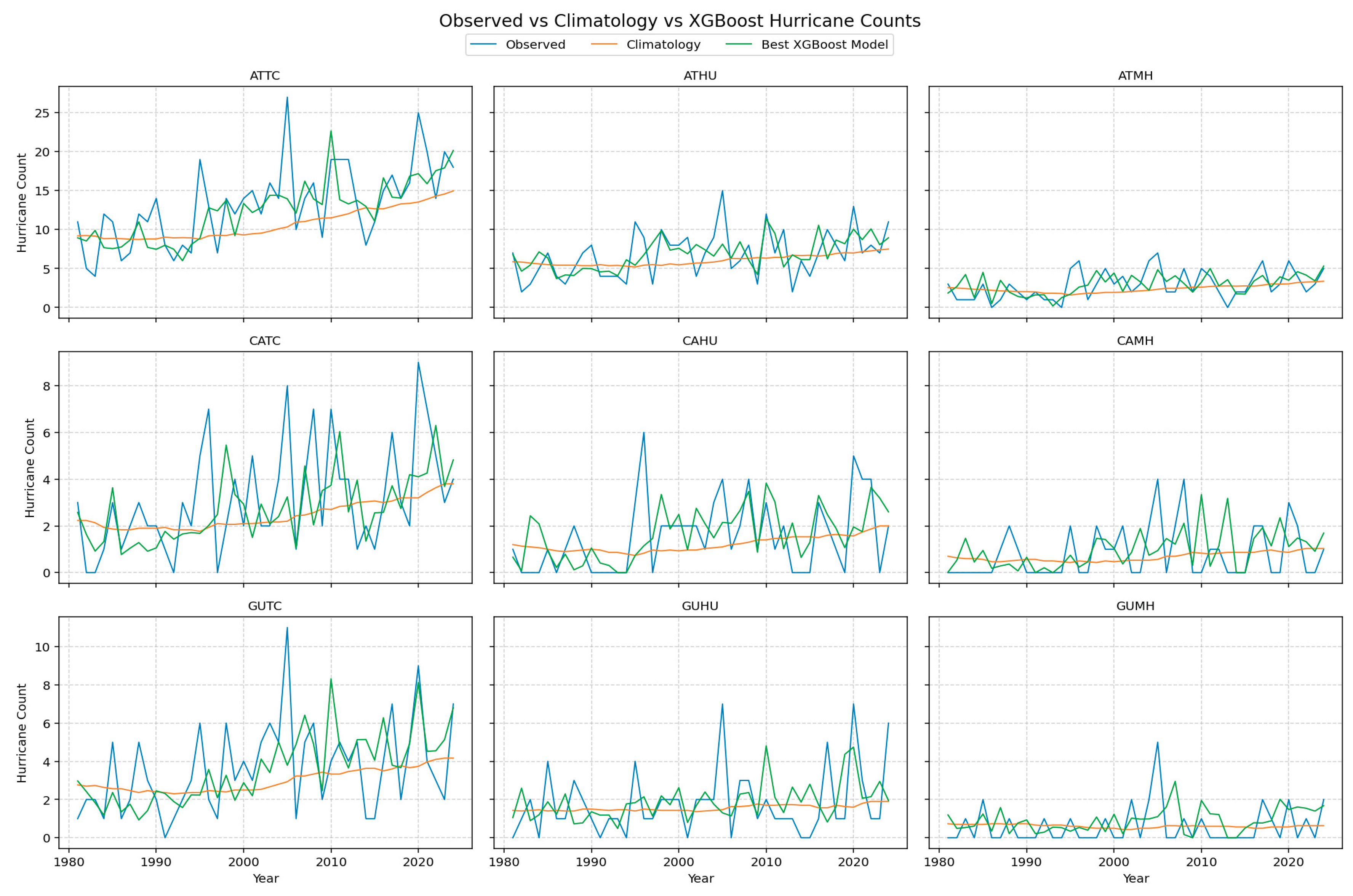

d) XGBoost Model

XGBoost delivers the strongest and most consistent performance among all four approaches (Figure 4). Its forecasts reproduce decadal variability, capture the onset and decline of active phases, and maintain realistic amplitude across all intensity levels. The model is particularly effective for total TCs and hurricanes, where tree-based boosting adapts to nonlinear and hierarchical relationships without overfitting interannual noise.

Even for major hurricanes—where data scarcity challenges all models—XGBoost maintains stable phasing and amplitude. This consistent fidelity underscores the advantages of ensemble boosting and embedded regularization in extracting predictive signals from high-dimensional climate data.

3.2. Cross-Model Comparison

a) Performance Across Predictor-Variable Sets

Table 3 summarizes the best and average log-likelihood skill scores (H) for each model across nine predictor-variable configurations. The results highlight model sensitivity to predictor diversity, seasonal lead time, and inclusion of ENSO and latent heat flux (LHF) information.

XGBoost demonstrates the highest and most stable performance. It achieves top best-case H-values in virtually all predictor sets (up to 0.374) and maintains near-neutral or positive average skill, even when predictor dimensionality increases. The strongest improvement occurs in the Jan–Apr, Core + Niño configuration, indicating that the inclusion of spring ENSO signals enhances model skill. The model remains robust even with LHF predictors, consistent with effective internal regularization.

Lasso performs best when predictor sets are moderate in size. Its strongest results appear in the Jan–Apr, Core + LHF and Jan–Feb + Niño JAS Forecast configurations (best H ≈ 0.25–0.26), where dominant linear relationships drive most of the predictive signal. Beyond these settings, average H-values decline slightly as additional predictors introduce bias from increased regularization.

KNN shows limited sensitivity to predictor set expansion. Best H-values remain between 0.06 and 0.08, while average values fluctuate around zero or slightly negative. This indicates that Euclidean distance in high-dimensional climate space does not leverage additional predictor information effectively.

ANN exhibits substantial performance degradation as input dimensionality increases. Average H-values fall below –3 for almost all expanded predictor sets, especially those including LHF variables. These results reflect severe overfitting given the limited training sample and confirm that the tested ANN architecture is insufficiently constrained for this forecasting task.

In summary, regularized linear (Lasso) and boosted tree (XGBoost) models benefit most from moderate predictor diversity, while data-intensive models (ANN) degrade when dimensionality increases. XGBoost is the only method that maintains stable and positive skill across all predictor configurations.

b) Performance Across Target Variables

Table 4 summarizes best and average H-values for each model across nine region–intensity target variables.

XGBoost is the top-performing approach for seven of the nine targets. It achieves positive mean skill for most TC and HU categories, especially in the Atlantic Basin (mean H = 0.27 for ATTC; 0.01 for ATHU). Even the more difficult MH categories yield near-neutral skill, reflecting the model’s resilience to noise and low sample size.

Lasso performs as a strong linear benchmark, achieving positive average skill for total TC categories (e.g., ATTC avg H = 0.18; GUTC avg H = 0.08). Skill declines for hurricane and major hurricane categories, where linear assumptions limit flexibility, but performance remains stable and interpretable.

KNN produces mixed results. It achieves isolated positive best-case scores (e.g., ATMH best H = 0.06), but average values cluster near zero or slightly negative. Its analog-based inference struggles to consistently represent rare events and high-frequency variability.

ANN consistently produces large negative H-values across nearly all targets, reflecting overfitting and poor temporal generalization. Its predictions frequently misalign major activity shifts, resulting in unstable and unreliable forecasts.

In summary, XGBoost provides the strongest overall performance—highest best-case and average skill—followed by Lasso as a reliable linear alternative. KNN adds marginal skill over climatology, and ANN underperforms across all targets given the small-sample constraints.

4. Discussion

This study evaluated four statistical and machine-learning approaches—Lasso, KNN, ANN, and XGBoost—for seasonal prediction of Atlantic tropical cyclone activity under multiple predictor configurations and across several region–intensity targets. The results show that while several methods modestly exceed climatology, only a subset delivers stable, generalizable performance suitable for operational use.

The most consistent outcome is the superiority of XGBoost. Across nearly all predictor sets and targets—especially basin-wide total tropical cyclones and hurricanes—XGBoost achieves the highest best-case scores and maintains near-neutral or positive mean skill. Its ability to regularize model complexity through boosted decision trees, prune weak interactions, and accommodate nonlinear dependencies among predictors appears well matched to the structure of the seasonal hurricane prediction problem. With only a few decades of training data, a model must capture large-scale climate signals without tracking year-specific noise; XGBoost is the only method that consistently strikes that balance.

Lasso performs well when predictor sets remain moderate in size and dominated by physically interpretable indices. It yields positive mean skill for several total TC targets and, in specific configurations, matches or occasionally exceeds XGBoost. This behavior aligns with earlier findings that regularized linear models can isolate compact, physically meaningful predictors. In this study, Lasso performs best for high-sample targets where linear relationships between climate drivers and storm counts are strongest. Its performance weakens for rare outcomes—such as regional major hurricanes—where strong regularization and linear constraints limit sensitivity. Even so, Lasso remains a stable, interpretable baseline for identifying core climate–TC linkages.

KNN and ANN highlight the difficulties inherent in applying data-intensive or highly flexible models to small training samples. KNN exhibits minimal responsiveness to increased predictor dimensionality, with modest best-case skill and near-zero or negative average performance. Euclidean analogs in high-dimensional, correlated predictor spaces fail to reliably distinguish active from inactive seasons. ANN performs substantially worse: mean skill scores are strongly negative across most targets. The network overfits the limited data and does not reproduce the temporal alignment of observed variability. Without substantial redesign—such as stronger regularization, reduced complexity, or larger training sets—ANN architectures of this type are not suitable for seasonal TC prediction.

Predictor availability and lead time influence model performance but not uniformly. Both XGBoost and Lasso usually benefit from additional early-season information, such as predictors extended through April or the inclusion of Niño indices. XGBoost responds most favorably to “Jan–Apr, Core + Niño” configurations. Lasso achieves its best scores in a subset of balanced predictor sets where the number and strength of predictors align with its regularization. By contrast, inclusion of LHF-derived EOFs leads to mixed outcomes, indicating that increased physical detail does not automatically improve predictive skill. Instead, the interaction among model structure, predictor noise, and limited sample size determines whether new information provides a usable signal.

Differences across region–intensity targets further demonstrate the limits of predictability. Both XGBoost and Lasso perform best for basin-wide total TCs, with skill decreasing for hurricanes, major hurricanes, and geographically smaller domains. This pattern reflects both physical and statistical constraints. Basin-wide TC activity is strongly tied to large-scale climate conditions, whereas regional hurricane and major-hurricane counts depend more heavily on mesoscale processes, track variability, and internal dynamics not captured by seasonal predictors. Sparse samples for regional MH targets reduce effective signal strength, pushing several models toward neutral or negative skill. These outcomes indicate that fine-scale seasonal guidance is inherently more uncertain, rather than showing that models are fundamentally inadequate.

Several implications emerge. First, regularized ensemble methods—especially XGBoost—provide a strong foundation for seasonal TC forecasting and represent a useful complement or alternative to traditional linear approaches. Second, increased model complexity or “black-box” architectures do not guarantee better performance; models must be matched to data volume and the underlying physical structure of the problem. Third, the pronounced contrast between basin-scale and region-specific skill underscores the need for caution when issuing granular seasonal guidance. Regional outlooks remain possible but should be accompanied by clear communication of uncertainty.

This study has several limitations that suggest directions for future work. Results are based on a fixed 30-year sliding-window validation framework, a single set of climate predictors, and targets defined by storm counts; alternative target variables (such as accumulated cyclone energy), validation window lengths, additional dynamical model inputs, or other reanalysis products may alter relative model performance. The analysis treats each regional target variable independently, forgoing potential benefits of multitask or hierarchical models that exploit shared climate drivers. Furthermore, verification relies primarily on Poisson-likelihood skill scores; complementary probabilistic or decision-relevant metrics could provide a broader assessment of forecast value.

Despite these constraints, the findings show that physically informed, well-regularized machine-learning systems can meaningfully improve seasonal prediction of Atlantic tropical cyclone activity—particularly for basin-scale total storm counts. As climate change continues to reshape the large-scale environment influencing tropical cyclones, comparative evaluations such as this will be critical for guiding the development of next-generation seasonal and regional hurricane prediction systems.

5. Conclusions

This study compared four modeling approaches—Lasso, KNN, ANN, and XGBoost—for seasonal prediction of Atlantic tropical cyclone activity across multiple regions, intensity thresholds, and predictor configurations. Using a consistent suite of climate predictors and a 30-year sliding window cross-validation framework, the analysis provides an operationally realistic assessment of how linear, analog-based, neural-network, and tree-ensemble methods perform in a small-sample seasonal forecasting environment.

Across the full set of experiments, XGBoost emerges as the most reliable and skillful model, consistently achieving the highest best-case and positive or near-neutral mean H-scores for many basin-wide and regional targets. Its ability to accommodate nonlinear interactions among predictors—while controlling overfitting through boosting and regularization—makes it particularly well suited to the limited sample sizes that characterize seasonal hurricane prediction. Lasso also performs strongly, especially for total tropical cyclone counts and for predictor sets of moderate size that emphasize physically interpretable climate indices. These results reaffirm the value of regularized linear methods as stable, interpretable baselines. In contrast, KNN and ANN models exhibit marginal or even negative skill, highlighting the difficulty of applying high-flexibility or data-intensive approaches to small-sample climatological problems.

The analysis also demonstrates that forecast skill is sensitive to predictor availability and lead time. Certain configurations—particularly those incorporating early-season SST anomalies and Niño indices—enhance performance for both XGBoost and Lasso. However, the benefits of expanding predictor sets are not uniform; additional variables sometimes degrade skill, reflecting an inherent trade-off between predictor diversity, noise characteristics, and optimized predictor size. Moreover, regional and major-hurricane targets remain the most challenging to predict, owing to both statistical limitations (sparse event counts) and physical constraints on the predictability of storm counts for relatively small regions.

Collectively, these findings have several implications. First, well-designed machine-learning models—especially tree-based ensembles—can provide meaningful improvements over climatology and simple regression when rigorously evaluated. Second, greater algorithmic complexity does not guarantee greater forecast skill; model choice must remain closely aligned with data volume, physical interpretability, and the nature of the prediction problem. Third, seasonal outlooks for highly specific regional or intensity categories should be communicated with appropriate caution, given the limited predictability at these scales.

Several opportunities for future research remain. Promising directions include alternative target variables, different training- and validation-window lengths, and expanded predictor sources (including dynamical model output). Multitask or hierarchical learning frameworks may better leverage information shared across regions and intensity levels. Additional verification metrics, such as probabilistic scores or decision-oriented measures, could also provide a more comprehensive view of model utility.
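Testing different training- and validation-window lengths requires a hindcast loop that never lets a model see the season it predicts. A minimal sketch follows. It assumes a generic `fit` callable that returns a predictor function. `rolling_origin_hindcast` and the one-predictor least-squares `fit_ols_1d` are hypothetical helpers for illustration, not the study's code.

```python
from typing import Callable, Dict, List, Optional, Sequence

# A fit function takes training rows and targets and returns a predictor.
Fit = Callable[[List[List[float]], List[float]], Callable[[List[float]], float]]


def rolling_origin_hindcast(X: Sequence[List[float]], y: Sequence[float], fit: Fit,
                            first_test: int,
                            train_len: Optional[int] = None) -> Dict[int, float]:
    """Predict y[t] for each t >= first_test using only samples before t.

    train_len=None trains on all earlier samples (expanding window).
    An integer trains on at most that many of the most recent samples.
    """
    preds: Dict[int, float] = {}
    for t in range(first_test, len(y)):
        start = 0 if train_len is None else max(0, t - train_len)
        model = fit(list(X[start:t]), list(y[start:t]))
        preds[t] = model(X[t])
    return preds


def fit_ols_1d(X: List[List[float]], y: List[float]) -> Callable[[List[float]], float]:
    """Toy one-predictor least-squares fit on the first column of X."""
    xs = [row[0] for row in X]
    mx, my = sum(xs) / len(xs), sum(y) / len(y)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (v - my) for x, v in zip(xs, y))
    slope = sxy / sxx if sxx > 0 else 0.0
    return lambda row: my + slope * (row[0] - mx)


X = [[float(i)] for i in range(6)]
y = [2.0 * i + 1.0 for i in range(6)]  # toy series, y = 2x + 1
print(rolling_origin_hindcast(X, y, fit_ols_1d, first_test=3))               # {3: 7.0, 4: 9.0, 5: 11.0}
print(rolling_origin_hindcast(X, y, fit_ols_1d, first_test=3, train_len=2))  # {3: 7.0, 4: 9.0, 5: 11.0}
```

On noise-free data both window choices agree. With real seasonal counts, the expanding window trades adaptivity for sample size, while a short sliding window does the opposite. That is the trade-off a study of window lengths would quantify.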

Despite these limitations, the study demonstrates that physically informed machine-learning frameworks offer a viable pathway for improving the accuracy, robustness, and interpretability of seasonal Atlantic tropical cyclone forecasts, particularly at the basin scale and for total storm counts under operationally realistic constraints.

Acknowledgments

The bulk of this study was carried out in the Coastal Fluid Dynamics Laboratory (CFDL), Department of Marine, Earth and Atmospheric Sciences, North Carolina State University, as part of the ongoing Research Experience Enrichment Program for high school students. We thank current and former CFDL graduate students and postdocs for providing some of the model source code and research training to the program participants. Some of the model experiments were conducted on a ThinkStation P7 donated to North Carolina State University by Lenovo.

Abbreviations

| Abbreviation | Definition |
|---|---|
| AMM | Atlantic Meridional Mode |
| AMO | Atlantic Multidecadal Oscillation |
| ANN | Artificial Neural Network |
| ATTC | Atlantic Total Tropical Cyclones |
| ATHU | Atlantic Hurricanes |
| ATMH | Atlantic Major Hurricanes |
| CATC | Caribbean Total Tropical Cyclones |
| CAHU | Caribbean Hurricanes |
| CAMH | Caribbean Major Hurricanes |
| EOF | Empirical Orthogonal Function |
| ENSO | El Niño–Southern Oscillation |
| GISS | Goddard Institute for Space Studies |
| GUMH | Gulf Major Hurricanes |
| GUTC | Gulf Total Tropical Cyclones |
| GUHU | Gulf Hurricanes |
| HURDAT | North Atlantic Hurricane Database |
| HURDAT2 | Modernized North Atlantic Hurricane Database |
| KNN | K-Nearest Neighbors |
| LHF | Latent Heat Flux |
| MDR | Main Development Region |
| ML | Machine Learning |
| NHC | National Hurricane Center |
| NOAA | National Oceanic and Atmospheric Administration |
| SST | Sea-Surface Temperature |
| TC | Tropical Cyclone |
| USA | United States of America |
| XGBoost | eXtreme Gradient Boosting |

References

- Bhatia, K. T.; Vecchi, G. A.; Murakami, H.; Underwood, S.; Kossin, J. P. Projected response of tropical cyclone intensity and intensification in a global climate model. Journal of Climate 2019, 32(20), 6471–6489.

- Camargo, S. J.; Sobel, A. H.; Barnston, A. G.; Klotzbach, P. J. The influence of natural climate variability on tropical cyclones and seasonal forecasts. In Climate Extremes and Society; Landsea, C. W., Lucus, J. C., Eds.; American Meteorological Society, 2007; pp. 325–360.

- Caron, L.-P.; Jones, C. G.; Winger, K. Impact of machine learning on seasonal tropical cyclone prediction. Climate Dynamics 2019, 53, 7035–7053.

- Chu, P.-S.; Zhao, X. A Bayesian regression approach for predicting seasonal tropical cyclone activity over the central North Pacific. Journal of Climate 2004, 17(24), 4893–4908.

- Elsner, J. B.; Jagger, T. H. Prediction models for annual US hurricane counts. Journal of Climate 2006, 19(12), 2935–2952.

- Emanuel, K. A. Sensitivity of tropical cyclones to surface exchange coefficients and a revised steady-state model incorporating eye dynamics. Journal of the Atmospheric Sciences 1995, 52(22), 3969–3976.

- Goldenberg, S. B.; Shapiro, L. J. Physical mechanisms for the association of El Niño and West African rainfall with Atlantic major hurricane activity. Journal of Climate 1996, 9(6), 1169–1187.

- Goldenberg, S. B.; Landsea, C. W.; Mestas-Nuñez, A. M.; Gray, W. M. The recent increase in Atlantic hurricane activity: Causes and implications. Science 2001, 293, 474–479.

- Grinsted, A.; Moore, J. C.; Jevrejeva, S. Global odds of record-breaking climate extremes. Proceedings of the National Academy of Sciences 2019, 116(19), 8499–8504.

- Grinsted, A.; Moore, J. C.; Jevrejeva, S. Global projections of storm surges and extreme sea levels. Scientific Reports 2019, 9, 2352.

- Gray, W. M. Atlantic seasonal hurricane frequency. Part I: El Niño and 30 mb quasi-biennial oscillation influences. Monthly Weather Review 1984, 112(9), 1649–1668.

- Jarvinen, B. R.; Neumann, C. J.; Davis, M. A. S. A tropical cyclone data tape for the North Atlantic basin, 1886–1983: Contents, limitations, and uses. 1984.

- Johnson, N. C.; Collins, D.; Schreck, C. Machine learning approaches for improved seasonal tropical cyclone prediction. Geophysical Research Letters 2022, 49(4), e2021GL095726.

- Klotzbach, P. J. Recent developments in statistical prediction of seasonal Atlantic hurricane activity. Tellus A 2007, 59(4), 511–518.

- Klotzbach, P. J.; Gray, W. M. Updated 6- to 11-month prediction of Atlantic basin seasonal hurricane activity. Weather and Forecasting 2004, 19(5), 917–934.

- Klotzbach, P. J.; Bell, G. D.; Blake, E.; Landsea, C. W. Seasonal tropical cyclone forecasting in the North Atlantic basin: A review. Atmosphere 2019, 10(2), 112.

- Knutson, T. R.; et al. Tropical cyclones and climate change. Nature Geoscience 2010, 3(3), 157–163.

- Knutson, T. R.; et al. Tropical cyclones and climate change assessment: Part II. Projected response to anthropogenic warming. Bulletin of the American Meteorological Society 2020, 101(3), E303–E322.

- Kossin, J. P.; Camargo, S. J.; Sitkowski, M. Climate modulation of North Atlantic hurricane tracks. Journal of Climate 2010, 23(11), 3057–3076.

- Landsea, C. W.; Franklin, J. L. Atlantic hurricane database uncertainty and presentation of a new database format. Monthly Weather Review 2013, 141(10), 3576–3592.

- Landsea, C. W.; Franklin, J. L. Atlantic hurricane database uncertainty and presentation of a new database format. Monthly Weather Review 2013, 141(10), 3576–3592.

- Mainelli, M.; DeMaria, M.; Shay, L. K.; Goni, G. Application of oceanic heat content estimation to operational forecasting of recent Atlantic category 5 hurricanes. Weather and Forecasting 2008, 23(1), 3–16.

- Napoles, O. M.; Vigh, J. L.; Ritchie, E. A. Tropical cyclone formation prediction using machine learning. Journal of Applied Meteorology and Climatology 2020, 59(7), 1177–1194.

- NOAA National Hurricane Center. HURDAT2: North Atlantic Hurricane Database. 2025. Available online: https://www.nhc.noaa.gov/data/.

- NOAA Climate Prediction Center. 2025 North Atlantic Hurricane Season Outlook. 2025. Available online: https://www.cpc.ncep.noaa.gov/products/outlooks/hurricane.shtml.

- Pielke, R. A.; et al. Normalized hurricane damage in the United States: 1900–2005. Natural Hazards Review 2008, 9(1), 29–42.

- Saunders, M. A.; Lea, A. S. Large contribution of sea-surface warming to recent increase in Atlantic hurricane activity. Nature 2008, 451, 557–560.

- Scharroo, R.; Smith, W. H. F.; Lillibridge, J. L. Satellite altimetry and the Loop Current. Geophysical Research Letters 2005, 32(1), L05605.

- Sun, L.; Patricola, C. M.; Hsiang, S. Pre-season statistical prediction of Atlantic hurricane activity using clustered climate indices. Geophysical Research Letters 2021, 48(8), e2020GL091583.

- Sun, X.; Xie, L.; Shah, S. U.; Shen, X. A machine learning based ensemble forecasting optimization algorithm for preseason prediction of Atlantic hurricane activity. Atmosphere 2021, 12(4), 522.

- Ting, M.; Kushnir, Y.; Seager, R.; Li, C. Forced and internal twentieth-century SST trends in the North Atlantic. Journal of Climate 2015, 28(4), 1087–1102.

- Vecchi, G. A.; et al. Weakening of tropical Pacific atmospheric circulation due to anthropogenic forcing. Nature 2006, 441, 73–76.

- Villarini, G.; Vecchi, G. A.; Smith, J. A. Modeling the dependence of tropical storm counts on climate indices. Statistical Analysis and Data Mining 2010, 3(4), 135–142.

- Wang, C.; Lee, S.-K. Co-variability of tropical cyclones in the North Atlantic and the eastern North Pacific. Geophysical Research Letters 2009, 36, L24702.

- Wing, A. A.; Emanuel, K.; Holloway, C. E.; Muller, C. Convective self-aggregation in numerical simulations: A review. Surveys in Geophysics 2019, 39, 421–465.

- Chen, X.; Xie, L. AI-ML-Statistical-Hurricane-Prediction-Models [Software]. 2025. Available online: https://github.com/xzchen16/AI-ML-Statistical-Hurricane-Prediction-Models (accessed on 14 December 2025).

Figure 1.

Comparison of seasonal counts of TCs (left column), hurricanes (center column), and major hurricanes (right column) among observed values (blue), 30-year sliding-window climatology predictions (climatology), and Lasso model predictions (green) in the Atlantic basin (top panel), the Caribbean Sea (middle panel), and the Gulf of Mexico (bottom panel).

Figure 2.

Comparison of seasonal counts of TCs (left column), hurricanes (center column), and major hurricanes (right column) among observed values (blue), 30-year sliding-window climatology predictions (climatology), and KNN model predictions (green) in the Atlantic basin (top panel), the Caribbean Sea (middle panel), and the Gulf of Mexico (bottom panel).

Figure 3.

Comparison of seasonal counts of TCs (left column), hurricanes (center column), and major hurricanes (right column) among observed values (blue), 30-year sliding-window climatology predictions (climatology), and ANN model predictions (green) in the Atlantic basin (top panel), the Caribbean Sea (middle panel), and the Gulf of Mexico (bottom panel).

Figure 4.

Comparison of seasonal counts of TCs (left column), hurricanes (center column), and major hurricanes (right column) among observed values (blue), 30-year sliding-window climatology predictions (climatology), and XGBoost model predictions (green) in the Atlantic basin (top panel), the Caribbean Sea (middle panel), and the Gulf of Mexico (bottom panel).

Table 1.

Predictor variables and category assignments.

| Category | Climate Index | Climate Index Name |
|---|---|---|
| Core | AMM | Atlantic Meridional Mode |
| | AMO | Atlantic Multidecadal Oscillation |
| | AO | Arctic Oscillation |
| | CENSO | Bivariate ENSO (El Niño–Southern Oscillation) time series |
| | DM | Atlantic Dipole Mode (DM = TNA – …) |
| | EPO | East Pacific/North Pacific Oscillation index |
| | GGST | Global Mean Land/Ocean Temperature index |
| | NGST | Northern Hemisphere Mean Land/Ocean Temperature index |
| | SGST | Southern Hemisphere Mean Land/Ocean Temperature index |
| | MDRSST | Sea-Surface Temperature averaged over the Main Development Region (MDR) |
| | MDROLR | Top-of-Atmosphere Outgoing Longwave Radiation averaged over the MDR |
| | MDRSLP | Sea-Level Pressure averaged over the MDR |
| | MDRU200 | Zonal Wind at 200 hPa averaged over the MDR |
| | MDRV200 | Meridional Wind at 200 hPa averaged over the MDR |
| | MDRU850 | Zonal Wind at 850 hPa averaged over the MDR |
| | MDRV850 | Meridional Wind at 850 hPa averaged over the MDR |
| | MDRVWS | Vertical Wind Shear averaged over the MDR |
| | NAO | North Atlantic Oscillation |
| | PDO | Pacific Decadal Oscillation |
| | PNA | Pacific/North American index |
| | QBO | Quasi-Biennial Oscillation |
| | SFI | Solar Flux (10.7 cm) |
| | SOI | Southern Oscillation Index |
| | TNI | Trans-Niño Index |
| | TNA | Tropical Northern Atlantic index |
| | TSA | Tropical Southern Atlantic index |
| | WHWP | Western Hemisphere Warm Pool |
| | WP | Western Pacific index |
| NIÑO | MEI | Multivariate ENSO Index |
| | NINO12 | Extreme Eastern Tropical Pacific SST (0–10° S, 90° W–80° W) |
| | NINO3 | Eastern Tropical Pacific SST (5° N–5° S, 150–90° W) |
| | NINO34 | East Central Tropical Pacific SST (5° N–5° S, 170–120° W) |
| | NINO4 | Central Tropical Pacific SST (5° N–5° S, 160° E–150° W) |
| LHF | LHF.WIN | LHF EOF Scores for Winter |

Table 2.

Predictor-variable configurations and target variables.

| Number | Predictor Variable Set | Number | Target Variable |
|---|---|---|---|
| 1 | Jan–Feb, Core only | 1 | Atlantic Basin Tropical Cyclone (ATTC) |
| 2 | Jan–Feb, Core + Niño | 2 | Atlantic Basin Hurricane (ATHU) |
| 3 | Jan–Feb, Core + Niño + LHF | 3 | Atlantic Basin Major Hurricane (ATMH) |
| 4 | Jan–Apr, Core only | 4 | Caribbean Sea Tropical Cyclone (CATC) |
| 5 | Jan–Apr, Core + Niño | 5 | Caribbean Sea Hurricane (CAHU) |
| 6 | Jan–Apr, Core + Niño + LHF | 6 | Caribbean Sea Major Hurricane (CAMH) |
| 7 | Jan–Feb + Niño JAS forecast, Core only | 7 | Gulf of Mexico Tropical Cyclone (GUTC) |
| 8 | Jan–Feb + Niño JAS forecast, Core + Niño | 8 | Gulf of Mexico Hurricane (GUHU) |
| 9 | Jan–Feb + Niño JAS forecast, Core + Niño + LHF | 9 | Gulf of Mexico Major Hurricane (GUMH) |
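The nested predictor configurations in Table 2 can be written down compactly. The sketch below builds the three cumulative predictor sets from the index groups of Table 1 and numbers the nine configurations in Table 2 order. It assumes that each configuration is paired with every target variable. The sketch is illustrative rather than the study's code, and the names `CORE`, `NINO`, `LHF`, `SETS`, and `experiment_grid` are hypothetical.

```python
from typing import Dict, List, Tuple

# Index groups transcribed from Table 1.
CORE: List[str] = [
    "AMM", "AMO", "AO", "CENSO", "DM", "EPO", "GGST", "NGST", "SGST",
    "MDRSST", "MDROLR", "MDRSLP", "MDRU200", "MDRV200", "MDRU850", "MDRV850",
    "MDRVWS", "NAO", "PDO", "PNA", "QBO", "SFI", "SOI", "TNI", "TNA", "TSA",
    "WHWP", "WP",
]
NINO: List[str] = ["MEI", "NINO12", "NINO3", "NINO34", "NINO4"]
LHF: List[str] = ["LHF.WIN"]

# Predictor windows and cumulative predictor sets from Table 2.
WINDOWS: List[str] = ["Jan–Feb", "Jan–Apr", "Jan–Feb + Niño JAS forecast"]
SETS: Dict[str, List[str]] = {
    "Core only": CORE,
    "Core + Niño": CORE + NINO,
    "Core + Niño + LHF": CORE + NINO + LHF,
}
TARGETS: List[str] = ["ATTC", "ATHU", "ATMH", "CATC", "CAHU", "CAMH",
                      "GUTC", "GUHU", "GUMH"]


def experiment_grid() -> List[Tuple[int, str, str, List[str], str]]:
    """Enumerate (config number, window, set name, predictors, target).

    Config numbers follow Table 2: windows vary slowest, sets fastest.
    Pairing every configuration with every target is an assumption.
    """
    grid = []
    number = 0
    for window in WINDOWS:
        for set_name, predictors in SETS.items():
            number += 1
            for target in TARGETS:
                grid.append((number, window, set_name, predictors, target))
    return grid


grid = experiment_grid()
print(len(grid))                                   # 81 (9 configurations x 9 targets)
print({name: len(p) for name, p in SETS.items()})  # 28, 33, and 34 predictors
```

Writing the sets as concatenations makes the nesting explicit. Each larger set strictly contains the smaller one, which is what allows the effect of adding Niño or LHF predictors to be isolated in Table 3.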

Table 3.

Summary of Best and Average H Scores by Model and Predictor Variable Sets.

| Predictor Set | Lasso (Best / Avg) | KNN (Best / Avg) | ANN (Best / Avg) | XGBoost (Best / Avg) | Top Performer |
|---|---|---|---|---|---|
| Jan–Feb, Core only | 0.089 / –0.056 | 0.061 / –0.018 | 0.090 / –1.697 | 0.221 / –0.321 | XGBoost |
| Jan–Feb, Core + Niño | 0.201 / –0.013 | 0.061 / –0.018 | 0.111 / –2.012 | 0.360 / –0.134 | XGBoost |
| Jan–Feb, Core + Niño + LHF | 0.123 / –0.059 | 0.061 / –0.373 | 0.090 / –5.270 | 0.144 / –0.247 | XGBoost |
| Jan–Apr, Core only | 0.239 / –0.091 | 0.075 / –0.452 | 0.098 / –3.278 | 0.297 / –0.145 | XGBoost |
| Jan–Apr, Core + Niño | 0.103 / –0.069 | 0.075 / –0.452 | 0.135 / –1.114 | 0.374 / –0.036 | XGBoost |
| Jan–Apr, Core + Niño + LHF | 0.254 / –0.016 | 0.013 / –0.690 | 0.207 / –3.722 | 0.327 / –0.003 | Lasso |
| Jan–Feb + Niño JAS, Core only | 0.205 / –0.030 | 0.061 / –0.018 | 0.165 / –3.568 | 0.212 / –0.341 | XGBoost |
| Jan–Feb + Niño JAS, Core + Niño | 0.259 / 0.037 | 0.061 / –0.018 | 0.075 / –1.579 | 0.208 / –0.376 | Lasso |
| Jan–Feb + Niño JAS, Core + Niño + LHF | 0.219 / 0.023 | 0.061 / –0.372 | 0.144 / –5.360 | 0.286 / 0.013 | XGBoost |
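The Best / Avg entries in Tables 3 and 4 can be read as the maximum and the mean H-score over all experiments that share a predictor set (Table 3) or a target variable (Table 4). The sketch below assumes that reading. It ranks models by best-case score and breaks ties using the mean. That ranking rule is an assumption and may differ from the criterion behind the Top Performer column. The scores in the usage example are toy values, not results from this study.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple

Summary = Dict[str, Dict[str, Tuple[float, float]]]


def summarize(records: Iterable[Tuple[str, str, float]]) -> Summary:
    """Collapse (row_key, model, h_score) records into {row_key: {model: (best, avg)}}."""
    scores: Dict[str, Dict[str, List[float]]] = defaultdict(lambda: defaultdict(list))
    for row_key, model, h in records:
        scores[row_key][model].append(h)
    return {row: {m: (max(v), mean(v)) for m, v in models.items()}
            for row, models in scores.items()}


def top_performer(row: Dict[str, Tuple[float, float]]) -> str:
    """Pick the model with the largest (best, avg) tuple: best first, avg breaks ties."""
    return max(row, key=lambda m: row[m])


records = [
    ("ATTC", "Lasso", 0.25), ("ATTC", "Lasso", 0.0),
    ("ATTC", "XGBoost", 0.5), ("ATTC", "XGBoost", -0.25),
]
summary = summarize(records)
print(summary["ATTC"])                 # {'Lasso': (0.25, 0.125), 'XGBoost': (0.5, 0.125)}
print(top_performer(summary["ATTC"]))  # XGBoost
```

The toy case mirrors a pattern visible in the tables: two models can share the same average while differing sharply in their best case. A summary that reports both numbers therefore conveys more than either one alone.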

Table 4.

Summary of Best and Average H Scores by Model and Target Variables.

| Target Variable | Lasso (Best / Avg) | KNN (Best / Avg) | ANN (Best / Avg) | XGBoost (Best / Avg) | Top Performer |
|---|---|---|---|---|---|
| ATTC | 0.259 / 0.181 | 0.004 / –0.017 | 0.207 / 0.124 | 0.374 / 0.270 | XGBoost |
| ATHU | 0.003 / –0.141 | 0.032 / –0.008 | 0.041 / –0.719 | 0.170 / 0.007 | XGBoost |
| ATMH | –0.041 / –0.193 | 0.061 / –0.090 | –0.421 / –3.249 | 0.122 / 0.008 | XGBoost |
| CATC | 0.112 / 0.022 | 0.075 / 0.027 | –0.084 / –3.664 | 0.072 / –0.293 | Lasso |
| CAHU | 0.125 / –0.035 | 0.039 / –1.022 | –3.076 / –6.432 | 0.124 / –0.117 | Lasso |
| CAMH | –0.030 / –0.216 | 0.030 / –0.167 | –3.815 / –5.716 | 0.025 / –0.176 | KNN |
| GUTC | 0.197 / 0.084 | –0.030 / –0.079 | –0.086 / –2.515 | 0.063 / –0.022 | Lasso |
| GUHU | –0.015 / –0.100 | –0.074 / –0.093 | –0.486 / –4.974 | –0.063 / –0.672 | XGBoost |
| GUMH | 0.089 / –0.095 | –0.081 / –1.286 | –0.677 / –3.648 | –0.051 / –1.041 | Lasso |


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.