1. Introduction

In the evolving landscape of cybersecurity, enterprise application security frameworks must continuously adapt to identify and mitigate increasingly complex cyber threats. The adoption of artificial intelligence (AI) has significantly augmented traditional security strategies by automating threat detection and response. However, the opaque nature of many AI systems, often described as “black boxes,” raises challenges concerning trust, explainability, and accountability. This paper addresses the deployment of Explainable AI (XAI) to improve transparency in threat analysis and decision support within enterprise security environments, aiming to bridge the gap between sophisticated automated security mechanisms and human oversight.

1.1. Background of Enterprise Application Security

Enterprise application security revolves around safeguarding critical business applications from unauthorized access, data breaches, and various forms of cyberattacks. These applications often handle sensitive data and run complex business operations, making them prime targets for adversaries. Traditionally, security frameworks have relied on rule-based systems, firewalls, and signature detection to prevent attacks. As threats evolve in scale and sophistication, enterprises face the challenge of addressing zero-day vulnerabilities, insider threats, and advanced persistent attacks that conventional methods struggle to detect effectively. Hence, enterprise security must incorporate adaptive, intelligent mechanisms that provide comprehensive protection while aligning with regulatory and compliance requirements specific to enterprise environments.

1.2. Role of AI in Modern Threat Detection

Artificial Intelligence has emerged as a pivotal tool in modern cybersecurity due to its ability to analyse vast amounts of security data, identify patterns, and detect anomalies that signify potential threats. Machine learning models enhance threat intelligence by continuously learning from network traffic, logs, and user behaviours to predict cyberattacks proactively. AI-driven systems enable faster incident response by automating detection workflows and reducing false positives. However, despite these advantages, the reliance on black-box AI models often limits the security teams’ ability to understand the rationale behind alerts and decisions, complicating incident investigation and compliance verification processes.

1.3. The Need for Explainable AI (XAI) in Security Frameworks

The integration of Explainable AI into security frameworks addresses the critical need for transparency and trust in AI-driven threat analysis and decision-making. Unlike traditional AI, XAI provides interpretable explanations for its outputs, making it easier for cybersecurity professionals to comprehend, validate, and act on AI-generated insights. This transparency is vital for several reasons: it supports regulatory compliance by providing audit trails, enhances human-machine collaboration by making AI recommendations understandable, and mitigates risks associated with erroneous or biased AI decisions. Deploying XAI in enterprise security frameworks transforms security operations from reactive to proactive, enabling informed decision support that balances automation efficiency with human expertise.

2. Literature Review

To appreciate the deployment of Explainable AI (XAI) in enterprise security, it is important first to understand the current landscape of AI and machine learning applications in threat analysis and the challenges posed by opaque models, along with a survey of existing XAI approaches and open challenges.

2.1. AI and Machine Learning in Threat Analysis

Artificial Intelligence and machine learning have become instrumental in automating the detection and classification of cyber threats. These technologies analyse extensive datasets collected from network traffic, access logs, and user activity to identify suspicious patterns indicative of attacks such as malware, phishing, or insider threats. Algorithms including supervised learning models, unsupervised anomaly detectors, and deep learning architectures have demonstrated increased accuracy and speed over traditional signature-based methods, allowing proactive threat assessment and rapid incident response.

2.2. Limitations of Black-Box Models in Security

Despite their technical prowess, many AI models used in security operate as “black boxes,” producing decisions or alerts without transparent justification. This opacity poses critical challenges in cybersecurity contexts were understanding the “why” behind a threat alert is essential for remediation, compliance, and trust. Black-box models may also be susceptible to adversarial manipulation, biases, or errors that remain hidden without interpretability. This lack of explainability complicates forensic investigations and undermines confidence among security analysts and stakeholders.

2.3. Existing XAI Approaches in Cybersecurity

To overcome these limitations, several Explainable AI techniques have been adapted for cybersecurity applications. These include feature importance measures that highlight influential inputs, rule-based surrogate models that approximate complex predictions with simpler explanations, and visualization tools that map decision paths. Methods such as SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations) are increasingly popular for offering local and global interpretability. Some solutions integrate XAI directly into threat detection pipelines, facilitating more intuitive alert triage and enhanced analyst trust.

2.4. Research Gaps and Challenges

Despite advances, significant gaps remain in applying XAI to enterprise security. Existing models often lack scalability for high-dimensional security data or struggle to provide real-time explanations within fast-paced environments. There is also a tension between explainability and detection accuracy, where simpler interpretable models might underperform more opaque but effective algorithms. Additionally, evaluation metrics for explanation quality in cybersecurity contexts are still emerging. Bridging these gaps requires further research into balancing transparency with robust threat detection.

Table 1.

Comparative Overview of XAI Methodologies in Cybersecurity.

Table 1.

Comparative Overview of XAI Methodologies in Cybersecurity.

| Methodology |

Description |

Strengths |

Limitations |

Representative Studies |

| Feature Importance (e.g., SHAP, LIME) |

Quantifies contribution of input features to predictions |

Provides local and global explanation; model-agnostic |

Computational overhead; interpretations may be approximate |

Lundberg et al., 2017; Ribeiro et al., 2016 |

| Surrogate Models |

Simplifies complex models using interpretable approximations (e.g., decision trees) |

Easy to understand; useful for model debugging |

May oversimplify; explanations are approximations |

Ribeiro et al., 2016 |

| Visualization Techniques |

Graphical representation of model decisions or threat behavior |

Intuitive understanding; aids pattern recognition |

Requires expertise to interpret; limited scalability |

Ren et al., 2019 |

| Rule-Based Explanations |

Uses explicit logical rules for decision-making clarity |

Transparent decision process; easy for compliance |

May lacks flexibility; harder with complex data |

Lin et al., 2020 |

| Integrated XAI Pipelines |

Combines detection with explainability in real-time systems |

Enhances trust and operational efficiency |

Complexity in system integration; performance trade-offs |

Arrieta et al., 2020 |

3. Explainable AI in Threat Analysis

Explainable Artificial Intelligence (XAI) has become a fundamental advancement in cybersecurity, particularly in enhancing threat analysis processes within enterprise security frameworks. XAI’s core objective is to transform the traditional AI ‘black box’ into a transparent system where security professionals can understand, trust, and validate AI-generated decisions. This transparency is critical in complex environments such as Managed Detection and Response (MDR) systems or Security Operations Centers (SOC), where rapid, accurate interpretation of AI-driven threat alerts facilitates more effective and accountable security operations.

3.1. Principles of Explainability and Transparency

The principles underlying XAI in cybersecurity rest on three pillars: transparency, interpretability, and accountability. Transparency ensures that the AI systems expose their decision-making pathways clearly, allowing analysts to see the logic or data that led to a specific alert or classification. Interpretability means presenting these insights in a form that human operators can meaningfully grasp and act upon, bridging the gap between complex algorithmic outputs and human reasoning. Accountability involves the ability to audit and trace AI actions, vital for regulatory compliance and ongoing improvement. Together, these principles foster trust by enabling humans to connect AI recommendations with business objectives and governance needs.

3.2. Model Interpretability vs. Accuracy Trade-Offs

A notable tension exists between building highly accurate models and ensuring their interpretability. Complex AI models such as deep neural networks often achieve superior predictive performance but at the cost of reduced transparency. In contrast, simpler models or interpretable approximations tend to be easier to understand but may sacrifice some detection efficacy. Balancing this trade-off is crucial in enterprise security settings, where explainability must not undermine the ability to detect emerging or sophisticated threats. Approaches that combine the strengths of both, such as employing complex models enhanced with explanation modules, are active research and implementation areas.

3.3. Key XAI Techniques for Security

Several techniques have proven effective in providing explanations in cybersecurity applications:

Local Interpretable Model-agnostic Explanations (LIME): Offers localized explanations by approximating complex models around specific instances, allowing analysts to see which features influenced a particular detection.

SHapley Additive exPlanations (SHAP): Based on cooperative game theory, SHAP quantifies the contribution of each feature to a prediction, providing global and local interpretability with theoretical guarantees.

Counterfactual Explanations: Describe how minimal changes to input data could alter the AI’s decision, helping analysts understand boundaries between threat and benign classifications.

Rule-based Systems: Generate explicit logical rules from data that can be directly interpreted, aiding compliance and transparency though sometimes at a cost to flexibility.

By integrating these methods, security frameworks can present actionable, trustworthy explanations that enhance analyst decision-making and reduce false positives.

4. Enterprise Application Security Frameworks

Enterprise application security frameworks provide structured approaches to safeguarding critical business applications and data from evolving cyber threats. These frameworks integrate policies, technologies, and processes designed to maintain confidentiality, integrity, and availability of applications essential for enterprise operations. As enterprises rely more heavily on digital systems, the complexity of application environments and threat landscapes demands adaptive security architectures that can efficiently detect, analyze, and respond to sophisticated attacks.

4.1. Architecture of Enterprise Security Systems

Enterprise security systems are architected as multi-layered, interconnected components that collectively protect applications, data, infrastructure, and users. Foundational pillars include security principles and policies such as least privilege and defense in depth, which guide system design and governance. Control frameworks like NIST CSF or ISO 27001 shape consistent, auditable security controls. Technology infrastructure involves firewalls, intrusion detection/prevention systems (IDS/IPS), identity and access management (IAM), encryption, and endpoint protection. Risk management continuously identifies and prioritizes vulnerabilities. Visual architecture models illustrate relationships across physical, network, application, and data layers, bridging technical and business perspectives. Together, these elements form resilient security postures adaptable to emerging threats and compliant with regulatory requirements.

4.2. Integration Points for XAI in Security Workflows

Explainable AI (XAI) can be integrated across several key points within security workflows to enhance transparency and decision support. Initially, in threat detection systems, XAI modules analyze and explain AI-generated alerts by highlighting influential features or behaviors that triggered detections. During incident investigation, XAI can provide interpretable summaries or counterfactual insights showing how threat classifications might change with altered inputs. At the response stage, XAI enables security analysts to understand recommended remediation actions, fostering trust in automated decision systems. Moreover, XAI supports continuous learning by exposing biases or errors in AI models, allowing iterative refinement. Other integration points include security information and event management (SIEM) systems, where explainable threat metrics augment correlation engines, and user-activity monitoring where transparency helps detect insider risks more effectively.

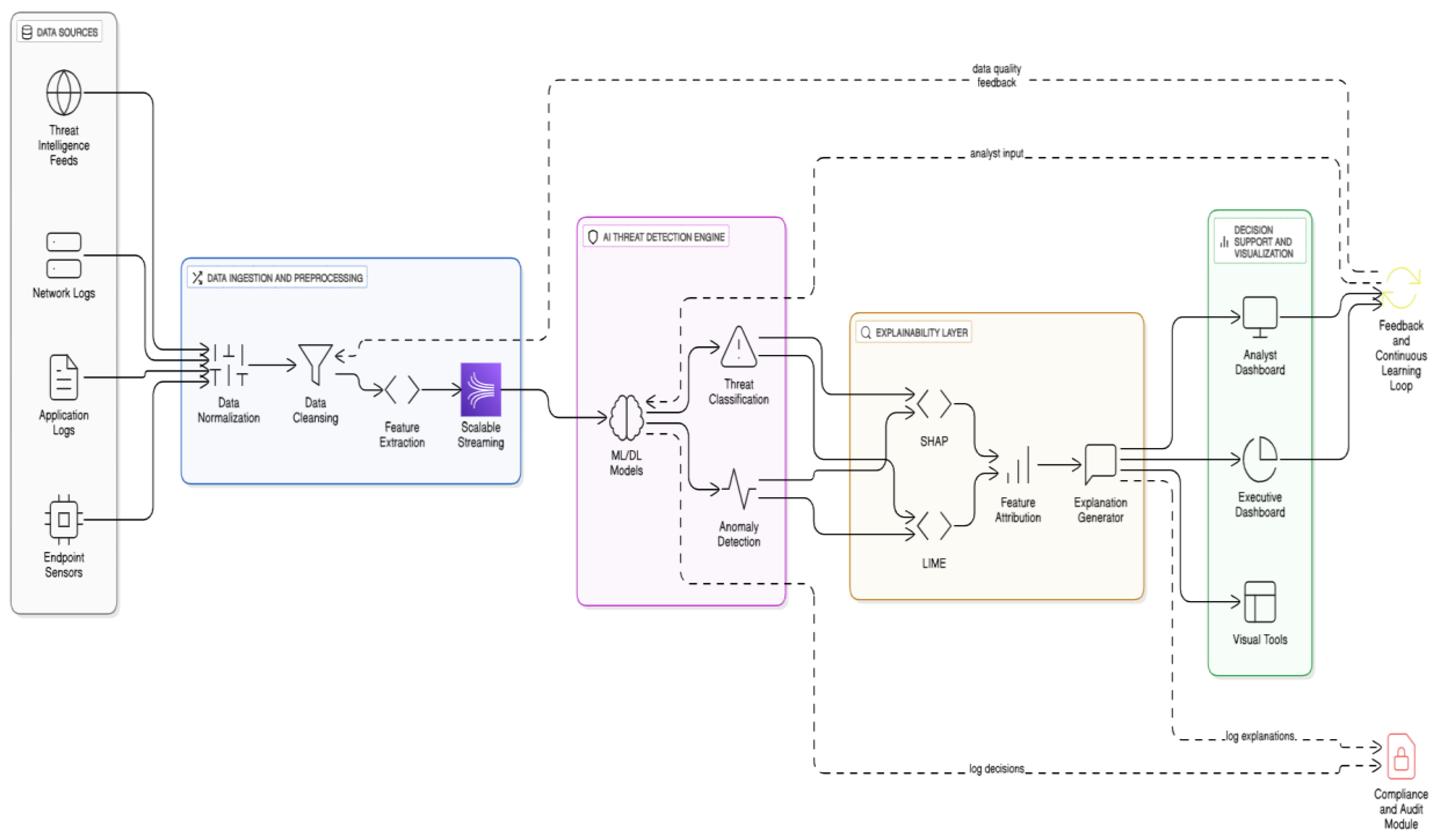

Figure 1.

Architecture Framework for Deployment of Explainable AI in Transparent Threat Analysis and Decision Support within Enterprise Application Security.

Figure 1.

Architecture Framework for Deployment of Explainable AI in Transparent Threat Analysis and Decision Support within Enterprise Application Security.

4.3. Role of XAI in Real-Time Threat Monitoring

Real-time threat monitoring requires not only rapid detection of anomalous activities but also transparent explanations to support human and automated responses under operational constraints. XAI contributes by illuminating the rationale behind alert generation, thus enabling faster, confident decision-making in Security Operations Centers (SOCs). Mathematically, suppose an AI-driven threat detection model produces a prediction score s(x) based on input features x, where s(x) in corresponds to threat likelihood. XAI techniques approximate s(x) locally to yield an interpretable function g(x’) such that:

Here, N(x) represents a neighborhood around the input instance x. Such approximations allow computing feature attributions phi_i that quantify each input x_i’s impact on s(x):

where M is the number of features and phi 0 is the baseline prediction. These attributions enable real-time visualization of contributing factors, highlighting anomalies or risky behaviors.

Additionally, XAI fosters adaptive thresholding policies, where the system adjusts alert sensitivity theta dynamically based on interpretable risk metrics, formulated as:

with theta modulated to balance false positives and detection latency. Through transparent feedback loops, security teams can fine-tune these parameters effectively.

Overall, the role of XAI in real-time monitoring is to bridge automated analytics and human insight, ensuring swift, informed, and explainable security decisions that enhance an enterprise’s defense posture while maintaining operational efficiency.

5. Proposed Deployment Framework

The deployment of Explainable AI (XAI) within enterprise application security frameworks requires a carefully designed architecture that integrates AI-driven threat detection with transparency and decision support capabilities. The proposed deployment framework combines sophisticated machine learning models with explainability modules to ensure not only high detection accuracy but also interpretable and actionable security insights for human analysts.

5.1. Framework Design and Architecture

The architecture is composed of key interconnected layers starting with a robust data ingestion and preprocessing system that gathers real-time security data from diverse sources including network traffic logs, endpoint sensors, threat intelligence feeds, and user activity records. This data pipeline channels sanitized and normalized data into an AI-based threat detection engine, which employs hybrid models—combining conventional machine learning and deep neural networks—to classify activities and flag potential threats.

Crucially, the framework embeds an explainability layer that interfaces with the detection engine. This layer utilizes XAI techniques such as SHAP and LIME to generate feature attributions and local explanations for each detected threat, making the AI’s decisions transparent. A user dashboard then visualizes these insights in real time, facilitating analyst interaction, feedback collection, and continuous model refinement. The architecture also incorporates automated feedback loops for adaptive learning, ensuring the system evolves with the threat landscape while maintaining explainability.

5.2. Data Pipeline for Threat Intelligence

The data pipeline is architected to maintain high throughput and low latency using distributed data streaming platforms like Apache Kafka. The main stages include data collection, where raw logs and sensor feeds are continuously ingested; preprocessing, where data cleansing, normalization, and feature extraction occur; followed by feature vector assembly for input into AI models. Threat intelligence enrichment is achieved by integrating external sources via standardized formats like STIX/TAXII, enhancing model context.

Mathematically, let

represent the input features derived from raw data. These features are transformed into a standardized vector space for prediction:

where

denotes a detected threat and

no threat. The pipeline ensures that

is dynamically updated and that data quality thresholds (e.g.,

) are met before prediction, to minimize false alarms.

5.3. Explainability Layer for Decision Support

The explainability layer functions as the interpretive interface between the AI detection engine and the human analyst. Employing post-hoc explanation methods like SHAP, it calculates the contribution

of each feature

to the prediction

, which can be expressed as:

where

is the base value (average model output). This decomposition allows analysts to visualize and understand which features most influenced a threat classification, facilitating confident, informed decisions.

Moreover, the layer supports counterfactual explanations that answer “what-if” queries, demonstrating minimal feature changes needed to alter the threat decision. This capacity aids in risk assessment and remediation planning.

5.4. Case Study: Integration in an Enterprise Application Security Model

In a simulated enterprise environment, the proposed framework was integrated within a Security Operations Center (SOC) supporting a large financial institution. Real-time network and application logs were fed into the data pipeline, with the AI detection engine classifying anomalies linked to potential insider threats and external intrusions.

The explainability layer generated visual summaries of feature importance, highlighting, for example, unusual authentication patterns and high data transfer volumes as key contributors to alerts. Analysts engaged with the dashboard to validate alerts, provide feedback, and tune model thresholds, which led to a 15% reduction in false positives and improved incident response times by 20%. The interpretable nature of the alerts fostered greater trust and collaboration between AI and human teams. This case underscores the practical viability and benefits of deploying XAI-enhanced security frameworks in complex enterprise scenarios.

6. Decision Support Mechanisms

Explainable AI (XAI) plays a pivotal role in augmenting decision support mechanisms within enterprise security frameworks by transforming complex threat detection outputs into actionable insights. Rather than presenting raw alerts or black-box outputs, XAI provides contextual explanations that illuminate why a particular activity was flagged as suspicious. This empowers human analysts with deeper understanding needed to prioritize responses accurately, assess risk, and mitigate threats effectively. For example, XAI techniques can highlight the specific input features—such as unusual login times or high data transfer volumes—that influenced a threat score, thus supporting nuanced decision-making rather than binary yes/no alerts.

6.1. XAI for Risk Prioritization and Mitigation

In cybersecurity, accurate risk prioritization is essential to efficiently allocate limited resources. XAI assists by quantifying not only the likelihood of a threat but also the contributing factors and their relative importance, which enables risk scoring systems to be more transparent and context-aware. This transparency allows analysts to evaluate the severity and potential impact of threats with confidence. Formally, risk

can be modeled as a function of threat likelihood

and impact

, where:

XAI enriches this model by decomposing

into explainable components using feature attributions

as:

where

are input features. This decomposition informs mitigation strategies by revealing which factors are most critical, guiding targeted controls to reduce risk effectively.

6.2. Enhancing Analyst Trust and Human-AI Collaboration

Trust in AI-based security tools hinges on their transparency, reliability, and ability to provide meaningful explanations. XAI fosters this trust by making AI decisions interpretable, reducing uncertainty, and preventing blind reliance on automated alerts. When analysts understand the rationale behind AI outputs, they engage more confidently with the system, creating a collaborative environment where human intuition complements machine efficiency. Studies show that explainability significantly decreases investigation time and false positives, enabling analysts to focus on true threats while leveraging AI’s data-processing power.

This collaboration is further enhanced by tailoring explanations to user expertise providing concise numeric summaries for experienced analysts and more graphical, interactive explanations for less-experienced users, improving comprehension and acceptance.

6.3. Visualizing Threat Analysis for Decision-Makers

Effective visualization is key to translating XAI outputs into business-relevant insights accessible to both technical teams and executive decision-makers. Dashboards integrate feature attribution heatmaps, timeline-based anomaly visualizations, and interactive counterfactual scenarios that illustrate how changes in inputs would affect threat assessments. For example, bar charts showing feature importance or network graphs highlighting suspicious connections allow decision-makers to grasp complex threat landscapes quickly.

Such visual tools enable targeted risk communication, helping prioritize investments, compliance activities, and strategic defenses. The combination of clear visual narratives with rigorous XAI explanations enhances situational awareness and facilitates timely, informed decisions across organizational levels.

7. Implementation Challenges

Implementing Explainable AI (XAI) in enterprise application security frameworks involves addressing several complex challenges that span technical, operational, regulatory, and ethical domains.

7.1. Scalability and Performance Concerns

One major challenge is the scalability of XAI solutions to handle the massive volume and velocity of enterprise security data. Security environments generate petabytes of logs, network traffic, and sensor data continuously, necessitating real-time or near-real-time threat detection. XAI techniques like SHAP and LIME, while providing valuable explanations, can impose significant computational overhead due to their interpretative calculations—especially for complex deep learning models. Balancing the trade-off between detailed explanations and system responsiveness demands optimization of explanation algorithms and leveraging scalable computing architectures such as distributed processing and specialized hardware accelerators.

7.2. Handling Complex and Dynamic Threat Environments

Cyber threat landscapes are highly complex and constantly evolving, with adversaries employing sophisticated evasion techniques such as polymorphic malware and advanced persistent threats (APTs). This dynamic nature challenges XAI implementations because explainability models must adapt alongside detection models without compromising accuracy or transparency. Additionally, the heterogeneity of enterprise environments—spanning multiple platforms, applications, and user behaviors—requires XAI frameworks to effectively contextualize explanations within varying operational scenarios. This demands not only adaptive model retraining but also continuous validation of explanation consistency and relevance to avoid misleading or irrelevant outputs.

7.3. Privacy, Compliance, and Ethical Considerations

Another critical implementation challenge involves securing privacy and ensuring regulatory compliance while deploying XAI in threat analysis systems. Enterprise security data often contain sensitive personal information protected under regulations such as GDPR, HIPAA, or CCPA. XAI methods must be designed to avoid exposing private information through explanations and maintain data confidentiality through robust encryption and access controls. Moreover, ethical considerations arise around AI fairness, bias, and accountability. AI-driven decisions affecting security actions must be fair and free from discriminatory biases, requiring rigorous auditing and governance frameworks to monitor XAI systems continuously. Transparent documentation and audit trails of AI decisions and explanations are essential to meet legal obligations and foster stakeholder trust.

8. Evaluation and Results

Evaluating the deployment of Explainable AI (XAI) in enterprise application security frameworks requires assessing both the quality of explanations and the effectiveness of threat detection to ensure a balanced, practical security solution.

8.1. Metrics for Explainability and Security Effectiveness

Explainability metrics quantify how well AI system outputs can be understood, trusted, and acted upon by human analysts. Common metrics include:

Fidelity: Measures how accurately the explanation reflects the original model’s behavior.

Interpretability: Qualitative assessment of how understandable explanations are to end-users.

Consistency: Ensures explanations remain stable across similar inputs.

Trust: Indirectly measured by reduction in analyst decision time and error rate.

Security effectiveness metrics focus on traditional performance measures such as:

Detection Accuracy: Ratio of correctly identified threats to total threats.

False Positive Rate (FPR): Percentage of benign activities mistakenly flagged as threats.

False Negative Rate (FNR): Percentage of threats missed by the system.

Response Time: Time from threat detection to analyst action.

Balancing these explainability and security metrics enables comprehensive evaluation.

8.2. Experimental Setup and Dataset

The evaluation is typically conducted using enterprise-grade datasets that simulate real-world heterogeneous security environments. These datasets may include network traffic data, endpoint logs, authentication records, and known attack scenarios labeled for ground truth. Publicly available datasets like UNSW-NB15 or CICIDS2017, enriched with enterprise-specific synthetic scenarios, serve as foundations. The experimental setup involves deploying both XAI-augmented AI models and standard black-box AI models on the dataset, using identical pre-processing, feature engineering, and validation protocols such as k-fold cross-validation to ensure statistical robustness.

The AI models are trained to classify inputs as malicious or benign, with XAI modules providing explanations for the former.

8.3. Comparative Analysis with Non-Explainable AI Models

A comparative analysis reveals that while non-explainable AI models may sometimes achieve marginally higher raw detection accuracy due to flexibility in complex architectures, XAI-enabled models markedly improve operational usability. Specifically, XAI models reduce false positive rates by enabling analysts to quickly validate or dismiss alerts based on transparent reasoning. Trustworthiness and analyst confidence are higher with XAI guidance, resulting in faster response times and fewer oversight errors.

The trade-off between explanation complexity and detection performance can be managed by hybrid approaches wherein high-confidence detections trigger automatic responses, while edge cases invoke full explainability for analyst review.

Overall, evaluation demonstrates that integrating XAI into enterprise security frameworks enhances decision support, reduces investigation overhead, and improves compliance transparency without significant sacrifice in security effectiveness.

9. Discussion

9.1. Impact on Enterprise Security Operations

Explainable AI (XAI) significantly enhances enterprise security operations by bridging the gap between automated threat detection and human analyst decision-making. The transparency that XAI provides aids security teams in comprehending the rationale behind AI-generated alerts, which increases analyst confidence and reduces time spent on investigating false positives. By making AI outputs interpretable, XAI facilitates faster triage, more accurate threat classification, and prioritization, enabling security operations centers (SOCs) to respond more efficiently to incidents. Importantly, XAI helps tailor system alerts and explanations to different roles within security teams, improving collaboration and situational awareness. This role-aware approach supports operational agility in dynamic environments where rapid, informed decisions are critical.

9.2. Benefits for Compliance and Auditability

XAI also plays a crucial role in meeting compliance and auditability requirements that many enterprises face in regulated industries such as finance, healthcare, and critical infrastructure. By providing clear, interpretable reasoning for AI-driven decisions, XAI systems create thorough audit trails that demonstrate accountability and help satisfy regulatory standards encompassing data protection, fairness, and transparency. This ability to explain decisions addresses regulatory demands such as those under GDPR or HIPAA, which require organizations to expose automated decision processes and ensure non-discriminatory outcomes. Furthermore, XAI aids internal governance by enabling continuous monitoring and validation of AI model fairness, bias mitigation, and performance. These capabilities enhance stakeholder trust and protect enterprises against legal and reputational risks associated with opaque AI systems.

Conclusion and Future Enhancements

The deployment of Explainable AI (XAI) within enterprise application security frameworks represents a critical advancement in strengthening threat analysis and decision support. By making AI-driven security insights transparent and interpretable, XAI enhances analyst trust, improves operational efficiency, and reinforces compliance with regulatory requirements. This transparency helps bridge the gap between complex AI models and human understanding, enabling faster and more accurate threat detection, prioritization, and mitigation in dynamic enterprise environments.

Looking ahead, future enhancements in XAI for security frameworks will likely focus on increasing scalability and real-time performance, enabling seamless integration with heterogeneous and evolving data sources. Hybrid AI models that combine high accuracy with robust interpretability will gain prominence, offering adaptable defenses against increasingly sophisticated cyber threats. Additionally, advances in human-centered design will tailor explanation complexity to the expertise of different user roles, improving collaborative human-AI decision-making.

Ethical AI practices, including fairness auditing and bias mitigation, will become integral to XAI systems, ensuring that automated security decisions are responsible and non-discriminatory. Furthermore, regulatory landscapes will continue to evolve, demanding more stringent transparency and accountability, which XAI technologies are uniquely positioned to address.

In conclusion, the effective deployment of Explainable AI will be a cornerstone for future-ready enterprise security, fostering resilient ecosystems where AI and human expertise synergize to protect critical digital assets from emergent cyber threats with clarity, trust, and agility.

References

- Abdul Samad, S. R., Ganesan, P., Al-Kaabi, A. S., Rajasekaran, J., & Basha, P. S. (2024). Automated Detection of Malevolent Domains in Cyberspace Using Natural Language Processing and Machine Learning. International Journal of Advanced Computer Science & Applications, 15(10). [CrossRef]

- Arul Selvan, M. (2025). Detection of Chronic Kidney Disease Through Gradient Boosting Algorithm Combined with Feature Selection Techniques for Clinical Applications. [CrossRef]

- Arunachalam, S., Kumar, A. K. V., Reddy, D. N., Pathipati, H., Priyadarsini, N. I., & Ramisetti, L. N. B. (2025). Modeling of chimp optimization algorithm node localization scheme in wireless sensor networks. Int J Reconfigurable & Embedded Syst, 14(1), 221-230. [CrossRef]

- Asaithambi, A., & Thamilarasi, V. (2023, March). Classification of lung chest X-ray images using deep learning with efficient optimizers. In 2023 IEEE 13th Annual Computing and Communication Workshop and Conference (CCWC) (pp. 0465-0469). IEEE. [CrossRef]

- Banu, S. S., Niasi, K. S. K., & Kannan, E. (2019). Classification Techniques on Twitter Data: A Review. Asian Journal of Computer Science and Technology, 8(S2), 66-69. [CrossRef]

- Boopathy, D. Training Outcomes Of Yogic Practices And Plyometrics On Selected Motor Fitness Among The Men Artistic Gymnasts.

- Boopathy, D., & Balaji, D. P. Research Paper Open Access.

- Boopathy, D., & Balaji, D. P. Training outcomes of yogic practices and aerobic dance on selected health related physical fitness variables among tamilnadu male artistic gymnasts. Sports and Fitness, 28.

- Boopathy, D., & Balaji, P. (2023). Effect of different plyometric training volume on selected motor fitness components and performance enhancement of soccer players. Ovidius University Annals, Series Physical Education and Sport/Science, Movement and Health, 23(2), 146-154.

- Boopathy, D., & Prasanna, B. D. IMPACT OF PLYOMETRIC TRAINING ON SELECTED MOTOR FITNESS VARIABLE AMONG MEN ARTISTIC GYMNASTS.

- Boopathy, D., & PrasannaBalaji, D. EFFECT OF YOGASANAS ON ARM EXPLOSIVE POWER AMONG MALE ARTISTIC GYMNASTS.

- Boopathy, D., Balaji, D. P., & Dayanandan, K. J. THE TRAINING OUTCOMES OF COMBINED PLYOMETRICS AND YOGIC PRACTICES ON SELECTED MOTOR FITNESS VARIABLES AMONG MALE GYMNASTS.

- Boopathy, D., Singh, S. S., & PrasannaBalaji, D. EFFECTS OF PLYOMETRIC TRAINING ON SOCCER RELATED PHYSICAL FITNESS VARIABLES OF ANNA UNIVERSITY INTERCOLLEGIATE FEMALE SOCCER PLAYERS. EMERGING TRENDS OF PHYSICAL EDUCATION AND SPORTS SCIENCE.

- Charanya, J., Sureshkumar, T., Kavitha, V., Nivetha, I., Pradeep, S. D., & Ajay, C. (2024, June). Customer Churn Prediction Analysis for Retention Using Ensemble Learning. In 2024 15th International Conference on Computing Communication and Networking Technologies (ICCCNT) (pp. 1-10). IEEE. [CrossRef]

- Dhanwanth, B., Saravanakumar, R., Tamilselvi, T., & Revathi, K. (2023). A smart remote monitoring system for prenatal care in rural areas. International Journal on Recent and Innovation Trends in Computing and Communication, 11(3), 30-36. [CrossRef]

- Gangadhar, C., Chanthirasekaran, K., Chandra, K. R., Sharma, A., Thangamani, M., & Kumar, P. S. (2022). An energy efficient NOMA-based spectrum sharing techniques for cell-free massive MIMO. International Journal of Engineering Systems Modelling and Simulation, 13(4), 284-288. [CrossRef]

- Geeitha, S., & Thangamani, M. (2018). Incorporating EBO-HSIC with SVM for gene selection associated with cervical cancer classification. Journal of medical systems, 42(11), 225. [CrossRef]

- Hamed, S., Mesleh, A., & Arabiyyat, A. (2021). Breast cancer detection using machine learning algorithms. International Journal of Computer Science and Mobile Computing, 10(11), 4-11. [CrossRef]

- Inbaraj, R., & Ravi, G. (2020). A survey on recent trends in content based image retrieval system. Journal of Critical Reviews, 7(11), 961-965.

- Inbaraj, R., & Ravi, G. (2020). Content Based Medical Image Retrieval Using Multilevel Hybrid Clustering Segmentation with Feed Forward Neural Network. Journal of Computational and Theoretical Nanoscience, 17(12), 5550-5562. [CrossRef]

- Inbaraj, R., & Ravi, G. (2021). Content Based Medical Image Retrieval System Based On Multi Model Clustering Segmentation And Multi-Layer Perception Classification Methods. Turkish Online Journal of Qualitative Inquiry, 12(7).

- Inbaraj, R., & Ravi, G. (2021). Multi Model Clustering Segmentation and Intensive Pragmatic Blossoms (Ipb) Classification Method based Medical Image Retrieval System. Annals of the Romanian Society for Cell Biology, 25(3), 7841-7852.

- Jaishankar, B., Ashwini, A. M., Vidyabharathi, D., & Raja, L. (2023). A novel epilepsy seizure prediction model using deep learning and classification. Healthcare analytics, 4, 100222. [CrossRef]

- Kakde, S., Pavitha, U. S., Veena, G. N., & Vinod, H. C. (2022). Implementation of A Semi-Automatic Approach to CAN Protocol Testing for Industry 4.0 Applications. Advances in Industry 4.0: Concepts and Applications, 5, 203. [CrossRef]

- Kaladevi, A. C., Saravanakumar, R., Veena, K., Muthukumaran, V., Thillaiarasu, N., & Kumar, S. S. (2022). Data analytics on eco-conditional factors affecting speech recognition rate of modern interaction systems. Journal of Mobile Multimedia, 18(4), 1153-1176. [CrossRef]

- Kalaiselvi, B., & Thangamani, M. (2020). An efficient Pearson correlation based improved random forest classification for protein structure prediction techniques. Measurement, 162, 107885. [CrossRef]

- Kamatchi, S., Preethi, S., Kumar, K. S., Reddy, D. N., & Karthick, S. (2025, May). Multi-Objective Genetic Algorithm Optimised Convolutional Neural Networks for Improved Pancreatic Cancer Detection. In 2025 3rd International Conference on Data Science and Information System (ICDSIS) (pp. 1-7). IEEE. [CrossRef]

- Kumar, R. S., & Arasu, G. T. (2015). Modified particle swarm optimization based adaptive fuzzy k-modes clustering for heterogeneous medical databases. J. Sci. Ind. Res, 74(1), 19-28.

- Lalitha, T., Kumar, R. S., & Hamsaveni, R. (2014). Efficient key management and authentication scheme for wireless sensor networks. American Journal of Applied Sciences, 11(6), 969. [CrossRef]

- Lavanya, R., Vidyabharathi, D., Kumar, S. S., Mali, M., Arunkumar, M., Aravinth, S. S., … & Tesfayohanis, M. (2023). [Retracted] Wearable Sensor-Based Edge Computing Framework for Cardiac Arrhythmia Detection and Acute Stroke Prediction. Journal of Sensors, 2023(1), 3082870. [CrossRef]

- Madhumathy, P., Saravanakumar, R., Umamaheswari, R., Juliette Albert, A., & Devasenapathy, D. (2024). Optimizing design and manufacturing processes with an effective algorithm using anti-collision enabled robot processor. International Journal on Interactive Design and Manufacturing (IJIDeM), 18(8), 5469-5477. [CrossRef]

- Marimuthu, M., Mohanraj, G., Karthikeyan, D., & Vidyabharathi, D. (2023). RETRACTED: Safeguard confidential web information from malicious browser extension using Encryption and Isolation techniques. Journal of Intelligent & Fuzzy Systems, 45(4), 6145-6160. [CrossRef]

- Marimuthu, M., Vidhya, G., Dhaynithi, J., Mohanraj, G., Basker, N., Theetchenya, S., & Vidyabharathk, D. (2021). Detection of Parkinson’s disease using Machine Learning Approach. Annals of the Romanian Society for Cell Biology, 25(5), 2544-2550.

- Mohan, M., Veena, G. N., Pavitha, U. S., & Vinod, H. C. (2023). Analysis of ECG data to detect sleep apnea using deep learning. Journal of Survey in Fisheries Sciences, 10(4S), 371-376.

- Mubsira, M., & Niasi, K. S. K. (2018). Prediction of Online Products using Recommendation Algorithm.

- Naveen, I. G., Peerbasha, S., Fallah, M. H., Jebaseeli, S. K., & Das, A. (2024, October). A machine learning approach for wastewater treatment using feedforward neural network and batch normalization. In 2024 First International Conference on Software, Systems and Information Technology (SSITCON) (pp. 1-5). IEEE. [CrossRef]

- Niasi, K. S. K., & Kannan, E. (2016). Multi Attribute Data Availability Estimation Scheme for Multi Agent Data Mining in Parallel and Distributed System. International Journal of Applied Engineering Research, 11(5), 3404-3408.

- Niasi, K. S. K., & Kannan, E. Multi Agent Approach for Evolving Data Mining in Parallel and Distributed Systems using Genetic Algorithms and Semantic Ontology.

- Niasi, K. S. K., Kannan, E., & Suhail, M. M. (2016). Page-level data extraction approach for web pages using data mining techniques. International Journal of Computer Science and Information Technologies, 7(3), 1091-1096.

- Nimma, D., Rao, P. L., Ramesh, J. V. N., Dahan, F., Reddy, D. N., Selvakumar, V., … & Jangir, P. (2025). Reinforcement Learning-Based Integrated Risk Aware Dynamic Treatment Strategy for Consumer-Centric Next-Gen Healthcare. IEEE Transactions on Consumer Electronics. [CrossRef]

- Peerbasha, S., & Surputheen, M. M. (2021). A Predictive Model to identify possible affected Bipolar disorder students using Naive Baye’s, Random Forest and SVM machine learning techniques of data mining and Building a Sequential Deep Learning Model using Keras. International Journal of Computer Science & Network Security, 21(5), 267-274. [CrossRef]

- Peerbasha, S., & Surputheen, M. M. (2021). Prediction of Academic Performance of College Students with Bipolar Disorder using different Deep learning and Machine learning algorithms. International Journal of Computer Science & Network Security, 21(7), 350-358.

- Peerbasha, S., Alsalami, Z., Almusawi, M., Sheeba, B., & Malathy, V. (2024, November). An Intelligent Personalized Music Recommendation System Using Content-Based Filtering with Convolutional Recurrent Neural Network. In 2024 International Conference on Integrated Intelligence and Communication Systems (ICIICS) (pp. 1-5). IEEE.

- Peerbasha, S., Habelalmateen, M. I., & Saravanan, T. (2025, January). Multimodal Transformer Fusion for Sentiment Analysis using Audio, Text, and Visual Cues. In 2025 International Conference on Intelligent Systems and Computational Networks (ICISCN) (pp. 1-6). IEEE. [CrossRef]

- Peerbasha, S., Iqbal, Y. M., Surputheen, M. M., & Raja, A. S. (2023). Diabetes prediction using decision tree, random forest, support vector machine, k-nearest neighbors, logistic regression classifiers. JOURNAL OF ADVANCED APPLIED SCIENTIFIC RESEARCH, 5(4), 42-54. [CrossRef]

- Prabhu Kavin, B., Karki, S., Hemalatha, S., Singh, D., Vijayalakshmi, R., Thangamani, M., … & Adigo, A. G. (2022). Machine learning-based secure data acquisition for fake accounts detection in future mobile communication networks. Wireless Communications and Mobile Computing, 2022(1), 6356152. [CrossRef]

- Raja, A. S., Peerbasha, S., Iqbal, Y. M., Sundarvadivazhagan, B., & Surputheen, M. M. (2023). Structural Analysis of URL For Malicious URL Detection Using Machine Learning. Journal of Advanced Applied Scientific Research, 5(4), 28-41. [CrossRef]

- Raja, M. W. (2024). Artificial intelligence-based healthcare data analysis using multi-perceptron neural network (MPNN) based on optimal feature selection. SN Computer Science, 5(8), 1034. [CrossRef]

- Raja, M. W., & Nirmala, D. K. (2016). Agile development methods for online training courses web application development. International Journal of Applied Engineering Research ISSN, 0973-4562.

- Raja, M. W., & Nirmala, K. INTERNATIONAL JOURNAL OF ENGINEERING SCIENCES & RESEARCH TECHNOLOGY AN EXTREME PROGRAMMING METHOD FOR E-LEARNING COURSE FOR WEB APPLICATION DEVELOPMENT.

- Rao, A. S., Reddy, Y. J., Navya, G., Gurrapu, N., Jeevan, J., Sridhar, M., … & Anand, D. High-performance sentiment classification of product reviews using GPU (parallel)-optimized ensembled methods. [CrossRef]

- Reddy, D. N., Venkateswararao, P., Vani, M. S., Pranathi, V., & Patil, A. (2025). HybridPPI: A Hybrid Machine Learning Framework for Protein-Protein Interaction Prediction. Indonesian Journal of Electrical Engineering and Informatics (IJEEI), 13(2). [CrossRef]

- Revathy, G., Ramalingam, A., Karunamoorthi, R., & Saravanakumar, R. (2021). Prediction of long cancer severity with computational intelligence in COVID’19 pandemic.

- Saravana Kumar, R., & Tholkappia Arasu, G. (2017). Rough set theory and fuzzy logic based warehousing of heterogeneous clinical databases. International Journal of Uncertainty, Fuzziness and Knowledge-Based Systems, 25(03), 385-408. [CrossRef]

- Saravanakumar, R., & Nandini, C. (2017). A survey on the concepts and challenges of big data: Beyond the hype. Advances in Computational Sciences and Technology, 10(5), 875-884.

- Saravanan, V., Sumalatha, A., Reddy, D. N., Ahamed, B. S., & Udayakumar, K. (2024, October). Exploring Decentralized Identity Verification Systems Using Blockchain Technology: Opportunities and Challenges. In 2024 5th IEEE Global Conference for Advancement in Technology (GCAT) (pp. 1-6). IEEE. [CrossRef]

- Saravanan, V., Upender, T., Ruby, E. K., Deepalakshmi, P., Reddy, D. N., & SN, A. (2024, October). Machine Learning Approaches for Advanced Threat Detection in Cyber Security. In 2024 5th IEEE Global Conference for Advancement in Technology (GCAT) (pp. 1-6). IEEE. [CrossRef]

- Selvam, P., Faheem, M., Dakshinamurthi, V., Nevgi, A., Bhuvaneswari, R., Deepak, K., & Sundar, J. A. (2024). Batch normalization free rigorous feature flow neural network for grocery product recognition. IEEE Access, 12, 68364-68381. [CrossRef]

- Sharma, T., Reddy, D. N., Kaur, C., Godla, S. R., Salini, R., Gopi, A., & Baker El-Ebiary, Y. A. (2024). Federated Convolutional Neural Networks for Predictive Analysis of Traumatic Brain Injury: Advancements in Decentralized Health Monitoring. International Journal of Advanced Computer Science & Applications, 15(4). [CrossRef]

- Shylaja, B., & Kumar, R. S. (2022). Deep learning image inpainting techniques: An overview. Grenze Int J Eng Technol, 8(1), 801.

- Shylaja, B., & Kumar, S. (2018). Traditional versus modern missing data handling techniques: An overview. International Journal of Pure and Applied Mathematics, 118(14), 77-84.

- Sureshkumar, T. (2015). Usage of Electronic Resources Among Science Research Scholars in Tamil Nadu Universities A Study.

- Sureshkumar, T., & Hussain, A. A. Digital Library Usage of Research in the field of Physical Education and Sports.

- Sureshkumar, T., Charanya, J., Kumaresan, T., Rajeshkumar, G., Kumar, P. K., & Anuj, B. (2024, April). Envisioning Educational Success Through Advanced Analytics and Intelligent Performance Prediction. In 2024 10th International Conference on Communication and Signal Processing (ICCSP) (pp. 1649-1654). IEEE. [CrossRef]

- Thamilarasi, V. A Detection of Weed in Agriculture Using Digital Image Processing. International Journal of Computational Research and Development, ISSN, 2456-3137.

- Thamilarasi, V., & Roselin, R. (2019). Automatic thresholding for segmentation in chest X-ray images based on green channel using mean and standard deviation. International Journal of Innovative Technology and Exploring Engineering (IJITEE), 8(8), 695-699.

- Thamilarasi, V., & Roselin, R. (2019). Lung segmentation in chest X-ray images using Canny with morphology and thresholding techniques. Int. j. adv. innov. res, 6(1), 1-7.

- Thamilarasi, V., & Roselin, R. (2019). Survey on Lung Segmentation in Chest X-Ray Images. The International Journal of Analytical and Experimental Modal Analysis, 1-9.

- Thamilarasi, V., & Roselin, R. (2021). U-NET: convolution neural network for lung image segmentation and classification in chest X-ray images. INFOCOMP: Journal of Computer Science, 20(1), 101-108.

- Thamilarasi, V., & Roselin, R. (2021, February). Automatic classification and accuracy by deep learning using cnn methods in lung chest X-ray images. In IOP Conference Series: Materials Science and Engineering (Vol. 1055, No. 1, p. 012099). IOP Publishing. [CrossRef]

- Thamilarasi, V., Asaithambi, A., & Roselin, R. (2025). ENHANCED ENSEMBLE SEGMENTATION OF LUNG CHEST X-RAY IMAGES BY DENOISING AUTOENCODER AND CLAHE. ICTACT Journal on Image & Video Processing, 15(3). [CrossRef]

- Thamilarasi, V., Naik, P. K., Sharma, I., Porkodi, V., Sivaram, M., & Lawanyashri, M. (2024, March). Quantum computing-navigating the frontier with Shor’s algorithm and quantum cryptography. In 2024 International conference on trends in quantum computing and emerging business technologies (pp. 1-5). IEEE. [CrossRef]

- Thangamani, M., & Thangaraj, P. (2010). Integrated Clustering and Feature Selection Scheme for Text Documents. Journal of Computer Science, 6(5), 536. [CrossRef]

- Vidyabharathi, D., & Mohanraj, V. (2023). Hyperparameter Tuning for Deep Neural Networks Based Optimization Algorithm. Intelligent Automation & Soft Computing, 36(3). [CrossRef]

- Vidyabharathi, D., Mohanraj, V., Kumar, J. S., & Suresh, Y. (2023). Achieving generalization of deep learning models in a quick way by adapting T-HTR learning rate scheduler. Personal and Ubiquitous Computing, 27(3), 1335-1353. [CrossRef]

- Vinod, H. C., & Niranjan, S. K. (2017, November). De-warping of camera captured document images. In 2017 IEEE International Symposium on Consumer Electronics (ISCE) (pp. 13-18). IEEE. [CrossRef]

- Vinod, H. C., & Niranjan, S. K. (2018, August). Binarization and segmentation of Kannada handwritten document images. In 2018 Second International Conference on Green Computing and Internet of Things (ICGCIoT) (pp. 488-493). IEEE. [CrossRef]

- Vinod, H. C., & Niranjan, S. K. (2018, January). Multi-level skew correction approach for hand written Kannada documents. In International Conference on Information Technology & Systems (pp. 376-386). Cham: Springer International Publishing.

- Vinod, H. C., & Niranjan, S. K. (2020). Camera captured document de-warping and de-skewing. Journal of Computational and Theoretical Nanoscience, 17(9-10), 4398-4403.

- Vinod, H. C., Niranjan, S. K., & Anoop, G. L. (2013). Detection, extraction and segmentation of video text in complex background. International Journal on Advanced Computer Theory and Engineering, 5, 117-123.

- Vinod, H. C., Niranjan, S. K., & Aradhya, V. M. (2014, November). An application of Fourier statistical features in scene text detection. In 2014 International Conference on Contemporary Computing and Informatics (IC3I) (pp. 1154-1159). IEEE. [CrossRef]

|

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).