Submitted: 22 September 2025

Posted: 23 September 2025


Abstract

Keywords:

1. Introduction

1.1. Background

1.2. Research Problem

1.3. Research Objectives

1.4. Research Questions

2. Theoretical Framework

2.1. Responsible Innovation Theory (RRI)

2.2. Value-Sensitive Design (VSD)

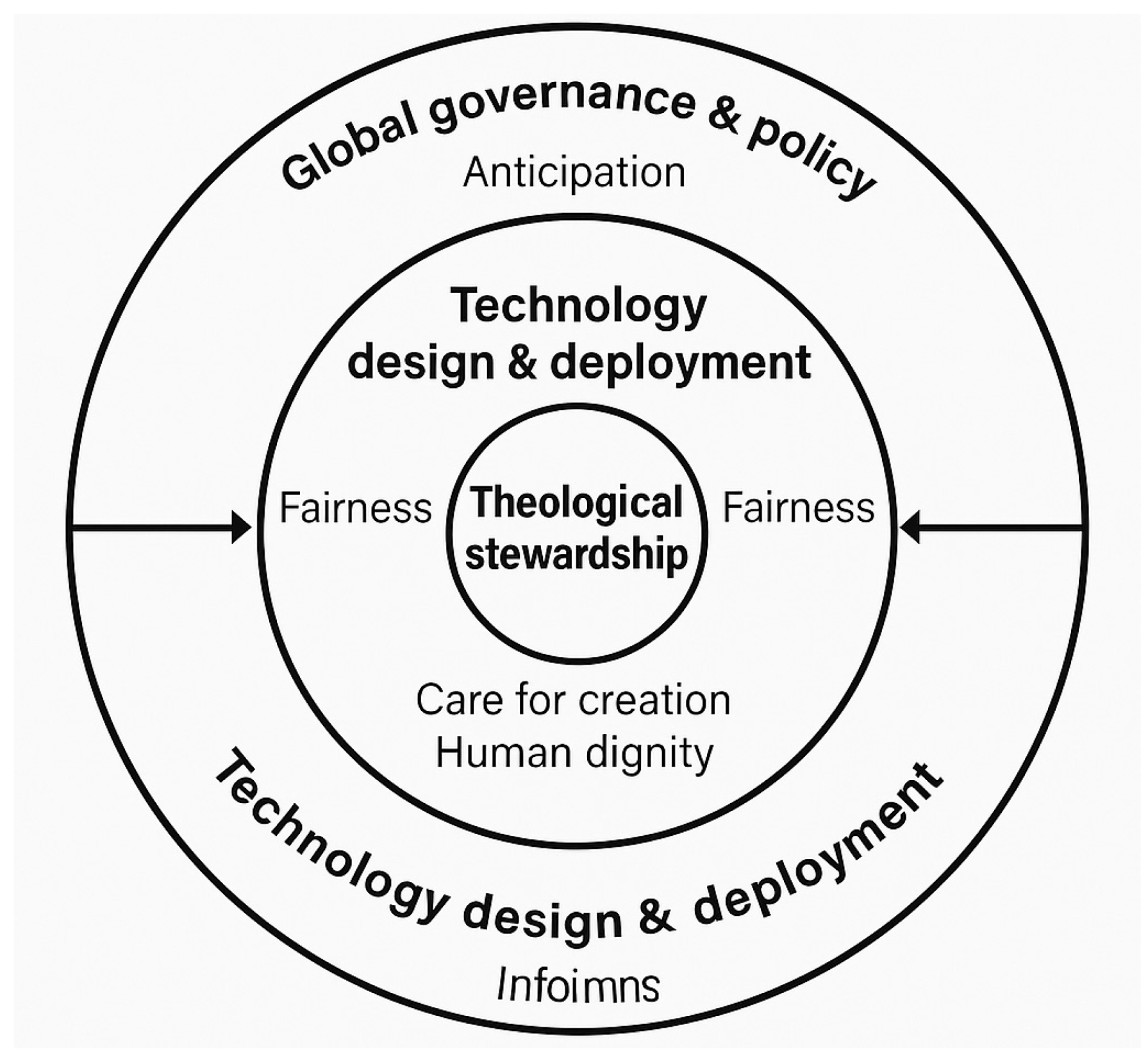

2.3. Theological Stewardship Framework

3. Methodology

3.1. Systematic Literature Review

3.2. Policy and Document Analysis

3.3. NLP-Based Meta-Synthesis

3.4. Theological Hermeneutics

3.5. Case Illustrations (Secondary Data)

4. Findings & Discussion

4.1. How Does Integrating AI into Agriculture Reshape Human Stewardship of the Earth and Food Systems? (RQ1)

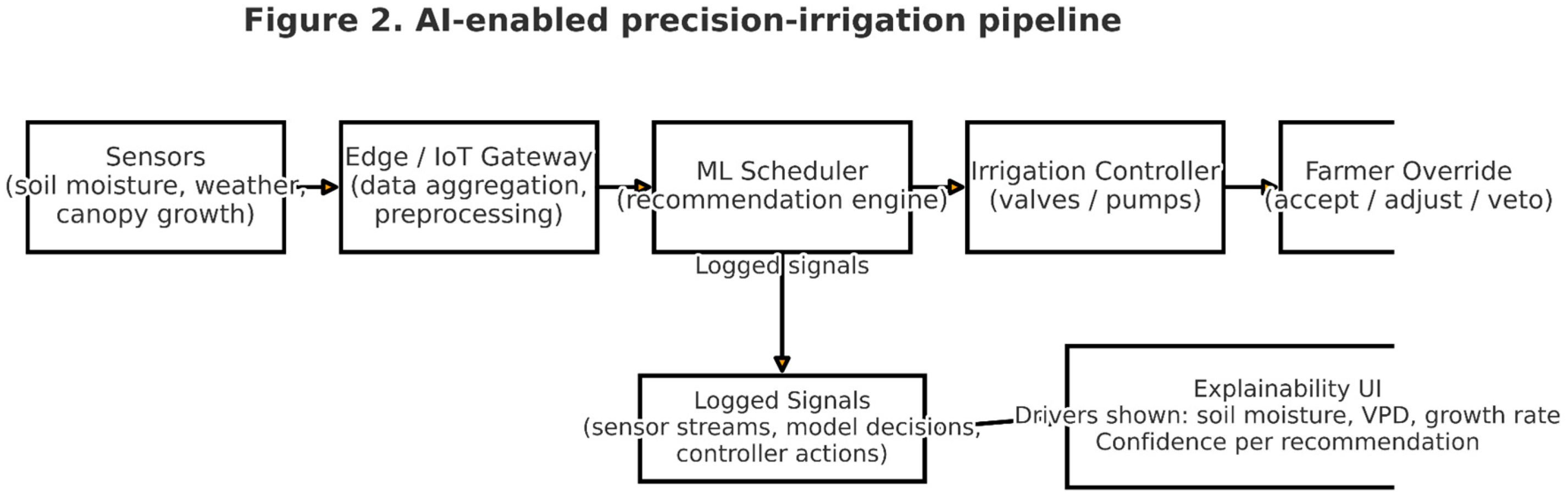

4.1.1. Enhancing Stewardship Through Precision and Efficiency

4.1.2. Diminishing or Displacing the Human Element

4.2. What Ethical and Spiritual Risks Emerge When Intelligent Agriculture Displaces Traditional Agrarian Ethics and Community Practices? (RQ2)

4.2.1. Exacerbating Inequalities (“Digital Divide” in the Fields)

4.2.2. Loss of Traditional Knowledge and Cultural Heritage

4.2.3. Dependency and Loss of Autonomy

4.2.4. Civilizational and Ethical Consequences

4.3. How Can Governance Frameworks Embed Theological and Ethical Principles—Such as Stewardship, Equity, and Justice—Into the Development of Intelligent Agriculture? (RQ3)

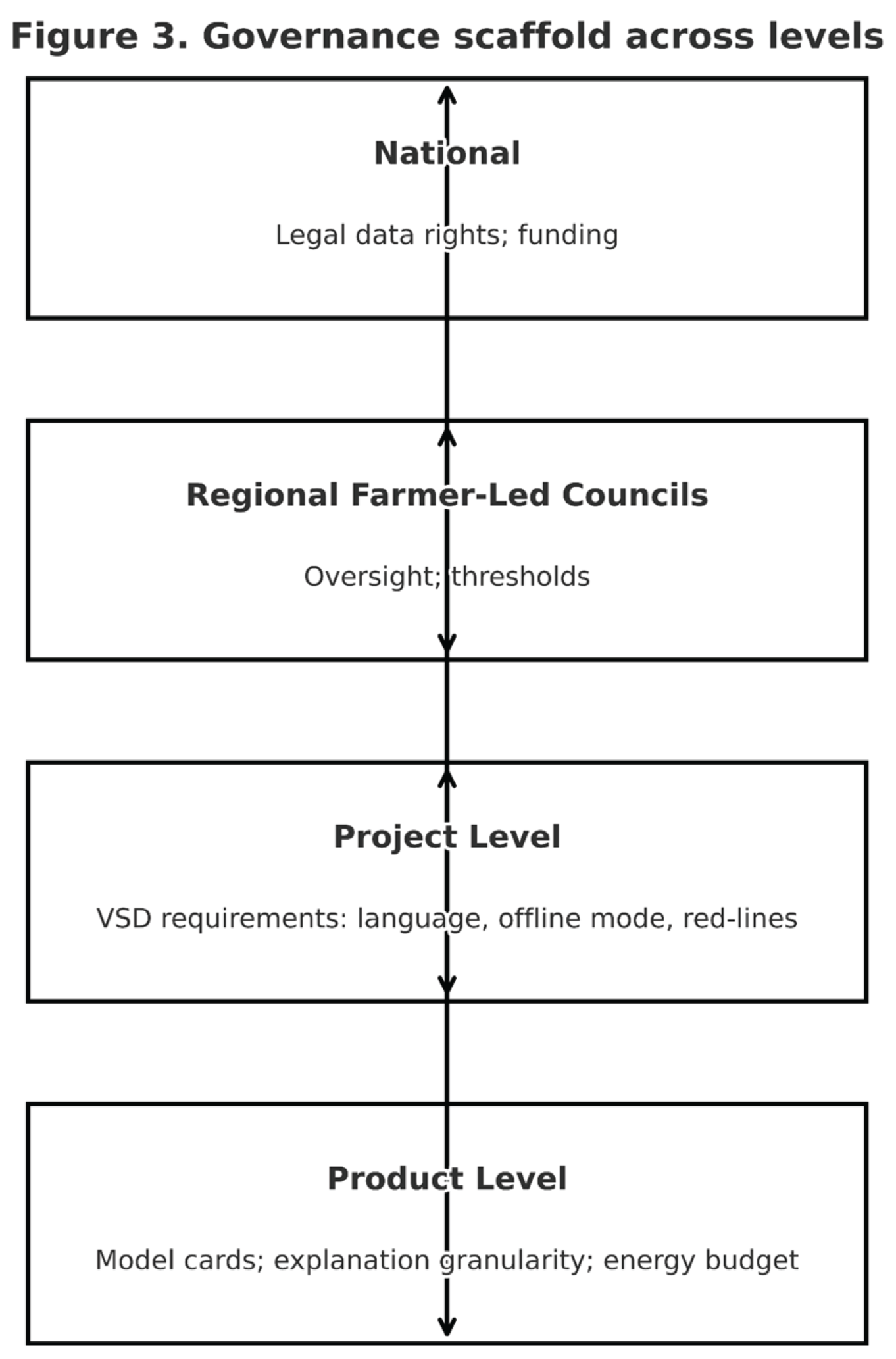

4.3.1. Multi-Level Governance Architecture

4.3.2. Empowering Stakeholders and Building Capacity

4.3.3. Ten-Year Foresight and Adaptive Governance

| Dimension | Key Questions | Example Indicators | Project X Rating (High/Medium/Low) |
|---|---|---|---|
| Economic | Does it increase productivity and incomes? Does it distribute benefits fairly? | Yield per hectare; % income gain for small vs large farmers | High (yield +20%); Low (smallholders +5% vs large +15%) |
| Social | Who participates or is excluded? Effects on jobs and community? | Number of farmers adopting (disaggregated by gender, size); change in farm labor demand; community cohesion measures | Medium (30% adoption mostly large farms; labor demand -10%) |
| Environmental | Resource use efficiency and ecological impact? | Water saved; reduction in chemical use; soil health index; biodiversity index | High (50% less water, 20% less pesticide) |
| Cultural/Spiritual | Alignment with local values and practices? Impact on traditional knowledge or rituals? | Farmer satisfaction/trust surveys; presence of local language & content; continuity of cultural practices | Low (app in English only; elders’ knowledge not integrated) |
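
For monitoring purposes, the matrix above could be kept as structured data so that weak dimensions are surfaced automatically. The following is a minimal sketch only, not the authors' tooling: the names `DimensionAssessment`, `PROJECT_X`, and `flag_for_review` are hypothetical, and the rule of judging each dimension by its weakest rating is an assumption made for illustration. The ratings and evidence strings are copied from the Project X column.

```python
from dataclasses import dataclass

# Ordinal scale taken from the table's rating column.
RATING_ORDER = {"Low": 0, "Medium": 1, "High": 2}


@dataclass
class DimensionAssessment:
    """One row of the impact assessment matrix (hypothetical structure)."""
    dimension: str
    ratings: list[str]  # a dimension may carry one rating per key question
    evidence: str

    def weakest(self) -> str:
        # Assumption: a dimension is judged by its lowest rating.
        return min(self.ratings, key=RATING_ORDER.__getitem__)


# Project X values as reported in the table above.
PROJECT_X = [
    DimensionAssessment("Economic", ["High", "Low"],
                        "yield +20%; smallholders +5% vs large +15%"),
    DimensionAssessment("Social", ["Medium"],
                        "30% adoption mostly large farms; labor demand -10%"),
    DimensionAssessment("Environmental", ["High"],
                        "50% less water, 20% less pesticide"),
    DimensionAssessment("Cultural/Spiritual", ["Low"],
                        "app in English only; elders' knowledge not integrated"),
]


def flag_for_review(matrix, threshold="Low"):
    """Return dimensions whose weakest rating is at or below the threshold."""
    limit = RATING_ORDER[threshold]
    return [row.dimension for row in matrix
            if RATING_ORDER[row.weakest()] <= limit]


if __name__ == "__main__":
    for name in flag_for_review(PROJECT_X):
        print(name)
```

Under this assumed rule, the Economic dimension is flagged despite its High productivity rating, because its fairness rating is Low. A reviewer who prefers averaging or weighting the ratings would need a different aggregation function.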

5. Conclusion & Recommendations

5.1. Summary of Key Findings

5.2. Stakeholder-Specific Recommendations

5.2.1. Policymakers & Governments

5.2.2. Agricultural Technologists & Companies

5.2.3. Farmer Organizations & Civil Society

5.3. Ten-Year Foresight Framework

5.4. Impact Assessment Matrix & Monitoring

5.5. Limitations

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).