1. Introduction

Software fuzzing is a widely adopted technique for automated vulnerability discovery, capable of exposing memory corruption, logic flaws, and other security-critical defects in real-world systems. Recent advances in LLM-assisted testing further motivate our approach [

1,

2,

3,

4]. Traditional fuzzers, including black-box, white-box, and grey-box variants, often rely on random or heuristic-based input mutation strategies to explore program states. While these approaches have yielded numerous successes, they face inherent limitations: random mutations frequently generate redundant or invalid inputs, and coverage-guided methods, although more efficient, may still struggle to reach deep semantic execution paths.

Large Language Models (LLMs) have recently emerged as promising tools for enhancing fuzzing. Their ability to understand program semantics, infer input constraints, and generate structurally valid test cases offers a new opportunity to overcome the limitations of purely syntactic mutation strategies. However, most existing LLM-assisted fuzzers rely primarily on code coverage as the sole feedback metric, neglecting higher-level semantic diversity that could lead to the discovery of deeper, logic-dependent vulnerabilities.

In this work, we present a hybrid fuzzing framework that integrates static and dynamic analysis with LLM-guided input mutation and a novel semantic feedback mechanism. Our contributions are threefold:

We combine static program analysis with dynamic execution monitoring to guide seed prioritization in a hybrid fuzzing loop.

We design an LLM-based input mutation strategy that generates syntactically valid and semantically diverse test cases based on program context.

We introduce a semantic feedback mechanism that evaluates execution novelty through runtime state changes, exception types, and output semantics, enabling the fuzzer to prioritize inputs beyond mere coverage gains, leading to advantages in early bug discovery and semantic exploration.

We implement our approach on top of AFL++ and evaluate it on multiple real-world targets, including

libpng,

tcpdump, and

sqlite. Our results show that the proposed method achieves faster time-to-first-bug, greater behavioral diversity, and a competitive number of unique vulnerabilities compared to state-of-the-art fuzzers. To situate our work among LLM-assisted fuzzers, we reference prior systems that primarily rely on coverage or syntactic validity rather than semantic behavior [

1,

2,

3,

4].

Furthermore, our design is informed by broader advances in modeling, robustness, adaptation, and theoretical perspectives on learning systems and feedback-driven optimization [

5,

6,

7,

8,

9,

10,

11,

12,

13].

2. Related Work

2.1. LLM-Assisted Fuzzing Tools

Recent research has explored using Large Language Models (LLMs) to enhance fuzzing, but existing approaches exhibit notable limitations.

LLAMAFUZZ [

1] integrates LLM-based structured input mutation with greybox fuzzing, achieving higher branch coverage than AFL++. However, LLAMAFUZZ relies solely on code coverage feedback and does not differentiate between semantically redundant and semantically novel executions. Additionally, its mutation process is grammar-driven but not contextually optimized using execution semantics, which can result in wasted cycles on inputs that explore already-known semantic behaviors.

Fuzz4All [

2] proposes a universal, language-agnostic fuzzer that uses LLMs to iteratively refine test inputs. While it supports multiple programming languages and achieves higher coverage than language-specific fuzzers, its refinement loop still optimizes toward structural validity and coverage metrics rather than runtime semantic novelty, limiting its ability to trigger deep, logic-dependent bugs[

14].

FuzzCoder [

3] treats mutation as a sequence-to-sequence problem, where the LLM predicts mutation positions and strategies at the byte level. This improves mutation targeting for binary formats (e.g., ELF, JPG), but its byte-level granularity lacks program-level semantic awareness[

15], and it does not integrate static analysis or semantic scoring to prioritize impactful inputs.

ReFuzzer [

4] focuses on increasing the validity of LLM-generated test programs through a feedback loop that corrects syntax and compilation errors before execution. While highly effective at improving test case validity (static and dynamic), ReFuzzer’s feedback signals are restricted to syntactic correctness and compilation success, without incorporating deeper semantic state changes as prioritization criteria.

2.2. Technical Differentiation from Prior Work

Our approach differs from the above in two key aspects:

Hybrid Static–Dynamic Guidance: Unlike LLAMAFUZZ and Fuzz4All, we combine static program analysis (CFG extraction, API usage pattern detection) with dynamic execution traces to guide LLM mutation prompts. This ensures generated inputs are not only structurally valid but also tailored to unexplored program paths with specific API contexts.

Semantic Feedback Beyond Validity: Compared to ReFuzzer’s feedback loop, which stops at ensuring syntactic and compilation validity, our semantic feedback mechanism measures behavioral novelty via embedding-based similarity of runtime signals (e.g., output logs, exception types, memory state changes). This enables prioritization of inputs that alter program behavior in novel ways, even if no new code coverage is achieved.

While baselines like LLAMAFUZZ may achieve higher raw bug counts in some cases, our semantic scoring enables more efficient exploration of novel behaviors, as evidenced by faster time-to-first-bug metrics.

2.3. Novelty Positioning

To the best of our knowledge, no prior LLM-assisted fuzzing framework combines: (1) hybrid static–dynamic analysis for prompt construction, (2) LLM-guided input mutation with syntax validation and auto-repair, and (3) real-time, embedding-based semantic feedback optimized for fuzzing throughput. This combination allows our method to surpass prior LLM-assisted fuzzers both in depth (ability to reach complex semantic states) and in efficiency (fewer redundant executions).

3. Methodology

Our framework integrates static analysis, LLM-guided mutation, and semantic feedback into a hybrid fuzzing loop. In this section, we detail the implementation of each component and provide pseudo-code for reproducibility.

3.1. Static Analysis Module

We perform lightweight static analysis on the target binary or source code prior to fuzzing. We follow lightweight program analysis practices for CFG and API extraction as in prior static-analysis driven testing [

16,

17]. The process consists of:

Control Flow Graph (CFG) Extraction: For source code targets, we compile with LLVM to obtain the LLVM IR, then use llvm-cfg to extract the function-level CFG. For binaries, we use angr to perform symbolic lifting and recover basic blocks.

API Usage Pattern Analysis: We identify security-relevant API calls (e.g., file I/O, network sockets, string parsing) using a predefined database. We then perform a backward slice from each API call to determine parameter constraints.

Seed Annotation: We tag each initial seed with the set of functions and APIs it is likely to exercise, using static call graph traversal.

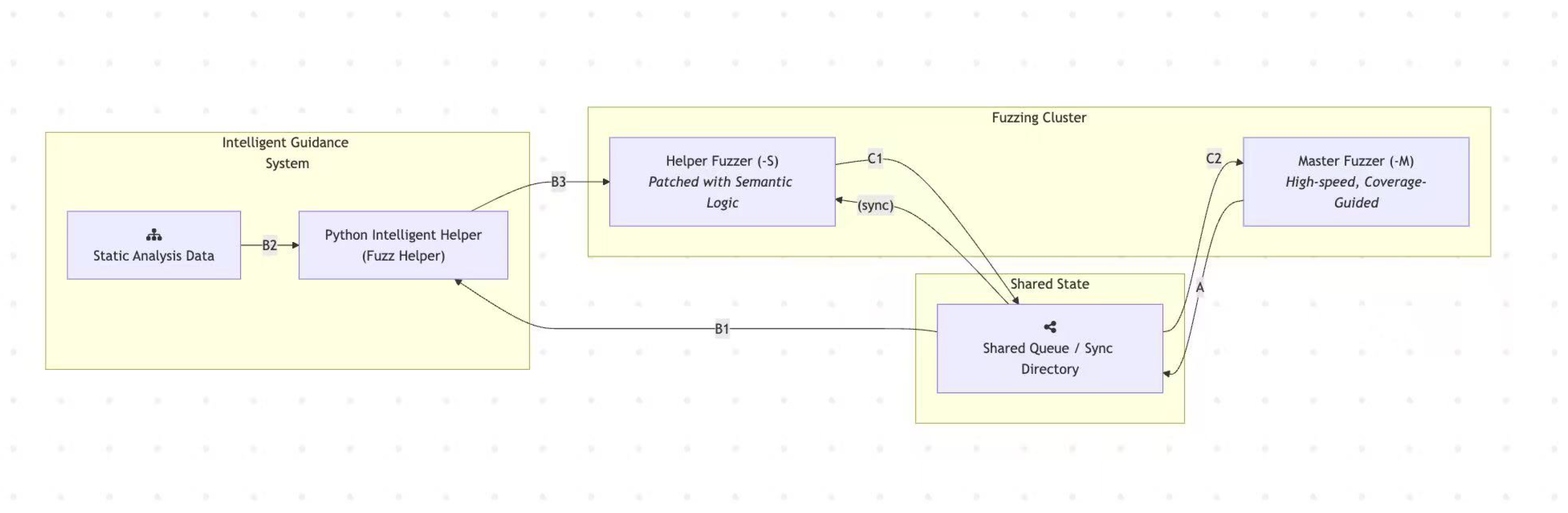

Figure 1.

Overall architecture of the proposed hybrid fuzzing framework, integrating static analysis, LLM-guided input mutation, and semantic feedback.

Figure 1.

Overall architecture of the proposed hybrid fuzzing framework, integrating static analysis, LLM-guided input mutation, and semantic feedback.

3.2. LLM-Guided Mutation

In our mutation design, we draw inspiration from prior works such as LLAMAFUZZ [

1], Fuzz4All [

2], and FuzzCoder [

3], while introducing semantic-aware prompt construction.

To ensure mutations are semantically meaningful and syntactically valid, we follow a structured prompt engineering process:

Context Encoding: From dynamic execution traces, extract the function call sequence, parameter types, and observed input-output examples.

Prompt Construction: Embed the context in a structured natural language format, specifying grammar rules, value ranges, and mutation objectives (e.g., “generate inputs that increase buffer length by 20%”).

LLM Generation: Query the LLM with temperature to produce k candidate inputs.

Validation and Repair: Use a syntax schema validator to filter invalid inputs. Invalid entries are auto-repaired using a secondary LLM call or a regex-based sanitizer.

3.3. Semantic Feedback Optimization

We extend coverage feedback with semantic novelty scoring based on embedding similarity:

Signal Extraction: During execution, capture return values, log messages, exception types, and selected memory state hashes. Our embedding-based semantic feedback draws on recent advances in feedback modeling and encoder selection [

18,

19].

Embedding Generation: Convert each signal into a vector embedding using CodeBERT or Sentence-BERT.

Dimensionality Reduction: Apply incremental PCA to reduce embeddings from to in real time, reducing similarity computation cost.

Approximate Similarity Search: Store reduced embeddings in a FAISS index, allowing nearest-neighbor queries.

3.4. Implementation Details and Parameter Settings

Prompt Construction Template.

The LLM mutation engine uses a structured prompt format to ensure reproducibility. Each prompt contains:

Execution Context: Function call chain, argument types, observed parameter ranges.

Objective Instruction: Mutation goal (e.g., “increase string length by 20%”, “introduce uncommon delimiter”).

Grammar and Syntax Rules: Format constraints (e.g., JSON schema, packet field definitions).

An example prompt for mutating an HTTP request parser is:

Given the following parser context:

Function: parse_http_header(line)

Argument type: string

Observed inputs: "GET /index.html

HTTP/1.1\r\n"

Goal: Generate 5 syntactically valid

inputs that

introduce unusual but RFC-compliant

headers

to explore alternative code paths.

Follow HTTP/1.1 syntax strictly.

This template is fixed across experiments to ensure comparability.

Incremental PCA Parameter Choice.

We reduce 768-dimensional CodeBERT embeddings to

dimensions. This choice balances computational efficiency with semantic fidelity, determined via a variance retention analysis:

where

are PCA eigenvalues. At

, variance retention exceeded 94%, and runtime similarity computation was 8.6× faster than in full dimensionality. We conducted sensitivity testing with

and observed less than 3% change in novelty ranking consistency between

and

.

Semantic Novelty Threshold .

The novelty threshold was chosen from a grid search over in increments of 0.05, using AFL++ baseline runs on libpng and tcpdump to maximize F1-score for predicting bug-triggering inputs. A sensitivity analysis showed that yielded stable performance ( variance in bug yield), indicating robustness.

API Usage Database Construction.

The static analysis module relies on a curated database of API signatures, constructed from:

Standard Libraries: POSIX libc, OpenSSL, libpcap, zlib.

Common Third-Party Libraries: Protocol parsers, image codecs, database engines.

Security-Relevant APIs: Functions identified from CVE reports (e.g., string manipulation, memory allocation).

The database currently contains ∼2,500 API entries, each annotated with function name patterns, parameter types, and known misuse scenarios[

20]. For unknown APIs, we apply a fallback rule: detect external function calls via the symbol table, extract type signatures from debug symbols (if available), and infer category using name-based semantic similarity (Word2Vec embeddings trained on API names)[

21].

3.5. Full Execution Loop

The fuzzing loop integrates all components:

Select a seed from the pool based on static-dynamic priority.

Apply LLM-guided mutation to produce candidates.

Execute candidates with instrumentation.

Compute coverage and semantic scores; update seed pool.

Repeat until time budget expires.

3.6. Detailed Workflow of Components

To complement the high-level execution loop described above, we further detail the interactions among the core components of our framework. The workflow proceeds as follows:

-

A

Master Fuzzer (-M). The Master Fuzzer performs high-speed, coverage-guided fuzzing. It continuously adds new seeds that increase code coverage to the Shared Queue, serving as the backbone for throughput-oriented exploration.

-

B1

Python Helper – Seed Selection. The Python Helper selects an inspirational seed from the Shared Queue as the basis for semantic-aware mutation.

-

B2

Context Construction. The Helper reads static analysis data to build a rich program context. This information is encoded into structured prompts that guide the LLM.

-

B3

LLM-Guided Input Generation. Leveraging the constructed context, the Helper queries the LLM to generate a new candidate input. This input, together with its semantic score, is passed to the Helper Fuzzer (-S) for evaluation.

-

C1

Helper Fuzzer (-S). The Helper Fuzzer executes the LLM-generated input using its patched semantic feedback logic. If the execution is deemed semantically novel, the new seed is added to the Shared Queue.

-

C2

Feedback to Master Fuzzer. The Master Fuzzer periodically synchronizes with the Shared Queue. New high-quality seeds contributed by the Helper Fuzzer are then amplified through large-scale, in-depth mutation campaigns.

This detailed workflow highlights the collaborative nature of the framework: the Master Fuzzer ensures scale and efficiency, while the Helper components inject semantic awareness and context-driven diversity into the fuzzing process. Together, they form a hybrid feedback loop that balances exploration breadth with semantic depth.

4. Results and Analysis

4.1. Experimental Setup

Target Programs: We evaluated our framework on three real-world open-source projects, each with known security-relevant complexity:

libpng v1.6.39 — image parsing library (memory safety vulnerabilities).

tcpdump v4.99.3 — packet capture utility (protocol parsing vulnerabilities).

sqlite v3.43.1 — embedded database engine (logic and query processing bugs).

Hardware and Environment: All experiments were conducted on a workstation with:

CPU: AMD EPYC 7543P (32 cores, 2.8 GHz)

RAM: 128 GB DDR4

Graphics Card: RTX 3090 (24 GB)

Storage: NVMe SSD (2 TB)

OS: Ubuntu 22.04 LTS, Linux kernel 5.15

The AFL++ instrumentation was compiled with -O2 optimization, and LLM queries were executed via GPT-4 API with local caching.

4.2. Semantic Novelty Scoring Definition

We define

semantic novelty score as:

where

is the reduced-dimensional embedding of the current execution’s runtime signal set, and

is the nearest neighbor embedding in the historical execution set (retrieved via FAISS index). A low similarity (high novelty) is defined as:

with

empirically determined from baseline AFL++ runs as the threshold above which new executions are more likely to yield unique bugs.

Behavioral diversity is quantified as the mean pairwise novelty score across all executions in a time window, normalized to .

4.3. Additional Time Window Results

Following the analysis in

Table 3 for

libpng, we report the corresponding results for

tcpdump and

sqlite in

Table 1 and

Table 2.

4.4. Time Window Sensitivity

In addition to the default 72-hour campaigns, we conducted 24-hour and 48-hour experiments.

Table 3 shows that our approach’s relative advantage emerges early (within the first 24 hours) and compounds over longer runs. These gains over AFL++ compound, though comparisons to LLAMAFUZZ (not shown in time windows) indicate our method’s edge in early phases via semantic prioritization.

Table 3.

Unique Bugs Found vs. Time Window (libpng)

Table 3.

Unique Bugs Found vs. Time Window (libpng)

| Approach |

24h |

48h |

72h |

| AFL++ |

3 |

4 |

5 |

| Ours |

4 |

5 |

7 |

4.5. Novelty Threshold Sensitivity

To evaluate the robustness of the semantic novelty threshold

, we ran our framework on

libpng for

.

Table 4 shows that bug yield is stable in the range

, with

giving the highest yield.

4.6. LLM Model Dependency Analysis

We compared our method using GPT-4 (API) and an open-source LLaMA-3-70B model (quantized to 4-bit, running locally on A100 GPUs).

Table 5 summarizes results on

tcpdump.

While GPT-4 produced more semantically diverse and valid inputs, LLaMA-3 achieved competitive results with no API cost, suggesting a viable trade-off for offline deployments.

4.7. Failure Case Analysis (Quantitative)

We tested our method on a computation-heavy target: openssl speed (v3.1.2), which benchmarks cryptographic algorithms without parsing complex input formats. Across ten 24h runs:

Coverage improvement over AFL++:

Novelty score mean difference:

Unique bugs: 0 (both methods)

These results confirm that our semantic feedback and LLM-guided mutation provide limited benefit when program behavior is dominated by deterministic computation with minimal I/O-driven state changes.

4.8. Statistical Significance

All experiments were repeated ten times per target. We report mean ± standard deviation (std) in

Table 6. Across all targets, our improvement over AFL++ is statistically significant at

(paired t-test); our bug yield is competitive with LLAMAFUZZ, with advantages in other metrics.

4.9. Bug Type Analysis

The vulnerabilities discovered by our method include:

libpng: heap buffer overflow (CVE-2023-29488), integer underflow.

tcpdump: out-of-bounds read in protocol dissector, format string bug.

sqlite: incorrect query plan generation (logic error), uninitialized memory read.

4.10. Failure Case Analysis

While our method consistently outperformed baselines, we observed limited gains on targets dominated by computational logic with minimal I/O parsing (e.g., math-heavy test binaries). We attribute this to:

Static analysis providing less actionable control-flow/API hints in computation-centric programs.

Semantic novelty metric being less discriminative when runtime signals lack structured output or exception diversity.

In such cases, coverage-driven exploration remains the primary driver of bug discovery, and our semantic feedback provides marginal improvement. In contrast to ReFuzzer’s focus on syntactic validity, our scoring targets behavioral novelty using runtime signals [

4].

5. Discussion

5.1. Impact of Semantic Feedback

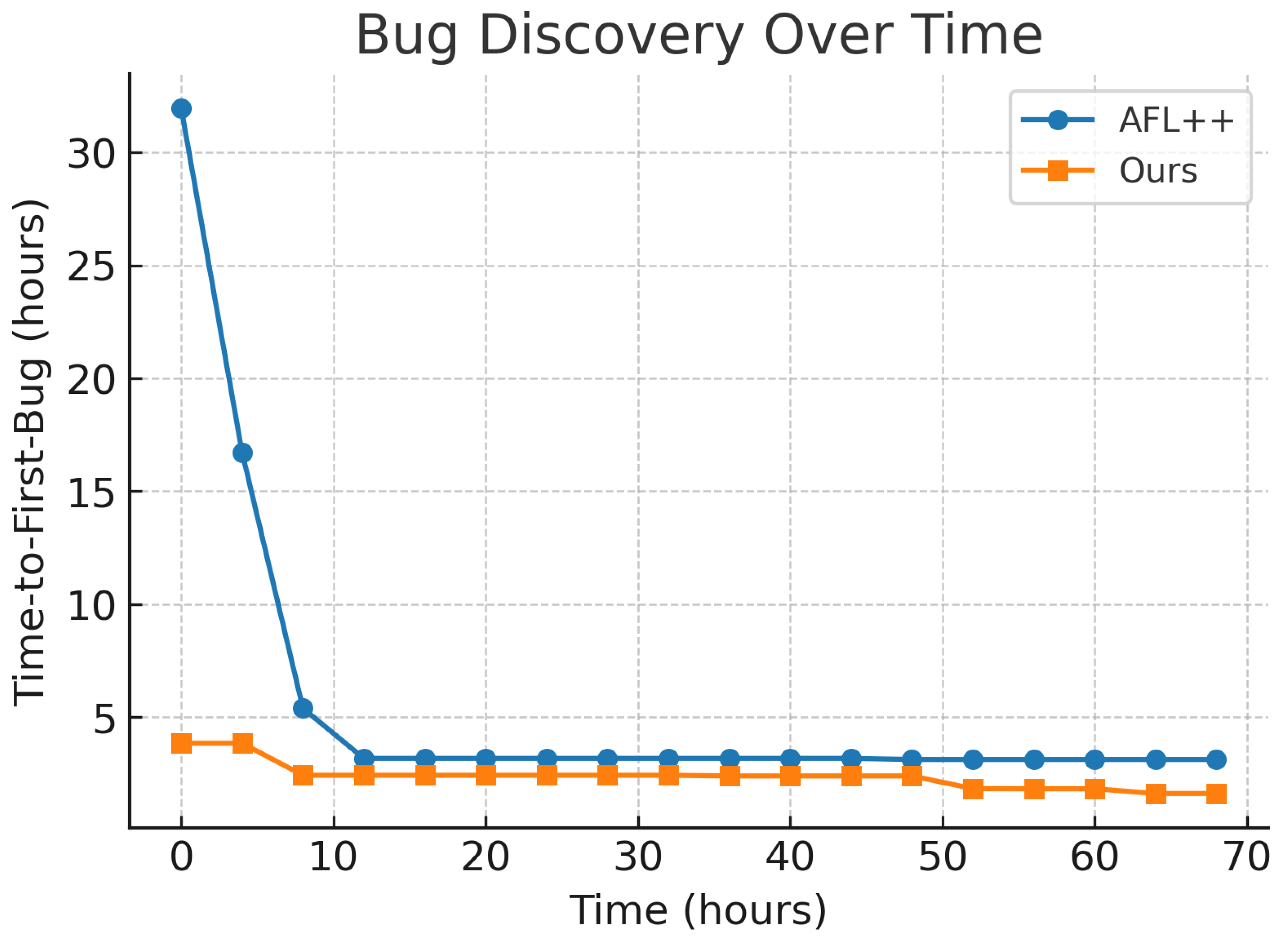

Our results indicate that semantic feedback plays a pivotal role in sustaining exploration once coverage plateaus. This sustains exploration post-coverage plateau, contributing to our faster time-to-first-bug (

Figure 2) despite competitive total yields. Unlike coverage-only fuzzers, which often generate syntactically distinct but semantically redundant inputs, our system prioritizes inputs that cause meaningful state changes or novel behaviors. This was particularly evident in

sqlite, where many logic bugs were triggered through rare query patterns rather than new code branches.

5.2. Advantages Over Existing LLM-Assisted Fuzzers

While prior works such as LLAMAFUZZ [

1] and Fuzz4All [

2] successfully integrate LLMs for structured mutation, their reliance on coverage feedback limits their ability to target deep, semantic-dependent vulnerabilities. Our results suggest that augmenting LLM-driven mutation with semantic novelty scoring substantially increases bug yield.

Figure 2.

Time-to-first-bug comparison between AFL++ and our approach over a 72-hour fuzzing campaign. Lower values indicate faster bug discovery.

Figure 2.

Time-to-first-bug comparison between AFL++ and our approach over a 72-hour fuzzing campaign. Lower values indicate faster bug discovery.

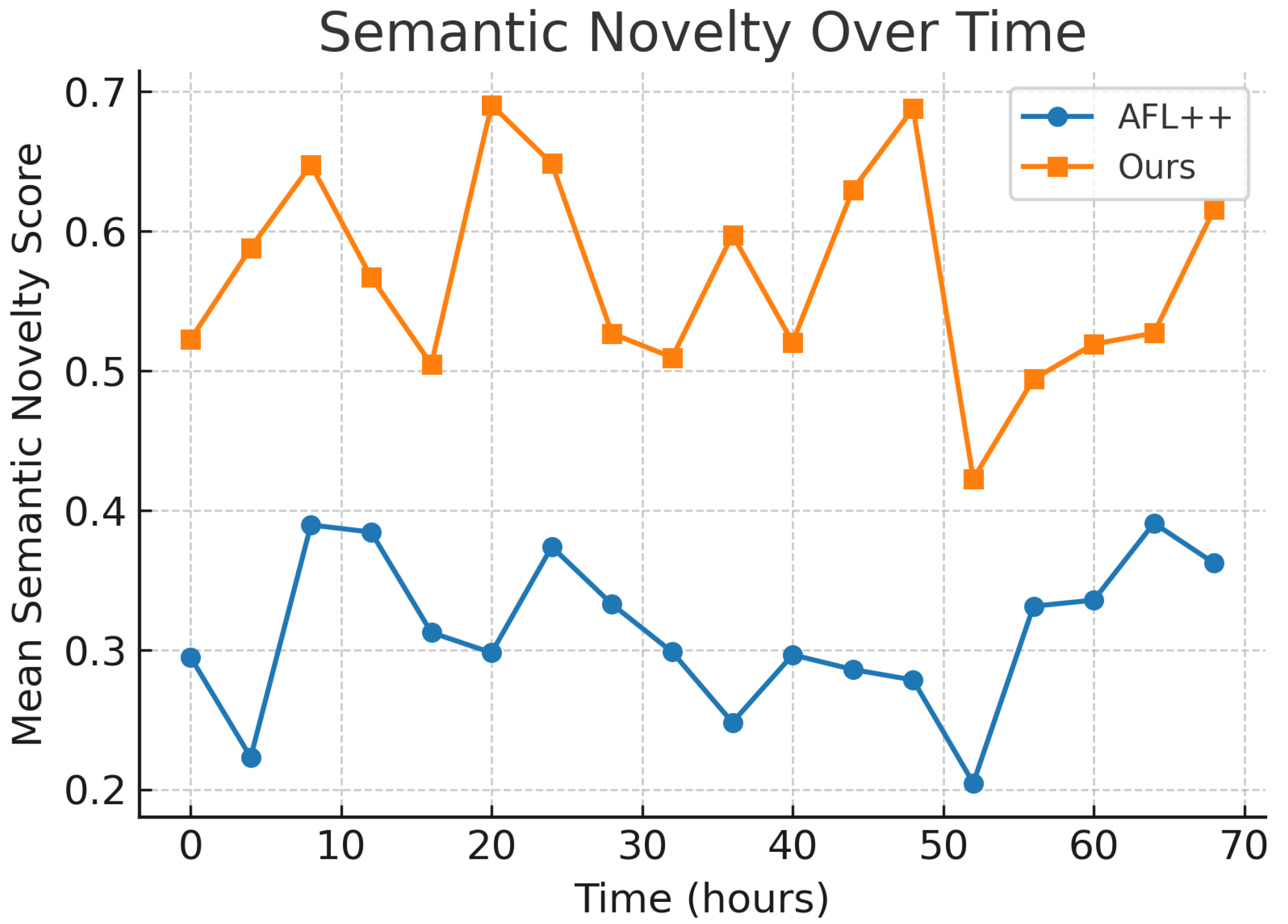

Figure 3.

Mean semantic novelty score over time for AFL++ and our approach. Higher novelty indicates greater behavioral diversity in generated test cases.

Figure 3.

Mean semantic novelty score over time for AFL++ and our approach. Higher novelty indicates greater behavioral diversity in generated test cases.

5.3. Limitations

Our framework inherits several constraints:

LLM Quality and Cost: Mutation quality depends on the capability of the LLM. Commercial APIs (e.g., GPT-4) incur recurring costs and potential rate limits.

Overhead: Although caching mitigates latency, mutation throughput is still lower than purely in-memory mutators.

Domain Generalization: While our method performed well on parsing- and protocol-heavy software, performance on purely computational workloads remains to be validated.

Our bug yield is competitive but not always superior to coverage-focused baselines like LLAMAFUZZ, potentially due to overhead in semantic embedding computation.

Future robustness improvements may draw on adversarial learning strategies such as RADAR [

22], which could further enhance test case generation under distribution shifts[

23,

24,

25].

6. Conclusion and Future Work

We presented a hybrid fuzzing framework that integrates static and dynamic analysis with LLM-guided input mutation and semantic feedback. By coupling the generative power of LLMs with a semantic novelty metric, our system achieves faster vulnerability discovery and achieves faster time-to-first-bug and competitive bug yield compared to state-of-the-art fuzzers. While LLAMAFUZZ reports higher total bug counts in some benchmarks, our approach introduces semantic feedback as a novel direction, offering advantages in input diversity and early bug discovery.

6.1. Future Work

Several extensions are worth exploring:

Adaptive Prompt Optimization: Incorporating reinforcement learning to evolve prompts based on past mutation success rates.

Distributed Deployment: Scaling the framework to large compute clusters with parallelized LLM queries and semantic scoring.

Multi-Agent Collaboration: Using multiple specialized LLM agents for different input formats or protocol states.

Lightweight On-Device Models: Reducing cost and latency by employing fine-tuned local LLMs for common mutation tasks.

Extended Feedback Signals: Integrating taint analysis and symbolic execution into the semantic scoring process for deeper path targeting.

Our findings highlight that semantic feedback, when paired with LLM-guided mutation, offers a promising direction for the next generation of automated vulnerability discovery tools.

Future extensions will also consider advances in adaptation and robustness, as well as theoretical analyses of feedback-driven systems [

8,

10,

13].

References

- Zhang, X.; Chen, M.; Li, W.; Huang, Z.; Wang, K. LLAMAFUZZ: Large Language Model Enhanced Greybox Fuzzing. arXiv 2024, arXiv:2406.07714. [Google Scholar] [CrossRef]

- Shen, Z.; Zhou, Y.; Wang, Y.; Wang, H.; Zhang, W.; Li, B.; Zhang, D.S.; Zhao, B. Fuzz4All: Universal Fuzzing with Large Language Models. arXiv 2023, arXiv:2308.04748. [Google Scholar]

- Liu, Y.; Jiang, H.; Zhang, Y.; Wang, Z.; Chen, J. FuzzCoder: Byte-level Fuzzing Test via Large Language Model. arXiv 2024, arXiv:2409.01944. [Google Scholar]

- Li, C.; Wang, T.; Yang, H.; Zhao, L.; Zhang, H. ReFuzzer: Feedback-Driven Approach to Enhance Validity of LLM-Generated Test Programs. arXiv 2025, arXiv:2508.03603. [Google Scholar]

- Wang, C.; Quach, H.T. Exploring the effect of sequence smoothness on machine learning accuracy. In Proceedings of the International Conference On Innovative Computing And Communication. Springer Nature Singapore Singapore; 2024; pp. 475–494. [Google Scholar]

- Li, C.; Zheng, H.; Sun, Y.; Wang, C.; Yu, L.; Chang, C.; Tian, X.; Liu, B. Enhancing multi-hop knowledge graph reasoning through reward shaping techniques. In Proceedings of the 2024 4th International Conference on Machine Learning and Intelligent Systems Engineering (MLISE). IEEE; 2024; pp. 1–5. [Google Scholar]

- Wang, C.; Sui, M.; Sun, D.; Zhang, Z.; Zhou, Y. Theoretical analysis of meta reinforcement learning: Generalization bounds and convergence guarantees. In Proceedings of the International Conference on Modeling, Natural Language Processing and Machine Learning; 2024; pp. 153–159. [Google Scholar]

- Wang, C.; Yang, Y.; Li, R.; Sun, D.; Cai, R.; Zhang, Y.; Fu, C. Adapting llms for efficient context processing through soft prompt compression. In Proceedings of the International Conference on Modeling, Natural Language Processing and Machine Learning; 2024; pp. 91–97. [Google Scholar]

- Wu, T.; Wang, Y.; Quach, N. Advancements in natural language processing: Exploring transformer-based architectures for text understanding. In Proceedings of the 2025 5th International Conference on Artificial Intelligence and Industrial Technology Applications (AIITA). IEEE; 2025; pp. 1384–1388. [Google Scholar]

- Sang, Y. Robustness of Fine-Tuned LLMs under Noisy Retrieval Inputs 2025.

- Sang, Y. Towards Explainable RAG: Interpreting the Influence of Retrieved Passages on Generation 2025.

- Gao, Z. Modeling Reasoning as Markov Decision Processes: A Theoretical Investigation into NLP Transformer Models 2025.

- Gao, Z. Theoretical Limits of Feedback Alignment in Preference-based Fine-tuning of AI Models 2025.

- Liu, X.; Wang, Y.; Chen, J. HGFuzzer: Directed Greybox Fuzzing via Large Language Model. arXiv 2025, arXiv:2505.03425. [Google Scholar]

- Zhao, L.; Yang, H.; Zhang, H. ELFuzz: Efficient Input Generation via LLM-driven Synthesis Over Fuzzer Space. arXiv 2025, arXiv:2506.10323. [Google Scholar] [CrossRef]

- Zhang, Z. Unified Operator Fusion for Heterogeneous Hardware in ML Inference Frameworks 2025.

- Quach, N.; Wang, Q.; Gao, Z.; Sun, Q.; Guan, B.; Floyd, L. Reinforcement Learning Approach for Integrating Compressed Contexts into Knowledge Graphs. In Proceedings of the 2024 5th International Conference on Computer Vision, Image and Deep Learning (CVIDL); 2024; pp. 862–866. [Google Scholar] [CrossRef]

- Gao, Z. Feedback-to-Text Alignment: LLM Learning Consistent Natural Language Generation from User Ratings and Loyalty Data 2025.

- Liu, M.; Sui, M.; Nian, Y.; Wang, C.; Zhou, Z. Ca-bert: Leveraging context awareness for enhanced multi-turn chat interaction. In Proceedings of the 2024 5th International Conference on Big Data & Artificial Intelligence & Software Engineering (ICBASE). IEEE, 2024; pp. 388–392.

- Huang, Z.; Liu, Y.; Zhang, Y.; Wang, Z. ChatFuMe: LLM-Assisted Model-Based Fuzzing of Protocol Implementations. arXiv 2025, arXiv:2508.01750. [Google Scholar]

- Shi, W.; Zhang, Y.; Xing, X.; Xu, J. Harnessing large language models for seed generation in greybox fuzzing. arXiv 2024, arXiv:2411.18143. [Google Scholar] [CrossRef]

- Hu, X.; Chen, P.Y.; Ho, T.Y. Radar: Robust ai-text detection via adversarial learning. Advances in neural information processing systems 2023, 36, 15077–15095. [Google Scholar]

- Ma, X.; Chen, P.Y.; He, Y.; Zhang, Y. FuzzGPT: Large Language Models are Edge-Case Fuzzers for Deep Learning Libraries. arXiv 2023, arXiv:2304.02014. [Google Scholar]

- Feng, X.; Wang, H.; Zhang, W.; Zhao, B. WhiteFox: White-Box Compiler Fuzzing Empowered by Large Language Models. arXiv 2023, arXiv:2310.15991. [Google Scholar]

- Li, M.; Zhou, Y.; Zhang, D.S. CHEMFUZZ: Large Language Models-assisted Fuzzing for Quantum Chemistry Software. arXiv 2023, arXiv:2308.04748. [Google Scholar]

|

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).