Submitted: 29 July 2025

Posted: 30 July 2025


Abstract

Keywords:

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Data Collection

2.3. Ground Truth

2.4. Data Processing

2.4.1. Sensor Location

2.4.2. Filter Range

2.4.3. Window Length

2.4.4. Regressor Type

2.5. Data Analysis

2.5.1. Annotator Agreement

2.5.2. Optimal Sensing and Analysis Configuration

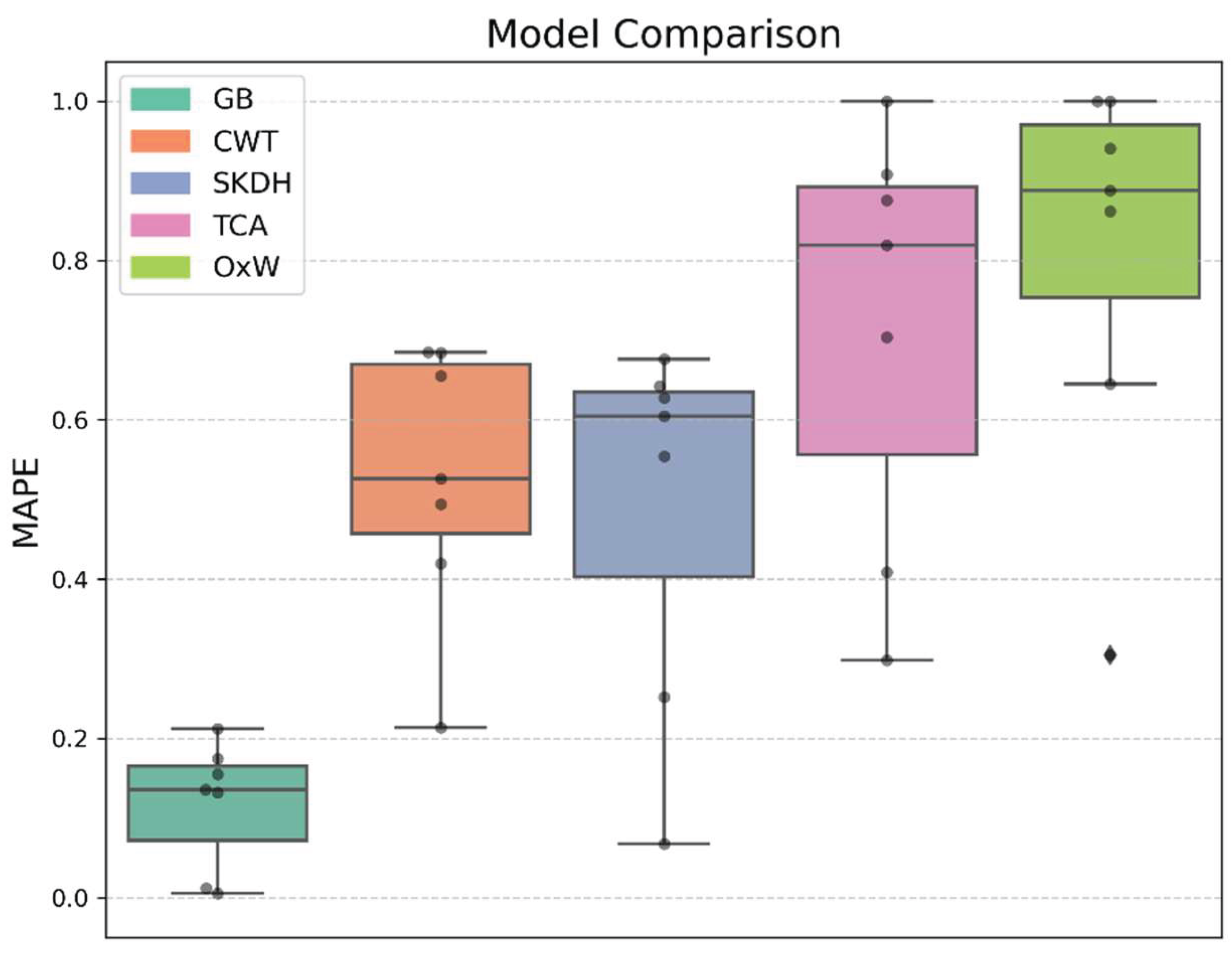

2.5.3. Algorithm Comparison

- Threshold-Crossing Algorithm (TCA): detects a step when the vector magnitude of acceleration exceeds a fixed threshold (here: 0.3 m/s²) [48].

- Continuous Wavelet Transform (CWT): applies a Morlet wavelet transform to the signal, followed by peak detection. The wavelet scale adapts to walking speed, improving robustness to temporal variation [49].

- SciKit Digital Health (SKDH): a pre-trained machine learning algorithm designed for lower back sensor data [46].

- OxWearables (OxW): a pre-trained machine learning algorithm designed for wrist-worn sensors [50].
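The threshold-crossing idea can be illustrated with a minimal Python sketch. It is not the implementation evaluated in this study or the one published in [48]. It assumes that the input is a sequence of tri-axial samples in m/s² from which gravity has already been removed (for example by band-pass filtering), and that one step is counted for each upward crossing of the threshold. The function name `count_steps_tca` and the demo data are hypothetical.

```python
import math


def count_steps_tca(samples, threshold=0.3):
    """Count steps as upward threshold crossings of the vector magnitude.

    samples   : iterable of (ax, ay, az) tuples in m/s^2, assumed to be
                gravity-free (an assumption, not stated in the source).
    threshold : fixed magnitude threshold in m/s^2 (0.3 as in the text).
    """
    steps = 0
    was_above = False  # state of the previous sample relative to the threshold
    for ax, ay, az in samples:
        magnitude = math.sqrt(ax * ax + ay * ay + az * az)
        is_above = magnitude > threshold
        # Count only the transition from below to above, so that a run of
        # consecutive samples above the threshold yields a single step.
        if is_above and not was_above:
            steps += 1
        was_above = is_above
    return steps


# Hypothetical demo signal: two separate excursions above 0.3 m/s^2.
demo = [
    (0.0, 0.0, 0.0),
    (0.5, 0.0, 0.0),
    (0.6, 0.0, 0.0),
    (0.0, 0.0, 0.0),
    (0.0, 0.4, 0.0),
    (0.0, 0.0, 0.1),
]
print(count_steps_tca(demo))  # 2
```

Counting transitions rather than samples is one simple design choice; practical implementations typically add filtering and a minimum time between steps, which this sketch omits.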

3. Results

3.1. Optimal Sensing and Analysis Configuration

3.2. Algorithm Comparison

4. Discussion

4.1. Methodological Considerations

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| PA | Physical Activity |
| ADL | Activities of Daily Living |
| RMSE | Root Mean Square Error |
| MAPE | Mean Absolute Percentage Error |
| GB | Gradient Boosting |
| kNN | k-Nearest Neighbors |
| MLP | Multilayer Perceptron |
| RF | Random Forest |
| SVR | Support Vector Regression |
| LOSO | Leave-one-subject-out |
| TCA | Threshold-Crossing Algorithm |
| CWT | Continuous Wavelet Transform |
| SKDH | SciKit Digital Health |
| OxW | OxWearables |

References

- Lear SA, Hu W, Rangarajan S, Gasevic D, Leong D, Iqbal R, et al. The effect of physical activity on mortality and cardiovascular disease in 130 000 people from 17 high-income, middle-income, and low-income countries: the PURE study. The Lancet. 2017 Dec;390(10113):2643–54.

- Morley JF, Subramanian I, Farahnik J, Grout L, Salcido C, Kurtzer J, et al. Physical Activity, Patient-Reported Outcomes, and Quality of Life in Parkinson’s Disease. J Geriatr Psychiatry Neurol. 2025 May 30;08919887251346495.

- Yu, C.; Cao, Y.; Liu, Q.; Tan, H.; Xia, G.; Chen, B.; Du, F.; Lu, K.; Saposnik, G. Sitting Time, Leisure-Time Physical Activity, and Risk of Mortality Among US Stroke Survivors: A Prospective Cohort Study From the NHANES 2007 to 2018. Stroke 2025, 56, 1738–1747.

- Andersen, T.M.; Andersen, A.M.; Riemenschneider, M.; Taul-Madsen, L.; Diechmann, M.; Gaemelke, T.; Dalgas, U.; Brønd, J.C.; Hvid, L.G. Comprehensive evaluation of accelerometer-based physical activity in persons with multiple sclerosis – The influence of disability status and its impact on walking capacity. Mult. Scler. Relat. Disord. 2024, 93, 106243.

- Cieza, A.; Causey, K.; Kamenov, K.; Hanson, S.W.; Chatterji, S.; Vos, T. Global estimates of the need for rehabilitation based on the Global Burden of Disease study 2019: a systematic analysis for the Global Burden of Disease Study 2019. Lancet 2020, 396, 2006–2017.

- Butler, E.N.; Evenson, K.R. Prevalence of Physical Activity and Sedentary Behavior Among Stroke Survivors in the United States. Top. Stroke Rehabilitation 2014, 21, 246–255.

- English, C.; Manns, P.J.; Tucak, C.; Bernhardt, J. Physical Activity and Sedentary Behaviors in People With Stroke Living in the Community: A Systematic Review. Phys. Ther. 2014, 94, 185–196.

- Fini, N.A.; Holland, A.E.; Keating, J.; Simek, J.; Bernhardt, J. How Physically Active Are People Following Stroke? Systematic Review and Quantitative Synthesis. Phys. Ther. 2017, 97, 707–717.

- Fini, N.A.; Bernhardt, J.; Churilov, L.; Clark, R.; Holland, A.E. Adherence to physical activity and cardiovascular recommendations during the 2 years after stroke rehabilitation discharge. Ann. Phys. Rehabilitation Med. 2021, 64, 101455.

- Gebruers, N.; Vanroy, C.; Truijen, S.; Engelborghs, S.; De Deyn, P.P. Monitoring of Physical Activity After Stroke: A Systematic Review of Accelerometry-Based Measures. Arch. Phys. Med. Rehabilitation 2010, 91, 288–297.

- Hobeanu, C.; Lavallée, P.C.; Charles, H.; Labreuche, J.; Albers, G.W.; Caplan, L.R.; Donnan, G.A.; Ferro, J.M.; Hennerici, M.G.; Molina, C.A.; et al. Risk of subsequent disabling or fatal stroke in patients with transient ischaemic attack or minor ischaemic stroke: an international, prospective cohort study. Lancet Neurol. 2022, 21, 889–898.

- Kang, S.-M.; Kim, S.-H.; Han, K.-D.; Paik, N.-J.; Kim, W.-S. Physical activity after ischemic stroke and its association with adverse outcomes: A nationwide population-based cohort study. Top. Stroke Rehabilitation 2020, 28, 170–180.

- Turan, T.N.; Nizam, A.; Lynn, M.J.; Egan, B.M.; Le, N.-A.; Lopes-Virella, M.F.; Hermayer, K.L.; Harrell, J.; Derdeyn, C.P.; Fiorella, D.; et al. Relationship between risk factor control and vascular events in the SAMMPRIS trial. Neurology 2017, 88, 379–385.

- Adams, S.A.; Matthews, C.E.; Ebbeling, C.B.; Moore, C.G.; Cunningham, J.E.; Fulton, J.; Hebert, J.R. The Effect of Social Desirability and Social Approval on Self-Reports of Physical Activity. Am. J. Epidemiol. 2005, 161, 389–398.

- Prince, S.A.; Adamo, K.B.; Hamel, M.E.; Hardt, J.; Gorber, S.C.; Tremblay, M. A comparison of direct versus self-report measures for assessing physical activity in adults: A systematic review. Int. J. Behav. Nutr. Phys. Act. 2008, 5, 56.

- Troiano, R.P.; McClain, J.J.; Brychta, R.J.; Chen, K.Y. Evolution of accelerometer methods for physical activity research. Br. J. Sports Med. 2014, 48, 1019–1023.

- Bernaldo de Quirós M, Douma EH, van den Akker-Scheek I, Lamoth CJC, Maurits NM. Quantification of Movement in Stroke Patients under Free Living Conditions Using Wearable Sensors: A Systematic Review. Sensors. 2022 Jan;22(3):1050.

- Boukhennoufa, I.; Zhai, X.; Utti, V.; Jackson, J.; McDonald-Maier, K.D. Wearable sensors and machine learning in post-stroke rehabilitation assessment: A systematic review. Biomed. Signal Process. Control. 2022, 71.

- Godfrey, A.; Hetherington, V.; Shum, H.; Bonato, P.; Lovell, N.; Stuart, S. From A to Z: Wearable technology explained. Maturitas 2018, 113, 40–47.

- Johansson, D.; Malmgren, K.; Alt Murphy, M. Wearable sensors for clinical applications in epilepsy, Parkinson’s disease, and stroke: a mixed-methods systematic review. J. Neurol. 2018, 265, 1740–1752.

- Letts, E.; Jakubowski, J.S.; King-Dowling, S.; Clevenger, K.; Kobsar, D.; Obeid, J. Accelerometer techniques for capturing human movement validated against direct observation: a scoping review. Physiol. Meas. 2024, 45, 07TR01.

- Peters, D.M.; O’Brien, E.S.; Kamrud, K.E.; Roberts, S.M.; Rooney, T.A.; Thibodeau, K.P.; Balakrishnan, S.; Gell, N.; Mohapatra, S. Utilization of wearable technology to assess gait and mobility post-stroke: a systematic review. J. Neuroeng. Rehabilitation 2021, 18, 1–18.

- Sun, Y.; Chen, J.; Ji, M.; Li, X. Wearable Technologies for Health Promotion and Disease Prevention in Older Adults: Systematic Scoping Review and Evidence Map. J. Med Internet Res. 2025, 27, e69077.

- Fini, N.A.; Simpson, D.; Moore, S.A.; Mahendran, N.; Eng, J.J.; Borschmann, K.; Conradsson, D.M.; Chastin, S.; Churilov, L.; English, C. How should we measure physical activity after stroke? An international consensus. Int. J. Stroke 2023, 18, 1132–1142.

- Pohl, J.; Ryser, A.; Veerbeek, J.M.; Verheyden, G.; Vogt, J.E.; Luft, A.R.; Easthope, C.A. Accuracy of gait and posture classification using movement sensors in individuals with mobility impairment after stroke. Front. Physiol. 2022, 13, 933987.

- Bassett, D.R.; Toth, L.P.; LaMunion, S.R.; Crouter, S.E. Step Counting: A Review of Measurement Considerations and Health-Related Applications. Sports Med. 2016, 47, 1303–1315.

- Brandenbarg, P.; Hoekstra, F.; Barakou, I.; Seves, B.L.; Hettinga, F.J.; Hoekstra, T.; van der Woude, L.H.V.; Dekker, R.; Krops, L.A. Measurement properties of device-based physical activity instruments in ambulatory adults with physical disabilities and/or chronic diseases: a scoping review. BMC Sports Sci. Med. Rehabilitation 2023, 15, 1–35.

- Fini NA, Holland AE, Keating J, Simek J, Bernhardt J. How is physical activity monitored in people following stroke? Disability and Rehabilitation. 2015 Sep 11;37(19):1717–31.

- Larsen, R.T.; Wagner, V.; Korfitsen, C.B.; Keller, C.; Juhl, C.B.; Langberg, H.; Christensen, J. Effectiveness of physical activity monitors in adults: systematic review and meta-analysis. BMJ 2022, 376, e068047.

- Neumann S, Ducrot M, Vavanan M, Naef AC, Easthope Awai C. Providing Personalized Gait Feedback in Daily Life. In: Pons JL, Tornero J, Akay M, editors. Converging Clinical and Engineering Research on Neurorehabilitation V. Cham: Springer Nature Switzerland; 2025. p. 632–5.

- Coca-Tapia, M.; Cuesta-Gómez, A.; Molina-Rueda, F.; Carratalá-Tejada, M. Gait Pattern in People with Multiple Sclerosis: A Systematic Review. Diagnostics 2021, 11, 584.

- Lavelle, G.; Norris, M.; Flemming, J.; Harper, J.; Bradley, J.; Johnston, H.; Fortune, J.; Stennett, A.; Kilbride, C.; Ryan, J.M. Validity and Acceptability of Wearable Devices for Monitoring Step-Count and Activity Minutes Among People With Multiple Sclerosis. Front. Rehabilitation Sci. 2022, 2.

- Xu, J.; Witchalls, J.; Preston, E.; Pan, L.; Waddington, G.; Adams, R.; Han, J. Stroke-related factors associated with gait asymmetry in ambulatory stroke survivors: A systematic review and meta-analysis. Gait Posture 2025, 121, 173–181.

- Zanardi, A.P.J.; da Silva, E.S.; Costa, R.R.; Passos-Monteiro, E.; dos Santos, I.O.; Kruel, L.F.M.; Peyré-Tartaruga, L.A. Gait parameters of Parkinson’s disease compared with healthy controls: a systematic review and meta-analysis. Sci. Rep. 2021, 11, 1–13.

- Costa, P.H.V.; de Jesus, T.P.D.; Winstein, C.; Torriani-Pasin, C.; Polese, J.C. An investigation into the validity and reliability of mHealth devices for counting steps in chronic stroke survivors. Clin. Rehabilitation 2019, 34, 394–403.

- Fulk, G.D.; Combs, S.A.; Danks, K.A.; Nirider, C.D.; Raja, B.; Reisman, D.S. Accuracy of 2 Activity Monitors in Detecting Steps in People With Stroke and Traumatic Brain Injury. Phys. Ther. 2014, 94, 222–229.

- Katzan, I.; Schuster, A.; Kinzy, T. Physical Activity Monitoring Using a Fitbit Device in Ischemic Stroke Patients: Prospective Cohort Feasibility Study. JMIR mHealth uHealth 2021, 9, e14494.

- Macko, R.F.; Haeuber, E.; Shaughnessy, M.; Coleman, K.L.; Boone, D.A.; Smith, G.V.; Silver, K.H. Microprocessor-based ambulatory activity monitoring in stroke patients. Med. Sci. Sports Exerc. 2002, 34, 394–399.

- Mahendran N, Kuys SS, Downie E, Ng P, Brauer SG. Are Accelerometers and GPS Devices Valid, Reliable and Feasible Tools for Measurement of Community Ambulation After Stroke? Brain Impairment. 2016 Sep;17(2):151–61.

- Schaffer, S.D.; Holzapfel, S.D.; Fulk, G.; Bosch, P.R. Step count accuracy and reliability of two activity tracking devices in people after stroke. Physiother. Theory Pr. 2017, 33, 788–796.

- World Medical Association. World Medical Association Declaration of Helsinki: Ethical Principles for Medical Research Involving Human Participants. JAMA. 2025 Jan 7;333(1):71–4.

- Ustad, A.; Logacjov, A.; Trollebø, S.Ø.; Thingstad, P.; Vereijken, B.; Bach, K.; Maroni, N.S. Validation of an Activity Type Recognition Model Classifying Daily Physical Behavior in Older Adults: The HAR70+ Model. Sensors 2023, 23, 2368.

- Kuster, R.P.; Baumgartner, D.; Hagströmer, M.; Grooten, W.J. Where to Place Which Sensor to Measure Sedentary Behavior? A Method Development and Comparison Among Various Sensor Placements and Signal Types. J. Meas. Phys. Behav. 2020, 3, 274–284.

- Xu, Y.; Li, G.; Li, Z.; Yu, H.; Cui, J.; Wang, J.; Chen, Y. Smartphone-Based Unconstrained Step Detection Fusing a Variable Sliding Window and an Adaptive Threshold. Remote. Sens. 2022, 14, 2926.

- Kobsar, D.; Osis, S.T.; Phinyomark, A.; Boyd, J.E.; Ferber, R. Reliability of gait analysis using wearable sensors in patients with knee osteoarthritis. J. Biomech. 2016, 49, 3977–3982.

- Adamowicz L, Christakis Y, Czech MD, Adamusiak T. SciKit Digital Health: Python Package for Streamlined Wearable Inertial Sensor Data Processing (Preprint) [Internet]. 2022 [cited 2024 Jul 1]. Available from: http://preprints.jmir. 3676.

- Kuster, R.P.; Huber, M.; Hirschi, S.; Siegl, W.; Baumgartner, D.; Hagströmer, M.; Grooten, W. Measuring Sedentary Behavior by Means of Muscular Activity and Accelerometry. Sensors 2018, 18, 4010.

- Ducharme, S.W.; Lim, J.; Busa, M.A.; Aguiar, E.J.; Moore, C.C.; Schuna, J.M.; Barreira, T.V.; Staudenmayer, J.; Chipkin, S.R.; Tudor-Locke, C. A Transparent Method for Step Detection Using an Acceleration Threshold. J. Meas. Phys. Behav. 2021, 4, 311–320.

- Brajdic, A.; Harle, R.K. Walk detection and step counting on unconstrained smartphones. In Proceedings of the 2013 ACM International Joint Conference on Pervasive and Ubiquitous Computing, Zurich, Switzerland, 8–12 September 2013; Association for Computing Machinery (ACM): New York, NY, USA, 2013; pp. 225–234.

- Small SR, Chan S, Walmsley R, von Fritsch L, Acquah A, Mertes G, et al. Development and Validation of a Machine Learning Wrist-worn Step Detection Algorithm with Deployment in the UK Biobank. medRxiv. 2023 Feb 22;2023.02.20.23285750.

- Kim, K.; Kim, Y.M.; Kim, E.K. Correlation between the Activities of Daily Living of Stroke Patients in a Community Setting and Their Quality of Life. J. Phys. Ther. Sci. 2014, 26, 417–419.

- Ramos-Lima MJM, Brasileiro I de C, de Lima TL, Braga-Neto P. Quality of life after stroke: impact of clinical and sociodemographic factors. Clinics (Sao Paulo). 2018;73:e418.

- Henderson, C.E.; Toth, L.; Kaplan, A.; Hornby, T.G. Step Monitor Accuracy during Poststroke Physical Therapy and Simulated Activities. Transl. J. Am. Coll. Sports Med. 2021, 7.

- Compagnat, M.; Batcho, C.S.; David, R.; Vuillerme, N.; Salle, J.Y.; Daviet, J.C.; Mandigout, S. Validity of the Walked Distance Estimated by Wearable Devices in Stroke Individuals. Sensors 2019, 19, 2497.

- Zhang, W.; Smuck, M.; Legault, C.; Ith, M.A.; Muaremi, A.; Aminian, K. Gait Symmetry Assessment with a Low Back 3D Accelerometer in Post-Stroke Patients. Sensors 2018, 18, 3322.

- Kuster, RP. Advancing the measurement of sedentary behaviour: classifying posture and physical (in-)activity. Stockholm: Karolinska Institutet; 2021. 80 p.

- Andrews K, Stewart J. STROKE RECOVERY: HE CAN BUT DOES HE? Rheumatology and Rehabilitation. 1979;18(1):43–8.

- Kuster, R.P.; Grooten, W.J.A.; Baumgartner, D.; Blom, V.; Hagströmer, M.; Ekblom, Ö. Detecting prolonged sitting bouts with the ActiGraph GT3X. Scand. J. Med. Sci. Sports 2019, 30, 572–582.

- Schmid, A.; Duncan, P.W.; Studenski, S.; Lai, S.M.; Richards, L.; Perera, S.; Wu, S.S. Improvements in Speed-Based Gait Classifications Are Meaningful. Stroke 2007, 38, 2096–2100.

- Neumann S, Bauer CM, Nastasi L, Läderach J, Thürlimann E, Schwarz A, et al. Accuracy, concurrent validity, and test–retest reliability of pressure-based insoles for gait measurement in chronic stroke patients. Frontiers in Digital Health. 2024 Apr 3;6:1359771.

- Werner, C.; Easthope, C.A.; Curt, A.; Demkó, L. Towards a Mobile Gait Analysis for Patients with a Spinal Cord Injury: A Robust Algorithm Validated for Slow Walking Speeds. Sensors 2021, 21, 7381.

- Clay, L.; Webb, M.; Hargest, C.; Adhia, D.B. Gait quality and velocity influences activity tracker accuracy in individuals post-stroke. Top. Stroke Rehabilitation 2019, 26, 412–417.

- Taraldsen, K.; Askim, T.; Sletvold, O.; Einarsen, E.K.; Bjåstad, K.G.; Indredavik, B.; Helbostad, J.L. Evaluation of a Body-Worn Sensor System to Measure Physical Activity in Older People With Impaired Function. Phys. Ther. 2011, 91, 277–285.

| Parameter | Level | Effect Size | 95% CI | p-value | RMSE |
|---|---|---|---|---|---|
| Reference (Ref) | | | | | 0.30 |
| Location (Ref = Waist) | Lower Back* | 1.100 | [1.002, 1.206] | 0.044 | 0.33 |
| | Ankle* | 1.101 | [1.004, 1.208] | 0.041 | 0.33 |
| | Thigh* | 1.195 | [1.089, 1.310] | <0.001 | 0.36 |
| | Chest* | 1.276 | [1.164, 1.400] | <0.001 | 0.39 |
| | Wrist (l)* | 1.848 | [1.685, 2.027] | <0.001 | 0.56 |
| | Wrist (r)* | 2.002 | [1.825, 2.196] | <0.001 | 0.61 |
| Filter (Ref = wide band) | narrow band* | 0.902 | [0.849, 0.958] | 0.001 | 0.27 |
| | medium band* | 0.911 | [0.857, 0.968] | 0.003 | 0.28 |
| Window (Ref = medium) | long* | 0.772 | [0.734, 0.811] | <0.001 | 0.23 |
| Regressor (Ref = GB) | RF | 0.989 | [0.914, 1.069] | 0.776 | 0.30 |
| | MLP | 1.063 | [0.983, 1.150] | 0.125 | 0.32 |
| | SVR* | 1.089 | [1.007, 1.178] | 0.032 | 0.33 |
| | kNN* | 1.128 | [1.043, 1.219] | 0.003 | 0.34 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).