Submitted: 10 February 2025

Posted: 11 February 2025


Abstract

Keywords:

1. Introduction

2. Materials and Methods

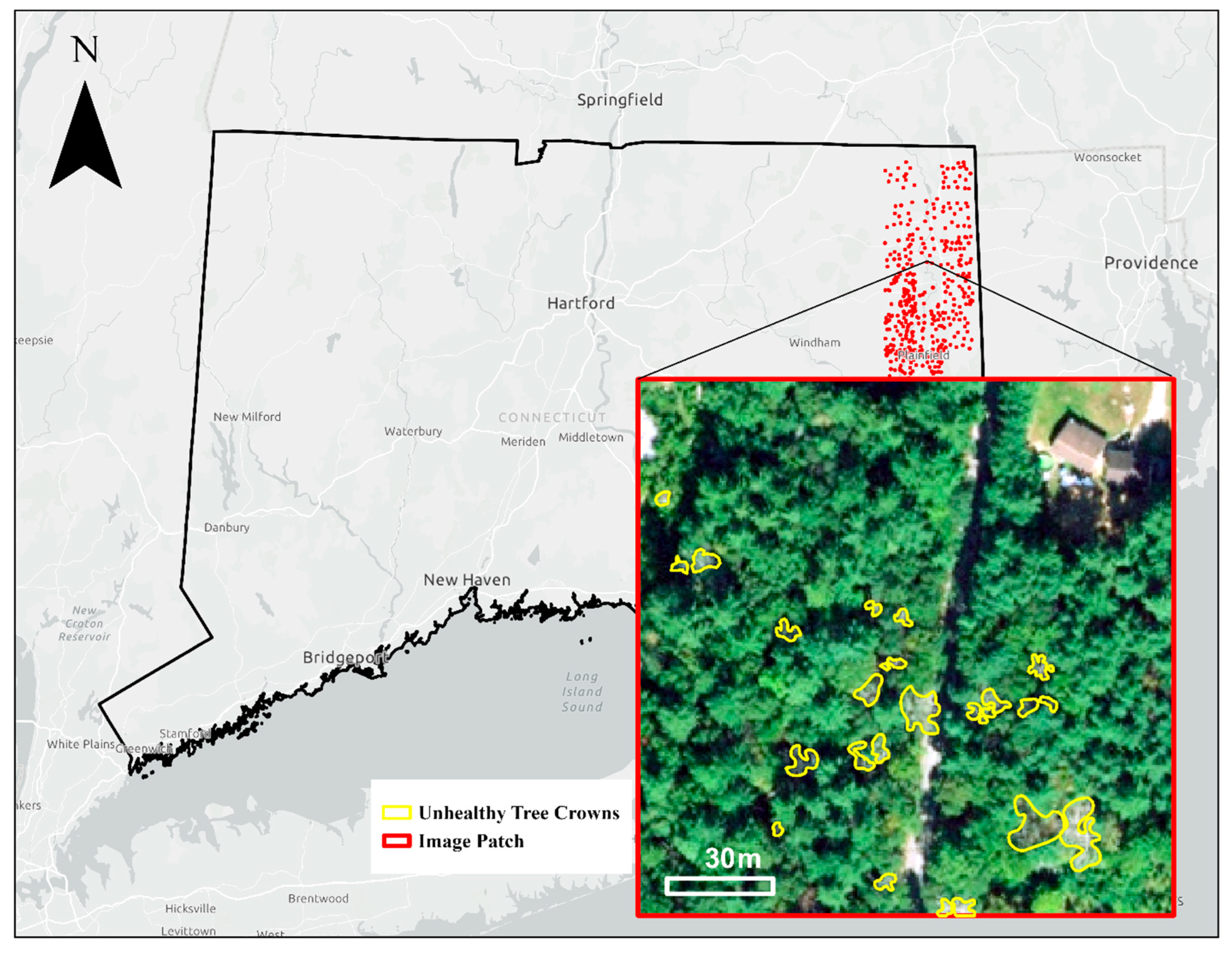

2.1. Study Area

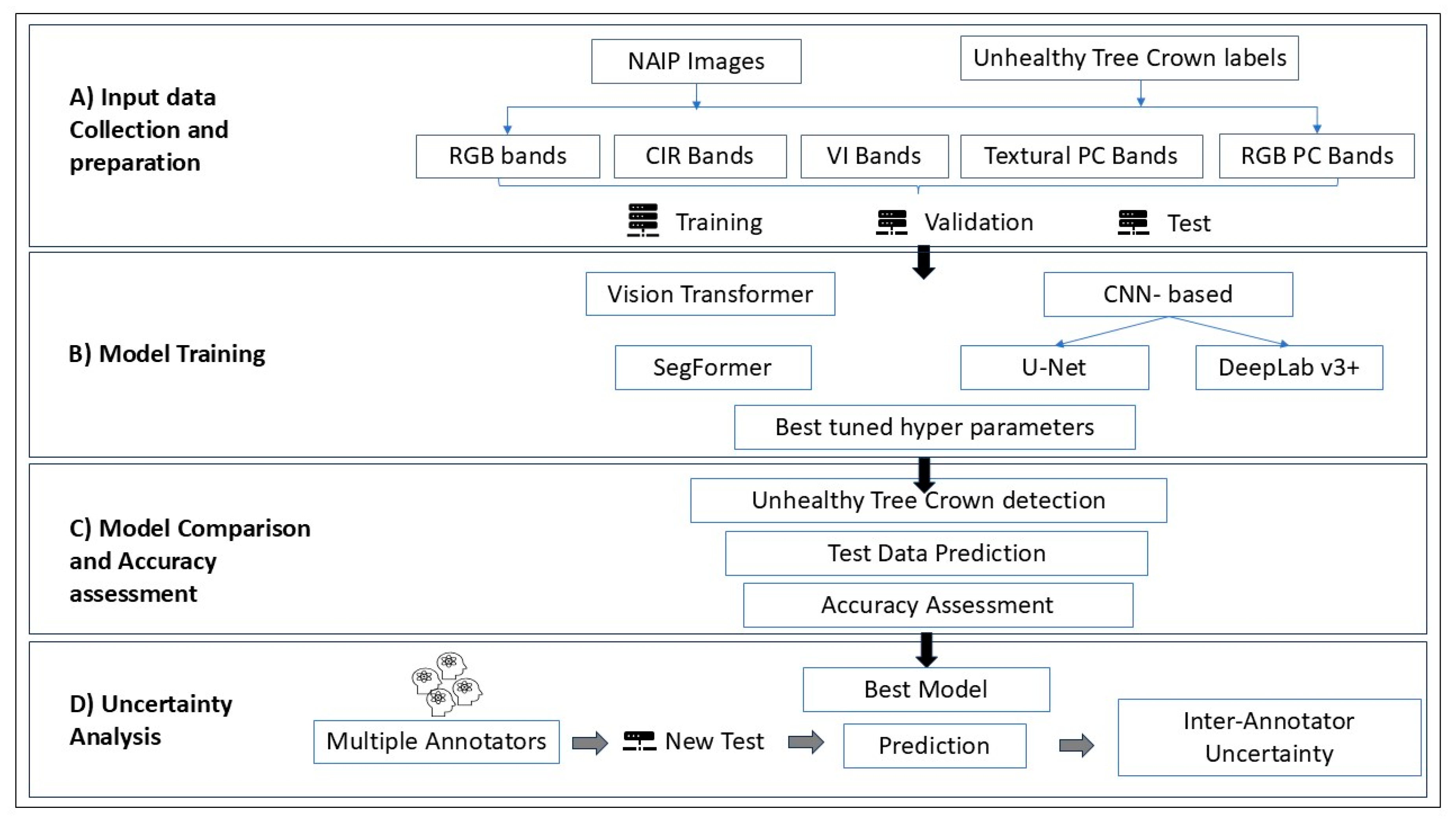

2.2. Modeling Framework

2.3. Data Retrieval and Preparation

2.3.1. Image Data

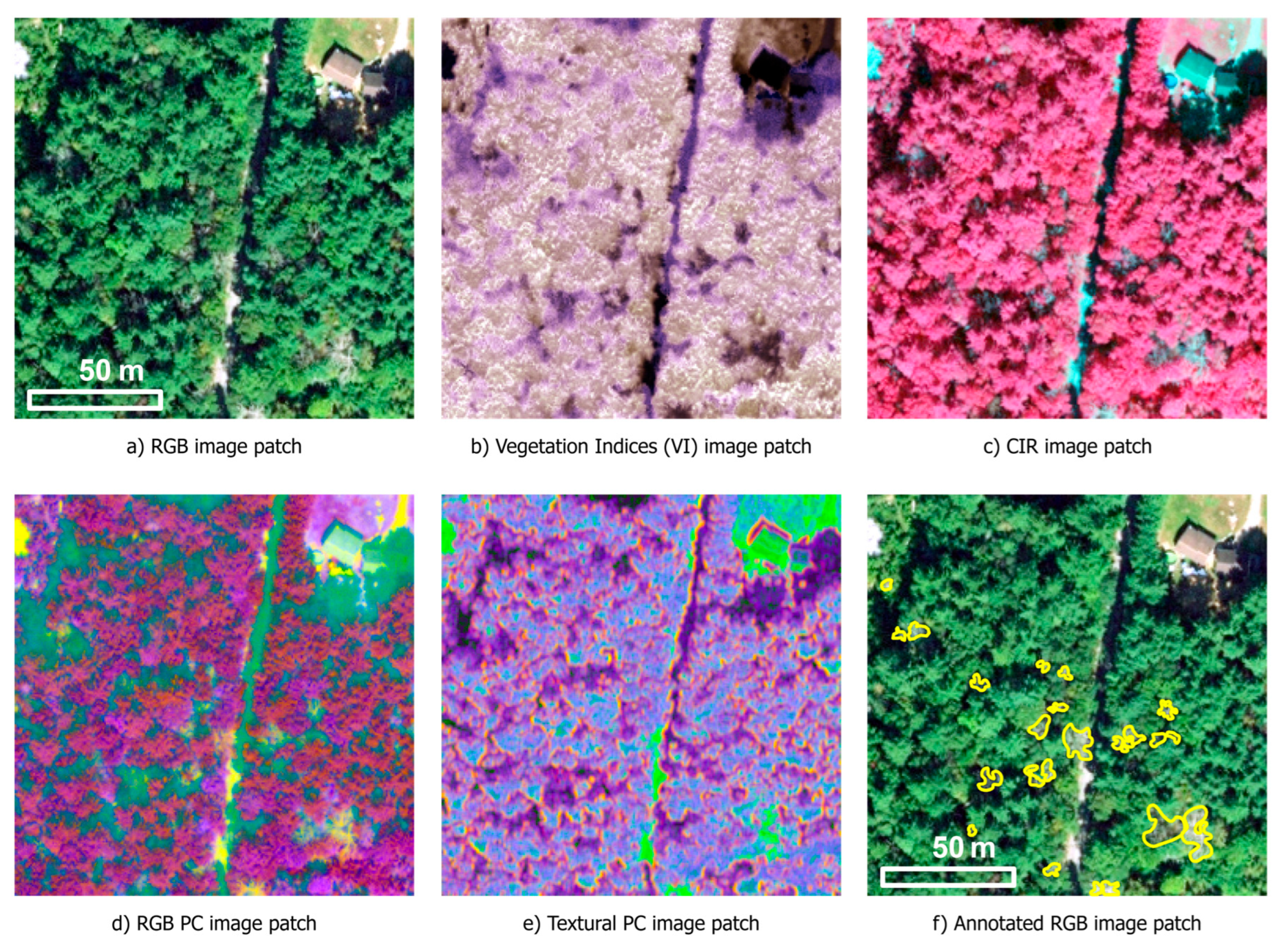

2.3.2. Spectral Band Combination

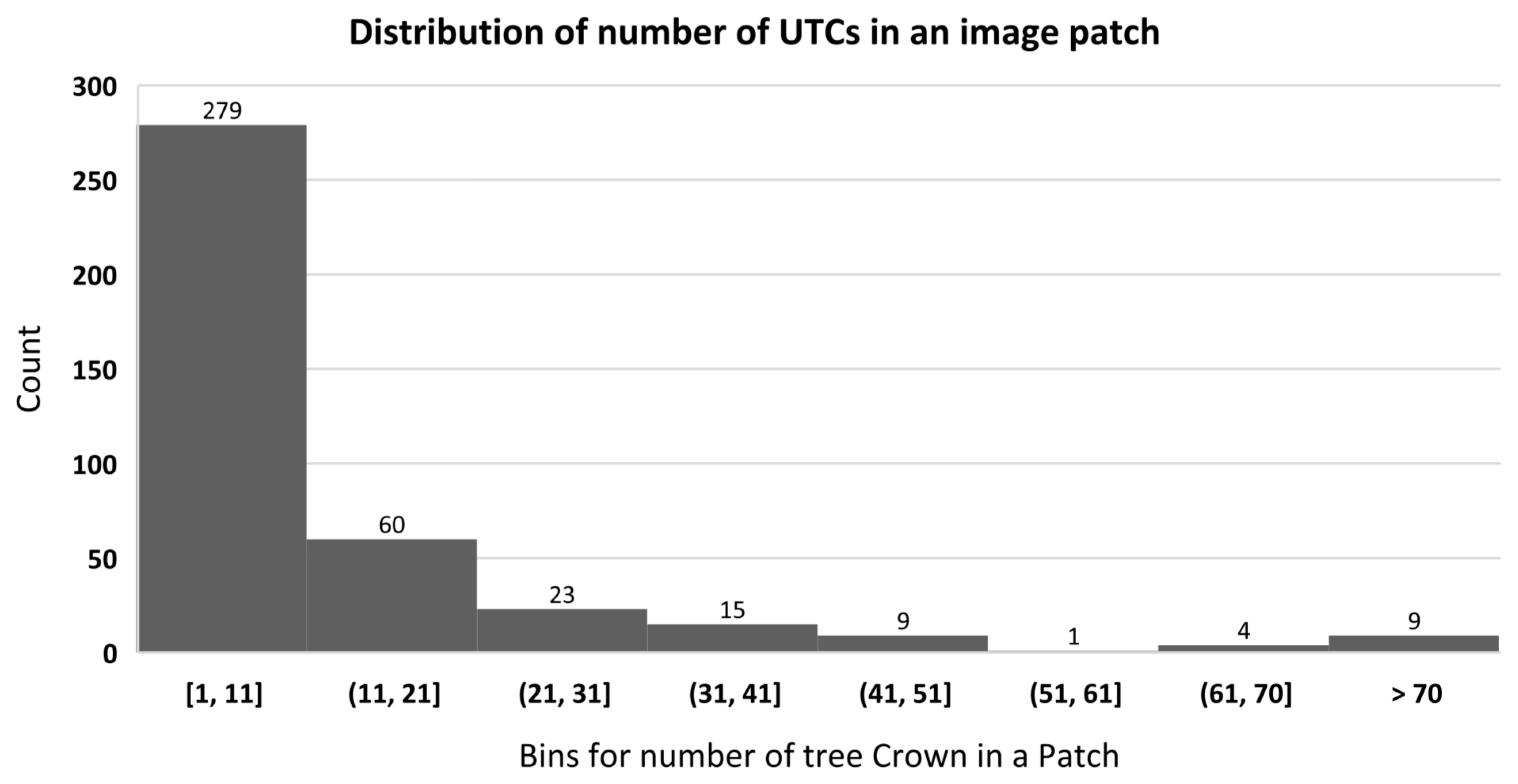

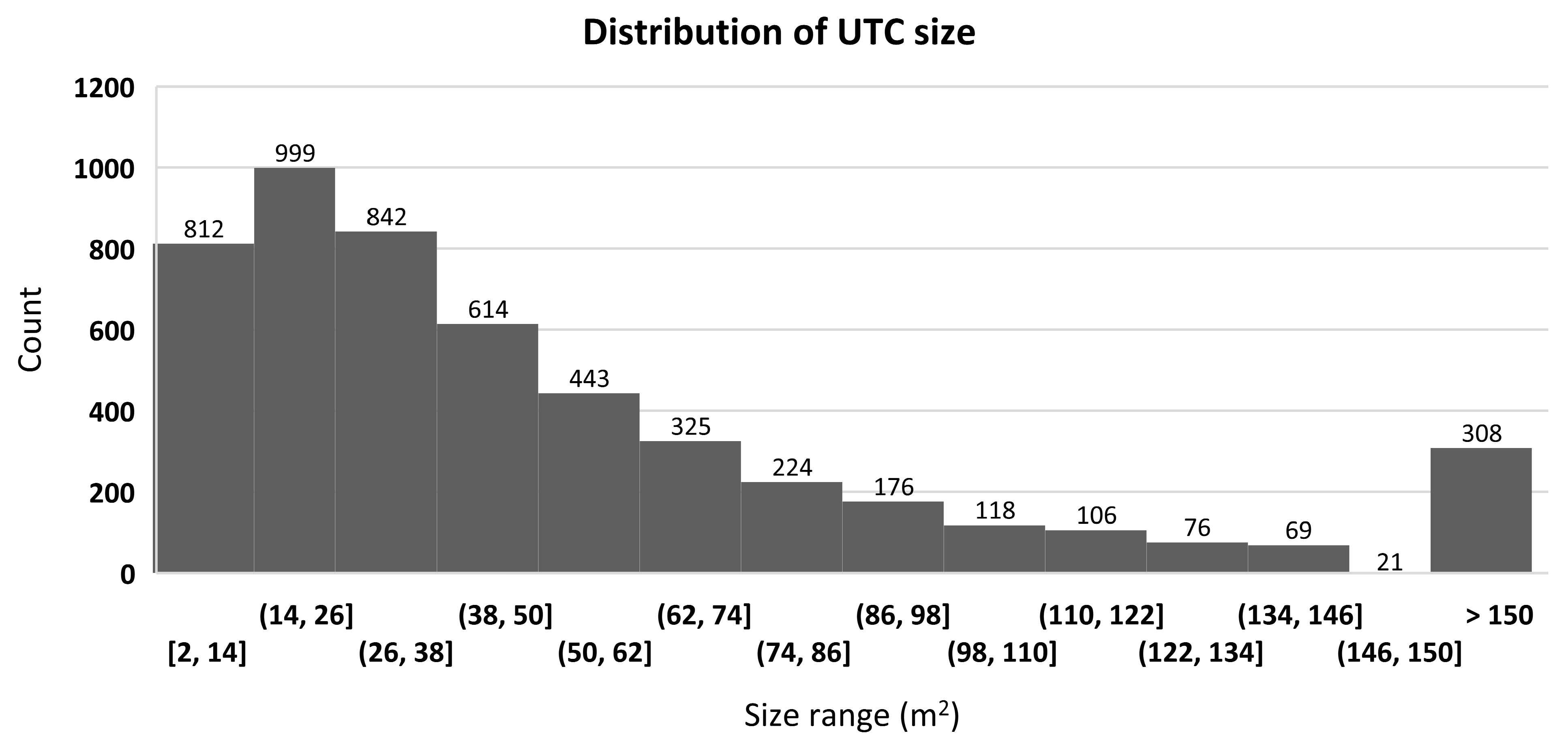

2.3.3. Manual UTC Annotation

2.4. Deep Learning Models

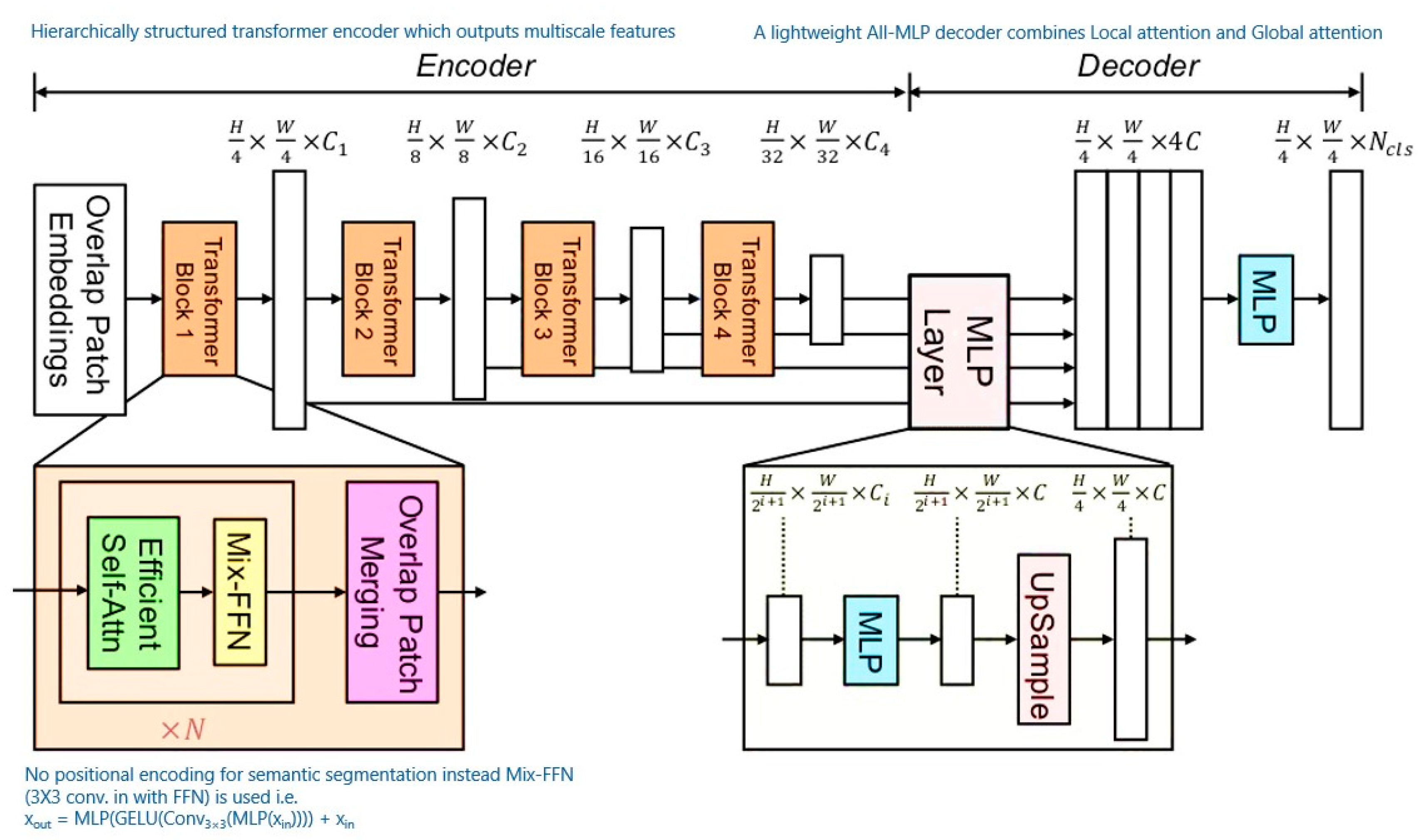

2.4.1. SegFormer Model

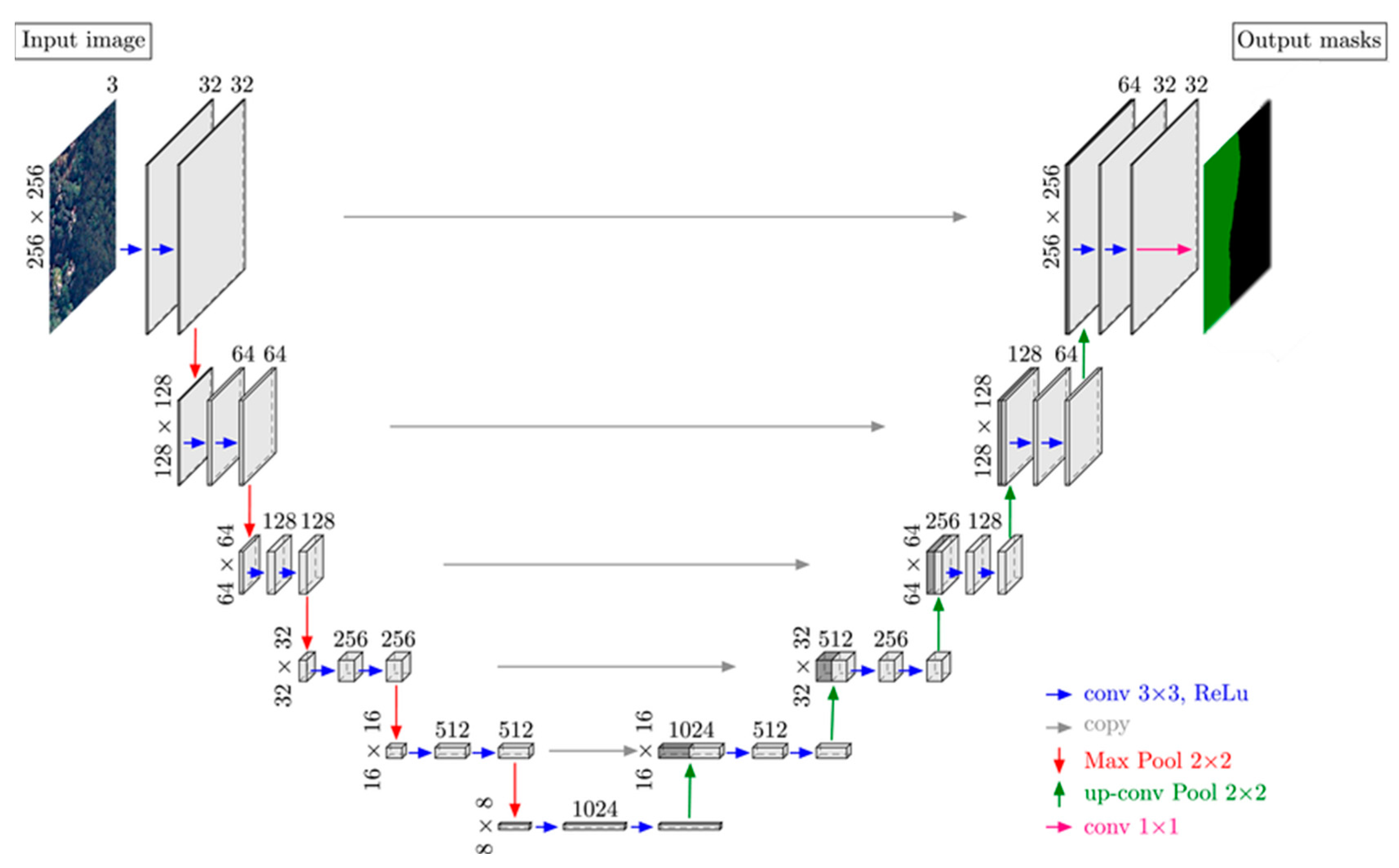

2.4.2. U-Net Model

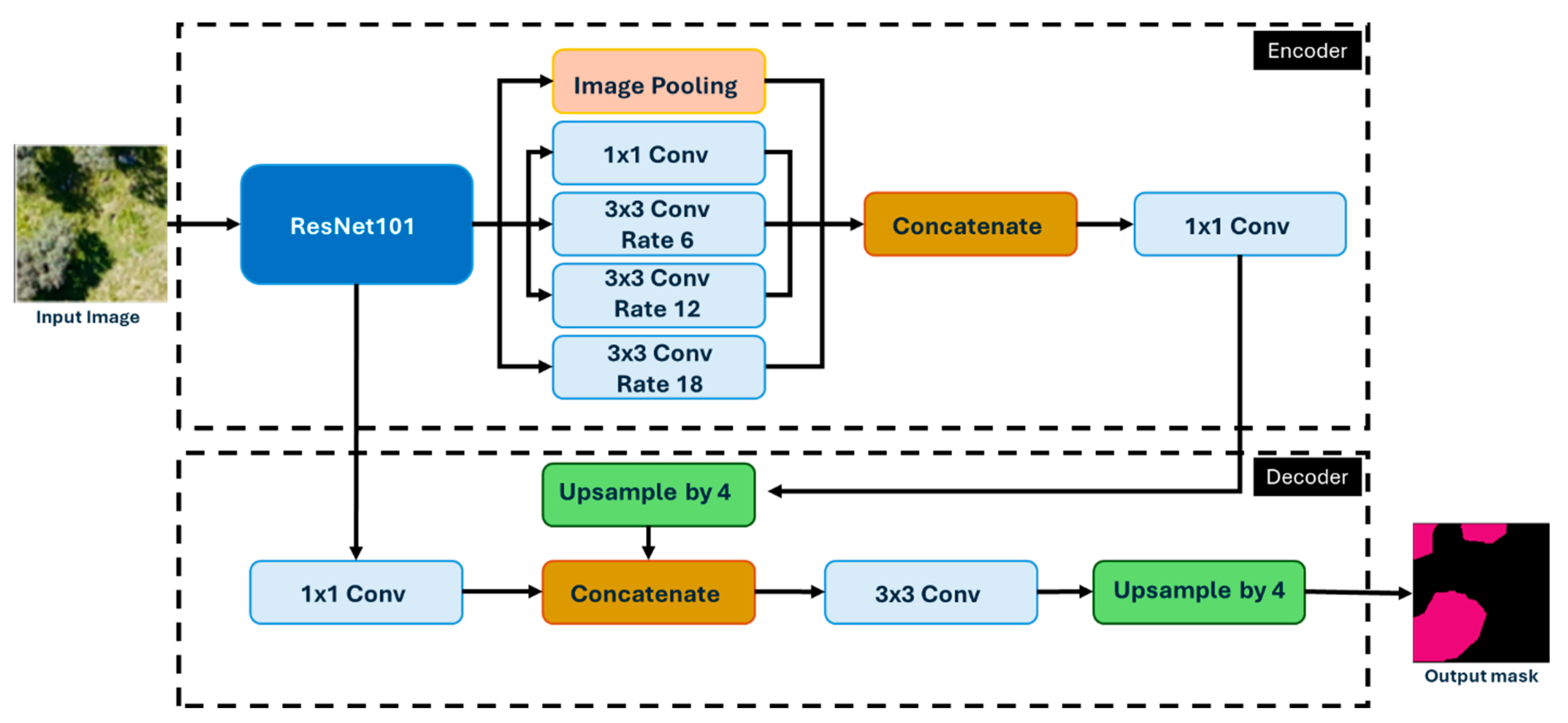

2.4.3. DeepLab Model

2.5. DL Model Training

2.6. Accuracy Assessment

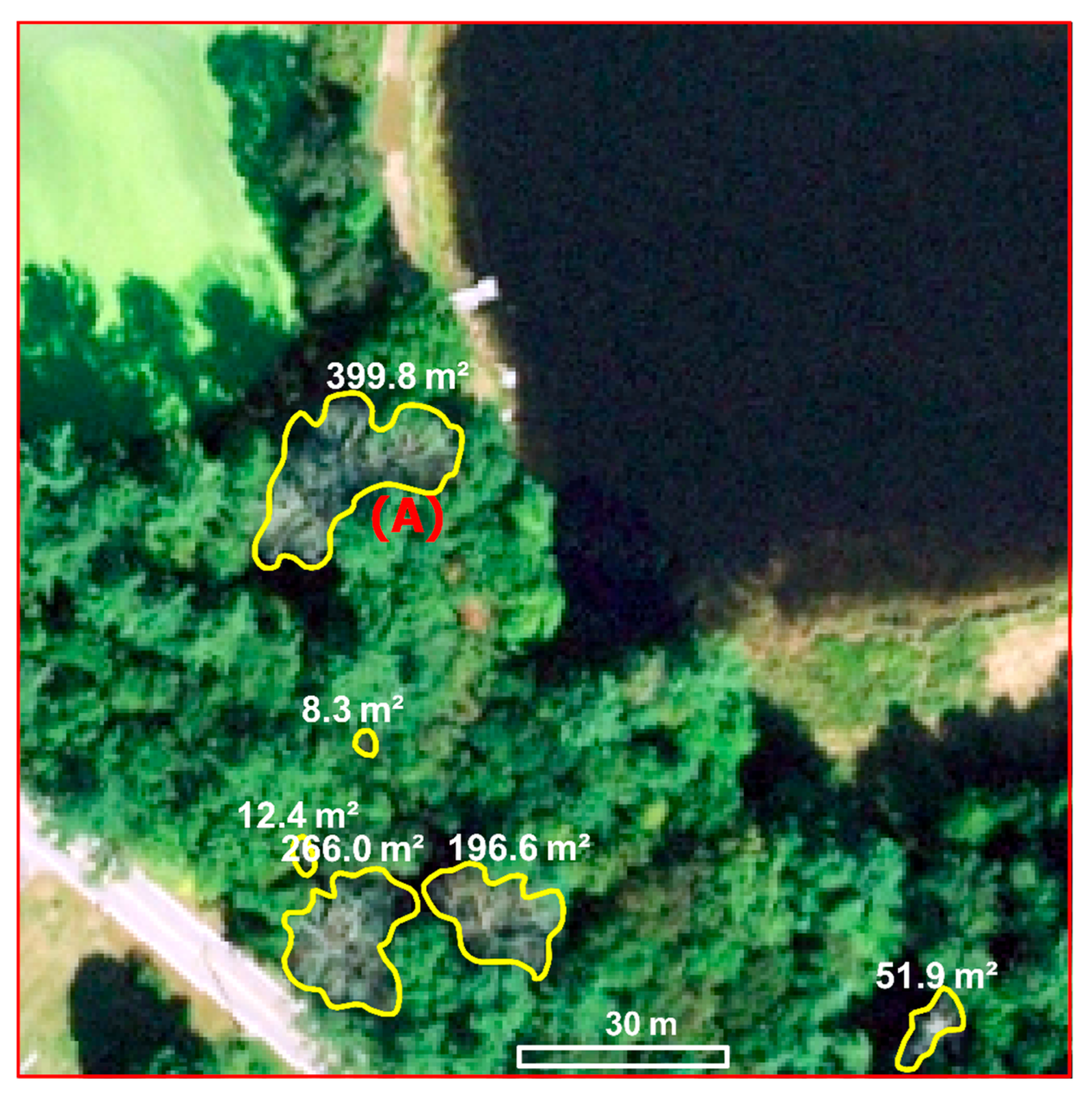

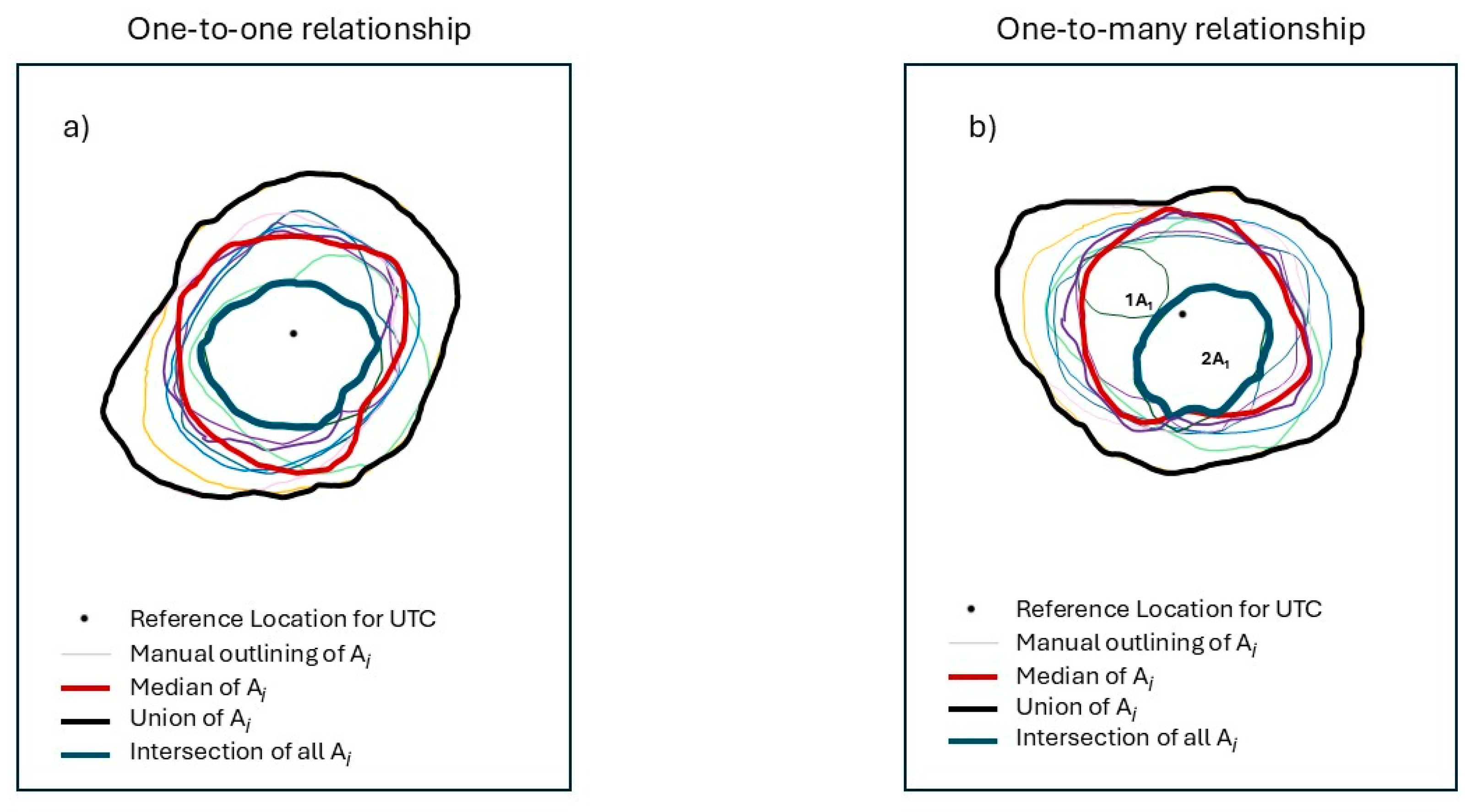

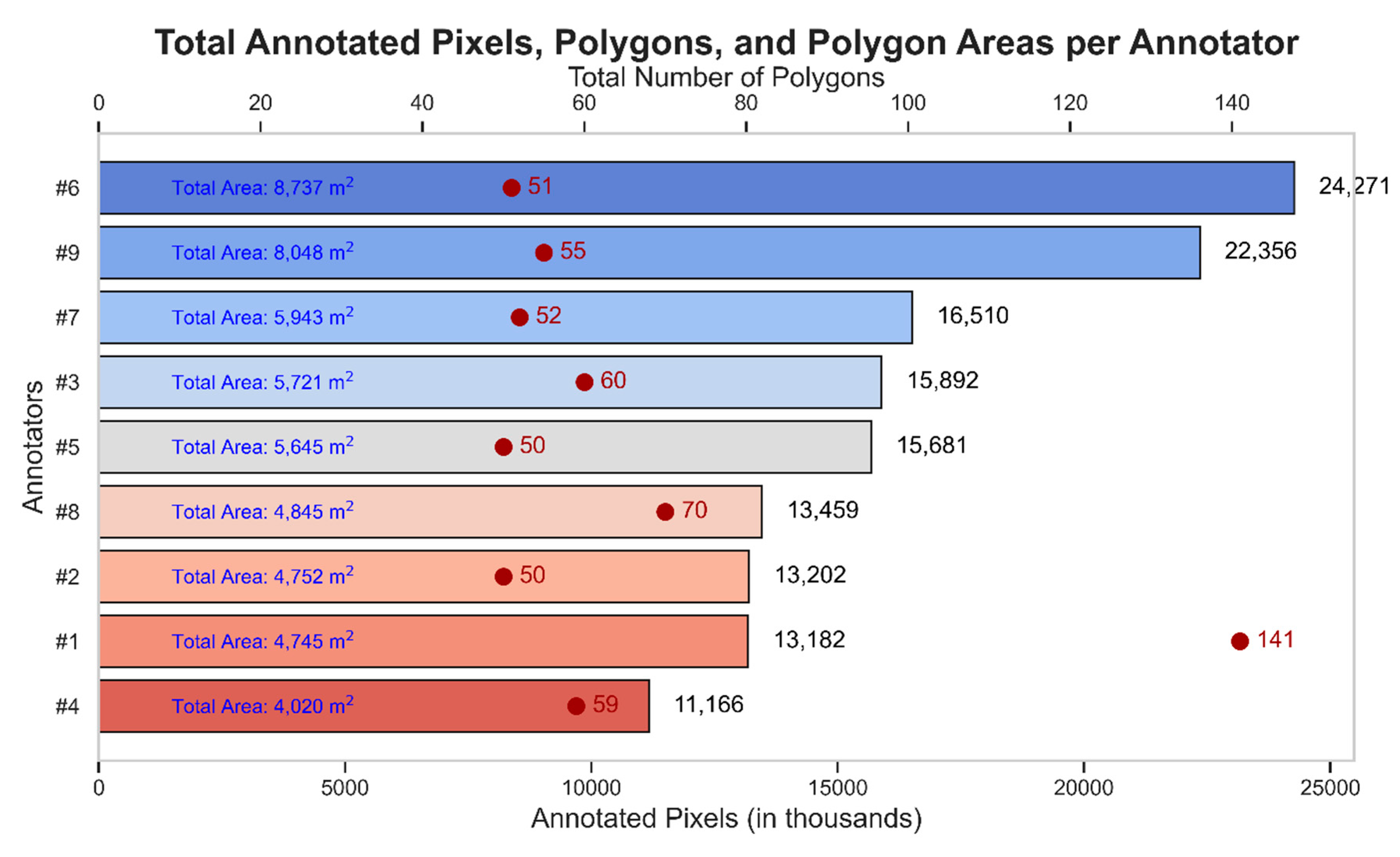

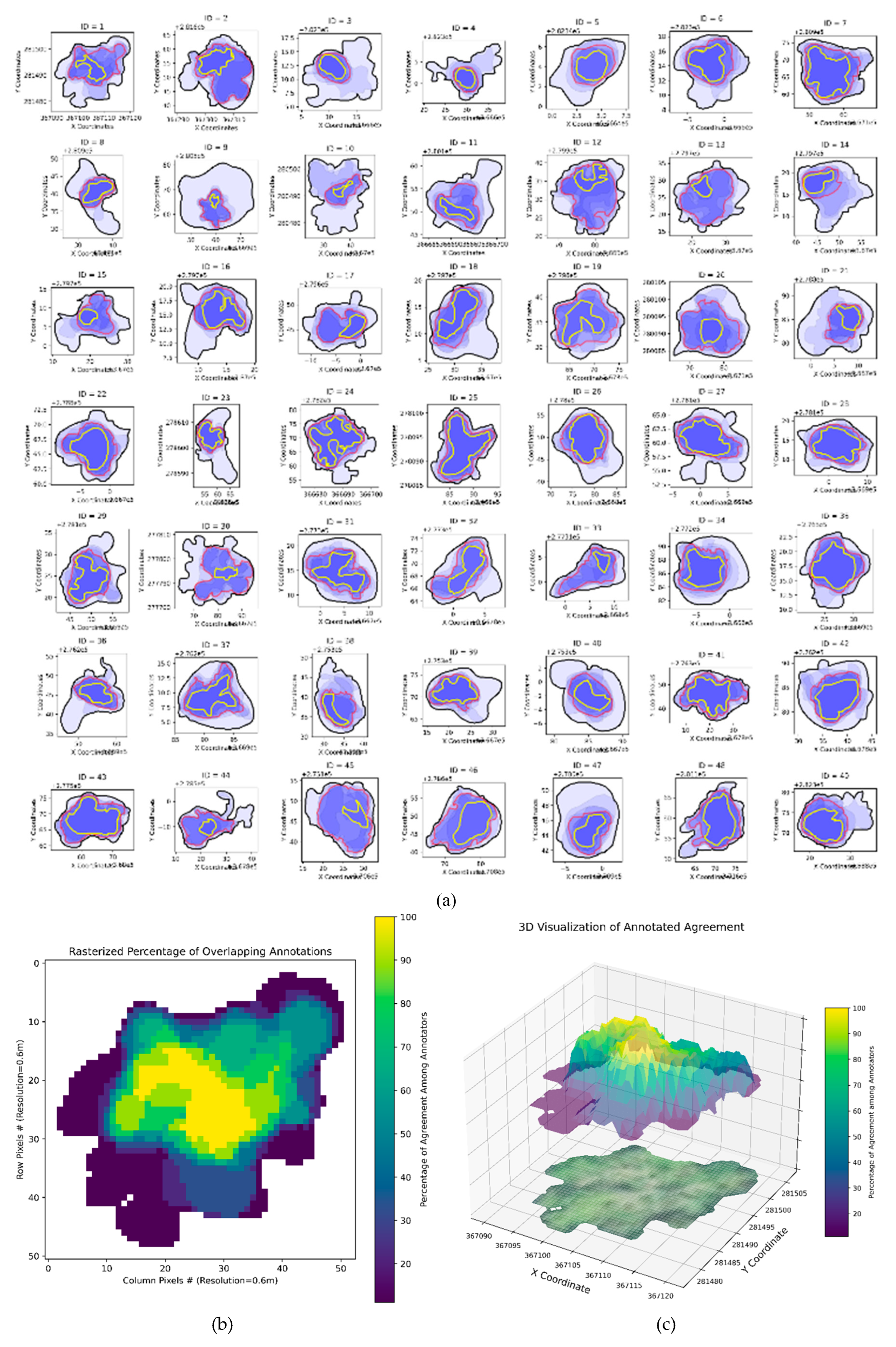

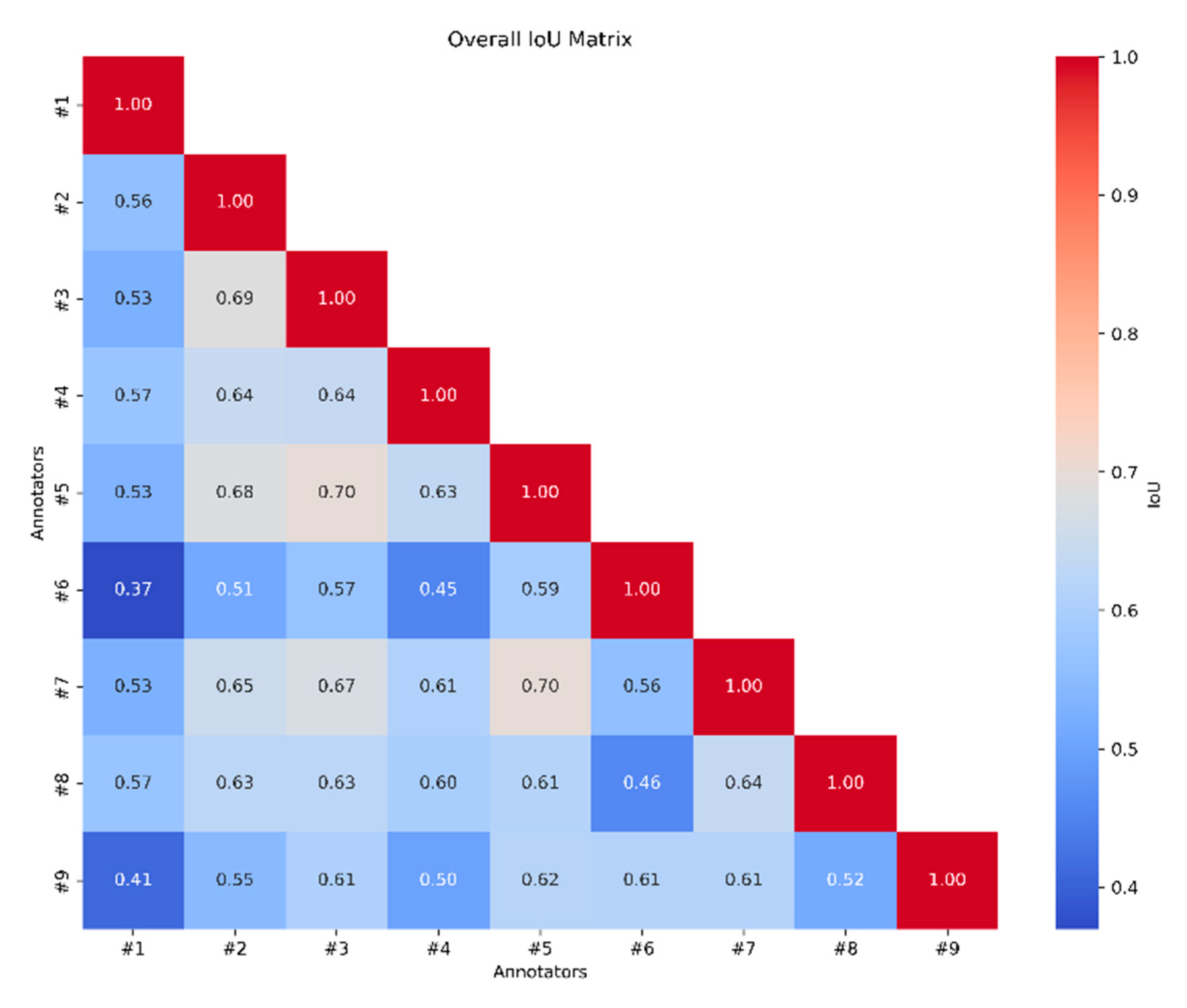

2.7. Manual Annotation Uncertainty Analysis

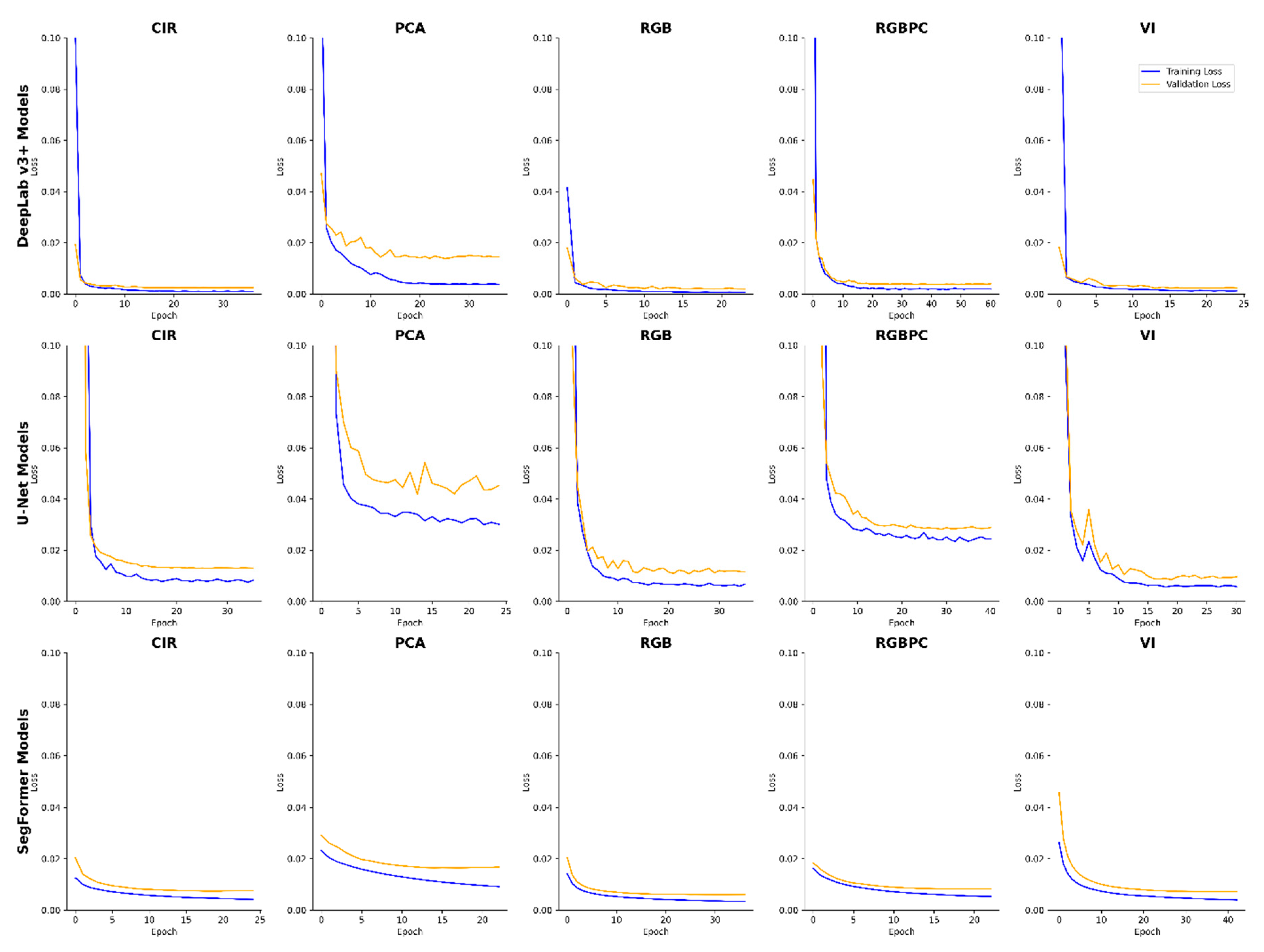

3. Results

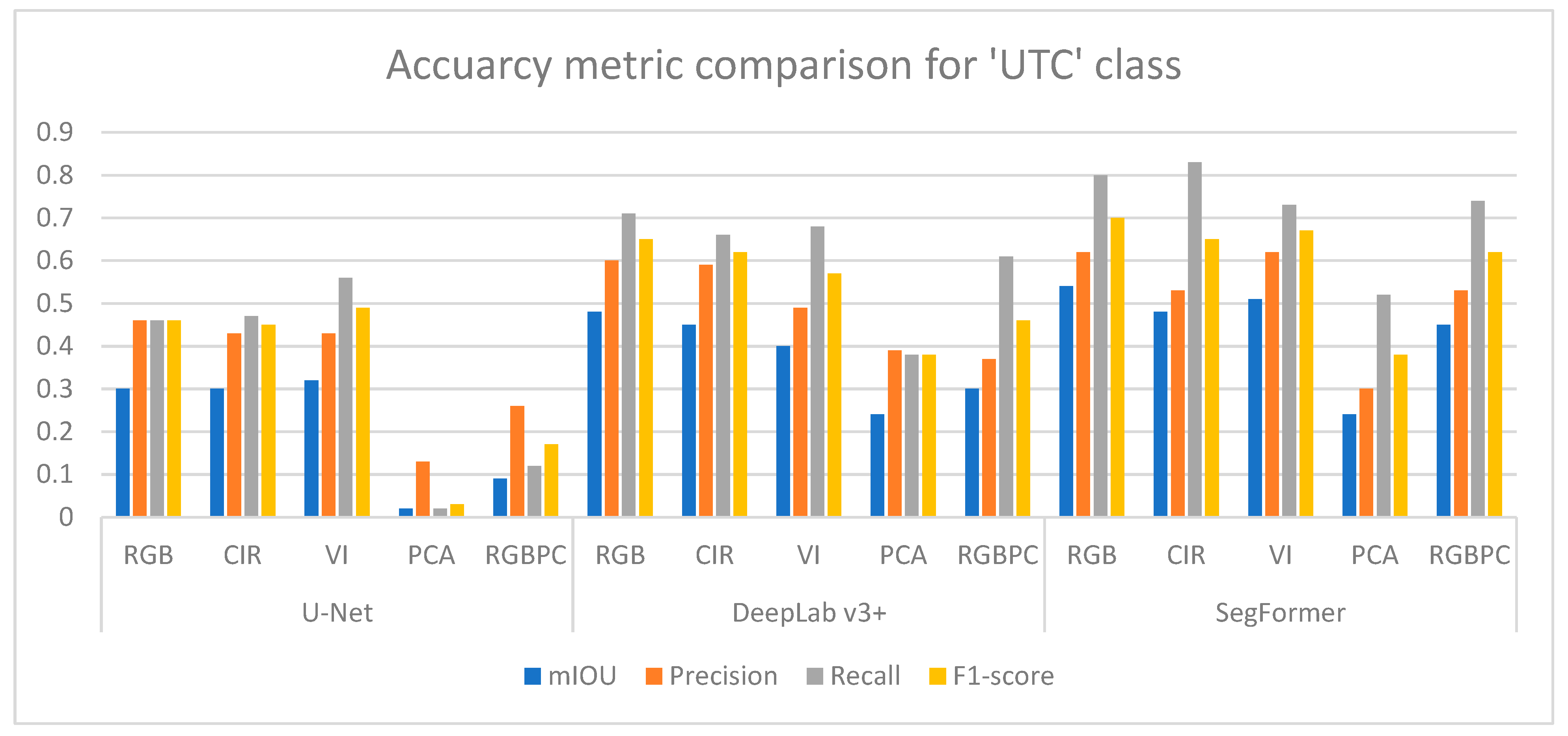

3.1. Evaluation Metrics

3.1.1. CNN Models

3.1.2. ViT-Based Model

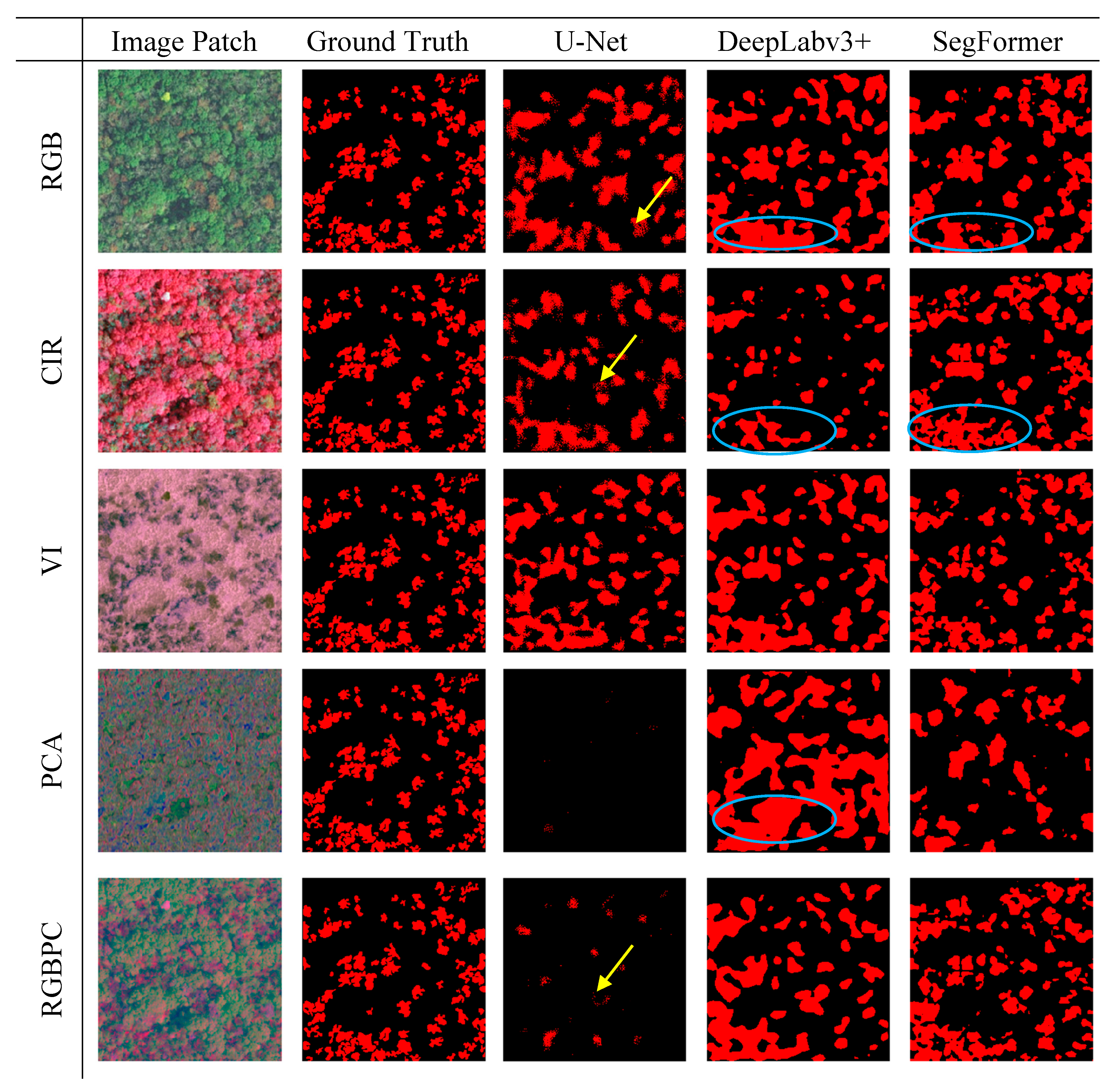

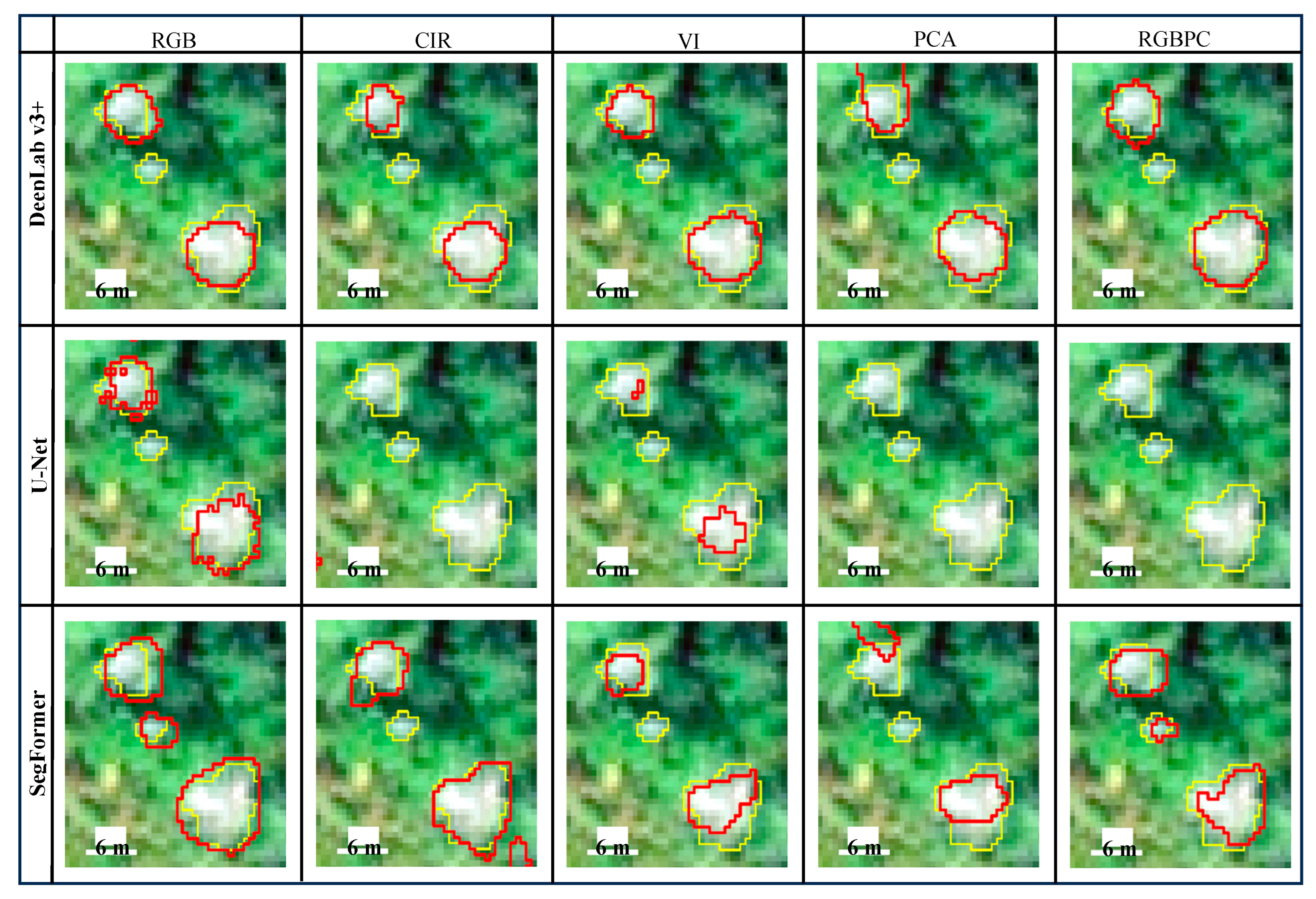

3.2. Visual Quality Assessment

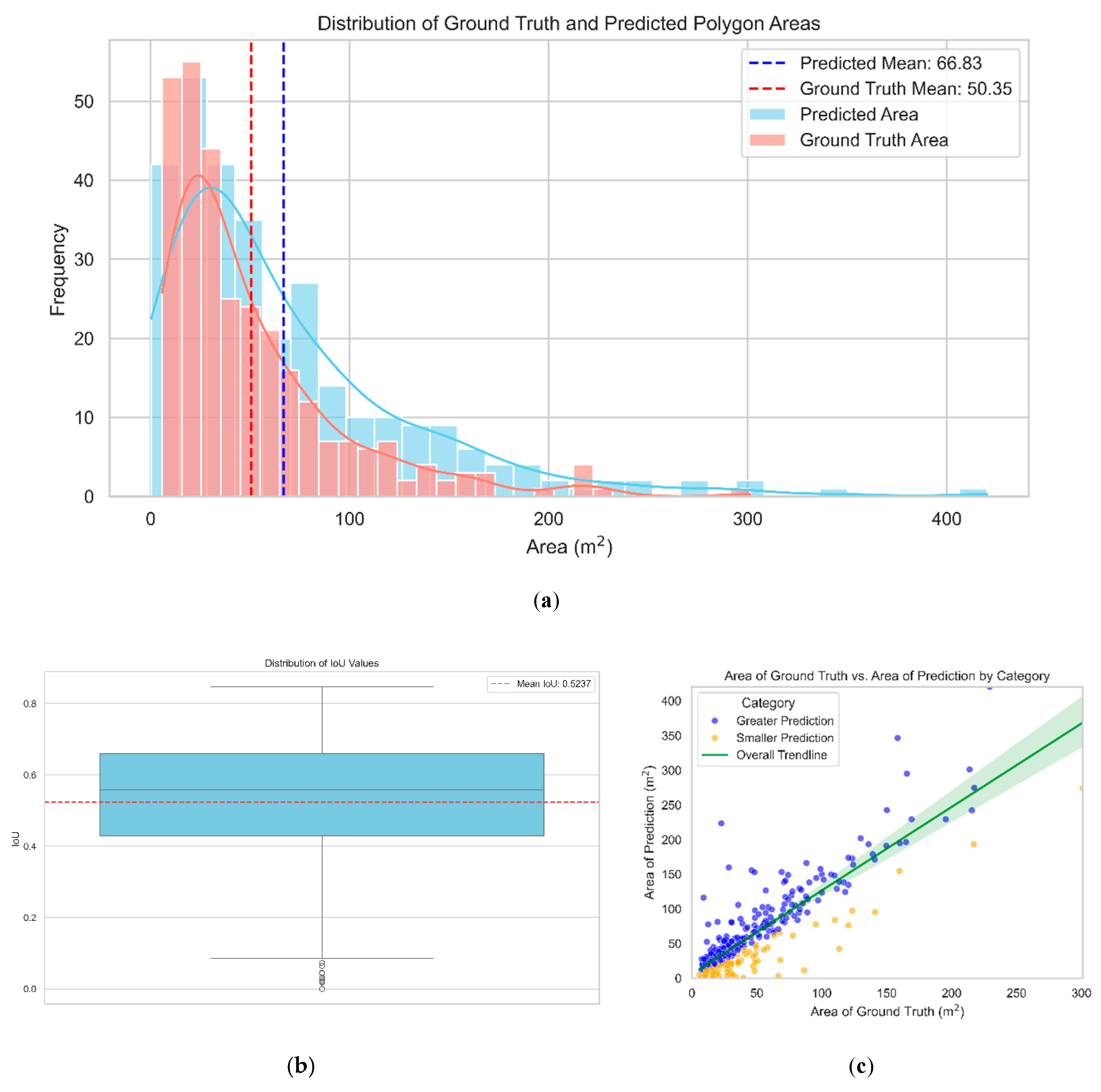

3.3. Annotation Uncertainty Analysis

4. Discussion

4.1. Performance of DL Models in UTC Segmentation

4.2. Performance of Band Combination in UTC Segmentation

4.3. Uncertainty and Limitations

4.4. Future Outlook

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Gauthier, S.; Bernier, P.; Kuuluvainen, T.; Shvidenko, A.Z.; Schepaschenko, D.G. Boreal Forest Health and Global Change. Science 2015, 349, 819–822.

- Burgess, T.I.; Oliva, J.; Sapsford, S.J.; Sakalidis, M.L.; Balocchi, F.; Paap, T. Anthropogenic Disturbances and the Emergence of Native Diseases: A Threat to Forest Health. Current Forestry Reports 2022, 8, 111–123.

- Trumbore, S.; Brando, P.; Hartmann, H. Forest Health and Global Change. Science 2015, 349, 814–818.

- Dale, V.H.; Joyce, L.A.; McNulty, S.; Neilson, R.P.; Ayres, M.P.; Flannigan, M.D.; Hanson, P.J.; Irland, L.C.; Lugo, A.E.; Peterson, C.J. Climate Change and Forest Disturbances: Climate Change can Affect Forests by Altering the Frequency, Intensity, Duration, and Timing of Fire, Drought, Introduced Species, Insect and Pathogen Outbreaks, Hurricanes, Windstorms, Ice Storms, or Landslides. BioScience 2001, 51, 723–734.

- Sapkota, I.P.; Tigabu, M.; Odén, P.C. Changes in Tree Species Diversity and Dominance Across a Disturbance Gradient in Nepalese Sal (Shorea robusta Gaertn. f.) Forests. Journal of Forestry Research 2010, 21, 25–32.

- Vilà-Cabrera, A.; Martínez-Vilalta, J.; Vayreda, J.; Retana, J. Structural and Climatic Determinants of Demographic Rates of Scots Pine Forests Across the Iberian Peninsula. Ecol. Appl. 2011, 21, 1162–1172.

- Song, W.; Deng, X. Land-use/Land-Cover Change and Ecosystem Service Provision in China. Sci. Total Environ. 2017, 576, 705–719.

- USDA Forest Service. Forests of Connecticut, 2021. Resource Update FS-370. Madison, WI: U.S. Department of Agriculture, Forest Service, Northern Research Station, 2022.

- Han, Z.; Hu, W.; Peng, S.; Lin, H.; Zhang, J.; Zhou, J.; Wang, P.; Dian, Y. Detection of Standing Dead Trees After Pine Wilt Disease Outbreak with Airborne Remote Sensing Imagery by Multi-Scale Spatial Attention Deep Learning and Gaussian Kernel Approach. Remote Sensing 2022, 14, 3075.

- Krzystek, P.; Serebryanyk, A.; Schnörr, C.; Červenka, J.; Heurich, M. Large-Scale Mapping of Tree Species and Dead Trees in Šumava National Park and Bavarian Forest National Park using Lidar and Multispectral Imagery. Remote Sensing 2020, 12, 661.

- Kamińska, A.; Lisiewicz, M.; Stereńczak, K.; Kraszewski, B.; Sadkowski, R. Species-Related Single Dead Tree Detection using Multi-Temporal ALS Data and CIR Imagery. Remote Sens. Environ. 2018, 219, 31–43.

- Yuan, X.; Shi, J.; Gu, L. A Review of Deep Learning Methods for Semantic Segmentation of Remote Sensing Imagery. Expert Syst. Appl. 2021, 169, 114417.

- Li, Y.; Zhang, H.; Xue, X.; Jiang, Y.; Shen, Q. Deep Learning for Remote Sensing Image Classification: A Survey. Wiley Interdisciplinary Reviews: Data Mining and Knowledge Discovery 2018, 8, e1264.

- Li, K.; Wan, G.; Cheng, G.; Meng, L.; Han, J. Object Detection in Optical Remote Sensing Images: A Survey and a New Benchmark. ISPRS Journal of Photogrammetry and Remote Sensing 2020, 159, 296–307.

- Chen, K.; Fu, K.; Yan, M.; Gao, X.; Sun, X.; Wei, X. Semantic Segmentation of Aerial Images with Shuffling Convolutional Neural Networks. IEEE Geoscience and Remote Sensing Letters 2018, 15, 173–177.

- Audebert, N.; Le Saux, B.; Lefèvre, S. Beyond RGB: Very High Resolution Urban Remote Sensing with Multimodal Deep Networks. ISPRS Journal of Photogrammetry and Remote Sensing 2018, 140, 20–32.

- Weinstein, B.G.; Marconi, S.; Bohlman, S.; Zare, A.; White, E. Individual Tree-Crown Detection in RGB Imagery using Semi-Supervised Deep Learning Neural Networks. Remote Sensing 2019, 11, 1309.

- Neupane, B.; Horanont, T.; Hung, N.D. Deep Learning Based Banana Plant Detection and Counting using High-Resolution Red-Green-Blue (RGB) Images Collected from Unmanned Aerial Vehicle (UAV). PLoS ONE 2019, 14, e0223906.

- Li, W.; Fu, H.; Yu, L.; Cracknell, A. Deep Learning Based Oil Palm Tree Detection and Counting for High-Resolution Remote Sensing Images. Remote Sensing 2016, 9, 22.

- Lee, M.; Cho, H.; Youm, S.; Kim, S. Detection of Pine Wilt Disease using Time Series UAV Imagery and Deep Learning Semantic Segmentation. Forests 2023, 14, 1576.

- Ulku, I.; Akagündüz, E.; Ghamisi, P. Deep Semantic Segmentation of Trees using Multispectral Images. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing 2022, 15, 7589–7604.

- Zhang, J.; Cong, S.; Zhang, G.; Ma, Y.; Zhang, Y.; Huang, J. Detecting Pest-Infested Forest Damage through Multispectral Satellite Imagery and Improved UNet. Sensors 2022, 22, 7440.

- Jiang, S.; Yao, W.; Heurich, M. Dead Wood Detection Based on Semantic Segmentation of VHR Aerial CIR Imagery using Optimized FCN-Densenet. The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences 2019, 42, 127–133.

- Liu, D.; Jiang, Y.; Wang, R.; Lu, Y. Establishing a Citywide Street Tree Inventory with Street View Images and Computer Vision Techniques. Comput. Environ. Urban Syst. 2023, 100, 101924.

- Schiefer, F.; Kattenborn, T.; Frick, A.; Frey, J.; Schall, P.; Koch, B.; Schmidtlein, S. Mapping Forest Tree Species in High Resolution UAV-Based RGB-Imagery by Means of Convolutional Neural Networks. ISPRS Journal of Photogrammetry and Remote Sensing 2020, 170, 205–215.

- Joshi, D.; Witharana, C. Roadside Forest Modeling using Dashcam Videos and Convolutional Neural Nets. The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences 2022, 46, 135–140.

- Kattenborn, T.; Leitloff, J.; Schiefer, F.; Hinz, S. Review on Convolutional Neural Networks (CNN) in Vegetation Remote Sensing. ISPRS Journal of Photogrammetry and Remote Sensing 2021, 173, 24–49.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. Advances in Neural Information Processing Systems 2015, 28.

- Long, J.; Shelhamer, E.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; pp. 3431–3440.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision; pp. 2961–2969.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015: 18th International Conference, Munich, Germany, October 5–9, 2015, Proceedings, Part III; pp. 234–241.

- Chen, L.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation. In Proceedings of the European Conference on Computer Vision (ECCV); pp. 801–818.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; pp. 779–788.

- Lobo Torres, D.; Queiroz Feitosa, R.; Nigri Happ, P.; Elena Cué La Rosa, L.; Marcato Junior, J.; Martins, J.; Ola Bressan, P.; Gonçalves, W.N.; Liesenberg, V. Applying Fully Convolutional Architectures for Semantic Segmentation of a Single Tree Species in Urban Environment on High Resolution UAV Optical Imagery. Sensors 2020, 20, 563.

- Diez, Y.; Kentsch, S.; Fukuda, M.; Caceres, M.L.L.; Moritake, K.; Cabezas, M. Deep Learning in Forestry using UAV-Acquired RGB Data: A Practical Review. Remote Sensing 2021, 13, 2837.

- Brandt, M.; Tucker, C.J.; Kariryaa, A.; Rasmussen, K.; Abel, C.; Small, J.; Chave, J.; Rasmussen, L.V.; Hiernaux, P.; Diouf, A.A. An Unexpectedly Large Count of Trees in the West African Sahara and Sahel. Nature 2020, 587, 78–82.

- Yang, J.; Kang, Z.; Cheng, S.; Yang, Z.; Akwensi, P.H. An Individual Tree Segmentation Method Based on Watershed Algorithm and Three-Dimensional Spatial Distribution Analysis from Airborne LiDAR Point Clouds. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing 2020, 13, 1055–1067.

- Dersch, S.; Heurich, M.; Krueger, N.; Krzystek, P. Combining Graph-Cut Clustering with Object-Based Stem Detection for Tree Segmentation in Highly Dense Airborne Lidar Point Clouds. ISPRS Journal of Photogrammetry and Remote Sensing 2021, 172, 207–222.

- Li, Q.; Yuan, P.; Liu, X.; Zhou, H. Street Tree Segmentation from Mobile Laser Scanning Data. Int. J. Remote Sens. 2020, 41, 7145–7162.

- Solberg, S.; Naesset, E.; Bollandsas, O.M. Single Tree Segmentation using Airborne Laser Scanner Data in a Structurally Heterogeneous Spruce Forest. Photogrammetric Engineering & Remote Sensing 2006, 72, 1369–1378.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. arXiv, 2020; arXiv:2010.11929.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all You Need. Advances in Neural Information Processing Systems 2017, 30.

- Khan, S.; Naseer, M.; Hayat, M.; Zamir, S.W.; Khan, F.S.; Shah, M. Transformers in Vision: A Survey. ACM Computing Surveys (CSUR) 2022, 54, 1–41.

- Mekhalfi, M.L.; Nicolò, C.; Bazi, Y.; Al Rahhal, M.M.; Alsharif, N.A.; Al Maghayreh, E. Contrasting YOLOv5, Transformer, and EfficientDet Detectors for Crop Circle Detection in Desert. IEEE Geoscience and Remote Sensing Letters 2021, 19, 1–5.

- Bazi, Y.; Bashmal, L.; Rahhal, M.M.A.; Dayil, R.A.; Ajlan, N.A. Vision Transformers for Remote Sensing Image Classification. Remote Sensing 2021, 13, 516.

- Chen, H.; Qi, Z.; Shi, Z. Remote Sensing Image Change Detection with Transformers. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–14.

- Kaselimi, M.; Voulodimos, A.; Daskalopoulos, I.; Doulamis, N.; Doulamis, A. A Vision Transformer Model for Convolution-Free Multilabel Classification of Satellite Imagery in Deforestation Monitoring. IEEE Transactions on Neural Networks and Learning Systems 2022.

- Maurício, J.; Domingues, I.; Bernardino, J. Comparing Vision Transformers and Convolutional Neural Networks for Image Classification: A Literature Review. Applied Sciences 2023, 13, 5521.

- Caron, M.; Touvron, H.; Misra, I.; Jégou, H.; Mairal, J.; Bojanowski, P.; Joulin, A. Emerging Properties in Self-Supervised Vision Transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision; pp. 9650–9660.

- Li, Z.; Chen, G.; Zhang, T. A CNN-Transformer Hybrid Approach for Crop Classification using Multitemporal Multisensor Images. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing 2020, 13, 847–858.

- Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Alvarez, J.M.; Luo, P. SegFormer: Simple and Efficient Design for Semantic Segmentation with Transformers. Advances in Neural Information Processing Systems 2021, 34, 12077–12090.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer using Shifted Windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision; pp. 10012–10022.

- Wu, Y.; Kirillov, A.; Massa, F.; Lo, W.; Girshick, R. Detectron2. 2019.

- Wang, W.; Xie, E.; Li, X.; Fan, D.; Song, K.; Liang, D.; Lu, T.; Luo, P.; Shao, L. Pyramid Vision Transformer: A Versatile Backbone for Dense Prediction without Convolutions. In Proceedings of the IEEE/CVF International Conference on Computer Vision; pp. 568–578.

- Cheng, B.; Misra, I.; Schwing, A.G.; Kirillov, A.; Girdhar, R. Masked-Attention Mask Transformer for Universal Image Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition; pp. 1290–1299.

- Thisanke, H.; Deshan, C.; Chamith, K.; Seneviratne, S.; Vidanaarachchi, R.; Herath, D. Semantic Segmentation using Vision Transformers: A Survey. Eng. Appl. Artif. Intell. 2023, 126, 106669.

- Gibril, M.B.A.; Shafri, H.Z.M.; Shanableh, A.; Al-Ruzouq, R.; bin Hashim, S.J.; Wayayok, A.; Sachit, M.S. Large-Scale Assessment of Date Palm Plantations Based on UAV Remote Sensing and Multiscale Vision Transformer. Remote Sensing Applications: Society and Environment 2024, 34, 101195.

- Jiang, J.; Xiang, J.; Yan, E.; Song, Y.; Mo, D. Forest-CD: Forest Change Detection Network Based on VHR Images. IEEE Geoscience and Remote Sensing Letters 2022, 19, 1–5.

- Ghali, R.; Akhloufi, M.A.; Jmal, M.; Souidene Mseddi, W.; Attia, R. Wildfire Segmentation using Deep Vision Transformers. Remote Sensing 2021, 13, 3527.

- Bannari, A.; Morin, D.; Bonn, F.; Huete, A. A Review of Vegetation Indices. Remote Sens. Rev. 1995, 13, 95–120.

- Huete, A.R. Vegetation Indices, Remote Sensing and Forest Monitoring. Geography Compass 2012, 6, 513–532.

- Peddle, D.R.; Brunke, S.P.; Hall, F.G. A Comparison of Spectral Mixture Analysis and Ten Vegetation Indices for Estimating Boreal Forest Biophysical Information from Airborne Data. Canadian Journal of Remote Sensing 2001, 27, 627–635.

- Franklin, S.E.; Waring, R.H.; McCreight, R.W.; Cohen, W.B.; Fiorella, M. Aerial and Satellite Sensor Detection and Classification of Western Spruce Budworm Defoliation in a Subalpine Forest. Canadian Journal of Remote Sensing 1995, 21, 299–308.

- Coburn, C.A.; Roberts, A.C. A Multiscale Texture Analysis Procedure for Improved Forest Stand Classification. Int. J. Remote Sens. 2004, 25, 4287–4308.

- Franklin, S.E.; Hall, R.J.; Moskal, L.M.; Maudie, A.J.; Lavigne, M.B. Incorporating Texture into Classification of Forest Species Composition from Airborne Multispectral Images. Int. J. Remote Sens. 2000, 21, 61–79.

- Moskal, L.M.; Franklin, S.E. Relationship between Airborne Multispectral Image Texture and Aspen Defoliation. Int. J. Remote Sens. 2004, 25, 2701–2711.

- Karpatne, A.; Atluri, G.; Faghmous, J.H.; Steinbach, M.; Banerjee, A.; Ganguly, A.; Shekhar, S.; Samatova, N.; Kumar, V. Theory-Guided Data Science: A New Paradigm for Scientific Discovery from Data. IEEE Trans. Knowl. Data Eng. 2017, 29, 2318–2331.

- Nowak, S.; Rüger, S. How Reliable are Annotations Via Crowdsourcing: A Study about Inter-Annotator Agreement for Multi-Label Image Annotation. In Proceedings of the International Conference on Multimedia Information Retrieval; pp. 557–566.

- Vădineanu, Ş.; Pelt, D.M.; Dzyubachyk, O.; Batenburg, K.J. An Analysis of the Impact of Annotation Errors on the Accuracy of Deep Learning for Cell Segmentation. In International Conference on Medical Imaging with Deep Learning; pp. 1251–1267.

- Rädsch, T.; Reinke, A.; Weru, V.; Tizabi, M.D.; Schreck, N.; Kavur, A.E.; Pekdemir, B.; Roß, T.; Kopp-Schneider, A.; Maier-Hein, L. Labelling Instructions Matter in Biomedical Image Analysis. Nature Machine Intelligence 2023, 5, 273–283.

- FS, R.U. Forests of Connecticut, 2017. Forest 2018, 1.

- Elaina, H. Planting New Trees in the Wake of the Gypsy Moths. UConn Today 2019.

- USDA-FPAC-BC-APFO Aerial Photography Field Office. NAIP Digital Georectified Image. 2019, 2024.

- Tucker, C.J. Red and Photographic Infrared Linear Combinations for Monitoring Vegetation. Remote Sens. Environ. 1979, 8, 127–150.

- Huete, A.; Didan, K.; Miura, T.; Rodriguez, E.P.; Gao, X.; Ferreira, L.G. Overview of the Radiometric and Biophysical Performance of the MODIS Vegetation Indices. Remote Sens. Environ. 2002, 83, 195–213.

- Kaufman, Y.J.; Tanre, D. Atmospherically Resistant Vegetation Index (ARVI) for EOS-MODIS. IEEE Trans. Geosci. Remote Sens. 1992, 30, 261–270.

- Greenacre, M.; Groenen, P.J.; Hastie, T.; d’Enza, A.I.; Markos, A.; Tuzhilina, E. Principal Component Analysis. Nature Reviews Methods Primers 2022, 2, 100.

- Haralick, R.M.; Shanmugam, K.; Dinstein, I.H. Textural Features for Image Classification. IEEE Trans. Syst. Man Cybern. 1973, 610–621.

- Zheng, S.; Lu, J.; Zhao, H.; Zhu, X.; Luo, Z.; Wang, Y.; Fu, Y.; Feng, J.; Xiang, T.; Torr, P.H. Rethinking Semantic Segmentation from a Sequence-to-Sequence Perspective with Transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition; pp. 6881–6890.

- Wagner, F.H.; Sanchez, A.; Tarabalka, Y.; Lotte, R.G.; Ferreira, M.P.; Aidar, M.P.; Gloor, E.; Phillips, O.L.; Aragao, L.E. Using the U-net Convolutional Network to Map Forest Types and Disturbance in the Atlantic Rainforest with Very High Resolution Images. Remote Sensing in Ecology and Conservation 2019, 5, 360–375.

- He, H.; Garcia, E.A. Learning from Imbalanced Data. IEEE Trans. Knowl. Data Eng. 2009, 21, 1263–1284.

- Everingham, M.; Eslami, S.A.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes Challenge: A Retrospective. International Journal of Computer Vision 2015, 111, 98–136.

- Banko, G. A Review of Assessing the Accuracy of Classifications of Remotely Sensed Data and of Methods Including Remote Sensing Data in Forest Inventory. 1998.

- Smith, B.; Varzi, A.C. Fiat and Bona Fide Boundaries. Philosophy and Phenomenological Research 2000, 401–420.

- Sani-Mohammed, A.; Yao, W.; Heurich, M. Instance Segmentation of Standing Dead Trees in Dense Forest from Aerial Imagery using Deep Learning. ISPRS Open Journal of Photogrammetry and Remote Sensing 2022, 6, 100024.

- Tao, H.; Li, C.; Zhao, D.; Deng, S.; Hu, H.; Xu, X.; Jing, W. Deep Learning-Based Dead Pine Tree Detection from Unmanned Aerial Vehicle Images. Int. J. Remote Sens. 2020, 41, 8238–8255.

- He, J.; Zhao, L.; Yang, H.; Zhang, M.; Li, W. HSI-BERT: Hyperspectral Image Classification using the Bidirectional Encoder Representation from Transformers. IEEE Transactions on Geoscience and Remote Sensing 2020, 58, 165–178.

- Shahid, M.; Chen, S.; Hsu, Y.; Chen, Y.; Chen, Y.; Hua, K. Forest Fire Segmentation Via Temporal Transformer from Aerial Images. Forests 2023, 14, 563.

- Deininger, L.; Stimpel, B.; Yuce, A.; Abbasi-Sureshjani, S.; Schönenberger, S.; Ocampo, P.; Korski, K.; Gaire, F. A Comparative Study between Vision Transformers and CNNs in Digital Pathology. arXiv 2022, arXiv:2206.00389.

- Minařík, R.; Langhammer, J.; Lendzioch, T. Detection of Bark Beetle Disturbance at Tree Level using UAS Multispectral Imagery and Deep Learning. Remote Sensing 2021, 13, 4768.

- Ahlswede, S.; Schulz, C.; Gava, C.; Helber, P.; Bischke, B.; Förster, M.; Arias, F.; Hees, J.; Demir, B.; Kleinschmit, B. TreeSatAI Benchmark Archive: A Multi-Sensor, Multi-Label Dataset for Tree Species Classification in Remote Sensing. Earth System Science Data Discussions 2022, 2022, 1–22.

- Hartling, S.; Sagan, V.; Sidike, P.; Maimaitijiang, M.; Carron, J. Urban Tree Species Classification using a WorldView-2/3 and LiDAR Data Fusion Approach and Deep Learning. Sensors 2019, 19, 1284.

- Hao, Z.; Lin, L.; Post, C.J.; Mikhailova, E.A.; Li, M.; Chen, Y.; Yu, K.; Liu, J. Automated Tree-Crown and Height Detection in a Young Forest Plantation using Mask Region-Based Convolutional Neural Network (Mask R-CNN). ISPRS Journal of Photogrammetry and Remote Sensing 2021, 178, 112–123.

- Alhazmi, K.; Alsumari, W.; Seppo, I.; Podkuiko, L.; Simon, M. Effects of Annotation Quality on Model Performance. In 2021 International Conference on Artificial Intelligence in Information and Communication (ICAIIC); pp. 63.

- Nitze, I.; van der Sluijs, J.; Barth, S.; Bernhard, P.; Huang, L.; Lara, M.; Kizyakov, A.; Runge, A.; Veremeeva, A.; Jones, M.W. A Labeling Intercomparison of Retrogressive Thaw Slumps by a Diverse Group of Domain Experts. 2024.

- Li, X.; Ding, H.; Yuan, H.; Zhang, W.; Pang, J.; Cheng, G.; Chen, K.; Liu, Z.; Loy, C.C. Transformer-Based Visual Segmentation: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2024.

| Name | Abbreviation | Description |
|---|---|---|
| Natural Color Image Patch | RGB | Red, Green, and Blue bands |
| False Color Image Patch | CIR | Near-infrared, Red, and Green bands |
| RGBPC Image Patch | RGBPC | Red, Green, and first principal component (derived from all four bands) |
| Vegetation Indices Image Patch | VI | NDVI, EVI, and ARVI bands |
| Textural PC Image Patch | PCA | First three principal components of a PCA performed on eight GLCM texture measures computed for each band (Variance, Entropy, Contrast, Homogeneity, Angular Second Moment, Dissimilarity, Mean, and Correlation) |
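The VI and RGBPC inputs listed above can be derived from the four NAIP bands roughly as follows. This is a minimal NumPy sketch, not the authors' preprocessing code: the function names `vegetation_indices` and `rgbpc` are hypothetical, the bands are assumed to be rescaled to roughly [0, 1] (NAIP is delivered as 8-bit digital numbers, not surface reflectance), ARVI uses γ = 1, and the PCA is assumed to be computed per patch on mean-centred (not standardised) bands. The GLCM texture PCA input is not reproduced here.

```python
import numpy as np


def vegetation_indices(red, blue, nir, eps=1e-6):
    """Return an (H, W, 3) stack of NDVI, EVI and ARVI.

    Assumes bands are floats scaled to roughly [0, 1] (e.g. 8-bit NAIP DNs / 255).
    EVI's coefficients were designed for surface reflectance, so EVI from
    rescaled DNs is only approximate, and its denominator can approach zero.
    """
    red, blue, nir = (np.asarray(b, dtype=np.float64) for b in (red, blue, nir))
    ndvi = (nir - red) / (nir + red + eps)
    # EVI with the MODIS coefficients G = 2.5, C1 = 6, C2 = 7.5, L = 1
    evi = 2.5 * (nir - red) / (nir + 6.0 * red - 7.5 * blue + 1.0)
    # ARVI: the red band is corrected by the blue-red difference (gamma = 1)
    rb = red - 1.0 * (blue - red)
    arvi = (nir - rb) / (nir + rb + eps)
    return np.dstack([ndvi, evi, arvi])


def rgbpc(red, green, blue, nir):
    """Stack Red, Green and the first principal component of all four bands."""
    bands = np.stack([red, green, blue, nir], axis=-1).astype(np.float64)
    h, w, n = bands.shape
    flat = bands.reshape(-1, n)
    centered = flat - flat.mean(axis=0)
    # The first right singular vector of the centred data is the first PC axis;
    # its sign is arbitrary, so PC1 may come out inverted.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    pc1 = (centered @ vt[0]).reshape(h, w)
    return np.dstack([np.asarray(red, dtype=np.float64),
                      np.asarray(green, dtype=np.float64), pc1])
```

Each function returns a three-channel array, so every band combination can be fed to the same three-channel model input; whether the PCA was fitted per patch or per scene is not stated in the source.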

| Parameter | Value |
|---|---|
| Number of NAIP scenes used | 16 (each 12,596 × 12,596 pixels) |
| Spatial resolution | 0.6 m |
| Spectral bands | 4 (Red, Green, Blue, Near-infrared) |
| Date of image acquisition | September 2018 |
| Random points generated | 500 |
| Number of image patches used | 400 |
| Image patch size | 254 × 254 pixels |
| Total number of crowns annotated | 5133 |
| Minimum number of tree crowns in a patch | 1 |
| Maximum number of tree crowns in a patch | 170 |
| Average number of crowns in a patch | 13 |
| Vegetation type | Dense mixed forest |
| Season | Leaf-on, early fall |
| Annotation software | ArcGIS Pro 3.2.2 |
| Average time to manually annotate a single image patch | 260 s |
| Train:Test:Validation data ratio | 80:20:20 |
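The parameters above (random points over the NAIP scenes, 254 × 254-pixel patches) imply a point-based tiling step. The sketch below assumes each patch is a window centred on a random point and skips points whose window would cross the scene edge; the source does not state how patches were positioned or how the 400 retained patches were chosen from the 500 points, so `extract_patch` and `sample_patches` are hypothetical helpers.

```python
import numpy as np


def extract_patch(scene, row, col, size=254):
    """Cut a size x size window centred on (row, col) from an (H, W, C) scene.

    Returns None when the window would extend past the scene edge.
    """
    half = size // 2
    top, left = row - half, col - half
    h, w = scene.shape[:2]
    if top < 0 or left < 0 or top + size > h or left + size > w:
        return None
    return scene[top:top + size, left:left + size]


def sample_patches(scene, n_points, size=254, seed=0):
    """Draw n_points uniform random pixel locations and keep the valid patches."""
    rng = np.random.default_rng(seed)
    h, w = scene.shape[:2]
    rows = rng.integers(0, h, n_points)
    cols = rng.integers(0, w, n_points)
    patches = [extract_patch(scene, r, c, size) for r, c in zip(rows, cols)]
    return [p for p in patches if p is not None]
```

With a fixed `seed` the same points are drawn on every run, which keeps the patch set reproducible across band combinations.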

| Model | Bands | IoU | OA | Precision (BG) | Precision (UTC) | Recall (BG) | Recall (UTC) | F1-Score (BG) | F1-Score (UTC) | Avg P | Avg R | Avg F |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| U-Net | RGB | 0.30 | 0.96 | 0.98 | 0.46 | 0.98 | 0.46 | 0.98 | 0.46 | 0.72 | 0.72 | 0.72 |
| | CIR | 0.30 | 0.96 | 0.98 | 0.43 | 0.98 | 0.47 | 0.98 | 0.45 | 0.71 | 0.73 | 0.72 |
| | VI | 0.32 | 0.96 | 0.99 | 0.43 | 0.97 | 0.56 | 0.98 | 0.49 | 0.71 | 0.77 | 0.74 |
| | PCA | 0.02 | 0.96 | 0.98 | 0.13 | 0.99 | 0.02 | 0.98 | 0.03 | 0.56 | 0.51 | 0.51 |
| | RGBPC | 0.09 | 0.96 | 0.97 | 0.26 | 0.99 | 0.12 | 0.98 | 0.17 | 0.62 | 0.56 | 0.58 |
| DeepLab v3+ | RGB | 0.48 | 0.97 | 0.99 | 0.6 | 0.98 | 0.71 | 0.99 | 0.65 | 0.8 | 0.85 | 0.82 |
| | CIR | 0.45 | 0.97 | 0.99 | 0.59 | 0.98 | 0.66 | 0.99 | 0.62 | 0.79 | 0.82 | 0.81 |
| | VI | 0.4 | 0.96 | 0.99 | 0.49 | 0.97 | 0.68 | 0.98 | 0.57 | 0.74 | 0.83 | 0.78 |
| | PCA | 0.24 | 0.96 | 0.98 | 0.39 | 0.98 | 0.38 | 0.98 | 0.38 | 0.69 | 0.68 | 0.68 |
| | RGBPC | 0.30 | 0.95 | 0.99 | 0.37 | 0.96 | 0.61 | 0.97 | 0.46 | 0.68 | 0.79 | 0.72 |
| SegFormer | RGB | 0.54 | 0.98 | 0.99 | 0.62 | 0.98 | 0.8 | 0.99 | 0.7 | 0.81 | 0.89 | 0.85 |
| | CIR | 0.48 | 0.97 | 0.99 | 0.53 | 0.97 | 0.83 | 0.98 | 0.65 | 0.76 | 0.9 | 0.82 |
| | VI | 0.51 | 0.97 | 0.99 | 0.62 | 0.98 | 0.73 | 0.99 | 0.67 | 0.81 | 0.86 | 0.83 |
| | PCA | 0.24 | 0.94 | 0.98 | 0.30 | 0.96 | 0.52 | 0.97 | 0.38 | 0.64 | 0.74 | 0.68 |
| | RGBPC | 0.45 | 0.97 | 0.99 | 0.53 | 0.98 | 0.74 | 0.98 | 0.62 | 0.76 | 0.86 | 0.80 |
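The metrics in the table above can be computed from a predicted and a reference binary mask as sketched below. The reported values are internally consistent with this reading: each Avg column is the unweighted mean of the BG and UTC values (e.g., SegFormer/RGB precision (0.99 + 0.62)/2 ≈ 0.81), and the IoU column matches the UTC-class IoU, since IoU = F1/(2 - F1) gives 0.70/1.30 ≈ 0.54. The function `segmentation_metrics` is hypothetical, and it assumes pixels are pooled over the whole test set rather than averaged per patch, which the source does not specify.

```python
import numpy as np


def segmentation_metrics(pred, truth):
    """Per-class and macro-averaged scores for binary masks (1 = UTC, 0 = BG)."""
    pred = np.asarray(pred).astype(bool).ravel()
    truth = np.asarray(truth).astype(bool).ravel()
    tp = np.sum(pred & truth)
    fp = np.sum(pred & ~truth)
    fn = np.sum(~pred & truth)
    tn = np.sum(~pred & ~truth)

    def prf(tp_, fp_, fn_):
        # Precision, recall and F1 with 0 returned for empty denominators
        p = tp_ / (tp_ + fp_) if tp_ + fp_ else 0.0
        r = tp_ / (tp_ + fn_) if tp_ + fn_ else 0.0
        f = 2 * p * r / (p + r) if p + r else 0.0
        return p, r, f

    p_utc, r_utc, f_utc = prf(tp, fp, fn)
    # For the background class, the roles of positives and negatives swap
    p_bg, r_bg, f_bg = prf(tn, fn, fp)
    return {
        "IoU": tp / (tp + fp + fn) if tp + fp + fn else 0.0,  # UTC-class IoU
        "OA": (tp + tn) / pred.size,
        "Precision": (p_bg, p_utc),
        "Recall": (r_bg, r_utc),
        "F1": (f_bg, f_utc),
        "Avg P": (p_bg + p_utc) / 2,
        "Avg R": (r_bg + r_utc) / 2,
        "Avg F": (f_bg + f_utc) / 2,
    }
```

Because background pixels dominate each patch, OA and the BG scores stay near 0.96 to 0.99 for every model, which is why the UTC-class IoU and F1 are the more discriminating columns.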

| | Recall | Precision | F1-Score | IoU | Relative Change in IoU (%) | Relative Change in F1-Score (%) |
|---|---|---|---|---|---|---|
| Intersection | 0.95 | 0.23 | 0.37 | 0.23 | -57.41 | -47.14 |
| Union | 0.57 | 0.73 | 0.64 | 0.46 | -14.81 | -8.57 |
| Median | 0.9 | 0.57 | 0.7 | 0.54 | 0 | 0 |
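The relative-change columns above are consistent with percentage change measured against the Median row: (0.23 - 0.54)/0.54 × 100 ≈ -57.41 for IoU and (0.37 - 0.70)/0.70 × 100 ≈ -47.14 for F1. The sketch below shows one way the three reference masks might be formed, assuming the Intersection, Union, and Median rows come from a pixel-wise combination of several annotators' binary masks; the helper names are hypothetical, and counting a 50/50 tie as UTC under the median is an assumption, since the source does not describe tie handling.

```python
import numpy as np


def consensus_masks(masks):
    """Combine binary annotator masks of shape (n_annotators, H, W)."""
    stack = np.asarray(masks).astype(bool)
    intersection = stack.all(axis=0)  # pixel marked by every annotator
    union = stack.any(axis=0)         # pixel marked by at least one annotator
    # Pixel-wise median; with an even number of annotators a tie counts as UTC
    median = np.median(stack.astype(float), axis=0) >= 0.5
    return intersection, union, median


def relative_change(value, reference):
    """Percentage change of value relative to reference."""
    return 100.0 * (value - reference) / reference
```

For example, `round(relative_change(0.46, 0.54), 2)` reproduces the -14.81 reported for the Union IoU.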

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).