1. Introduction

Monitoring bridge structures is a vital aspect of bridge engineering and is essential for ensuring the safe operation of bridges. Structural displacement is a key monitoring indicator, as it can be used to derive the static and dynamic characteristics of bridges, such as deflection and modal parameters. These data are crucial for the safety assessment and early warning of bridge structures [1].

Various technologies are currently employed to monitor the structural displacements of bridges, including the Global Navigation Satellite System (GNSS) [2,3,4], Linear Variable Differential Transformers (LVDTs) [5], strain gauges [6], Image-Assisted Total Stations (IATS) [7,8], and microwave radar interferometers [9]. However, each of these technologies has limitations in bridge monitoring. For instance, GNSS lacks the accuracy needed to measure the dynamic responses of bridge structures; LVDTs are constrained by measurement distance; and installing strain gauges is time-consuming and labor-intensive. Robotic total stations are widely used because of their high accuracy and non-contact operation, and some researchers have combined them with image sensors to create IATS, enabling precise multi-point displacement monitoring [8]. Microwave radar interferometers, which offer high measurement accuracy and can operate under various weather conditions, have also gained considerable attention in recent years. Nevertheless, both IATS and microwave radar interferometers are relatively expensive, which hinders their widespread adoption for bridge monitoring.

With advances in computer vision, vision-based measurement systems have become increasingly prevalent for structural displacement measurement owing to their non-contact operation, high dynamic response, high precision, and ability to perform real-time multi-point measurements [10,11,12,13,14,15]. To achieve high-precision displacement measurement of bridge structures with vision sensors, researchers have proposed various methods with promising results. For instance, Lee et al. [12] developed a vision-based dynamic displacement measurement system using artificial targets and cameras that enabled real-time displacement quantification. Feng et al. [13] achieved high-precision measurements on railway bridges by combining template matching with artificial targets.

To further extend the applicability of vision-based measurement, vision measurement systems with cameras mounted on Unmanned Aerial Vehicles (UAVs) have become a research hotspot in recent years [19,20]. A UAV platform allows the camera to observe deformable structures at short range, which improves measurement accuracy. However, the overall accuracy of existing UAV vision measurement systems remains low for two main reasons: first, the significant jitter of the UAV platform degrades measurement accuracy; and second, the cameras installed on current UAVs perform inadequately, producing low-quality images at low frame rates. Existing efforts to address UAV jitter fall mainly into three categories. (1) High-pass filtering, which separates the UAV's ego-motion from the target's dynamic displacement. For example, Hoskere et al. [21] proposed a high-pass filtering method to remove the low-frequency noise caused by the hovering vibration of the UAV, but this method is applicable only when the natural frequency of the structure is much higher than the frequency of the noise caused by UAV motion. (2) Motion correction using the UAV's built-in Inertial Measurement Unit (IMU), which estimates attitude changes to correct camera motion. Riveiro et al. [22] applied an IMU-based system to mitigate UAV-induced measurement errors. Nevertheless, the attitude estimation accuracy of onboard IMUs is generally insufficient for millimeter-level positioning, which limits the broader applicability of this strategy. (3) Camera motion correction based on fixed reference points, which is currently the most widely adopted approach. For instance, Weng et al. [23] employed a homography-based perspective transformation using reference targets and validated their method on an elevator tower. However, a homography describes a planar projective relationship and becomes inapplicable when the reference targets do not lie on a common plane. To address these limitations, this paper proposes a motion correction framework for UAV-borne cameras based on an analysis of the 3D motion characteristics of the camera. In addition, the hardware system is reconfigured by integrating a UAV-mounted camera, a computing terminal, and a UAV. This work aims to provide a novel solution for high-precision monitoring of bridge displacement.

The remainder of this paper is organized as follows. Section 2 details the materials and methods, including the UAV vision-based measurement system and the camera motion correction method. Section 3 presents the experiments, covering method validation and the monitoring of the Lunzhou Bridge. Section 4 discusses the findings. Finally, Section 5 concludes the paper.

2. Materials and Methods

2.1. UAV Vision-Based Measurement System

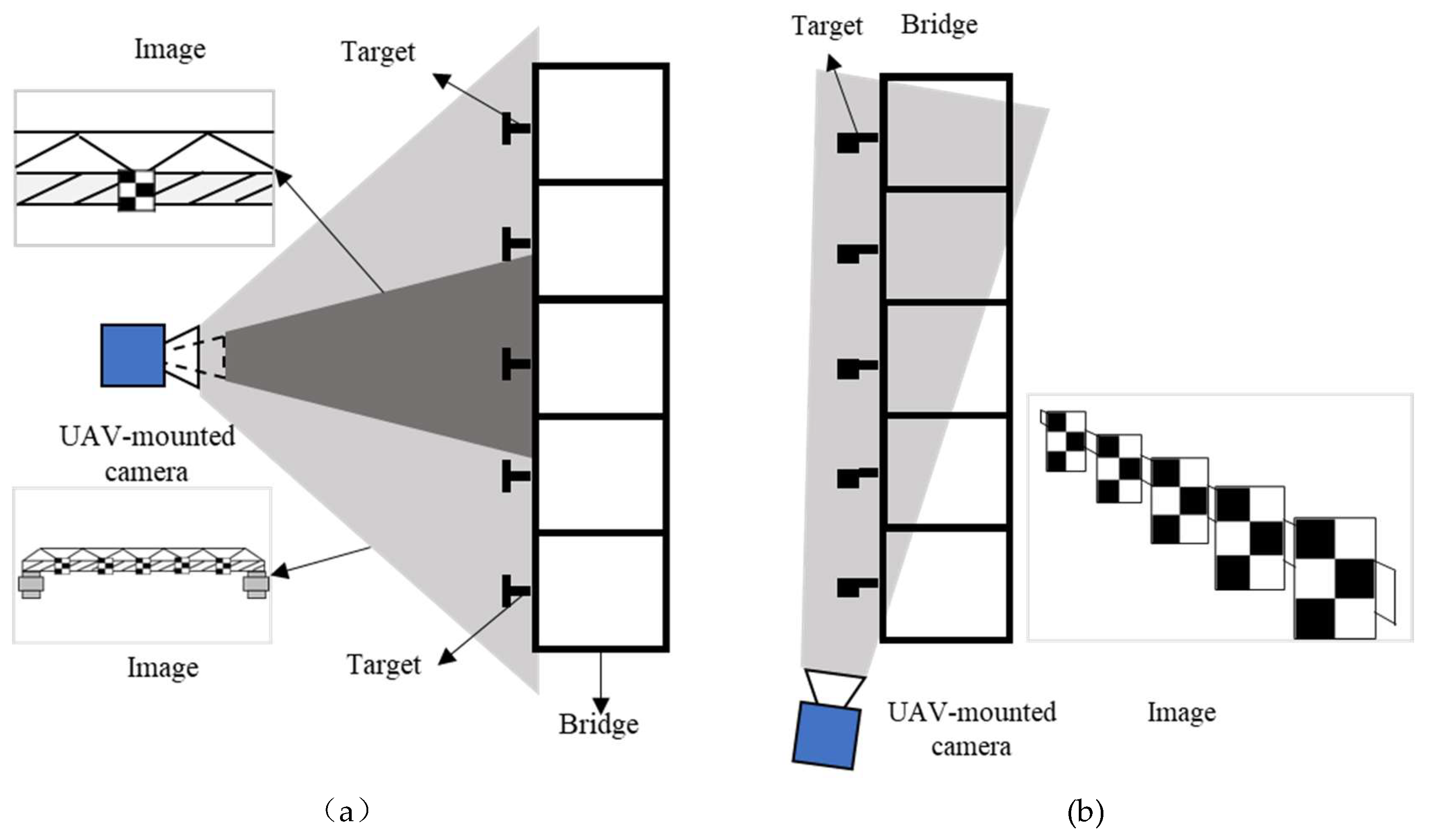

There are two primary configurations for applying UAV vision-based measurement to multi-point displacement monitoring of bridges, as illustrated in Figure 1. Figure 1(a) shows the transverse shooting configuration, in which the vision sensor is positioned to the side of the bridge and observes all targets laterally. However, this configuration requires reducing the camera magnification to capture multiple targets simultaneously, which lowers the resolution of the targets in the images and compromises measurement accuracy. In contrast, Figure 1(b) shows the longitudinal shooting configuration, in which the vision sensor is deployed at one end of the bridge. With proper angle adjustment, all targets remain observable in this arrangement, which is more conducive to multi-point displacement measurement along the bridge.

It should be noted that in the configuration shown in

Figure 1(b), limitations in the camera’s depth of field may result in blurred target imaging. Therefore, to ensure effective measurements, the UAV generally needs to maintain a considerable distance from the targets to achieve clear imaging. Based on the configuration illustrated in

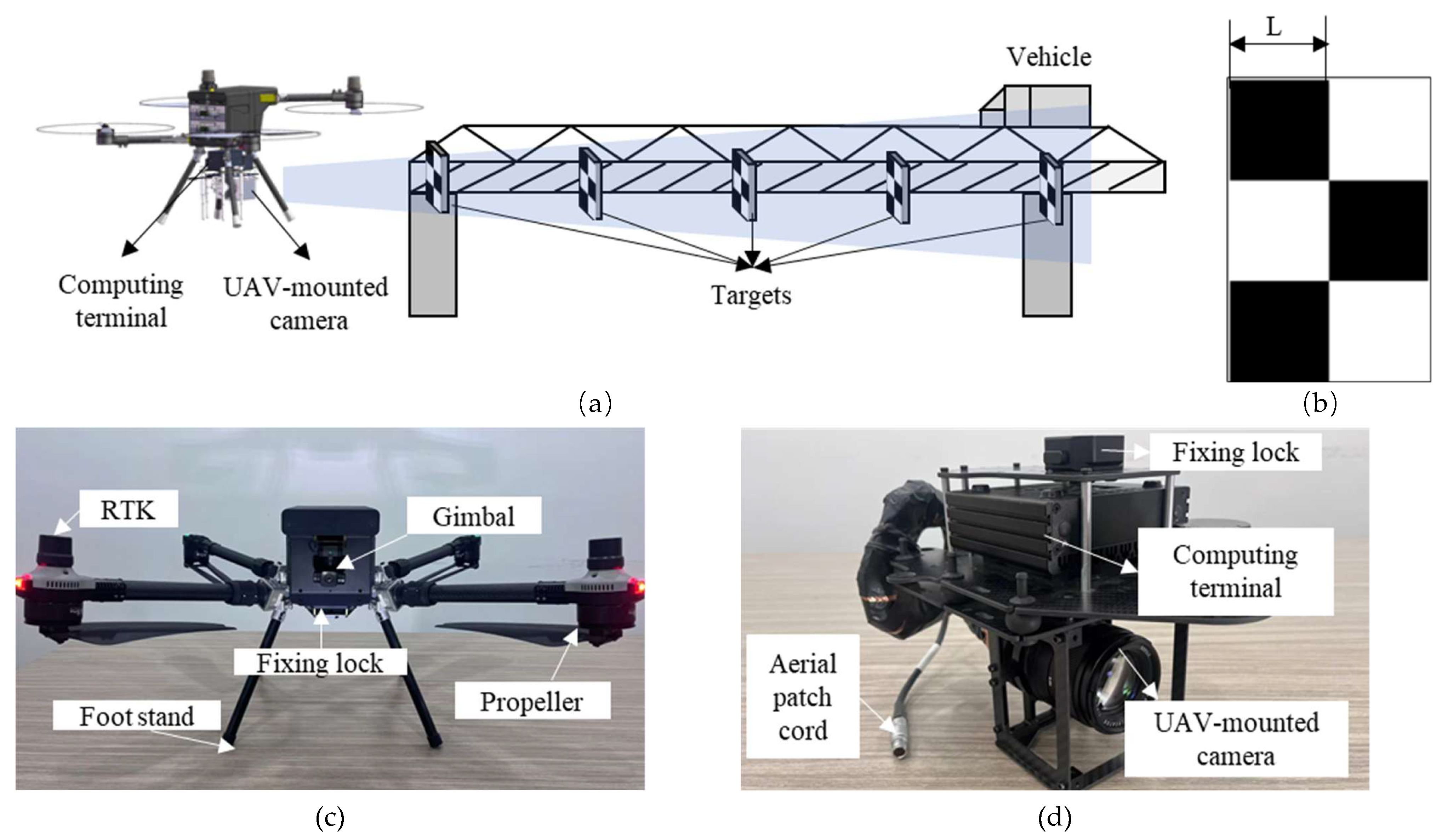

Figure 1(b), this paper presents a low-cost, high-precision monitoring system based on UAV vision to measure multi-point displacement of bridges. The system comprises a UAV-mounted camera, a displacement calculation terminal, and targets, as illustrated in

Figure 2.

Figure 2(a) illustrates the overall configuration of the monitoring system, Figure 2(b) shows a target, Figure 2(c) shows the UAV, and Figure 2(d) shows the integrated computing terminal and camera assembly secured by fastening locks. The system employs a high-performance industrial camera as the image acquisition unit, coupled with a high-capacity computing terminal, to achieve high-frequency, high-precision displacement data acquisition. Both power supply and communication are provided by the UAV's built-in battery and router, ensuring operational stability throughout the measurement process. The detailed specifications of the UAV vision-based measurement system are provided in Table 1.

Similar to monocular vision-based displacement measurement, the UAV vision-based method mainly consists of three steps. First, the image coordinates of the targets are identified; to improve the stability of cross-shaped marker positioning, this paper employs the highly robust detection and positioning technique proposed by Xing [24]. Second, the pixel displacement of each monitored target is calculated as the change in the image coordinates of the target center across the image sequence. Finally, the pixel displacement is converted into actual displacement using a scale factor. Assuming that both the imaging plane of the camera and the monitored target plane are perpendicular to the horizontal plane, the scale factor is calculated as:

$$SF = SF_y = SF_x = \frac{D}{d} = \frac{Z}{f} \tag{1}$$

where $SF_y$ and $SF_x$ are the vertical and horizontal scale factors, respectively; $D$ is a known actual distance and $d$ is the corresponding pixel distance; $Z$ is the actual distance between the focal plane and the measured target; and $f$ is the focal length of the lens, expressed in pixels. The actual displacement is then obtained as:

$$S = SF \cdot s \tag{2}$$

where $S$ is the actual displacement and $s$ is the pixel displacement.
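As a minimal illustration of the scale-factor conversion described above, the following Python sketch computes a scale factor either from a target feature of known size (known distance divided by pixel distance) or from the pinhole relation (target distance divided by a focal length expressed in pixels), and then converts a pixel displacement to millimetres. The function names and all numbers are hypothetical and are not taken from the system in Table 1.

```python
def scale_factor_from_target(known_mm: float, pixel_dist: float) -> float:
    """Scale factor (mm/px) from a target feature of known size: D / d."""
    if pixel_dist <= 0:
        raise ValueError("pixel distance must be positive")
    return known_mm / pixel_dist


def scale_factor_from_geometry(distance_mm: float, focal_px: float) -> float:
    """Scale factor (mm/px) from the pinhole model: Z / f, with f in pixels."""
    return distance_mm / focal_px


def pixel_to_mm(pixel_disp: float, sf: float) -> float:
    """Actual displacement (mm) = scale factor (mm/px) * pixel displacement (px)."""
    return sf * pixel_disp


# Hypothetical numbers: a 100 mm target edge spans 40 px, giving 2.5 mm/px.
sf = scale_factor_from_target(100.0, 40.0)
print(pixel_to_mm(2.5, sf))                             # 6.25 (mm)
print(scale_factor_from_geometry(75_000.0, 30_000.0))   # 2.5 (mm/px)
```

The two routes agree only if the target distance and focal length are known consistently; in practice the target-based route avoids needing either.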

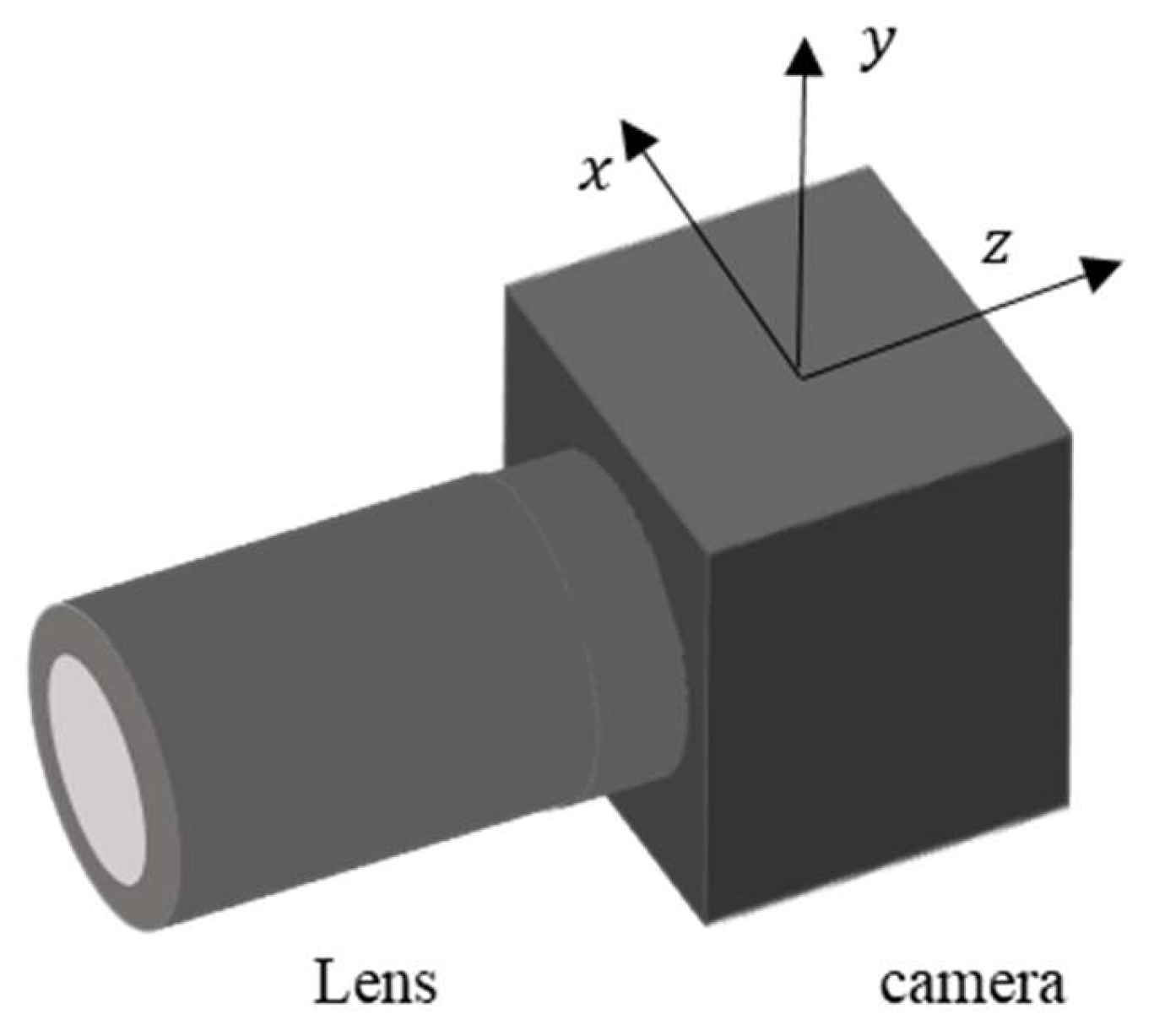

2.2. Camera Motion Correction Method

When a UAV is used for bridge monitoring, camera instability caused by UAV jitter degrades the accuracy of the displacement monitoring results. Therefore, camera motion must be compensated in the UAV vision-based measurement system. The unstable motion of the camera on the UAV platform is decomposed into rotations about and translations along the x-, y-, and z-axes, as illustrated in Figure 3. This camera motion changes the coordinates of image points on the image plane, producing camera motion errors. These errors are classified by motion direction into x-axis rotation, x-axis translation, y-axis rotation, y-axis translation, z-axis rotation, and z-axis translation errors. Among these, the camera motions that change the x-coordinates of image points are translation along the x-axis, rotation about the y-axis, rotation about the z-axis, and translation along the z-axis. In contrast, the camera motions that change the y-coordinates of image points are rotation about the x-axis, translation along the y-axis, rotation about the z-axis, and translation along the z-axis.

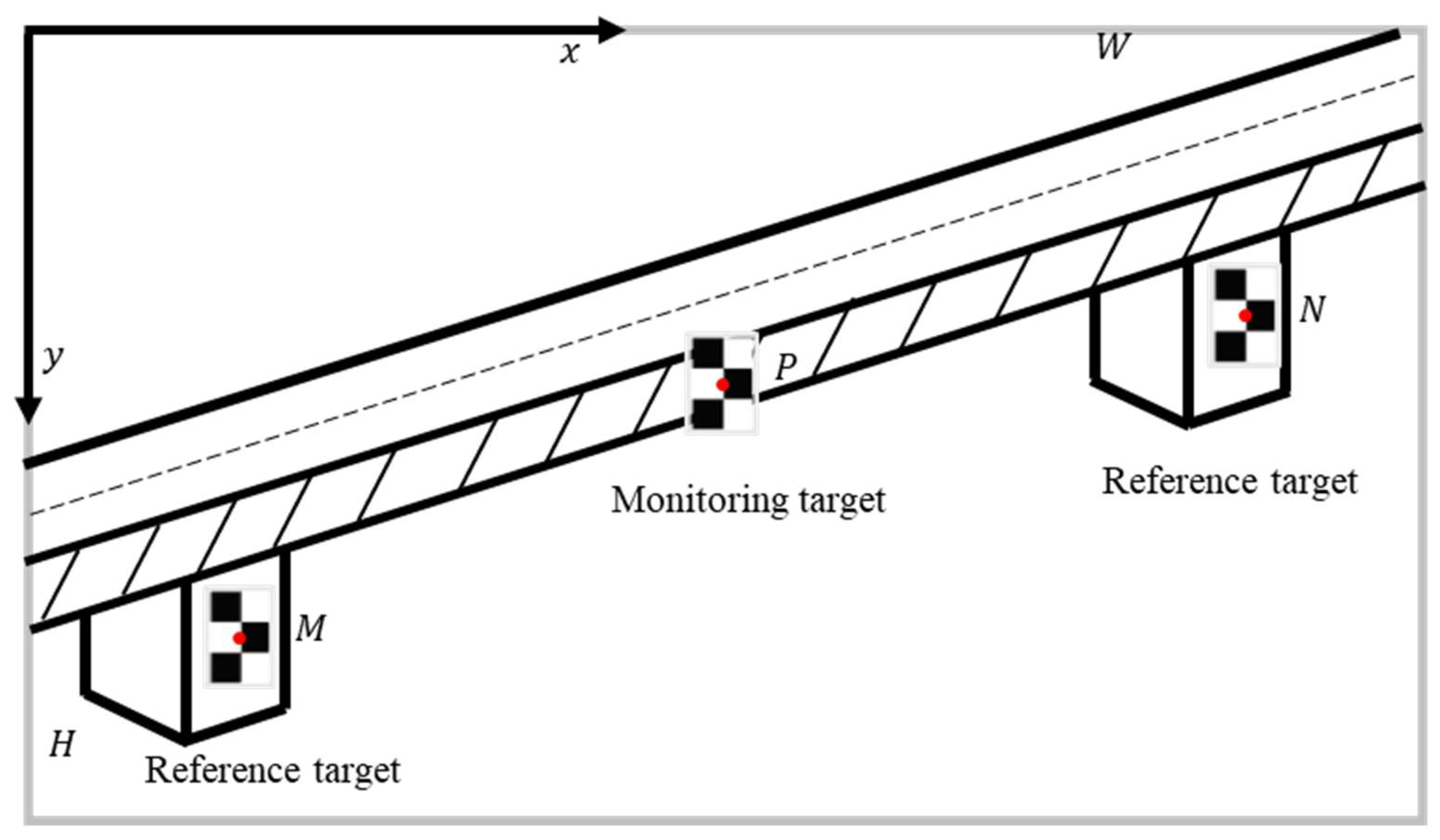

During real-time monitoring, a measurement model is established that distinguishes two reference targets and one monitoring target on the image plane. Each target features a black-and-white checkerboard pattern of 3 rows and 2 columns with two corner points, as depicted in Figure 4. The midpoints between the two corner points of the reference targets are labeled N and M, and their image points are n and m, respectively. The midpoint between the two corner points of the monitoring target is designated P, with image point p. The image plane has dimensions W×H, and its center is at (W/2, H/2). The displacements of points N, M, and P are computed using Equations (3), (4), and (5).

In these equations, the uncorrected displacements of point P in the x- and y-directions are expressed in terms of its true displacements and the camera motion errors; because the reference targets are assumed to be stationary, the displacements measured at N and M consist of camera motion errors only. The terms in the equations are: the camera motion errors of reference points N and M along the x- and y-axes; the horizontal (x-direction) and vertical (y-direction) scale factors of points N, M, and P; the y-axis translational motion errors of N, M, and P; the x-axis rotational motion errors of N, M, and P; the horizontal and vertical components of the z-axis rotational motion errors of N, M, and P; and the horizontal and vertical components of the z-axis translational motion errors of N, M, and P.

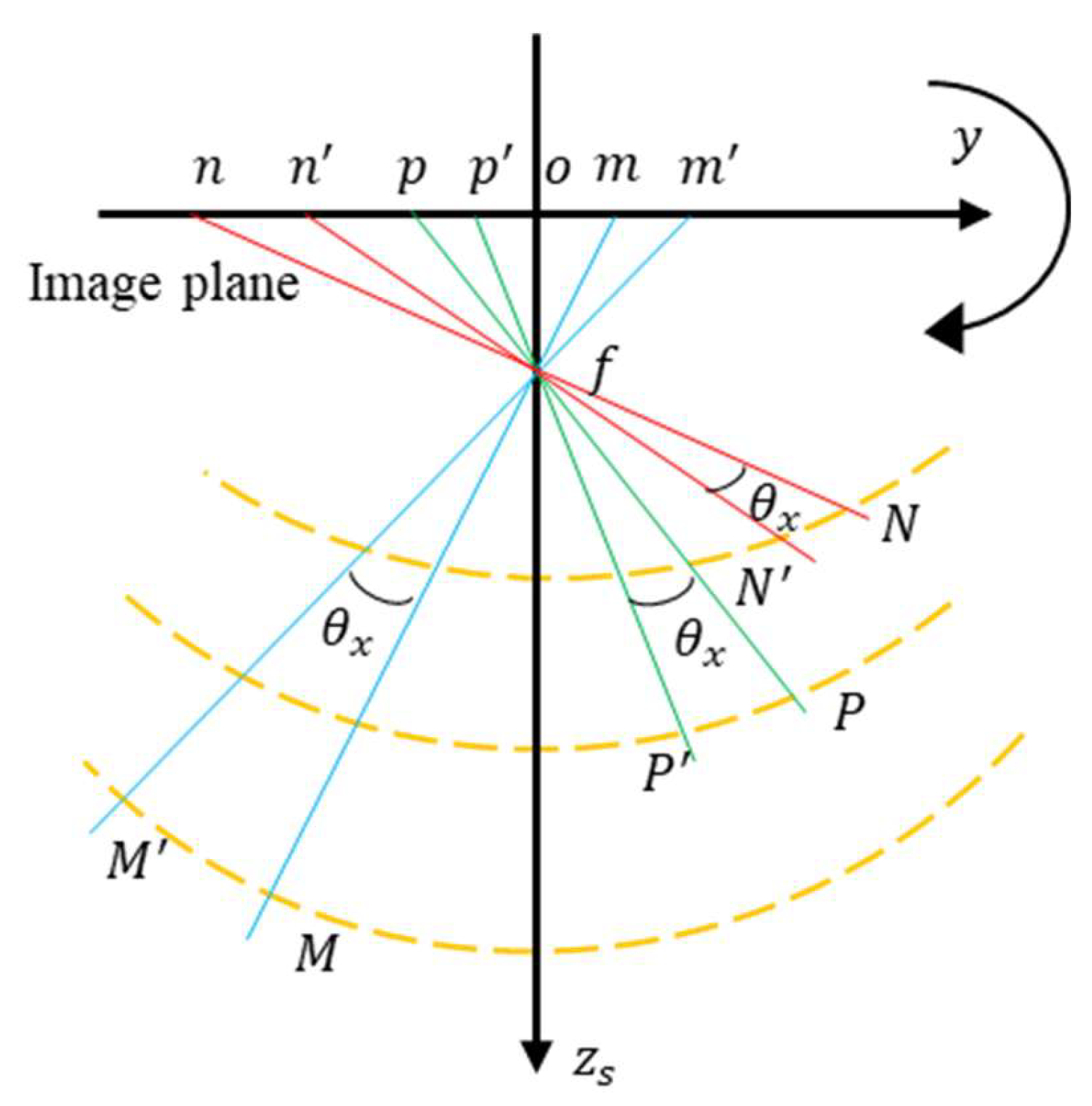

2.2.1. Camera Rotational Motion Around the x-axis and y-axis

Because the image plane is parallel to the lens plane, the error law for camera rotation about the x-axis is analogous to that for rotation about the y-axis. Taking rotation about the x-axis as an example, this rotation introduces an error in the vertical y-direction. In Figure 5, the x-axis is perpendicular to the page and points inward. Rotating points N, M, and P about the x-axis by the rotation angle (equivalent to the camera rotation) yields their rotated positions. Here, 'o' denotes the image center and 'f' the focal length. The image points of N, M, and P before the rotation are n, m, and p, and Figure 5 also marks their image points after the rotation.

Because the rotation angle is small, the x-axis rotational motion errors of points N, M, and P can be calculated from the geometric relationships in Figure 5 using Equation (6). The quantities in Equation (6) associated with image points n, m, and p are obtained from Equation (7), in which the coordinates are expressed in pixels. Comparing the rotational motion errors of points N, M, and P yields Equation (8). Similarly, Equation (9) is derived for the camera's rotation about the y-axis.
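The behavior behind Equations (6) to (8) can be sketched with a simple pinhole model; this is our own first-order derivation and may differ in form from the paper's equations. A point imaged y pixels from the image center lies on a line of sight at angle atan(y/f) to the optical axis (f in pixels), so a small pitch θ shifts its image by f[tan(atan(y/f) + θ) − tan(atan(y/f))] ≈ θ(f² + y²)/f. The functions, sign convention, and numbers below are hypothetical.

```python
import math


def rotation_error_exact(y_px: float, f_px: float, theta: float) -> float:
    """Image y-shift (px) of a point at y_px from the image centre when the
    camera pitches by theta radians about its x-axis (pinhole model)."""
    alpha = math.atan2(y_px, f_px)  # angle between line of sight and optical axis
    return f_px * (math.tan(alpha + theta) - math.tan(alpha))


def rotation_error_small_angle(y_px: float, f_px: float, theta: float) -> float:
    """First-order approximation: theta * (f^2 + y^2) / f."""
    return theta * (f_px ** 2 + y_px ** 2) / f_px


def rotation_error_ratio(y_a: float, y_b: float, f_px: float) -> float:
    """Ratio of the rotation errors of two image points (independent of theta)."""
    return (f_px ** 2 + y_a ** 2) / (f_px ** 2 + y_b ** 2)


f, theta = 20_000.0, 1e-4   # hypothetical focal length (px) and pitch angle (rad)
y_n, y_m = -800.0, 300.0    # hypothetical image y-coordinates of n and m (px)
e_n = rotation_error_exact(y_n, f, theta)
e_m = rotation_error_exact(y_m, f, theta)
print(e_n, rotation_error_small_angle(y_n, f, theta))  # nearly identical
print(e_m / e_n, rotation_error_ratio(y_m, y_n, f))    # nearly identical
```

Because |y| is much smaller than f at long range, the ratio is close to 1 (about 0.9986 here), so in this model rotation errors are nearly uniform across targets in pixel units.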

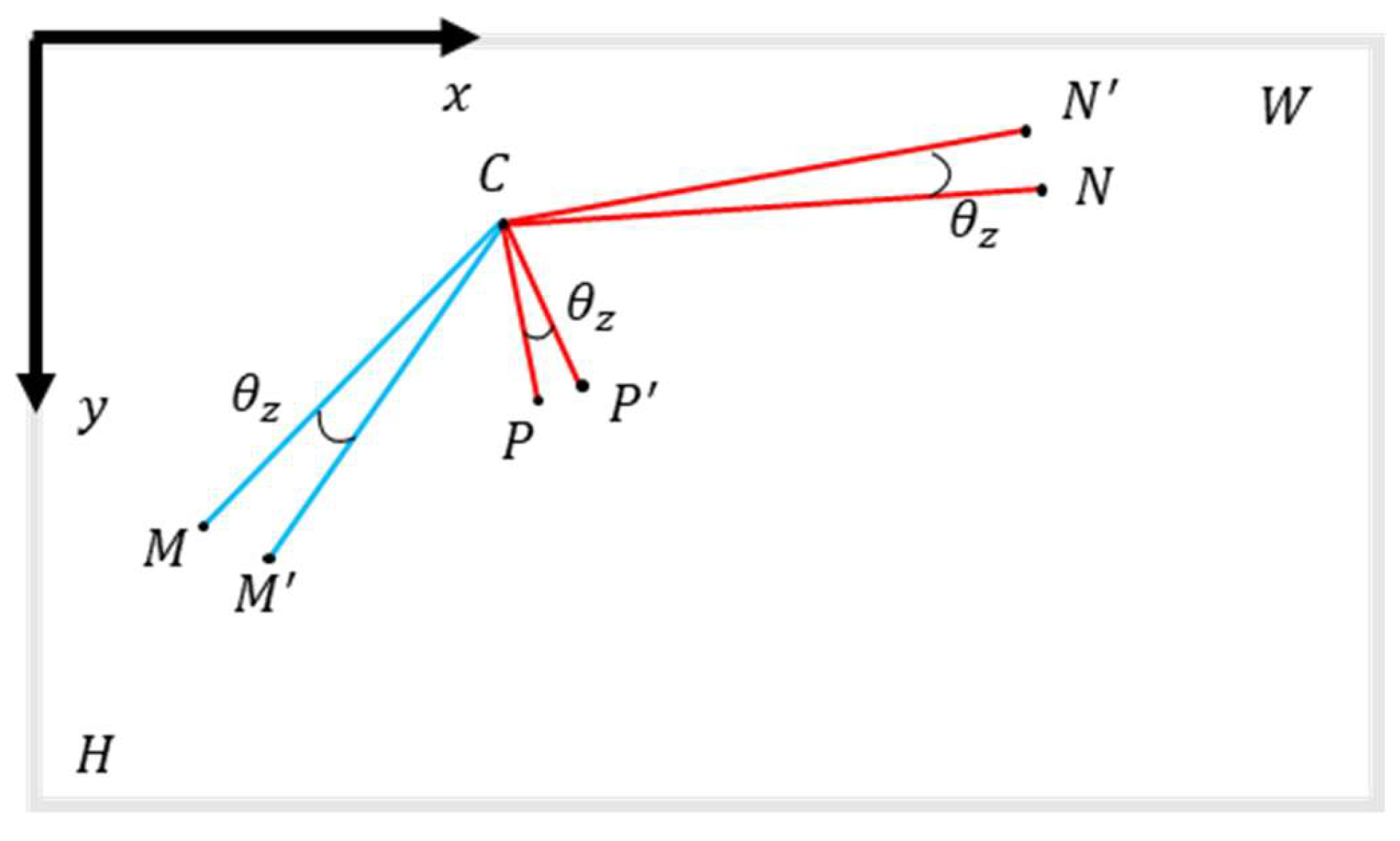

2.2.2. Camera Rotation Around the z-axis

Rotation of the camera about the z-axis introduces displacement errors in both the x- and y-directions. Because the resulting image point errors lie entirely within the image plane, the analysis can be carried out in that plane. As shown in Figure 6, the center of rotation lies in the image plane. After rotation about the z-axis by the rotation angle, the spatial points N, M, and P move to new positions, and Figure 6 marks their corresponding image points before and after the rotation.

For a counterclockwise rotation, Equation (10) follows from the geometric relationships. The image point displacements of points N, M, and P are given by Equation (11). Subtracting the first term of Equation (11) from the second yields Equation (12). Similarly, subtracting the first term of Equation (11) from the third yields Equation (13). Likewise, for a clockwise rotation, Equations (14) and (15) follow from the geometric relationships. According to Equations (12) to (15), the differences in image point displacements between measurement points caused by z-axis rotation are independent of the center of rotation.
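The independence from the rotation center can be checked numerically. In a y-up image frame, rotating a point q about a center c by γ moves it by (R(γ) − I)(q − c), so the difference between the displacements of two points is (R(γ) − I)(q₂ − q₁), in which c cancels. The sketch below (hypothetical coordinates and function names, NumPy assumed) rotates n, m, and p about two very different centers and compares the displacement differences.

```python
import numpy as np


def rotate_about(points: np.ndarray, centre: np.ndarray, gamma: float) -> np.ndarray:
    """Rotate 2-D points (rows of an N x 2 array) about `centre` by gamma rad
    (counterclockwise in a y-up frame)."""
    c, s = np.cos(gamma), np.sin(gamma)
    rot = np.array([[c, -s], [s, c]])
    return centre + (points - centre) @ rot.T


def displacement_differences(points: np.ndarray, centre: np.ndarray, gamma: float) -> np.ndarray:
    """Displacements of rows 1.. minus the displacement of row 0 (point n)."""
    disp = rotate_about(points, centre, gamma) - points
    return disp[1:] - disp[0]


# Hypothetical image points n, m, p (px) and a small rotation angle (rad).
pts = np.array([[400.0, 1500.0], [3600.0, 1450.0], [2000.0, 1480.0]])
gamma = 3e-4
d1 = displacement_differences(pts, np.array([2048.0, 1500.0]), gamma)
d2 = displacement_differences(pts, np.array([-500.0, 9000.0]), gamma)
print(np.allclose(d1, d2))  # True: the rotation centre cancels out
```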

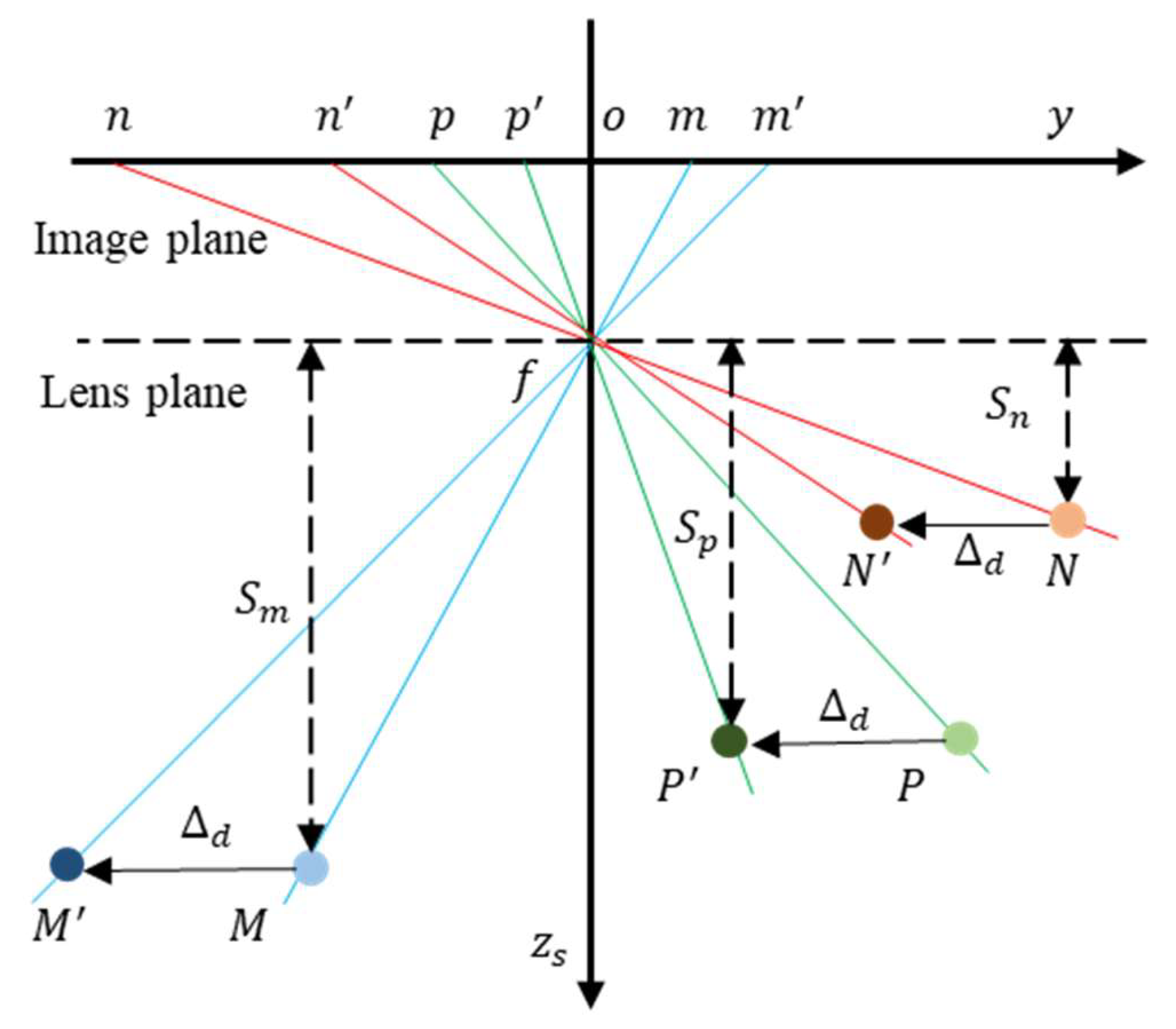

2.2.3. Camera Translational Motion Along the x-axis and y-axis

Camera translation is analyzed here taking the y-axis as an example. As illustrated in Figure 7, translation of the camera along this axis affects only the y-coordinates of the measurement points. After a translation along the y-axis, the spatial points N, M, and P move to new positions relative to the camera. Here, 'o' denotes the image center, and f is the focal length measured along the principal optical axis of the lens. Figure 7 marks the image points of the spatial points before and after the translation. The y-axis translational motion errors of points N, M, and P are calculated using Equation (16).

From the geometric relationships, the ratios of the y-axis translational motion errors between reference points N and M, and between reference point N and monitoring point P, are given by Equation (17). This equation involves the actual distances from points N, M, and P to the lens plane and the vertical (y-direction) scale factors of points N, M, and P. Similarly, Equation (18) is obtained for camera translation along the x-axis.
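Equation (17) relates translation errors to target distance and scale factor. Under a pinhole model (our assumption), a camera translation t parallel to the image plane shifts the image of a point at depth Z by f·t/Z pixels, so pixel errors scale inversely with depth, while the error converted back to millimetres with SF = Z/f equals t for every point. The sketch below, with hypothetical names and values, checks both properties.

```python
import math


def translation_error_px(shift_mm: float, depth_mm: float, f_px: float) -> float:
    """Image shift (px) of a point at depth Z when the camera translates by
    shift_mm parallel to the image plane (pinhole model): f * t / Z."""
    return f_px * shift_mm / depth_mm


f = 20_000.0                    # hypothetical focal length (px)
t = 5.0                         # hypothetical camera translation along y (mm)
z_n, z_m = 30_000.0, 75_000.0   # hypothetical distances of N and M to the lens (mm)
e_n = translation_error_px(t, z_n, f)
e_m = translation_error_px(t, z_m, f)
sf_n, sf_m = z_n / f, z_m / f   # scale factors Z / f (mm/px)
print(e_n / e_m, z_m / z_n, sf_m / sf_n)  # all approximately 2.5
print(e_n * sf_n, e_m * sf_m)             # both approximately equal to t (mm)
```

In this simplified model, translation errors differ in pixel units between targets at different depths, whereas rotation errors are nearly uniform; this contrast is what makes the two error types separable with two reference targets.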

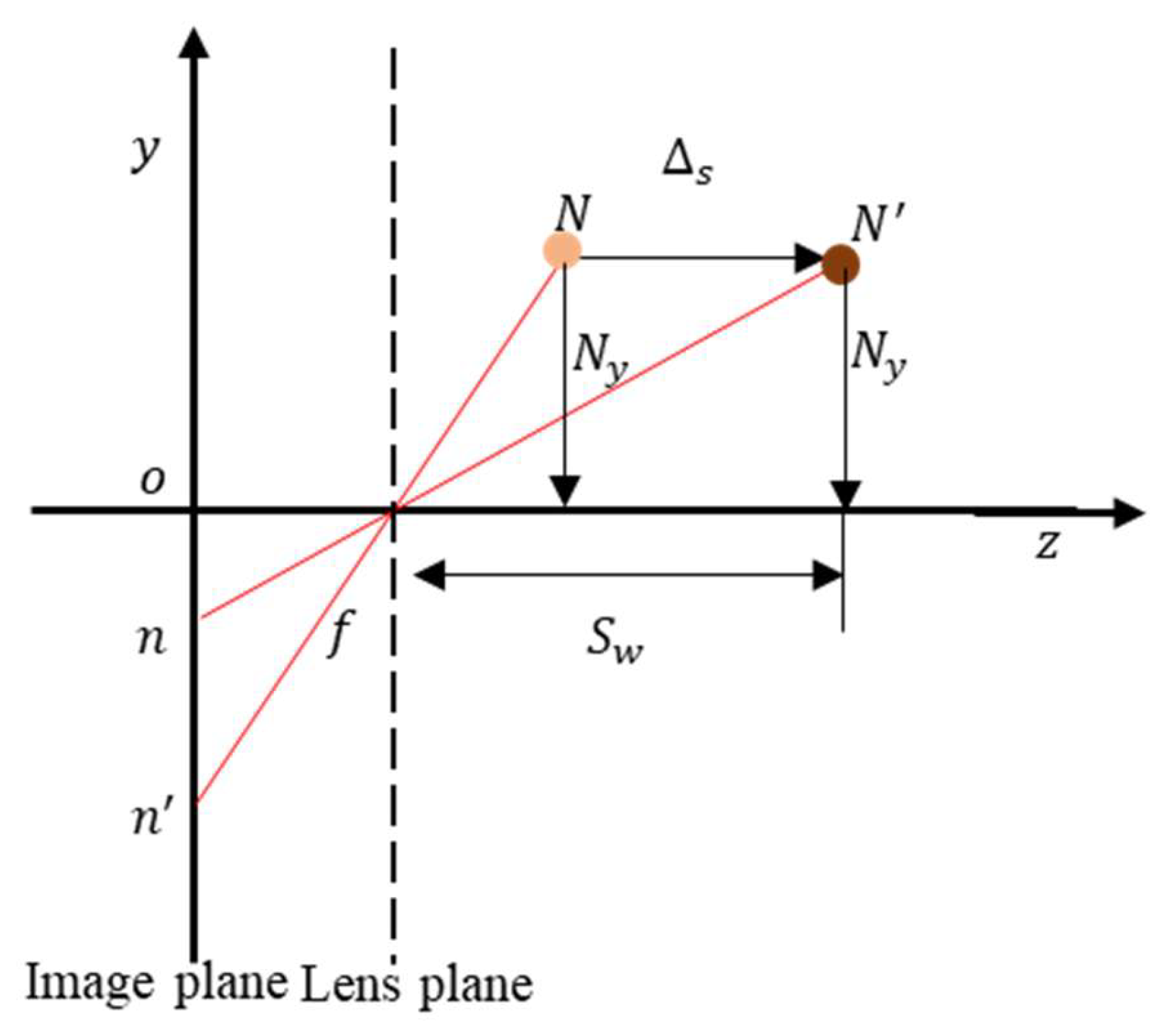

2.2.4. Camera Translation Along the z-axis

Translation of the camera along the z-axis changes the distance from each measurement point to the lens plane, which manifests as displacement errors in both the x- and y-directions on the image plane. To examine this effect, consider the change in the y-coordinate of point N caused by camera translation along the z-axis. As shown in Figure 8, the camera moves along the z-axis by a certain magnitude, and point N lies at a certain distance from the z-axis. After the translation, the spatial point N shifts to a new position, whose distance to the optical center of the lens is marked in the figure, together with the corresponding image points of N before and after the translation.

From the geometric relationships, Equation (19) is derived, which gives the y-direction displacement error of point N caused by z-axis translation. To solve Equation (19), the unit pixel displacement variation is defined by Equation (20), and rearranging it yields Equation (21). The displacement error of point N is then computed with Equation (22). Consequently, the vertical (y-direction) components of the z-axis translational motion error at points N, M, and P are given by Equation (23), and the horizontal (x-direction) components by Equation (24). In these equations, the y-direction terms use the differences between the y-coordinates of image points n, m, and p and H/2, and the x-direction terms use the differences between their x-coordinates and W/2. Defining the scale change accordingly, Equation (21) shows that it is independent of the measurement point; that is, translation along the z-axis changes the scale factors of all measurement points synchronously.
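One way to see why the scale change does not depend on the measurement point is a pinhole sketch (our assumption, not necessarily the form of Equations (19) to (24)). A point at lateral offset h and depth Z is imaged at y = f·h/Z; if the camera advances by Δz, its scale factor Z/f drops by exactly Δz/f whatever Z is, and the resulting error, expressed in millimetres with the original scale factor, is approximately y·ΔSF. Names and values below are hypothetical.

```python
import math


def z_translation_error(h_mm, depth_mm, dz_mm, f_px):
    """Pinhole sketch: a point offset h_mm from the optical axis at depth
    depth_mm; the camera advances dz_mm along z. Returns (image y before (px),
    image shift (px), scale-factor change SF_before - SF_after (mm/px))."""
    y_before = f_px * h_mm / depth_mm
    y_after = f_px * h_mm / (depth_mm - dz_mm)
    d_sf = depth_mm / f_px - (depth_mm - dz_mm) / f_px
    return y_before, y_after - y_before, d_sf


f, dz = 20_000.0, 10.0  # hypothetical focal length (px) and z-translation (mm)
for h, z in [(2_000.0, 30_000.0), (-1_500.0, 75_000.0)]:  # hypothetical points
    y, err_px, d_sf = z_translation_error(h, z, dz, f)
    err_mm = (z / f) * err_px       # error expressed with the original scale factor
    print(d_sf, err_mm, y * d_sf)   # d_sf = dz / f for both; err_mm close to y * d_sf
```

The first-order approximation degrades only by a factor of about Z/(Z − Δz), which is negligible for centimetre-level hovering drift at tens of metres.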

Based on these error patterns, this paper designs a stepwise error correction scheme: the z-axis rotation error is corrected first, followed by the z-axis translation error; finally, the errors caused by x-axis rotation and y-axis translation are resolved. Each step is detailed below.

(1) Correction of z-axis Rotation Error

In the measurement model, the two corner points of reference target N are vertically aligned, so their x-coordinates are nearly equal. Consequently, the x-direction error terms of these two points that depend on the image x-coordinate are also equal. In addition, because the two corner points lie on the same target and share the same scale factor, their remaining x-direction error terms, other than the one caused by z-axis rotation, are equal as well. Therefore, the difference between the x-displacements of the two corner points retains only the error term caused by z-axis rotation. Given that the z-axis rotation angle is small, Equation (13) can be simplified to Equation (25).

The z-axis rotation angle can then be solved from Equation (25), and Equations (26) and (27) give the error components of the z-axis rotational motion errors of points M and P. The derivation for a clockwise rotation is identical and is not repeated here.
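Step (1) can be sketched as follows, assuming a y-up image frame and a purely in-plane rotation model (our assumption; the paper's Equations (25) to (27) may be arranged differently). For the two vertically aligned corners of reference target N, the x-component of their displacement difference equals −sin γ times their vertical separation, so γ follows directly; the z-rotation error of any other point relative to N is then (R(γ) − I)(q − n). The function names, coordinates, and the simulated shared offset are hypothetical.

```python
import numpy as np


def estimate_gamma(corner_a, corner_b, disp_a, disp_b) -> float:
    """Estimate the in-plane (z-axis) rotation angle from two vertically aligned
    corners of one reference target (y-up frame).

    disp_b - disp_a = (R - I) @ (corner_b - corner_a); for a purely vertical
    separation (0, dy) its x-component is -sin(gamma) * dy. Error terms shared
    by both corners cancel in the difference."""
    dy = corner_b[1] - corner_a[1]
    ddx = disp_b[0] - disp_a[0]
    return float(np.arcsin(-ddx / dy))


def relative_rotation_error(point, ref, gamma: float) -> np.ndarray:
    """z-rotation error of `point` relative to `ref`: (R - I) @ (point - ref)."""
    c, s = np.cos(gamma), np.sin(gamma)
    r_minus_i = np.array([[c - 1.0, -s], [s, c - 1.0]])
    return r_minus_i @ (np.asarray(point, float) - np.asarray(ref, float))


# Hypothetical simulation: rotation about an arbitrary centre plus a shared offset.
gamma_true = 2e-4
centre, shared = np.array([1000.0, 700.0]), np.array([0.7, -1.2])
rot = np.array([[np.cos(gamma_true), -np.sin(gamma_true)],
                [np.sin(gamma_true), np.cos(gamma_true)]])


def simulated_disp(q):
    """Displacement of q under the simulated rotation plus the shared offset."""
    return rot @ (q - centre) + centre - q + shared


a, b = np.array([500.0, 400.0]), np.array([500.0, 460.0])  # corners of target N
gamma_hat = estimate_gamma(a, b, simulated_disp(a), simulated_disp(b))
print(gamma_hat)  # approximately 2e-4
```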

(2) Correction of z-axis Translation Error

To correct the z-axis translational motion error, the variation in unit pixel displacement must first be calculated using Equation (21). Although the scale change is theoretically identical for all measurement points, it is calculated from the nearest reference point to improve correction accuracy, because the nearest target is imaged at the highest resolution. The unit pixel displacement variations of the monitoring point are then computed from this scale change; for instance, if the scale change is calculated from target N, the unit pixel displacement variations of monitoring point P in the x- and y-directions are obtained from it. Finally, Equations (23) and (24) are used to compute the y- and x-direction z-axis translational motion error terms of monitoring point P.
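Step (2) can be sketched as follows, under our reading that the unit pixel displacement variation corresponds to the change in millimetres per pixel: the scale change is estimated from the apparent size of the nearest reference target (SF = D/d before and after), and the z-translation error of a point is approximated by its pixel offset from the image center multiplied by that change. The function names, image size, and target measurements are hypothetical.

```python
def scale_change_from_reference(known_mm: float, px_before: float, px_after: float) -> float:
    """Scale change SF_before - SF_after (mm/px) of a reference target, SF = D / d."""
    return known_mm / px_before - known_mm / px_after


def z_translation_error_mm(x_px, y_px, width, height, d_sf):
    """First-order z-translation error (mm) of an image point:
    ((x - W/2) * dSF, (y - H/2) * dSF)."""
    return (x_px - width / 2) * d_sf, (y_px - height / 2) * d_sf


# Hypothetical: a 100 mm edge of target N grows from 40.00 px to 40.02 px.
d_sf = scale_change_from_reference(100.0, 40.0, 40.02)
ex, ey = z_translation_error_mm(3100.0, 1200.0, 4096, 3000, d_sf)
print(d_sf, ex, ey)
# The corrected displacement of P is its measured displacement minus (ex, ey).
```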

(3) Correction of x- and y-axis Rotation and Translation Errors

After the z-axis rotation and translation errors are corrected, only the rotational and translational motion errors associated with the x- and y-axes remain for monitoring point P. Equations (3), (4), and (5) then simplify to Equations (28) to (30).

In these equations, the displacements of reference points N and M after correction for the z-axis rotation and translation errors are known, whereas the true displacement of monitoring point P is unknown. The displacements of reference points N and M after correction for the z-axis rotation error alone are also known, and all scale factors are treated as known values in this work. The solution proceeds as follows. First, Equations (9) and (17) are combined with the error patterns of x-axis rotation and y-axis translation at reference points N and M, and Equations (28) and (29) are solved for the four corresponding error terms of N and M, from which the corresponding error terms of P are obtained. These error terms are then substituted into Equation (30) to determine the true displacement of P. This yields the displacement of the monitoring point corrected for 3D camera motion errors.
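Step (3) reduces to a small linear system. The sketch below uses a simplified pixel-unit model of our own, not necessarily Equations (28) to (30): after the z-axis corrections, the residual vertical pixel displacement at N is a + b, where a is the x-axis rotation error and b the y-axis translation error at N; at M and P these errors are scaled by the rotation ratio (f² + y²)/(f² + y_n²) and the translation ratio Z_N/Z. Solving the two reference equations for a and b then allows the true displacement of P to be recovered. All names and values are hypothetical.

```python
import numpy as np


def solve_reference_errors(r_n, r_m, alpha_m, beta_m):
    """Solve r_N = a + b and r_M = alpha_M * a + beta_M * b for (a, b).

    a: x-axis rotation error at N (px); b: y-axis translation error at N (px).
    alpha_M: rotation-error ratio M/N; beta_M: translation-error ratio M/N."""
    A = np.array([[1.0, 1.0], [alpha_m, beta_m]])
    a, b = np.linalg.solve(A, np.array([r_n, r_m], dtype=float))
    return float(a), float(b)


def corrected_displacement(r_p, a, b, alpha_p, beta_p, sf_p):
    """Vertical displacement of P (mm) after removing its predicted errors."""
    return sf_p * (r_p - alpha_p * a - beta_p * b)


# Hypothetical geometry (px, mm) and simulated residual pixel displacements.
f = 20_000.0
y_n, y_m, y_p = -600.0, 200.0, -100.0
z_n, z_m, z_p = 30_000.0, 75_000.0, 50_000.0


def rot_ratio(y):
    """Rotation-error ratio of a point at image y relative to n."""
    return (f ** 2 + y ** 2) / (f ** 2 + y_n ** 2)


a_true, b_true, disp_p_true = 1.5, -0.8, 1.2   # px, px, mm
sf_p = z_p / f
r_n = a_true + b_true
r_m = rot_ratio(y_m) * a_true + (z_n / z_m) * b_true
r_p = disp_p_true / sf_p + rot_ratio(y_p) * a_true + (z_n / z_p) * b_true

a, b = solve_reference_errors(r_n, r_m, rot_ratio(y_m), z_n / z_m)
print(corrected_displacement(r_p, a, b, rot_ratio(y_p), z_n / z_p, sf_p))  # approximately 1.2
```

In this model the 2 × 2 system is well conditioned only when the translation ratio of M differs clearly from its rotation ratio. Because the rotation ratio is close to 1 whenever |y| is much smaller than f, the two reference targets need to lie at clearly different distances from the camera, a condition that the longitudinal configuration of Figure 1(b) naturally satisfies.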

3. Experiment

3.1. Method Validation

3.1.1. Experimental Protocol

The UAV vision-based method is well suited to scenarios in which deploying fixed monitoring equipment is difficult. To assess the reliability of the proposed method, displacement monitoring experiments were conducted with the UAV, using targets mounted on the side of a building. The instruments and equipment used in the experiment are listed in Table 2.

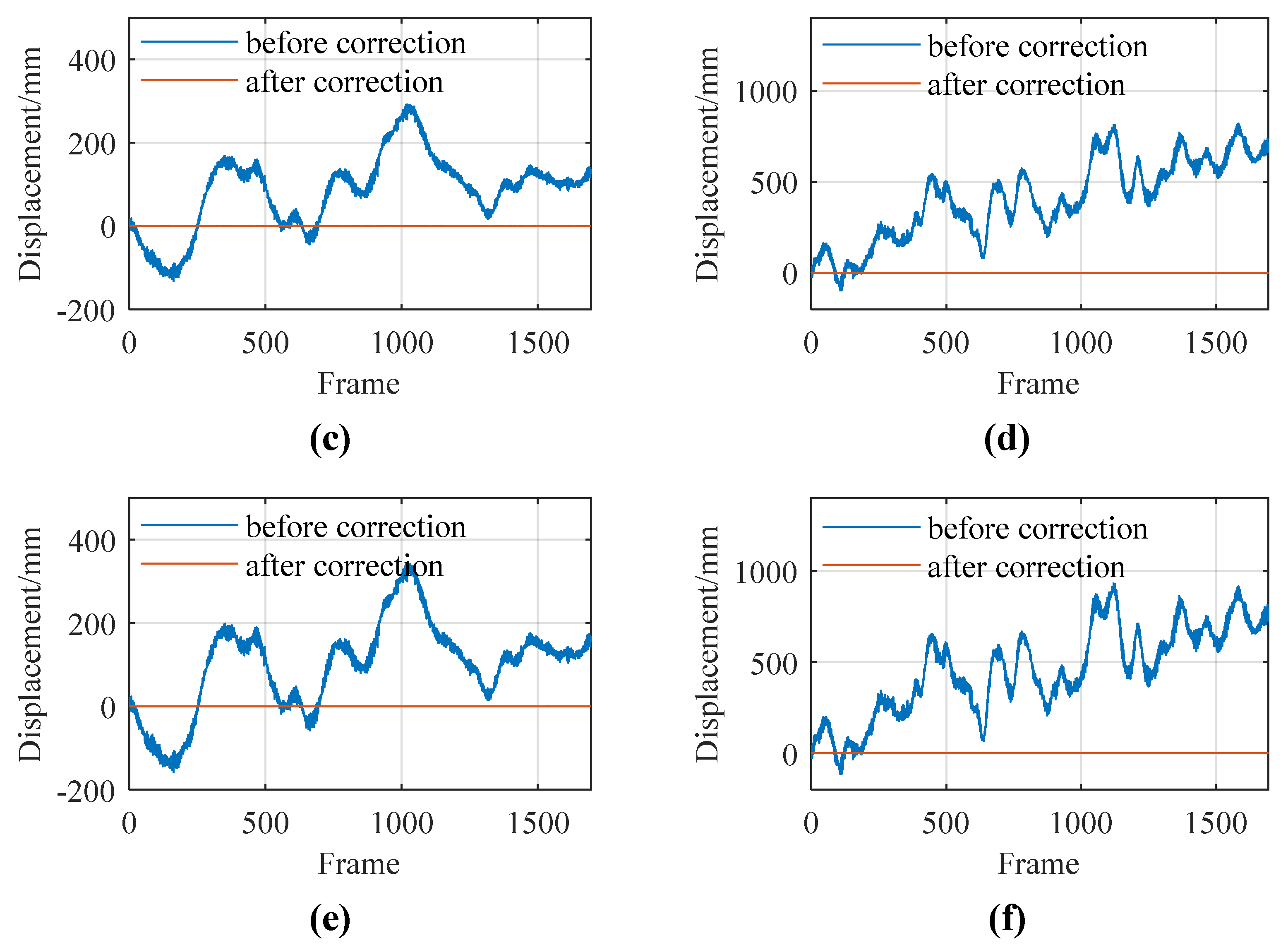

During the experiment, five targets were placed at equal intervals of 7 m along the outer side of the windowsill on the fourth floor of the building and numbered T1 to T5 from left to right. A translation slide was installed at the base of target T2 to simulate actual displacement. To obtain reference values for the displacement of target T2, a fixed camera was positioned 2 m away to monitor it synchronously.

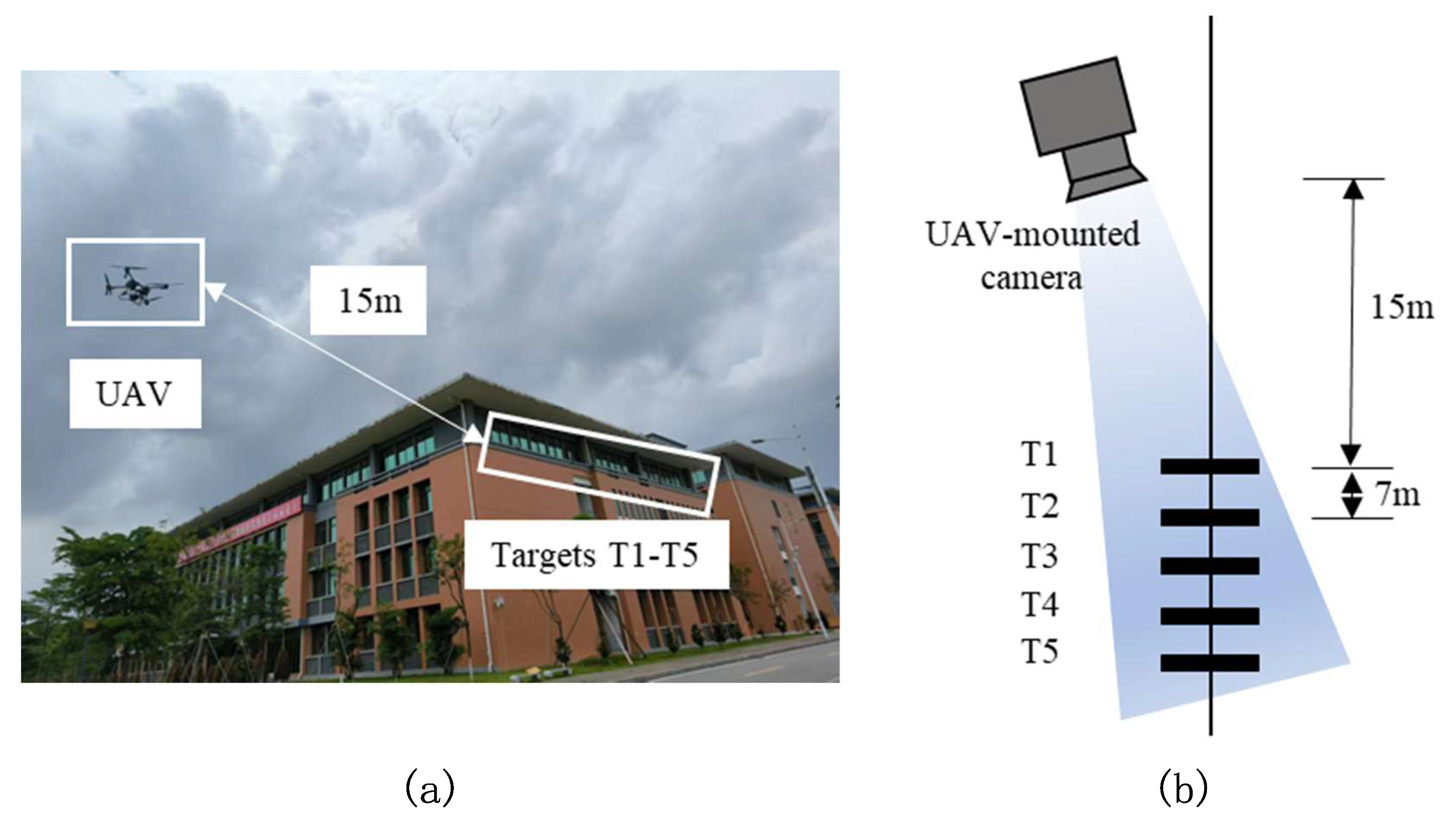

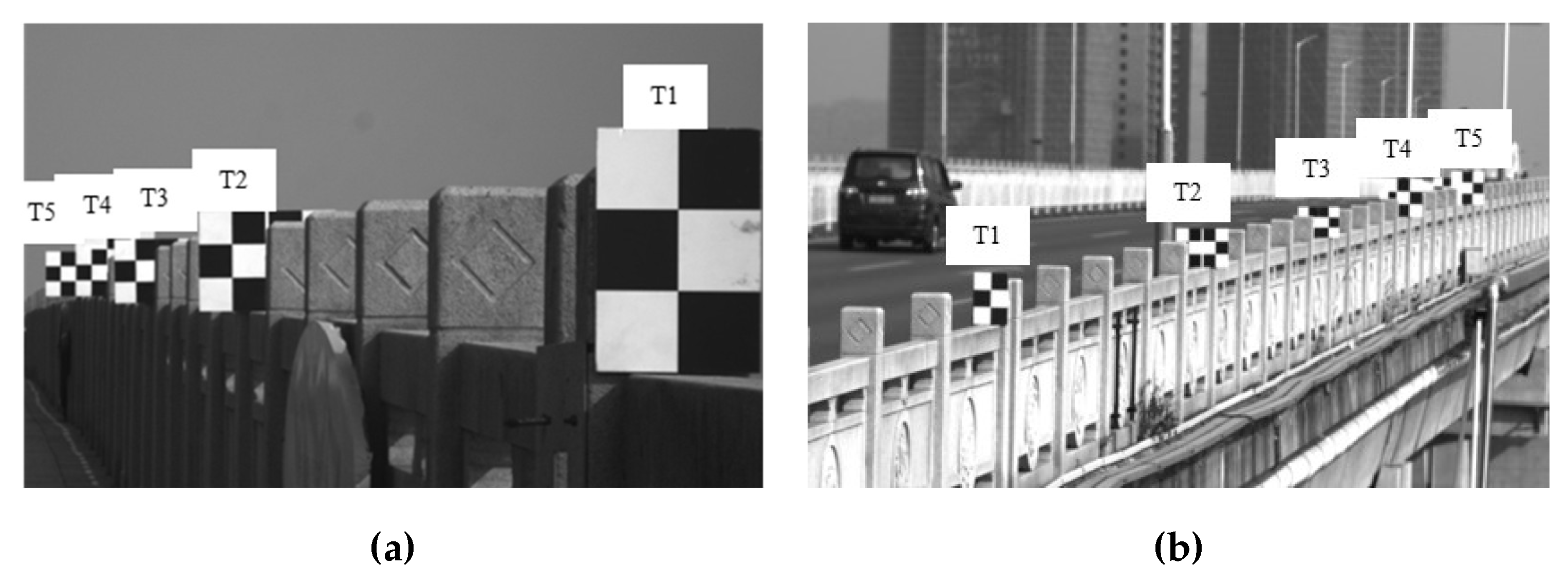

Figure 9(a) depicts the UAV monitoring scenario, Figure 9(b) illustrates the experimental setup, and Figure 9(c) shows the on-site arrangement of the targets, translation slide, and fixed camera.

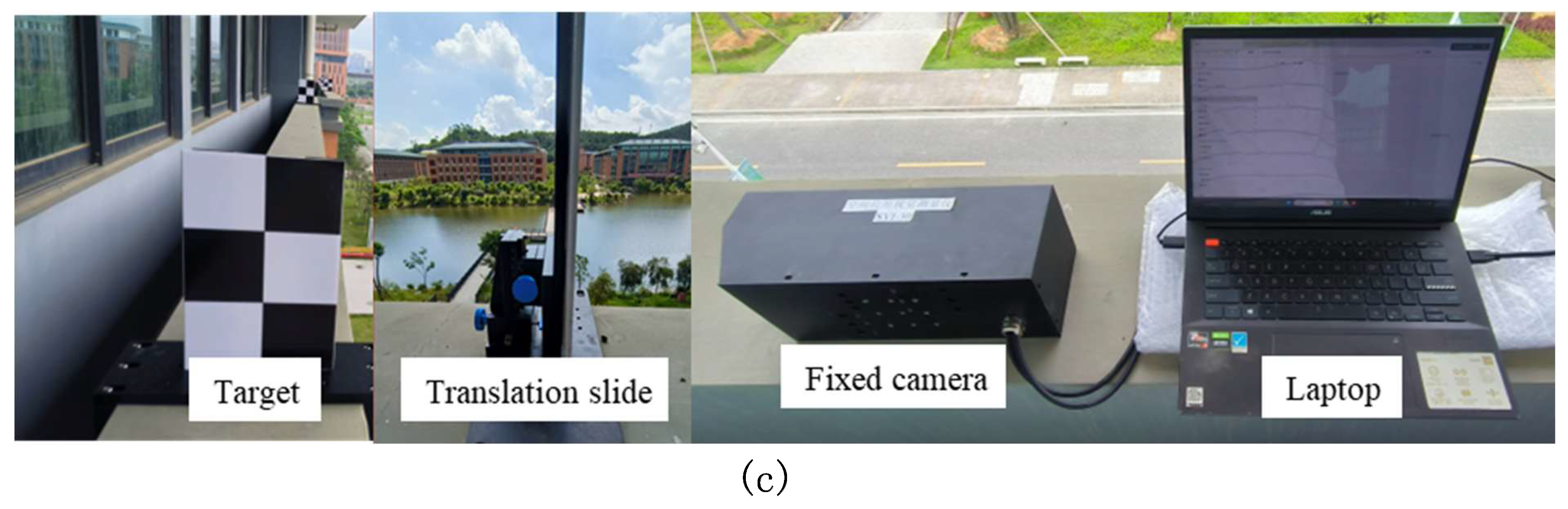

Figure 10 displays the on-site images captured by the UAV-mounted camera during the experiment.

During the experiment, the acquisition frequency of both the UAV-mounted camera and the fixed camera was set to 100 frames per second, and images were acquired for 20 s while target T2 was moved vertically with the slide table. Following the method described in Section 2.2, targets T1 and T5 served as reference targets, and targets T2, T3, and T4 served as monitoring targets. Because significant vibrations of the UAV system caused some targets to leave the camera's field of view, a total of 1695 valid images were collected.

3.1.2. Experimental Results and Analysis

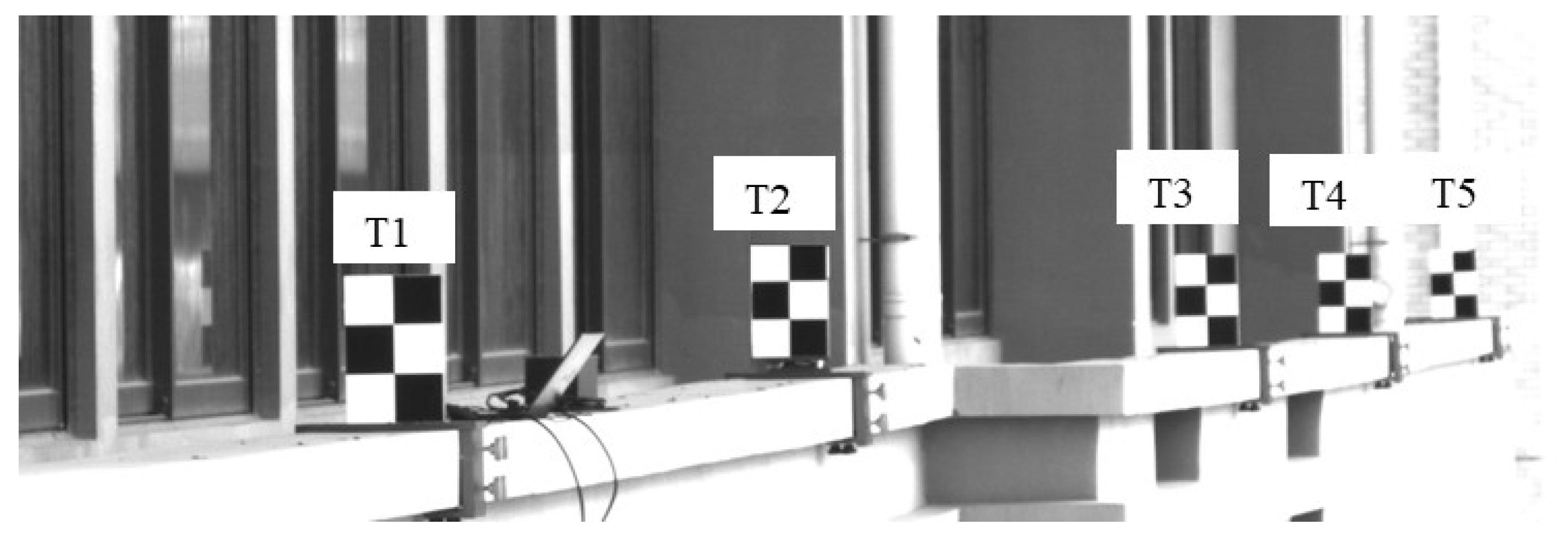

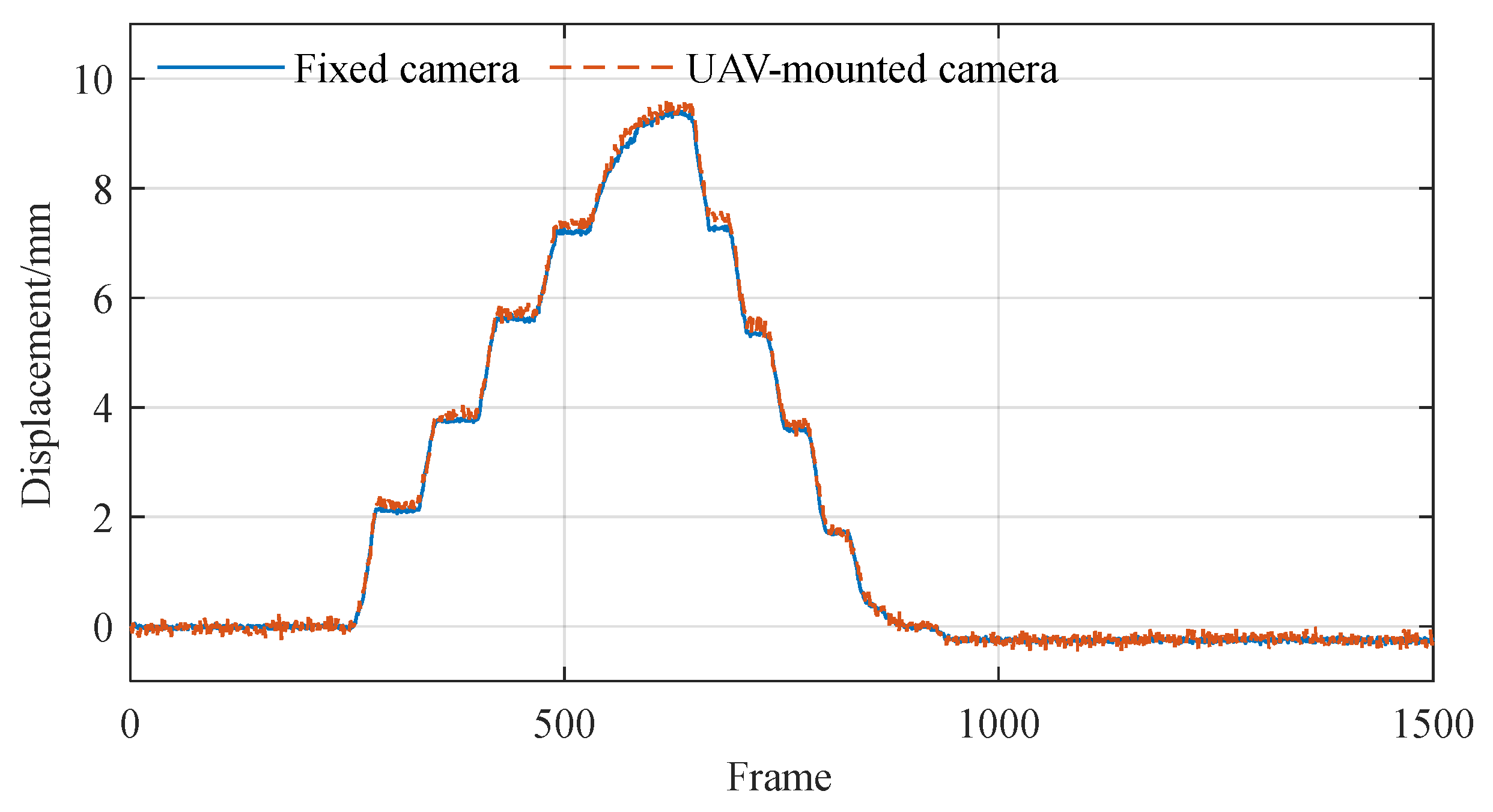

The experimental data were processed with the proposed method, and the results are presented in Figure 11. The displacement results for targets T2, T3, and T4 approached zero after the proposed correction was applied, suggesting that the method effectively corrects the errors caused by camera motion on a UAV platform under outdoor conditions.

Furthermore, the corrected displacement of target T2 was compared with that measured by the fixed camera over a total of 1,500 matched images, as shown in Figure 12. The comparison shows high consistency between the UAV-mounted camera and the fixed camera, providing an intuitive confirmation of the measurement accuracy of the proposed method. To quantify the measurement error, the root mean square error (RMSE) was calculated; the statistics are presented in Table 3.
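The RMSE statistics compare two synchronized displacement series. A minimal sketch, assuming that frames from the two cameras are matched by a shared frame index (the text does not state how the 1,500 images were matched), is shown below; the function name and data are hypothetical.

```python
import numpy as np


def matched_rmse(frames_a, disp_a, frames_b, disp_b):
    """RMSE between two displacement series over the frame indices they share.

    Returns (rmse, number_of_matched_frames)."""
    common, ia, ib = np.intersect1d(frames_a, frames_b, return_indices=True)
    if common.size == 0:
        raise ValueError("no common frames")
    diff = np.asarray(disp_a, float)[ia] - np.asarray(disp_b, float)[ib]
    return float(np.sqrt(np.mean(diff ** 2))), int(common.size)


# Hypothetical series: the UAV lost frame 4, the fixed camera lost frame 0.
frames_uav = np.array([0, 1, 2, 3, 5])
disp_uav = np.array([0.0, 1.0, 2.0, 3.0, 5.0])
frames_fixed = np.array([1, 2, 3, 4, 5])
disp_fixed = np.array([1.1, 2.1, 2.9, 4.0, 5.0])
rmse, n = matched_rmse(frames_uav, disp_uav, frames_fixed, disp_fixed)
print(n, round(rmse, 4))  # 4 0.0866
```

Matching on frame indices keeps frames dropped by either camera (such as those lost when targets left the UAV camera's field of view) out of the statistic instead of misaligning the two series.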

3.2. Monitoring of Lunzhou Bridge

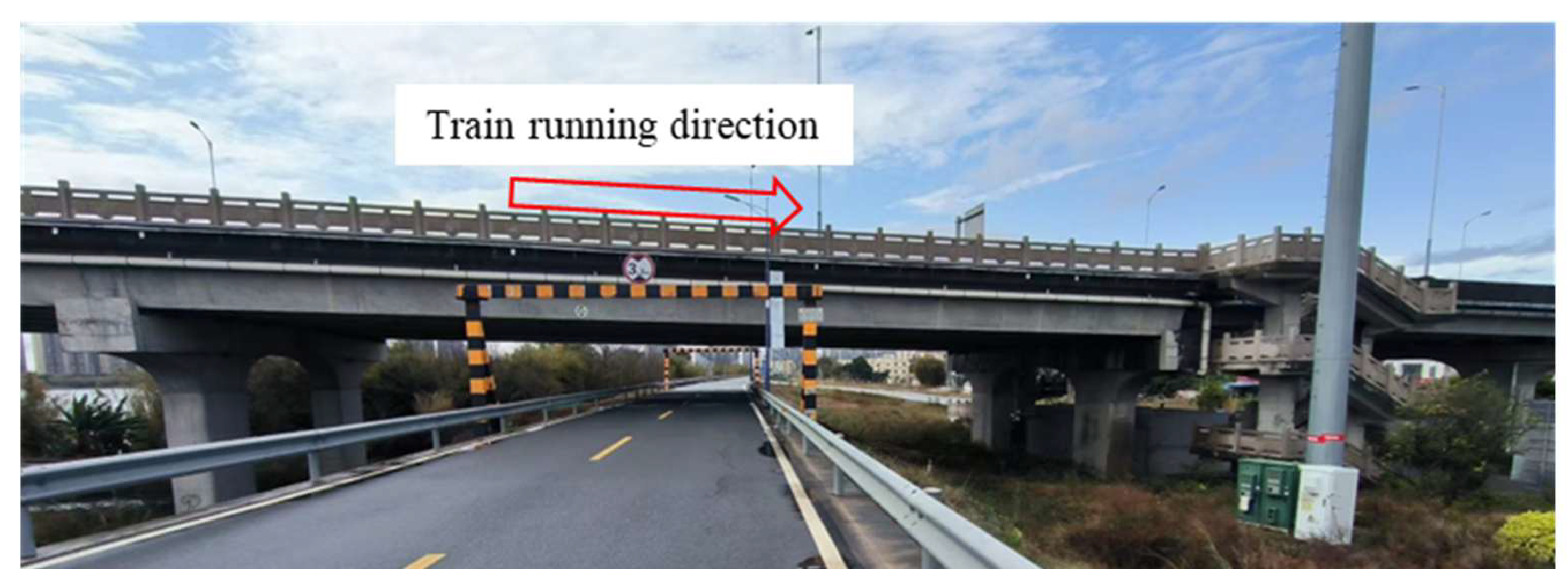

3.2.1. Overview

To further assess the displacement monitoring performance of the UAV vision-based system, experiments were conducted on the Lunzhou Bridge, shown in Figure 13. The Lunzhou Bridge is located in Qingyuan City, Guangdong Province, China. It is a vital river crossing that connects both banks of the Beijiang River, extending from Qingfei Highway in the northwest to Beijiang East Road in the southeast. Monitoring the displacement of the Lunzhou Bridge serves to verify the adaptability and accuracy of the UAV platform in engineering applications and provides valuable data for the structural safety assessment and long-term operation and maintenance of highway bridges. To evaluate the accuracy of the UAV-based monitoring system, the multi-point displacement measurement method using a Scheimpflug camera proposed by Xing [25] was used for comparison.

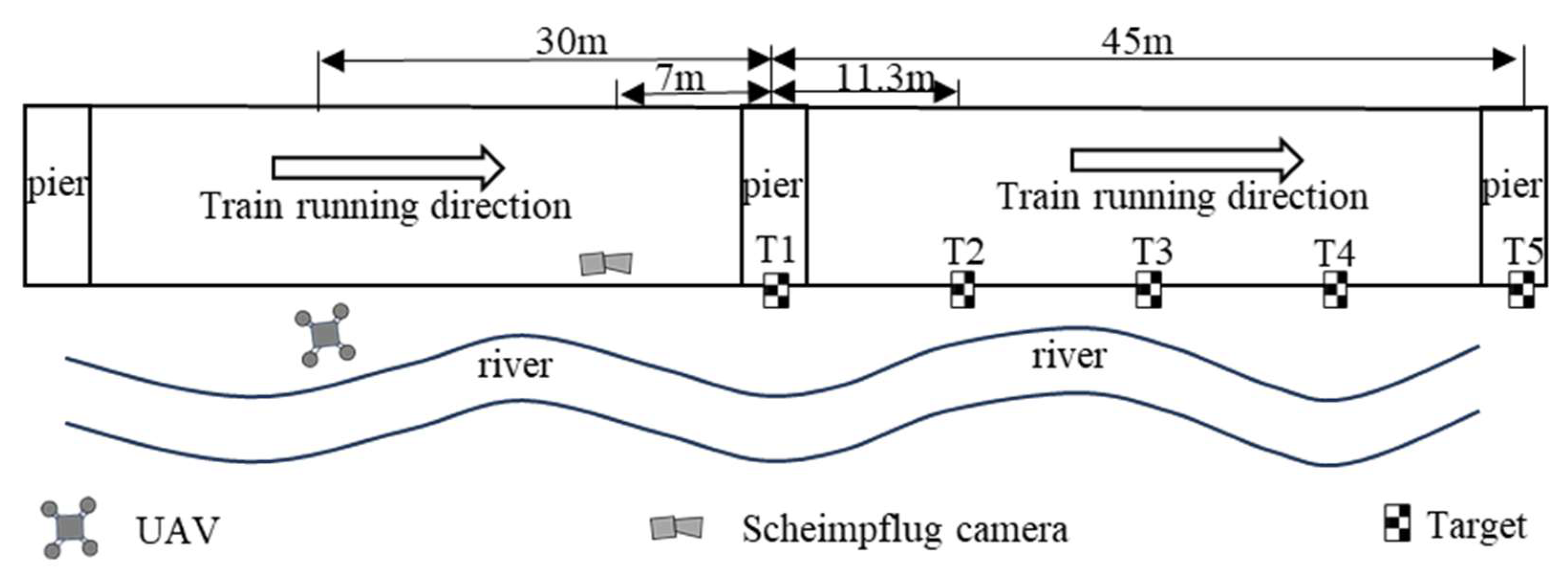

3.2.2. Monitoring Points

The UAV motion correction method explored in this article is similar to the multi-point displacement measurement approach using a Scheimpflug camera [25]: both methods require two reference targets. Accordingly, the bridge section sketched in Figure 14 was monitored. The investigated section is 45 m long, with five targets placed along the pedestrian guardrail. Targets T1 and T5 are positioned above the bridge piers and serve as reference points, while targets T2, T3, and T4 are located 11.3 m, 22.5 m, and 33.8 m from the pier at T1, respectively. The Scheimpflug camera is installed at a horizontal distance of 7 m from the nearest bridge pier so that its measurements can be compared with those of the UAV vision-based displacement monitoring system. The UAV hovers at the side of the bridge, at a horizontal distance of 30 m from the nearest pier.

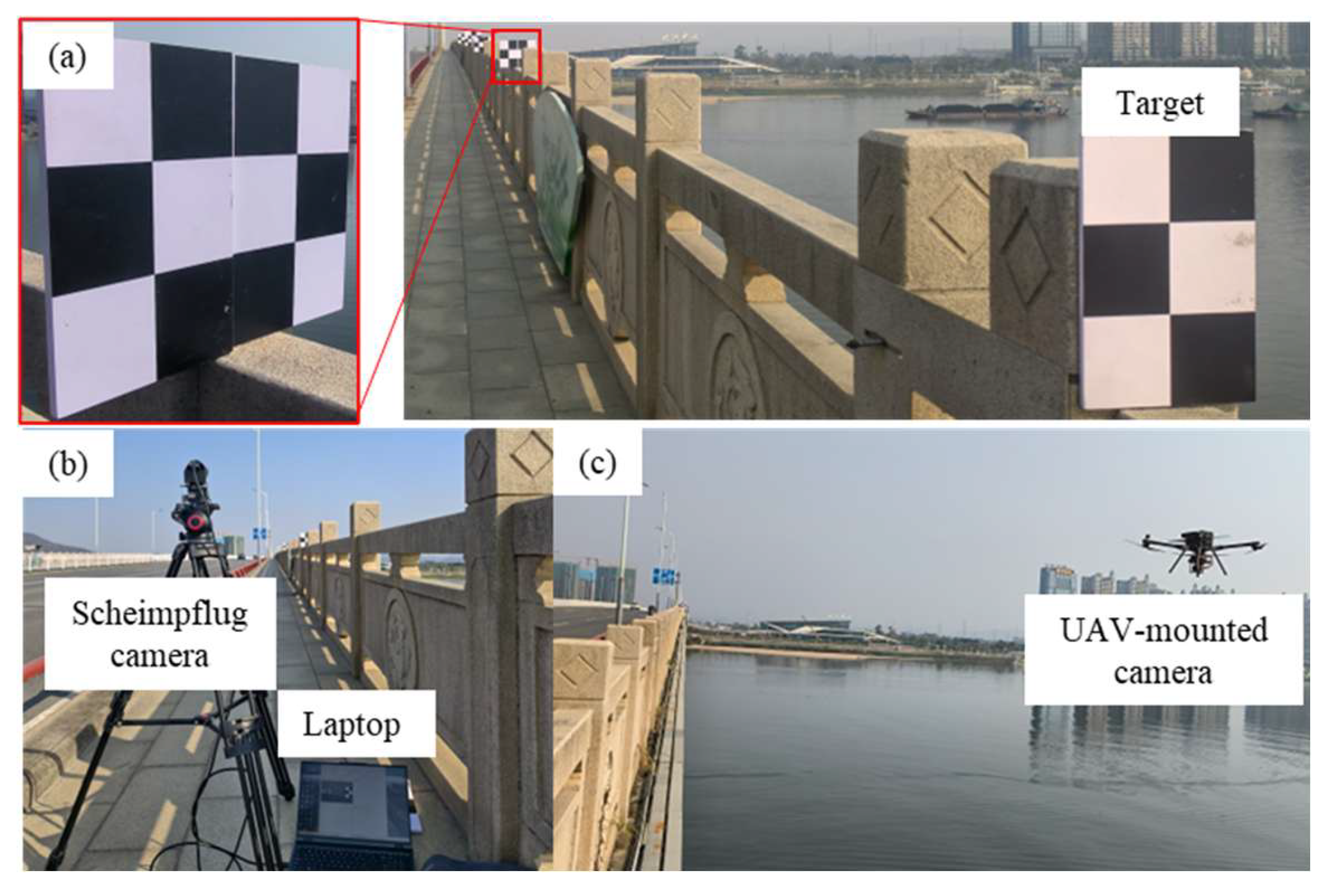

3.2.3. Monitoring Implementation

First, the targets were arranged according to the configuration shown in Figure 14; images of the targets at the different locations on the bridge are shown in Figure 15(a). To avoid mutual occlusion in the camera's field of view, two targets were installed at each of points T2 through T5. The target sizes L observed by the UAV-mounted camera at points T1 to T5 were 100, 100, 100, 150, and 150 mm, respectively, while those observed by the fixed-platform camera were 100, 100, 100, 100, and 120 mm. After the targets were positioned, the fixed-platform (Scheimpflug) camera and the UAV vision measurement system were deployed, as shown in Figure 15(b) and Figure 15(c), respectively.

Figure 16(a) shows the images acquired by the Scheimpflug camera, and Figure 16(b) shows those acquired by the UAV-mounted camera. Data were then collected and processed at a frame rate of 100 frames per second for both the fixed-platform and the UAV-mounted cameras, with a total collection time of 15 s. When processing the UAV data, the long measurement distance reduced the accuracy of corner positioning, which introduced significant noise into the scale factor calculation and, consequently, into the z-axis translational motion error. To mitigate this, a median filter with a filtering radius of 100 was applied to the scale factor estimates. Because the bridge deforms predominantly in the vertical direction under vehicle traffic, the subsequent analysis focuses on the vertical displacement results.
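The median filtering of the scale factor can be sketched as below. We assume that the filtering radius of 100 refers to samples (frames), giving a window of 2 × 100 + 1 = 201 samples, and that the edges are padded by repeating the end values; both choices are assumptions. The sketch uses NumPy's `sliding_window_view` (NumPy 1.20 or later), and the function name is hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def median_filter_1d(x, radius: int) -> np.ndarray:
    """Median filter with a window of 2 * radius + 1 samples; edges are padded
    by repeating the first and last values so the output keeps the input length."""
    x = np.asarray(x, dtype=float)
    padded = np.pad(x, radius, mode="edge")
    windows = sliding_window_view(padded, 2 * radius + 1)
    return np.median(windows, axis=1)


sf_series = np.full(1500, 2.5)   # hypothetical scale-factor series (mm/px), 15 s at 100 fps
sf_series[700] = 3.0             # an isolated outlier, e.g. from poor corner detection
smoothed = median_filter_1d(sf_series, radius=100)
print(smoothed.shape, smoothed.max())  # (1500,) 2.5
```

A median rather than a mean filter suppresses isolated corner-detection outliers without smearing them into neighboring frames, while a window this long also assumes the true scale factor changes slowly relative to 2 s.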

3.2.4. Monitoring Results and Analysis

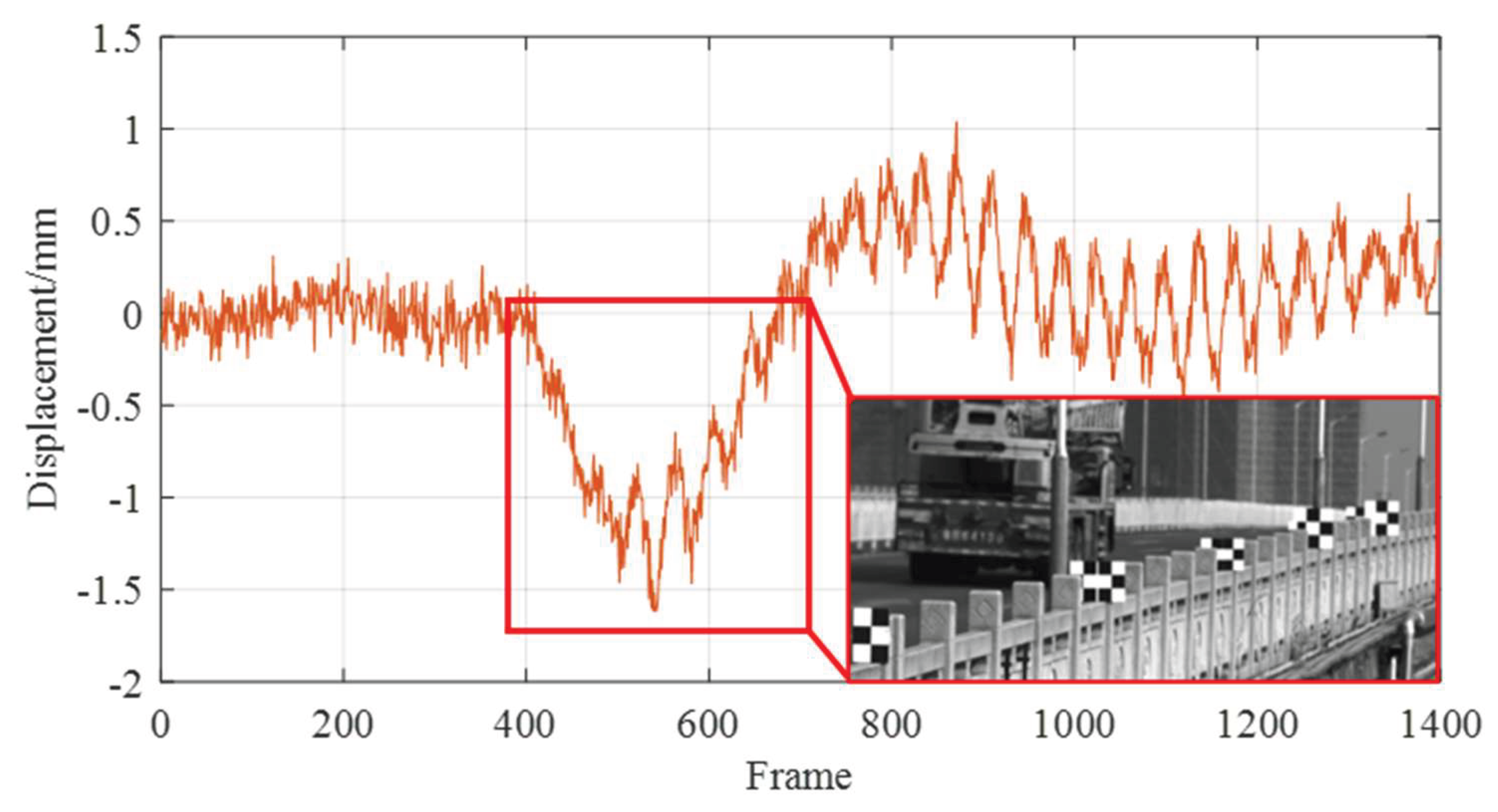

According to the method described in Section 2.2, targets T1 and T5 were used as reference targets in this experiment, while targets T2, T3, and T4 were used as monitoring targets. Because significant vibrations of the UAV caused some targets to leave the camera's field of view, a total of 1400 valid images were collected. The displacement of the mid-span target T3 obtained from these images with the proposed system was then analyzed, and the results are shown in Figure 17.

As evident from Figure 17, the bridge exhibits a noticeable downward deflection when a vehicle crosses it, which agrees with theoretical expectations and provides initial confirmation of the reliability of the UAV vision-based displacement measurement system. Furthermore, the displacement monitoring results of the fixed-platform camera and the UAV-mounted camera were compared. The results, displayed in Figure 18, show that the displacement monitoring outcomes from both platforms are highly consistent, which validates the accuracy of the UAV vision-based system in monitoring bridge displacement.

Based on the comparison in Figure 18, the vertical displacement at target T3, located at mid-span, was the largest, exceeding 1.5 mm, while the vertical displacements at targets T2 and T4 were both approximately 1 mm. The displacement at T4 was slightly smaller, which may be attributed to the longer monitoring distance and the resulting reduction in image resolution and measurement accuracy. In addition, the results for targets T3 and T4 obtained with the fixed-platform camera fluctuated significantly. This behavior can be ascribed mainly to two factors: first, possible stray light interference at the shading cloth enclosing the connection between the Scheimpflug camera and the lens; and second, the relatively small aperture of the lens, which limited the incoming light and lowered the signal-to-noise ratio (SNR) of the acquired data.

To quantitatively evaluate the accuracy of the displacement measurements obtained with the UAV-mounted camera, the results from the fixed-platform camera were used as reference values, and the RMSE of the UAV vision-based system was calculated. The results are listed in Table 4. At a measurement distance of 75 m, the RMSE of the vertical displacement results was less than 0.3 mm, indicating that the UAV-mounted camera maintains high measurement accuracy even in long-distance monitoring.

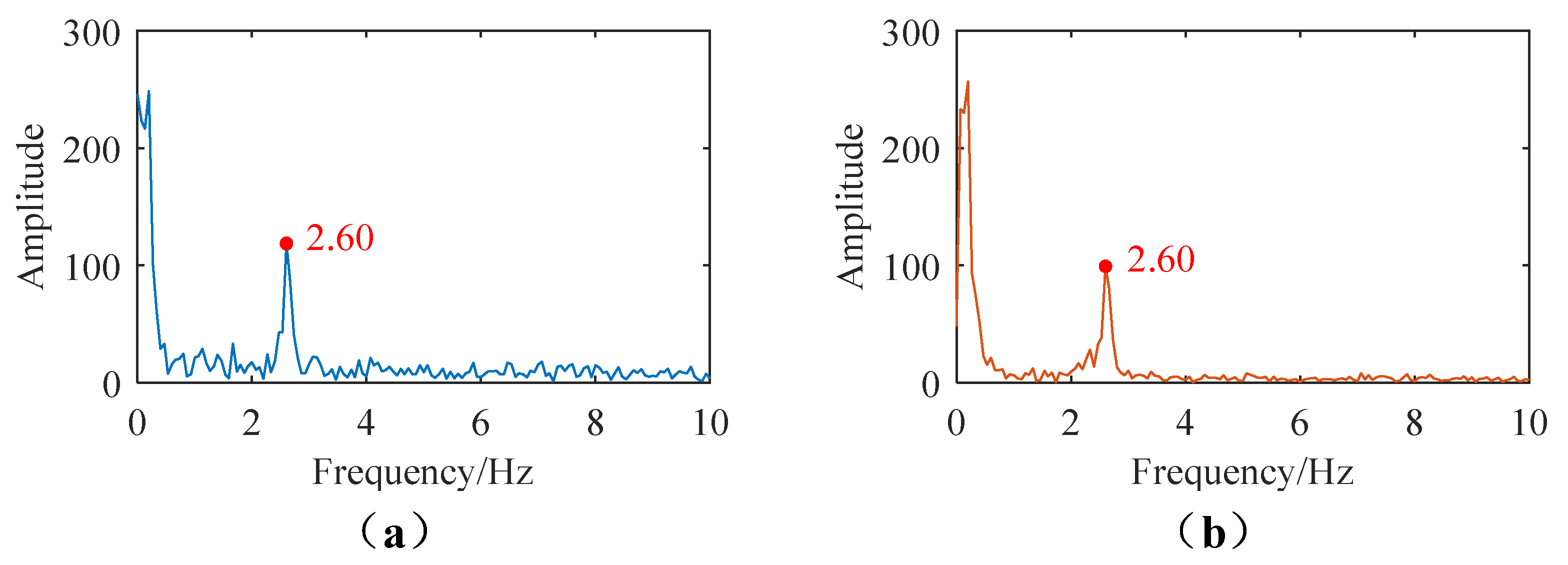

Furthermore, a spectral analysis was performed on the measurement results obtained from both the Scheimpflug camera and the UAV-mounted camera, as illustrated in Figure 19. Both cameras yielded the same dominant frequency of 2.60 Hz, which further supports the accuracy of the displacement measurements obtained with the UAV-mounted camera.
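The dominant frequency can be obtained from the peak of the one-sided amplitude spectrum. A minimal NumPy sketch follows; the function name is hypothetical and the paper does not specify its spectral estimator. A 15 s record at 100 Hz gives 1500 samples and a frequency resolution of 1/15 ≈ 0.067 Hz, which bounds how precisely the peak can be located without windowing or interpolation.

```python
import numpy as np


def dominant_frequency(signal, fs: float) -> float:
    """Frequency (Hz) of the largest non-DC peak in the one-sided amplitude spectrum."""
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()                        # remove the static offset
    spectrum = np.abs(np.fft.rfft(x))
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    k = int(np.argmax(spectrum[1:])) + 1    # skip the DC bin
    return float(freqs[k])


fs = 100.0
t = np.arange(1500) / fs                    # 15 s at 100 Hz
sig = 0.2 * np.sin(2 * np.pi * 2.6 * t) + 0.05 * np.sin(2 * np.pi * 7.0 * t)  # synthetic test signal
print(dominant_frequency(sig, fs))          # approximately 2.6
```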

4. Discussion

The UAV vision-based multi-point displacement measurement method and system proposed in this study address the low accuracy of conventional UAV vision monitoring, such as that caused by UAV jitter. The accuracy of the technique was confirmed by comparison with a Scheimpflug camera. In a field experiment on the Lunzhou Highway Bridge, the system successfully monitored vertical multi-point displacements across the entire span, achieving an RMSE of less than 0.3 mm. The measurement results were highly consistent with those of the Scheimpflug camera and met the precision requirements (sub-millimeter to millimeter level) for monitoring dynamic bridge displacement.

Moreover, the spectral analysis showed that both the UAV vision system and the Scheimpflug camera identified the dominant vertical response frequency of the bridge as 2.60 Hz. This agreement demonstrates that the UAV system can capture the dynamic characteristics of the structure. It also provides reliable data for future bridge safety assessments, such as evaluating structural condition from changes in frequency. Additionally, the Scheimpflug camera is fixed in position and was installed 7 m from the nearest pier during the experiment. The UAV, by contrast, can hover 30 m from the pier and flexibly adjust its position to avoid obstacles, making it more adaptable to complex site conditions.

Despite the verified accuracy relative to the Scheimpflug camera, this study has limitations. The main one is the short monitoring duration. The data collected on the Lunzhou Highway Bridge span only 15 s (at a frame rate of 100 fps) and cover only dynamic displacement during vehicle passage, with no longer-term monitoring such as a continuous 24-hour period. The Scheimpflug camera, as a fixed device, can readily provide long-term monitoring, whereas the UAV's full-load endurance of only 25 min is inadequate for extended data comparisons.
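One concrete consequence of the short record can be quantified. With frame rate f_s and record duration T, the number of frames N and the spacing Δf between discrete Fourier transform bins are:

```latex
N = f_s T = 100\ \text{fps} \times 15\ \text{s} = 1500,
\qquad
\Delta f = \frac{f_s}{N} = \frac{1}{T} = \frac{1}{15\ \text{s}} \approx 0.067\ \text{Hz}
```

Without spectral interpolation, a 15 s record cannot resolve frequency changes much smaller than about 0.067 Hz, roughly 2.6% of the 2.60 Hz dominant frequency. This would limit its use for tracking small frequency shifts. Even if the full 25 min endurance could be spent recording, T would be at most 1500 s. That figure is an upper bound, because it ignores takeoff, positioning, and landing.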

In summary, the UAV vision system presented in this study showed high consistency with the Scheimpflug camera (RMSE < 0.3 mm) and accurately captured the dominant frequency of the bridge at 2.60 Hz. Although limitations such as the short monitoring duration remain, the flexible deployment of the UAV offers significant advantages over the Scheimpflug camera, making the system a practical tool for bridge displacement monitoring. Future work should focus on extending the monitoring duration to further enhance its practical value.

5. Conclusions

This paper proposed a UAV vision-based method for measuring displacements at multiple points across bridges. A monitoring system comprising a UAV, an industrial camera, a computing terminal, and measurement targets was developed. It was then applied to dynamic displacement monitoring of the Lunzhou Highway Bridge in Qingyuan City, Guangdong Province, China. The multi-point displacement data acquired by the system were analyzed to characterize the spatiotemporal deformation of the bridge under vehicle traffic, and the following conclusions were drawn:

(1) The system successfully acquired multi-point dynamic displacement data of the bridge. The results were largely consistent with those measured by the Scheimpflug camera, with a maximum RMSE below 0.3 mm.

(2) The proposed UAV vision-based method effectively mitigated errors caused by UAV jitter, thereby improving the monitoring accuracy of the system. In the controlled experiment, it reduced the displacement RMSE by about 99% (Table 3).

(3) The dominant vertical response frequency of the Lunzhou Highway Bridge was identified as 2.60 Hz.

The system developed in this study not only provides new, detailed data to support bridge safety assessment but also produces measurement results highly consistent with those of the Scheimpflug camera. By positioning multiple targets along the bridge, the system enables synchronous multi-point measurement and effectively reduces the average cost per measurement point compared with single-point measurement.

Author Contributions

Conceptualization, Wujiao Dai; methodology, Deyong Pan, Wujiao Dai, Lei Xing; formal analysis, Wujiao Dai, Zhiwu Yu; investigation, Wujiao Dai, Jun Wu; resources, Wujiao Dai, Zhiwu Yu; data curation, Deyong Pan, Lei Xing; validation, Deyong Pan, Lei Xing, Yunsheng Zhang; writing—original draft preparation, Deyong Pan, Wujiao Dai, Lei Xing; writing—review and editing, Deyong Pan, Wujiao Dai, Lei Xing; supervision, Zhiwu Yu, Yunsheng Zhang; project administration, Zhiwu Yu, Jun Wu; funding acquisition, Wujiao Dai, Zhiwu Yu. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Science and Technology Research and Development Program Project of China Railway Group Limited (Major Special Project, No. 2022-Special-09).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are available on request due to restrictions (e.g., privacy, legal, or ethical reasons).

Acknowledgments

The authors would like to thank the technical support team for their assistance in field data collection and equipment maintenance during the study. We also express our gratitude to Zhubao Xiang for his contributions related to technical consultation in the research.

Conflicts of Interest

Author Jun Wu is affiliated with China Railway Group Limited, which funded this study through the Science and Technology Research and Development Program Project (Major Special Project, No. 2022-Special-09). The authors declare no conflicts of interest related to the design of the study, data collection, analysis, manuscript writing, or the decision to publish the results. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Feng, D.M.; Feng, M.Q. Computer vision for SHM of civil infrastructure: From dynamic response measurement to damage detection–A review. Engineering Structures 2018, 156, 105–117.

- Nickitopoulou, A.; Protopsalti, K.; Stiros, S. Monitoring dynamic and quasi-static deformations of large flexible engineering structures with GPS: Accuracy, limitations and promises. Engineering Structures 2006, 28, 1471–1482.

- Nakamura, S.I. GPS measurement of wind-induced suspension bridge girder displacements. Journal of Structural Engineering 2000, 126, 1413–1419.

- Ma, Z.X.; Choi, J.; Sohn, H. Structural displacement sensing techniques for civil infrastructure: A review. Journal of Infrastructure Intelligence and Resilience 2023, 2, 100041.

- Li, J.; Hao, H. Health monitoring of joint conditions in steel truss bridges with relative displacement sensors. Measurement 2016, 88, 360–371.

- Guo, T.; Chen, Y.W. Field stress/displacement monitoring and fatigue reliability assessment of retrofitted steel bridge details. Engineering Failure Analysis 2011, 18, 354–363.

- Lienhart, W.; Ehrhart, M.; Grick, M. High frequent total station measurements for the monitoring of bridge vibrations. Journal of Applied Geodesy 2017, 11, 1–8.

- Paar, R.; Žnidarič, M.; Slavič, J.; Boltežar, M. Vibration monitoring of civil engineering structures using contactless vision-based low-cost IATS prototype. Sensors 2021, 21, 7952.

- Pieraccini, M.; Guidi, G.; Luzi, G.; Masini, N. Static and dynamic testing of bridges through microwave interferometry. NDT & E International 2007, 40, 208–214.

- Li, H.; Zhang, Y.; Wang, Z.; Yang, G. Realtime in-plane displacements tracking of the precision positioning stage based on computer micro-vision. Mechanical Systems and Signal Processing 2019, 124, 111–123.

- Song, Q.S.; Zhang, L.; Li, H.N.; Zhang, Y.; Wang, Z.H. Computer vision-based illumination-robust and multi-point simultaneous structural displacement measuring method. Mechanical Systems and Signal Processing 2022, 170, 108822.

- Lee, J.J.; Shinozuka, M. A vision-based system for remote sensing of bridge displacement. NDT & E International 2006, 39, 425–431.

- Feng, D.M.; Feng, M.Q.; Pan, B. A vision-based sensor for noncontact structural displacement measurement. Sensors 2015, 15, 16557–16575.

- Wang, M.M.; Zhang, L.; Song, Q.S.; Li, H.N. A novel gradient-based matching via voting technique for vision-based structural displacement measurement. Mechanical Systems and Signal Processing 2022, 171, 108951.

- Lee, J.H.; Park, J.W.; Sim, S.H. Long-term displacement measurement of full-scale bridges using camera ego-motion compensation. Mechanical Systems and Signal Processing 2020, 140, 106651.

- Ribeiro, D.; Silva, A.; Figueiredo, E.; Calçada, R. Non-contact structural displacement measurement using Unmanned Aerial Vehicles and video-based systems. Mechanical Systems and Signal Processing 2021, 160, 107869.

- Yu, Q.F.; Li, H.N.; Song, Q.S.; Zhang, L. Displacement measurement of large structures using nonoverlap field of view multi-camera systems under six degrees of freedom ego-motion. Computer-Aided Civil and Infrastructure Engineering 2023, 38, 1483–1503.

- Huang, X.Y.; Song, Q.S.; Zhang, L.; Li, H.N. A Mitigation Method for Optical-Turbulence-Induced Errors and Optimal Target Design in Vision-Based Displacement Measurement. Sensors 2023, 23, 1884.

- Yoon, H.C.; Shin, J.; Spencer, B.F., Jr. Structural displacement measurement using an unmanned aerial system. Computer-Aided Civil and Infrastructure Engineering 2018, 33, 183–192.

- Yoon, H.C.; Park, J.W.; Spencer, B.F., Jr.; Sim, S.H. Cross-correlation-based structural system identification using unmanned aerial vehicles. Sensors 2017, 17, 2075.

- Hoskere, V.; Park, J.W.; Yoon, H.; Spencer, B.F., Jr. Vision-Based Modal Survey of Civil Infrastructure Using Unmanned Aerial Vehicles. Journal of Structural Engineering 2019, 145, 04019062.

- Ribeiro, D.; Silva, A.; Figueiredo, E.; Calçada, R. Non-contact structural displacement measurement using Unmanned Aerial Vehicles and video-based systems. Mechanical Systems and Signal Processing 2021, 160, 107869.

- Weng, Y.F.; Li, H.N.; Song, Q.S.; Zhang, L. Homography-based structural displacement measurement for large structures using unmanned aerial vehicles. Computer-Aided Civil and Infrastructure Engineering 2021, 36, 1114–1128.

- Xing, L.; Dai, W.J. A Robust Detection and Localization Method for Cross Markers Oriented to Visual Measurement. Surveying and Mapping Science, 1016.

- Xing, L.; Dai, W.J.; Zhang, Y.S. Scheimpflug Camera-Based Technique for Multi-Point Displacement Monitoring of Bridges. Sensors 2022, 22, 4093.

Figure 1.

UAV vision-based bridge monitoring configurations: (a) lateral-view shooting; (b) longitudinal-view shooting.

Figure 2.

UAV vision-based measurement system: (a) monitoring layout; (b) target; (c) UAV; (d) computing terminal and camera.

Figure 3.

Camera motion along the coordinate axes.

Figure 4.

Measurement model.

Figure 5.

Variations in measurement points when the camera rotates around the x-axis.

Figure 6.

Variations in measurement points when the camera rotates around the z-axis.

Figure 7.

Variations in measurement points when the camera is translated along the y-axis.

Figure 8.

Variation in the y-coordinate of measurement point N when the camera is translated along the z-axis.

Figure 9.

Monitoring scene and field deployment: (a) monitoring scene; (b) experimental setup; (c) field deployment of the target, translation slide, and fixed camera.

Figure 10.

UAV-mounted camera imaging results.

Figure 11.

Displacement curves of the UAV-mounted camera before and after motion error correction: (a) horizontal x displacement of target T2; (b) vertical y displacement of target T2; (c) horizontal x displacement of target T3; (d) vertical y displacement of target T3; (e) horizontal x displacement of target T4; (f) vertical y displacement of target T4.

Figure 12.

Comparison of monitoring results from the UAV-mounted camera and the fixed camera.

Figure 13.

Lunzhou Highway Bridge in China.

Figure 14.

Monitoring points of Lunzhou Highway Bridge.

Figure 15.

Installation location and site layout.

Figure 16.

Images of target locations obtained using (a) the Scheimpflug camera and (b) the UAV-mounted camera.

Figure 17.

Displacement curve of mid-span target T3.

Figure 18.

Comparison of measurement results between the UAV-mounted camera and Scheimpflug camera for (a) Target T2, (b) Target T3, and (c) Target T4.

Figure 19.

Spectral analysis of the measurement results from (a) the Scheimpflug camera and (b) the UAV-mounted camera.

Table 1.

UAV vision-based measurement system parameters.

| Equipment | Specifications |
| --- | --- |
| UAV-mounted camera | Pixel size: 5.86 μm; resolution: 1920 × 1200; acquisition frequency: ≤165 FPS; focal length: 85 mm |
| Computing terminal | CPU: Core i3-N305; RAM: 32 GB |
| Targets | Size: 3L × 2L |
| UAV | Maximum payload: 5 kg; full-load endurance: 25 min; positioning accuracy: ≤0.1 m |

Table 2.

Equipment used in the UAV vision-based measurement experiment.

| Equipment | Parameters | Quantity |
| --- | --- | --- |
| UAV-mounted camera | Resolution: 1920 × 1200; focal length: 85 mm | 1 |
| Fixed camera | Resolution: 720 × 540; focal length: 8 mm | 1 |
| Steel ruler | Length: 3 m | 1 |
| Target and scaffold | Size: 20 × 30 cm | 5 |
| Translation slide table | Range: 0–3 cm | 1 |

Table 3.

RMSE of displacement measurements in the UAV-mounted camera monitoring experiment (mm).

| Target | Horizontal x: before correction | Horizontal x: after correction | Horizontal x: RMSE improvement (%) | Vertical y: before correction | Vertical y: after correction | Vertical y: RMSE improvement (%) |
| --- | --- | --- | --- | --- | --- | --- |
| T2 | 99.82 | 0.14 | 99 | 365.77 | 0.11 | 99 |
| T3 | 123.57 | 0.21 | 99 | 462.58 | 0.19 | 99 |
| T4 | 144.91 | 0.15 | 99 | 516.08 | 0.25 | 99 |
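Table 3 lists the improvement rate as 99% in every case. The definition is not stated in this section, but if the rate is the relative RMSE reduction, (RMSE_before − RMSE_after) / RMSE_before × 100, it can be recomputed directly from the tabulated values. The dictionary below copies Table 3, and the function name `improvement_rate` is illustrative.

```python
# RMSE values (mm) from Table 3 as (before correction, after correction).
TABLE_3 = {
    "T2": {"x": (99.82, 0.14), "y": (365.77, 0.11)},
    "T3": {"x": (123.57, 0.21), "y": (462.58, 0.19)},
    "T4": {"x": (144.91, 0.15), "y": (516.08, 0.25)},
}


def improvement_rate(before: float, after: float) -> float:
    """Relative RMSE reduction in percent (assumed definition)."""
    return (before - after) / before * 100.0


if __name__ == "__main__":
    for target, directions in TABLE_3.items():
        rates = {d: round(improvement_rate(*vals), 2) for d, vals in directions.items()}
        print(target, rates)
```

Under this definition, every entry falls between about 99.83% (T3, x) and 99.97% (T2, y). The tabulated 99 therefore appears to be truncated to an integer rather than rounded.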

Table 4.

RMSE of vertical displacement measurements on the Lunzhou Highway Bridge (mm).

| Target | Vertical displacement RMSE (mm) |
| --- | --- |
| T2 | 0.18 |
| T3 | 0.25 |
| T4 | 0.29 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).