Submitted: 23 September 2025

Posted: 10 October 2025


Abstract

Keywords:

1. Introduction

2. Literature Review

3. Research Methodology

3.1. Data Collection from a Cloud Security Benchmark

3.2. Data Pre-Processing Techniques

3.3. Feature Extraction Methods

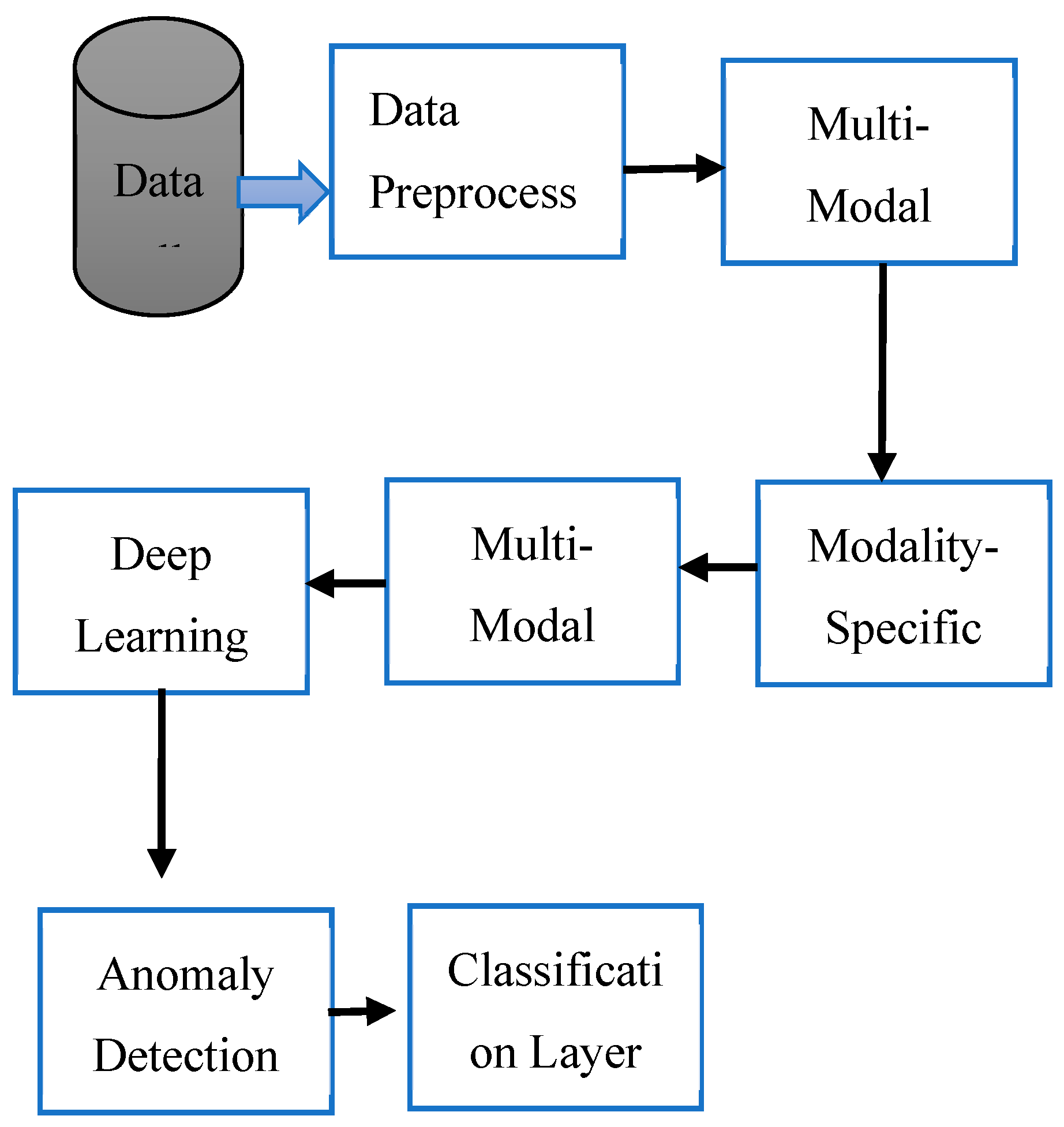

3.4. Architecture Design of the Novel Multi-Modal Deep Learning Enhanced Autoencoder Model

| Algorithm 1. Multimodal Autoencoder. |
|---|
| for number of training iterations do |
| Sample a mini-batch of N multi-modal data samples {(…)}. |
| Get … using E1, E2, and FL. |
| Reconstruct … from … using D1 and D2. |
| Update the multi-modal autoencoder weights by descending their gradient: … |
| Stacked Graph Transformer: … |
| end for |
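Because the extracted listing of Algorithm 1 lost its symbols, the loop can only be sketched under explicit assumptions. Here E1 and E2 are taken to be single-layer tanh encoders, FL is assumed to be a linear fusion layer applied to the concatenated codes, D1 and D2 are linear decoders, and the loss is the squared reconstruction error of both modalities, minimised by plain mini-batch gradient descent with hand-derived gradients. The sizes, learning rate, function names and synthetic data are hypothetical, the Stacked Graph Transformer step is omitted, and this is not the authors' implementation.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: modality-1 input, modality-2 input, per-modality code, shared latent.
D1_IN, D2_IN, H, K = 8, 6, 4, 3


def init_params():
    def w(shape):
        return rng.normal(0.0, 0.3, size=shape)  # small random initial weights
    return {
        "E1": w((D1_IN, H)), "E2": w((D2_IN, H)),  # modality-specific encoders
        "FL": w((2 * H, K)),                        # assumed fusion layer on concatenated codes
        "D1": w((K, D1_IN)), "D2": w((K, D2_IN)),  # modality-specific decoders
    }


def loss_and_grads(p, x1, x2):
    """Batch-mean of the summed squared reconstruction error, plus its gradients."""
    n = x1.shape[0]
    h1, h2 = np.tanh(x1 @ p["E1"]), np.tanh(x2 @ p["E2"])
    hc = np.concatenate([h1, h2], axis=1)
    z = hc @ p["FL"]                                 # shared multimodal representation
    e1, e2 = z @ p["D1"] - x1, z @ p["D2"] - x2      # reconstruction errors
    loss = (np.sum(e1 ** 2) + np.sum(e2 ** 2)) / n
    g_r1, g_r2 = 2 * e1 / n, 2 * e2 / n
    g = {"D1": z.T @ g_r1, "D2": z.T @ g_r2}
    g_z = g_r1 @ p["D1"].T + g_r2 @ p["D2"].T
    g["FL"] = hc.T @ g_z
    g_hc = g_z @ p["FL"].T
    g["E1"] = x1.T @ (g_hc[:, :H] * (1 - h1 ** 2))   # chain rule through tanh
    g["E2"] = x2.T @ (g_hc[:, H:] * (1 - h2 ** 2))
    return loss, g


def train(p, x1_all, x2_all, iterations=500, batch_size=32, lr=0.05):
    for _ in range(iterations):                                   # for number of training iterations
        idx = rng.choice(len(x1_all), size=batch_size, replace=False)  # mini-batch of N pairs
        _, g = loss_and_grads(p, x1_all[idx], x2_all[idx])
        for name in p:                                            # descend the gradient
            p[name] -= lr * g[name]
    return p


# Synthetic paired data: both modalities are noisy linear views of a shared 3-D factor.
s = rng.normal(size=(512, K))
x1 = s @ rng.normal(0.0, 0.5, size=(K, D1_IN)) + 0.05 * rng.normal(size=(512, D1_IN))
x2 = s @ rng.normal(0.0, 0.5, size=(K, D2_IN)) + 0.05 * rng.normal(size=(512, D2_IN))

params = init_params()
loss_before, _ = loss_and_grads(params, x1, x2)
train(params, x1, x2)
loss_after, _ = loss_and_grads(params, x1, x2)
print(f"full-data reconstruction loss: {loss_before:.3f} -> {loss_after:.3f}")
```

Writing the gradients out by hand keeps the sketch dependency-light; in practice an automatic-differentiation framework would compute them, and the fusion layer would likely be non-linear.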

- Extract high-level features: Convolutional neural networks (CNNs) or recurrent neural networks (RNNs) are used to extract spatial and temporal features, respectively.

- Model complex interactions: Attention mechanisms or graph convolutional networks (GCNs) are used to model the relationships between the modalities.

- Improve the representation: Residual or dense connections can be applied to improve the representation of the fused data.
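Two of the operations listed above can be sketched in a few lines, assuming NumPy: attention-weighted fusion of two modality embeddings, and a residual block that refines the fused vector. The scoring vector `w`, the weight matrix `W` and the function names are hypothetical illustrations, the CNN/RNN extractors and the GCN alternative are not shown, and this is not the authors' implementation.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # shift by the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def attention_fuse(h1, h2, w):
    """Fuse two (n, d) modality embeddings with per-sample attention weights.

    w is a hypothetical learned (d,) scoring vector; modality m is scored as h_m @ w.
    """
    stacked = np.stack([h1, h2], axis=1)                 # (n, 2, d)
    alpha = softmax(stacked @ w, axis=1)                 # (n, 2) weight of each modality
    fused = np.sum(alpha[:, :, None] * stacked, axis=1)  # (n, d) attention-weighted sum
    return fused, alpha


def residual_refine(x, W):
    """One residual block, x + ReLU(x @ W): with W = 0 the input passes through unchanged."""
    return x + np.maximum(0.0, x @ W)


rng = np.random.default_rng(1)
h1, h2 = rng.normal(size=(4, 5)), rng.normal(size=(4, 5))  # stand-ins for CNN / RNN outputs
fused, alpha = attention_fuse(h1, h2, rng.normal(size=5))
out = residual_refine(fused, 0.1 * rng.normal(size=(5, 5)))
print(alpha.round(3))
print(out.shape)
```

The identity shortcut in `residual_refine` is what lets a residual block default to "do no harm": the learned branch only adds a correction on top of the fused representation.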

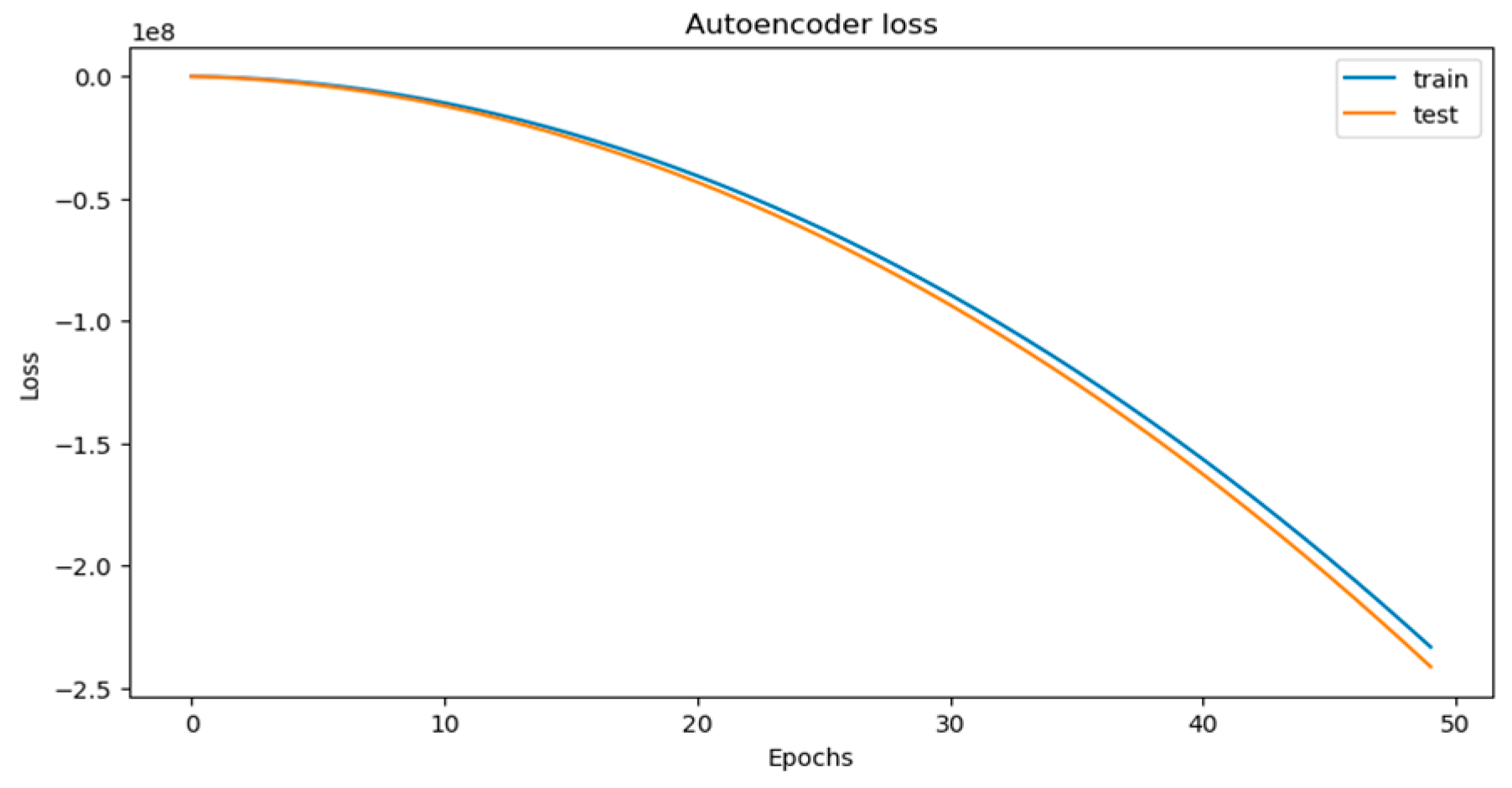

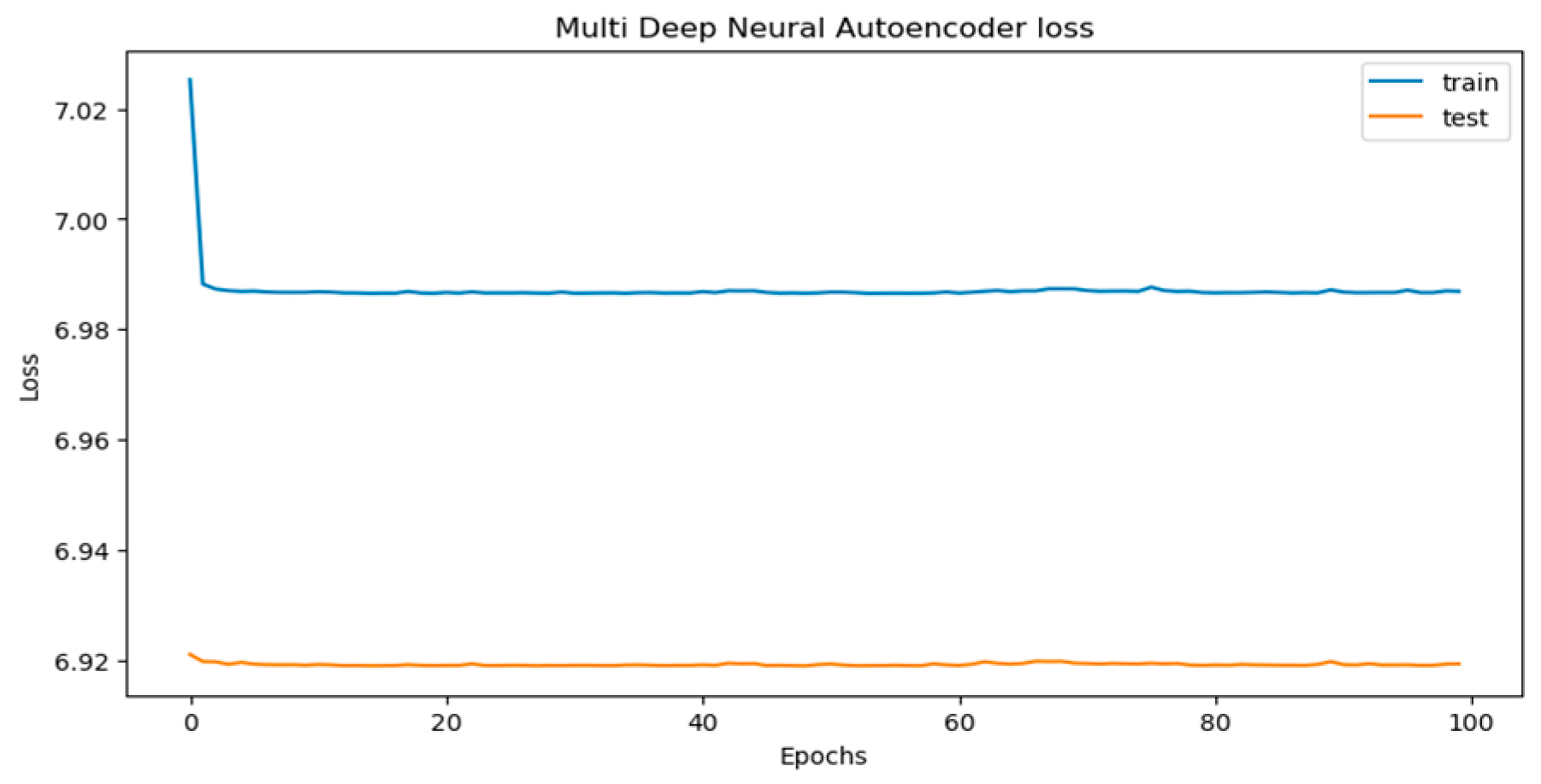

3.5. Model Training and Optimization

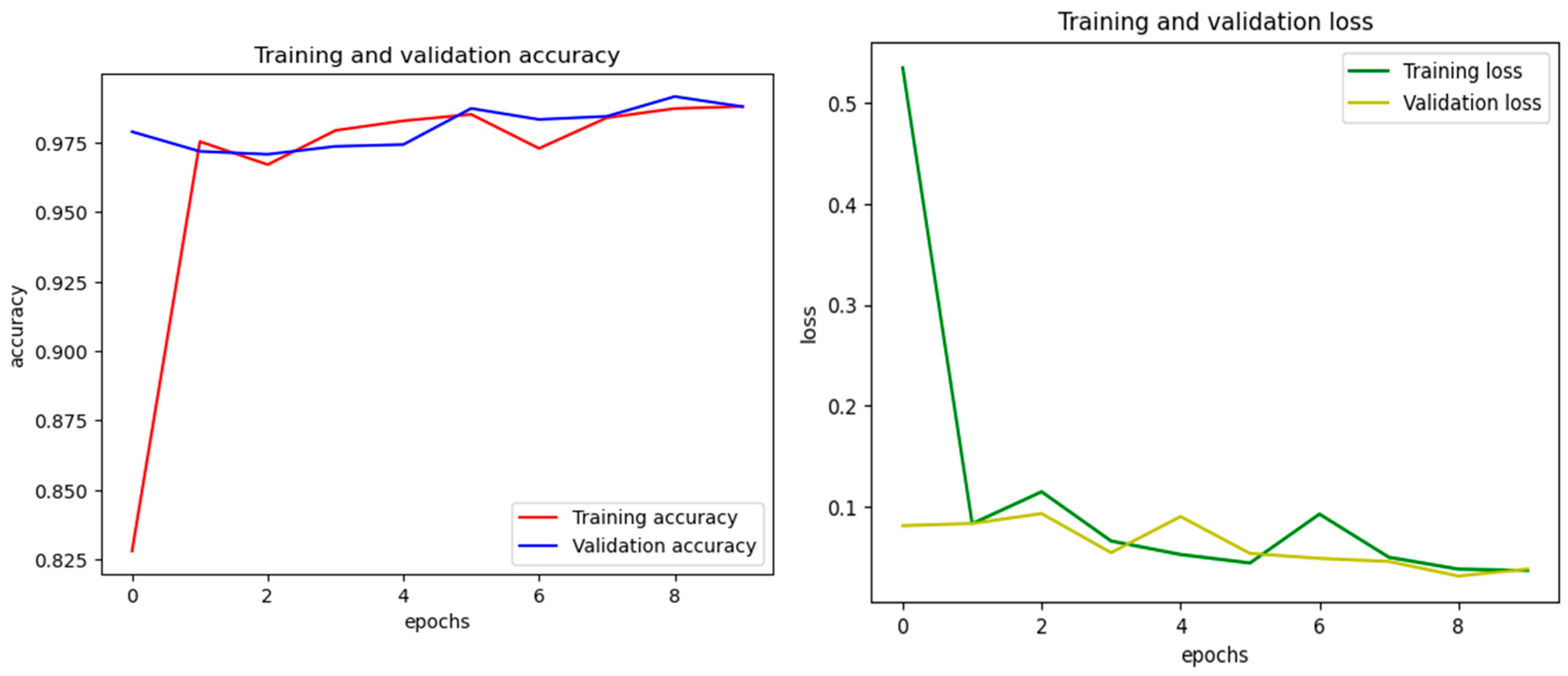

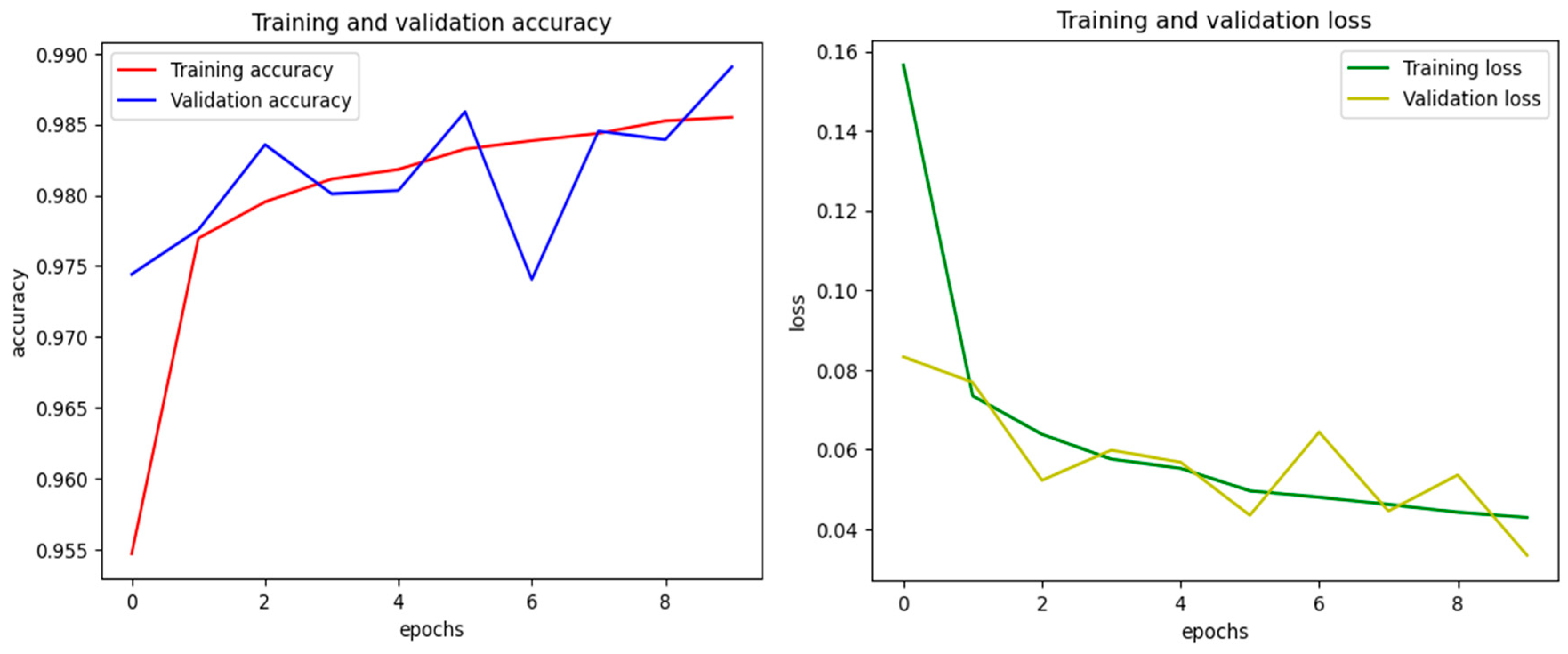

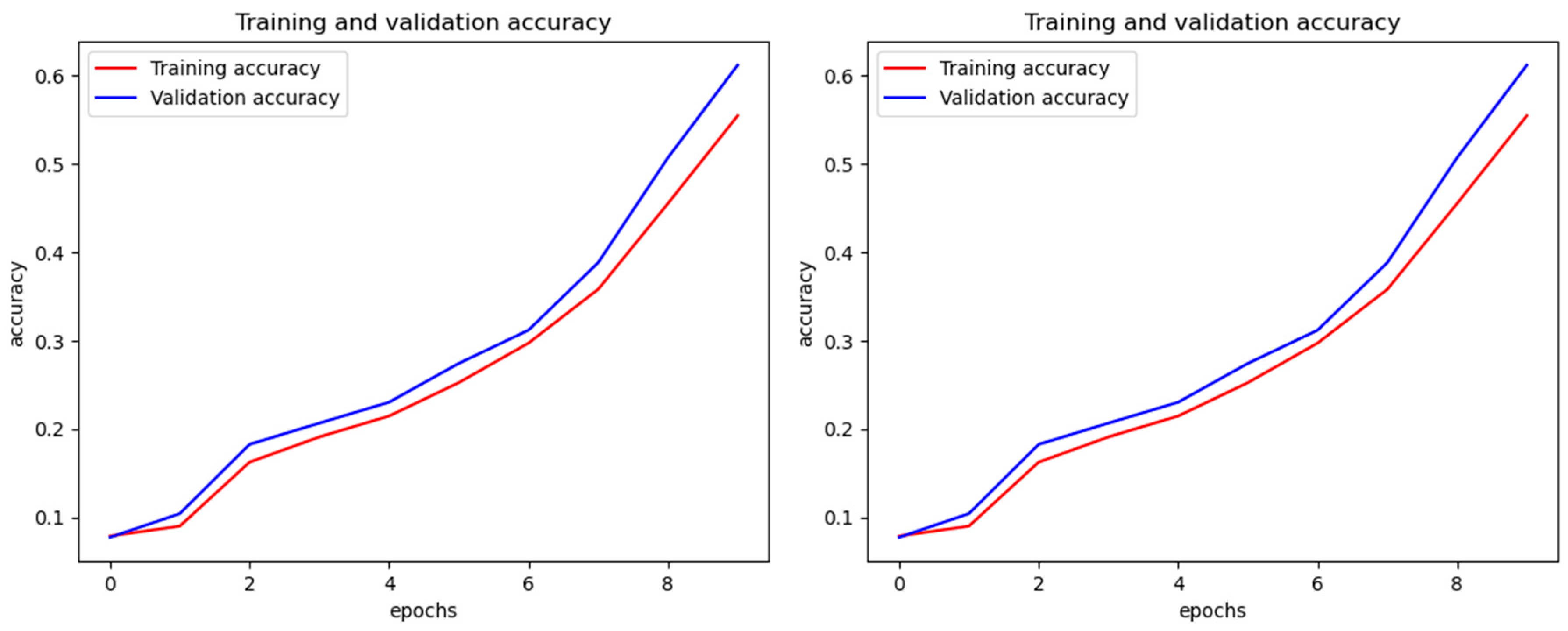

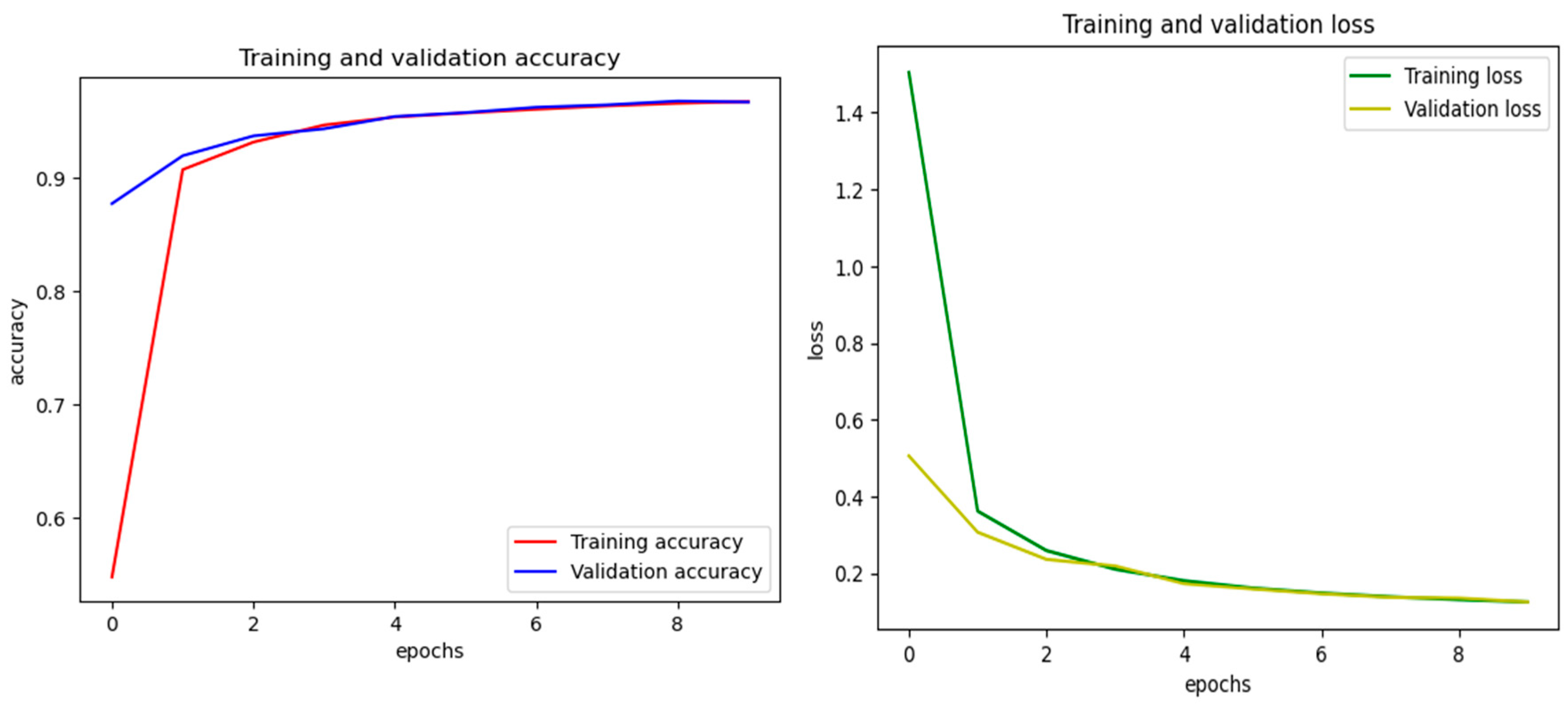
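Section 4.2 compares the same CNN trained with five optimizers (SGD, Adam, Adadelta, Adagrad and RMSprop). As background, the sketch below applies the textbook per-parameter update rule of each optimizer to a one-dimensional toy objective, f(θ) = (θ − 3)². The learning rates and decay constants are illustrative choices, not the settings used in this study (which are not given here), and the toy problem says nothing about how each optimizer behaves on the actual CNN.

```python
import math


def grad(theta):
    # Gradient of the toy objective f(theta) = (theta - 3)^2, minimised at theta = 3.
    return 2.0 * (theta - 3.0)


def run(update, steps=200, theta=0.0):
    state = {}  # per-optimizer accumulators (moments, squared-gradient sums, ...)
    for t in range(1, steps + 1):
        theta = update(theta, grad(theta), state, t)
    return theta


def sgd(theta, g, state, t, lr=0.1):
    return theta - lr * g


def adagrad(theta, g, state, t, lr=0.5, eps=1e-8):
    state["G"] = state.get("G", 0.0) + g * g  # running sum of squared gradients
    return theta - lr * g / (math.sqrt(state["G"]) + eps)


def rmsprop(theta, g, state, t, lr=0.05, rho=0.9, eps=1e-8):
    state["v"] = rho * state.get("v", 0.0) + (1 - rho) * g * g  # moving average of g^2
    return theta - lr * g / (math.sqrt(state["v"]) + eps)


def adadelta(theta, g, state, t, rho=0.95, eps=1e-6):
    state["Eg2"] = rho * state.get("Eg2", 0.0) + (1 - rho) * g * g
    # Step = -(RMS of past updates / RMS of gradients) * g; no global learning rate.
    delta = -math.sqrt(state.get("Ed2", 0.0) + eps) / math.sqrt(state["Eg2"] + eps) * g
    state["Ed2"] = rho * state.get("Ed2", 0.0) + (1 - rho) * delta * delta
    return theta + delta


def adam(theta, g, state, t, lr=0.1, b1=0.9, b2=0.999, eps=1e-8):
    state["m"] = b1 * state.get("m", 0.0) + (1 - b1) * g
    state["v"] = b2 * state.get("v", 0.0) + (1 - b2) * g * g
    m_hat = state["m"] / (1 - b1 ** t)  # bias-corrected first moment
    v_hat = state["v"] / (1 - b2 ** t)  # bias-corrected second moment
    return theta - lr * m_hat / (math.sqrt(v_hat) + eps)


for name, fn in [("SGD", sgd), ("Adam", adam), ("Adadelta", adadelta),
                 ("Adagrad", adagrad), ("RMSprop", rmsprop)]:
    print(f"{name:8s} theta after 200 steps: {run(fn):.4f}")
```

Adagrad, RMSprop, Adadelta and Adam all rescale each step by a running statistic of squared gradients; they differ in whether that statistic accumulates forever (Adagrad) or decays (the others), and in whether a momentum-like first moment is kept (Adam).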

4. Simulation Results

4.1. Performance Evaluation

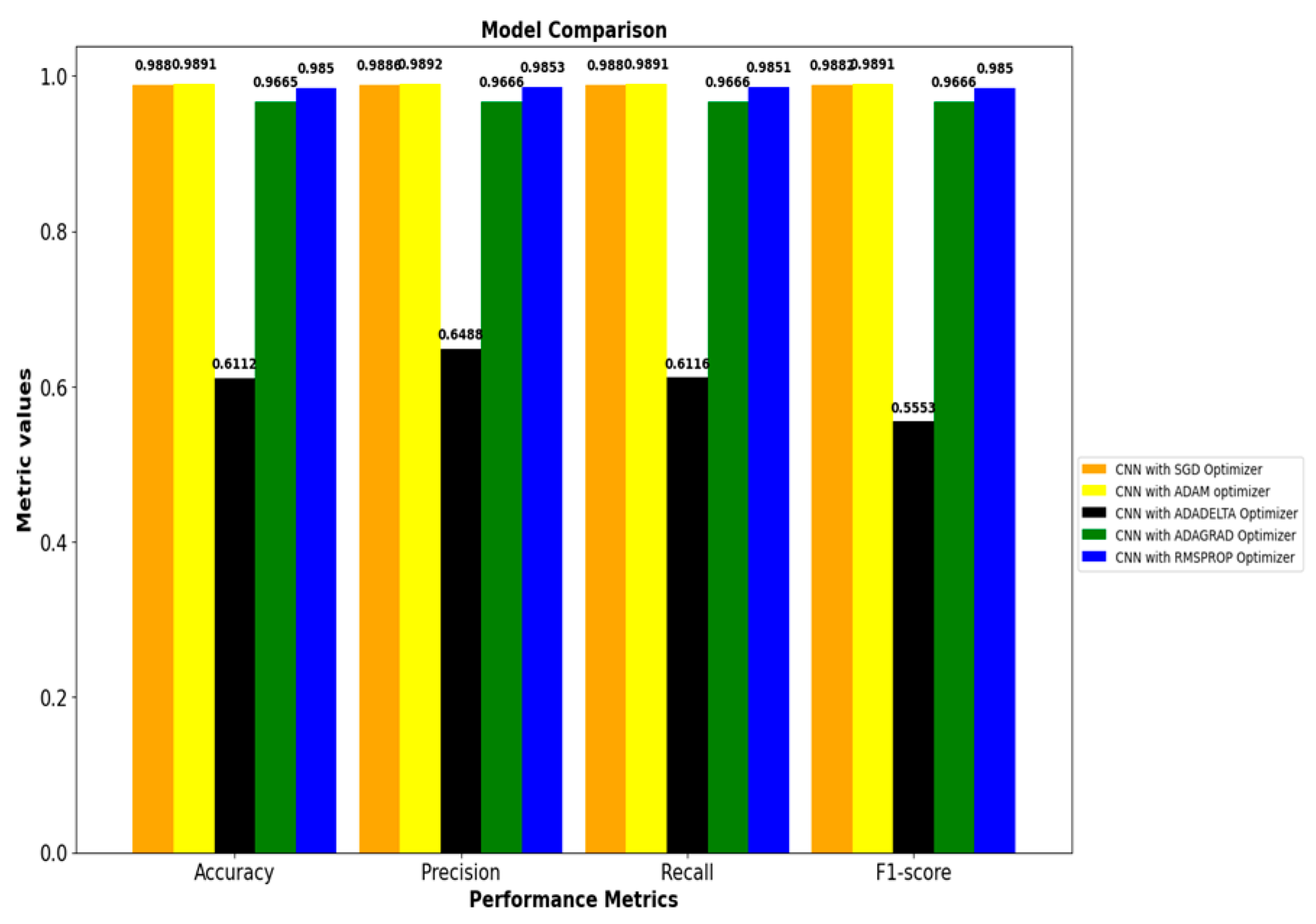

4.2. Performance Comparison

| Metric | CNN with SGD Optimizer | CNN with Adam Optimizer | CNN with Adadelta Optimizer | CNN with Adagrad Optimizer | CNN with RMSprop Optimizer |
|---|---|---|---|---|---|
| Accuracy | 0.9880 | 0.9891 | 0.6112 | 0.9665 | 0.9850 |
| Precision | 0.9886 | 0.9892 | 0.6488 | 0.9666 | 0.9853 |
| Recall | 0.9880 | 0.9891 | 0.6116 | 0.9666 | 0.9851 |
| F1-score | 0.9882 | 0.9891 | 0.5553 | 0.9666 | 0.9850 |
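The averaging scheme behind the multi-class precision, recall and F1 values is not stated. Recall equals or almost equals accuracy in every column: support-weighted recall always equals accuracy exactly, and macro-averaged recall equals it when the classes are balanced, so the small gaps (for example 0.6116 versus 0.6112 for Adadelta) hint at macro averaging over nearly balanced classes, though the table alone cannot confirm this. A minimal pure-Python sketch of both schemes, with hypothetical toy labels:

```python
from collections import Counter


def classification_metrics(y_true, y_pred, average="weighted"):
    """Accuracy plus macro- or support-weighted precision, recall and F1."""
    labels = sorted(set(y_true) | set(y_pred))
    support = Counter(y_true)
    n = len(y_true)
    pairs = list(zip(y_true, y_pred))
    accuracy = sum(t == p for t, p in pairs) / n
    per_class = {}
    for c in labels:
        tp = sum(t == c and p == c for t, p in pairs)
        fp = sum(t != c and p == c for t, p in pairs)
        fn = sum(t == c and p != c for t, p in pairs)
        prec = tp / (tp + fp) if tp + fp else 0.0  # zero when the class is never predicted
        rec = tp / (tp + fn) if tp + fn else 0.0
        f1_c = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        per_class[c] = (prec, rec, f1_c)
    if average == "macro":
        weights = {c: 1 / len(labels) for c in labels}  # every class counts equally
    else:  # "weighted": each class counts in proportion to its true support
        weights = {c: support[c] / n for c in labels}
    precision, recall, f1 = (sum(weights[c] * per_class[c][i] for c in labels) for i in range(3))
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical toy labels for a two-class intrusion-detection style task.
y_true = ["benign", "benign", "benign", "attack", "attack"]
y_pred = ["benign", "benign", "attack", "attack", "benign"]
weighted = classification_metrics(y_true, y_pred, "weighted")
macro = classification_metrics(y_true, y_pred, "macro")
print(weighted)
print(macro)
```

On these toy labels accuracy and weighted recall are both 0.6, while macro recall is 7/12 because the minority class counts as much as the majority class.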

5. Discussion

6. Conclusions


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).