Submitted: 22 August 2025

Posted: 25 August 2025

Abstract

Keywords:

1. Introduction

2. Related Works

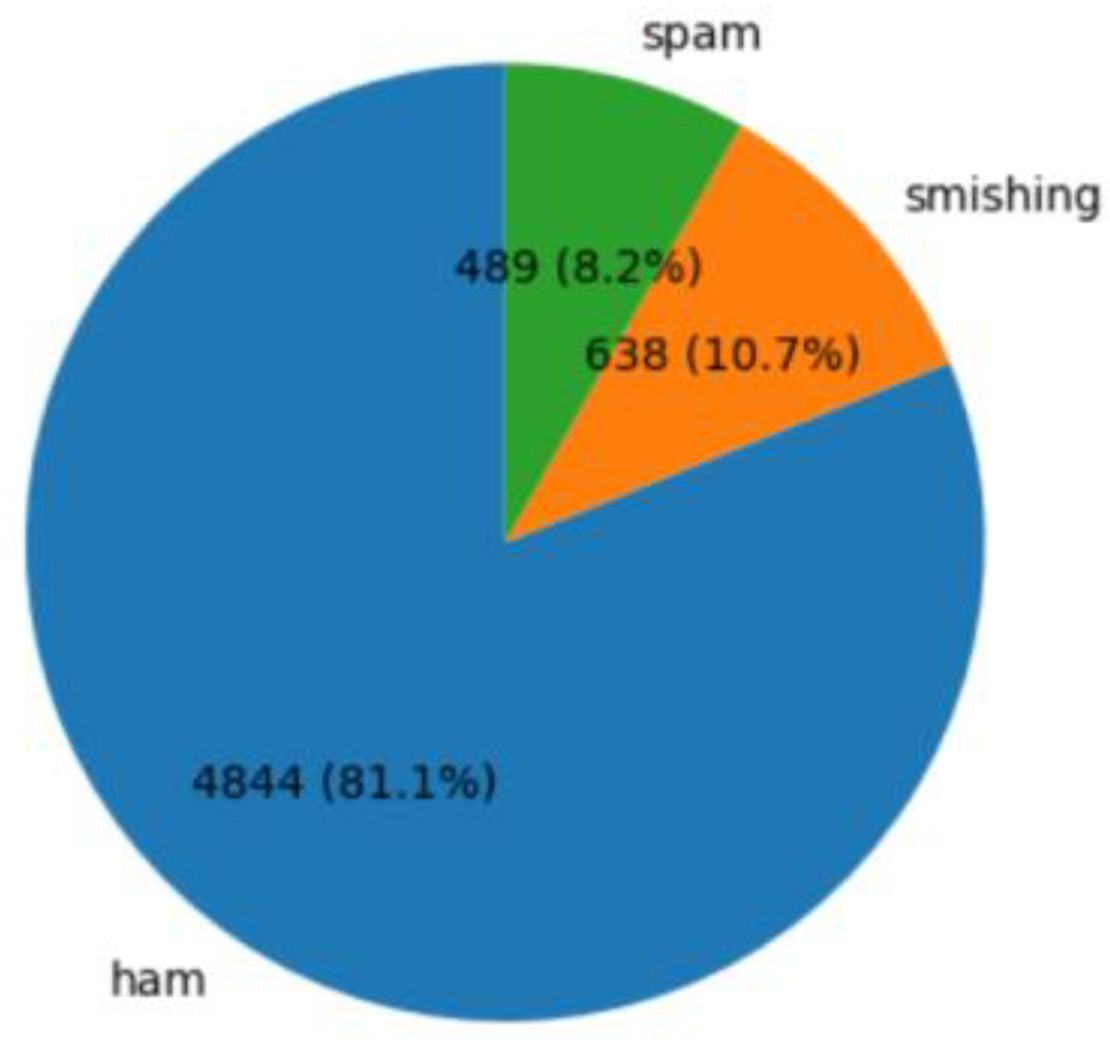

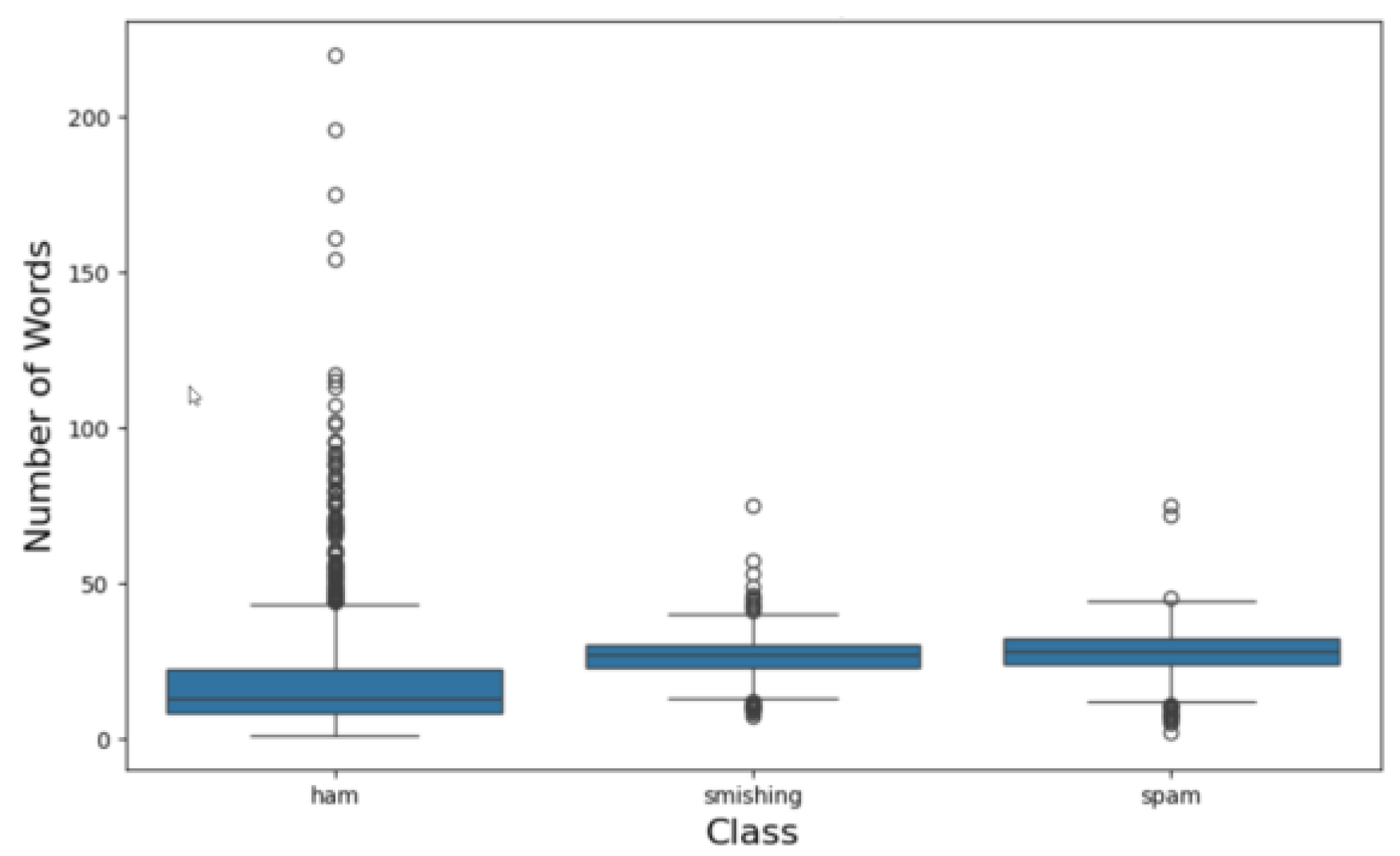

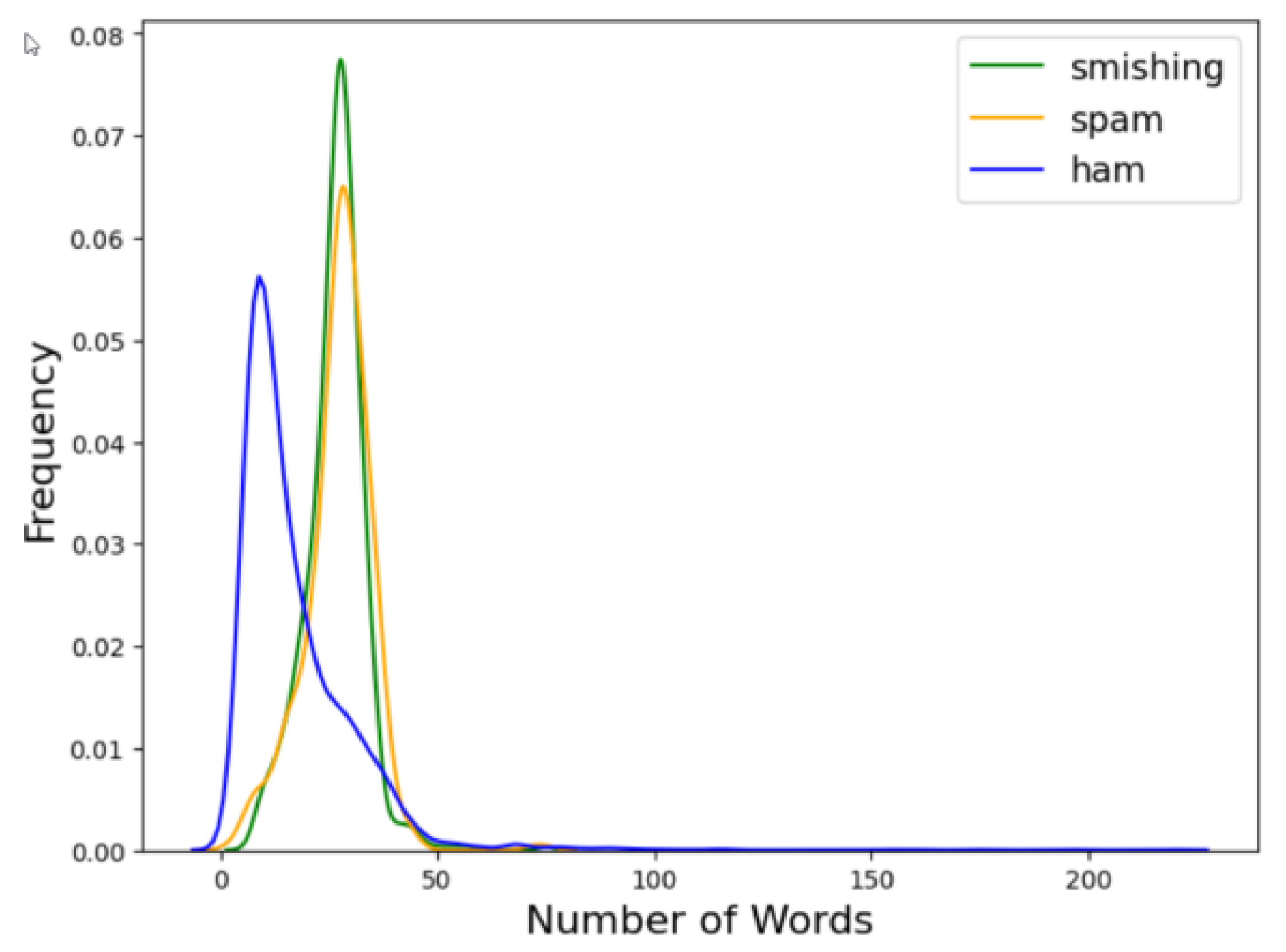

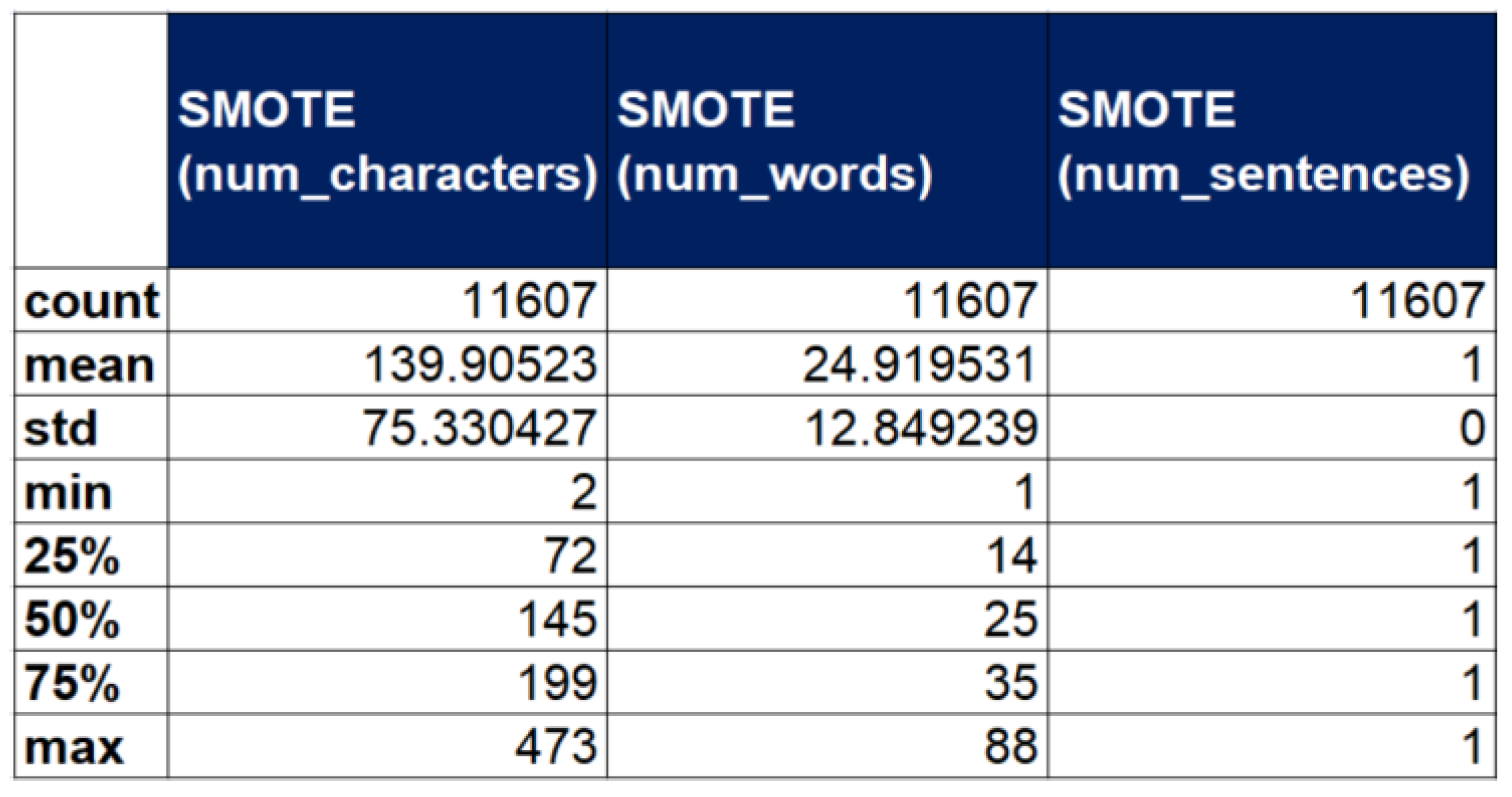

3. Phishing Dataset Specifications

4. Design and Implementation

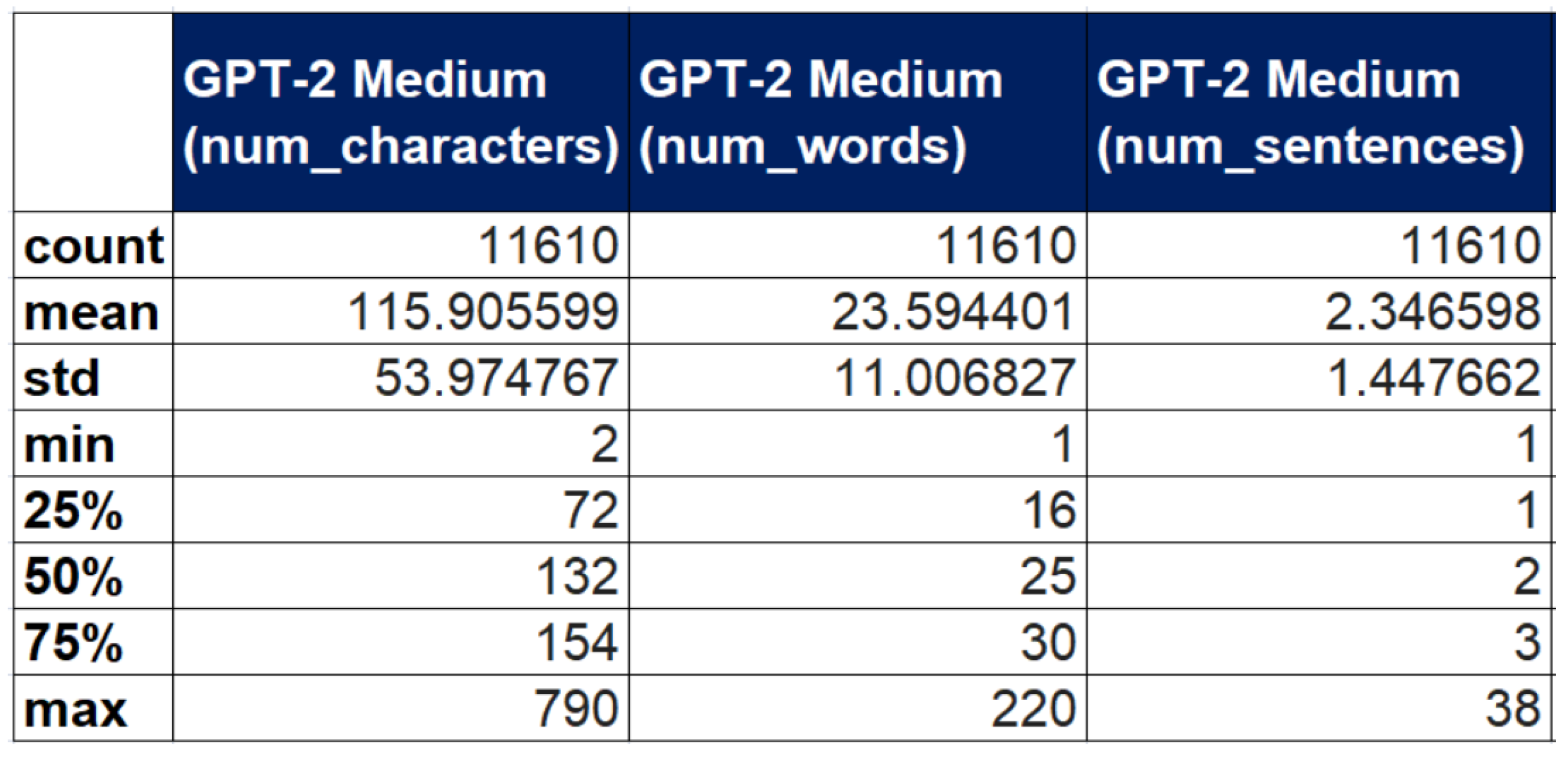

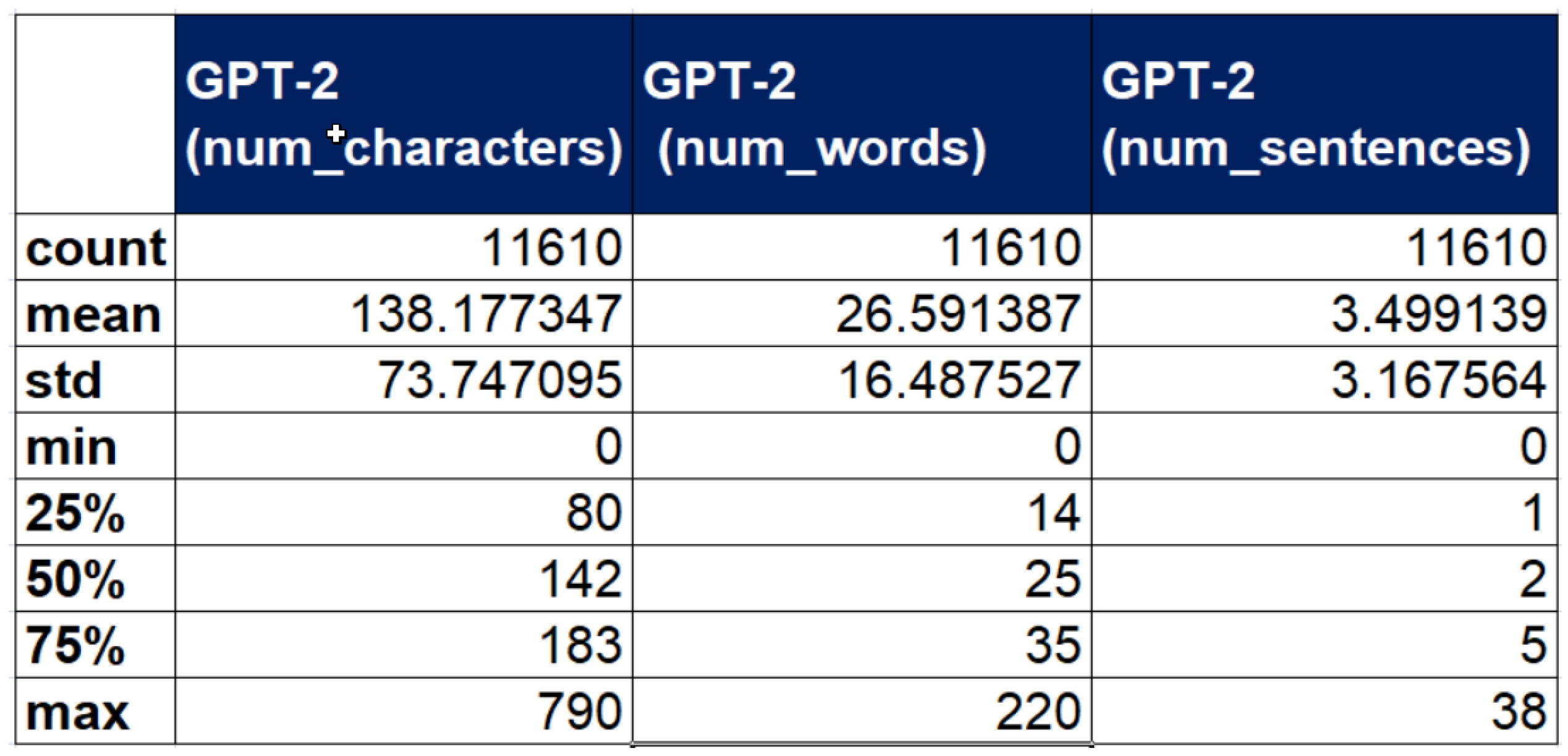

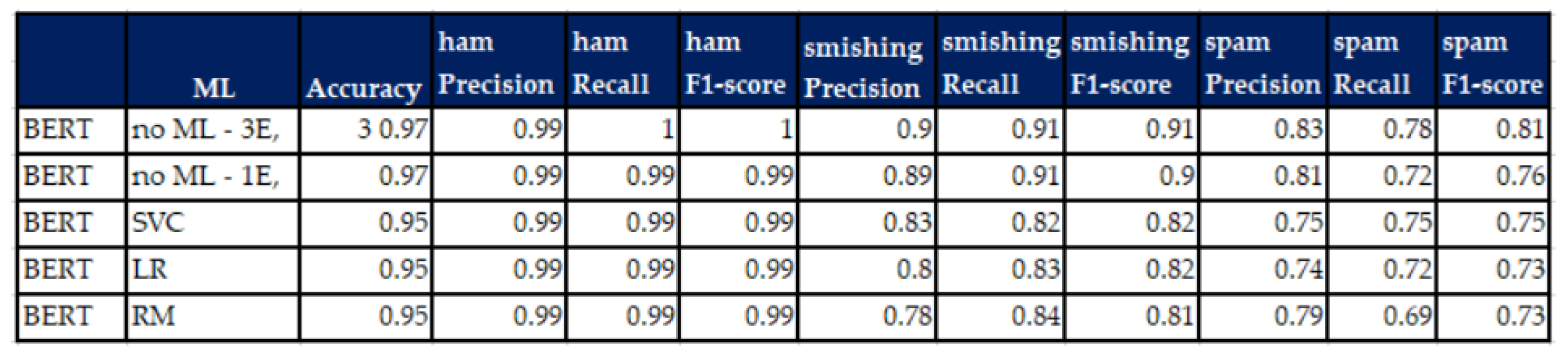

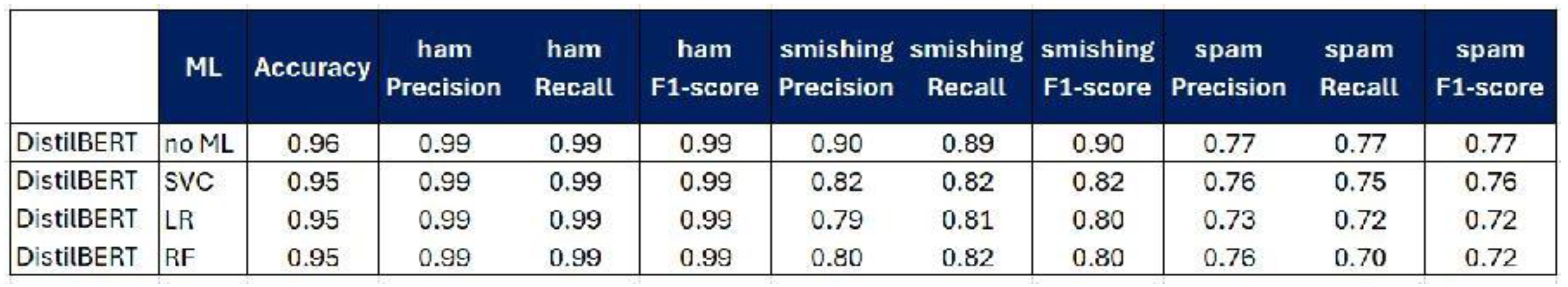

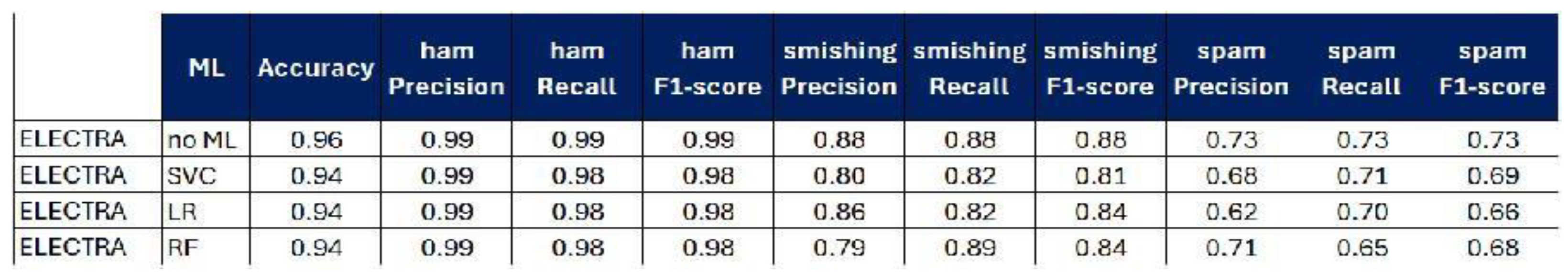

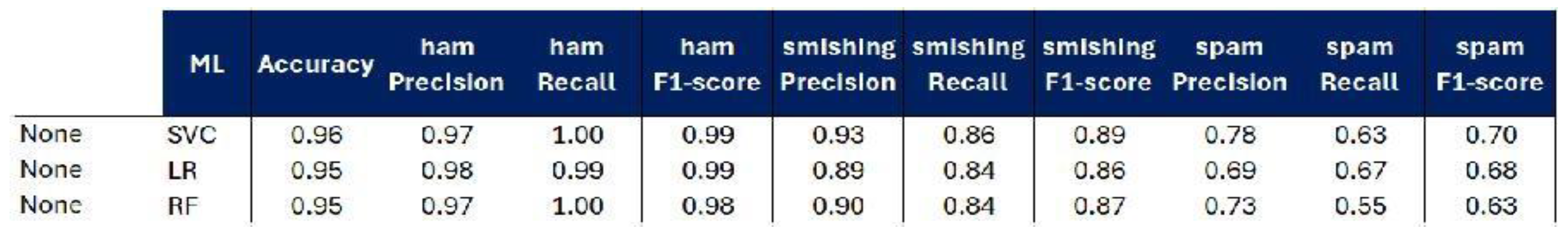

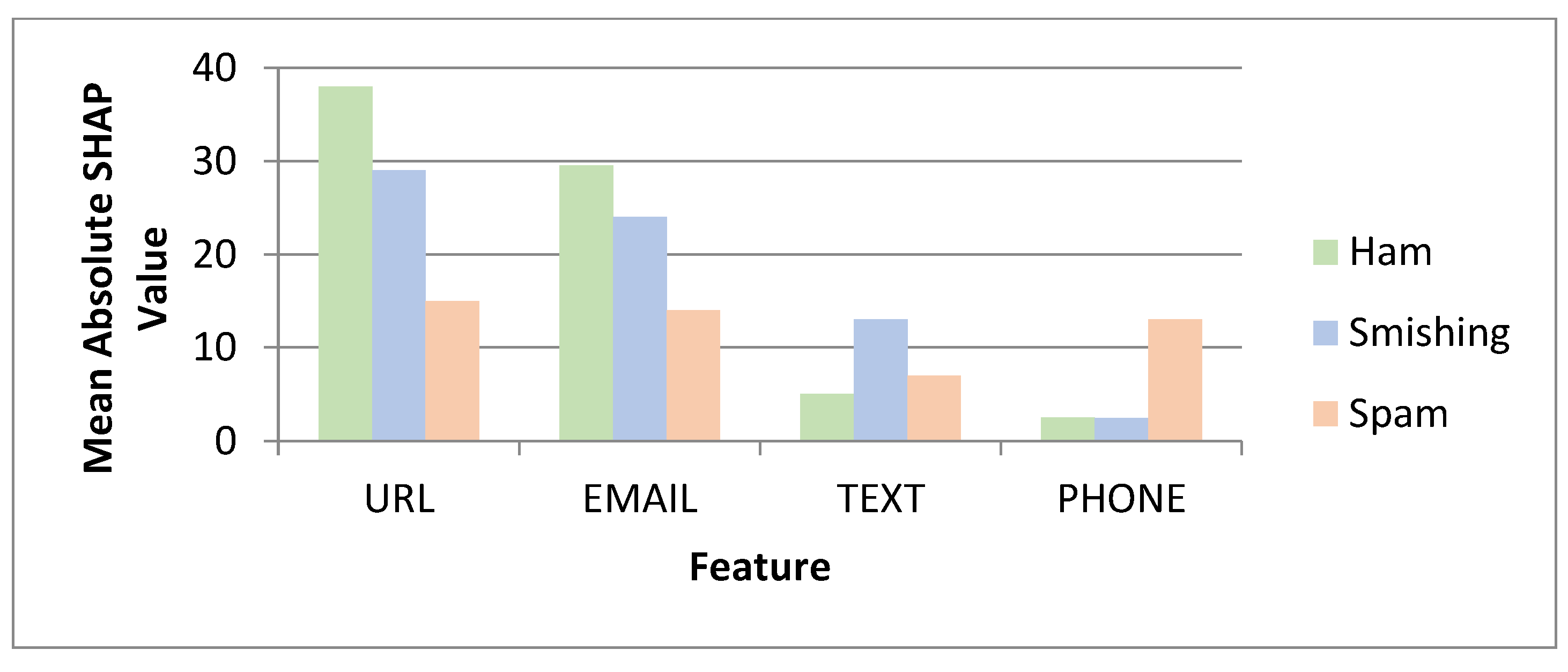

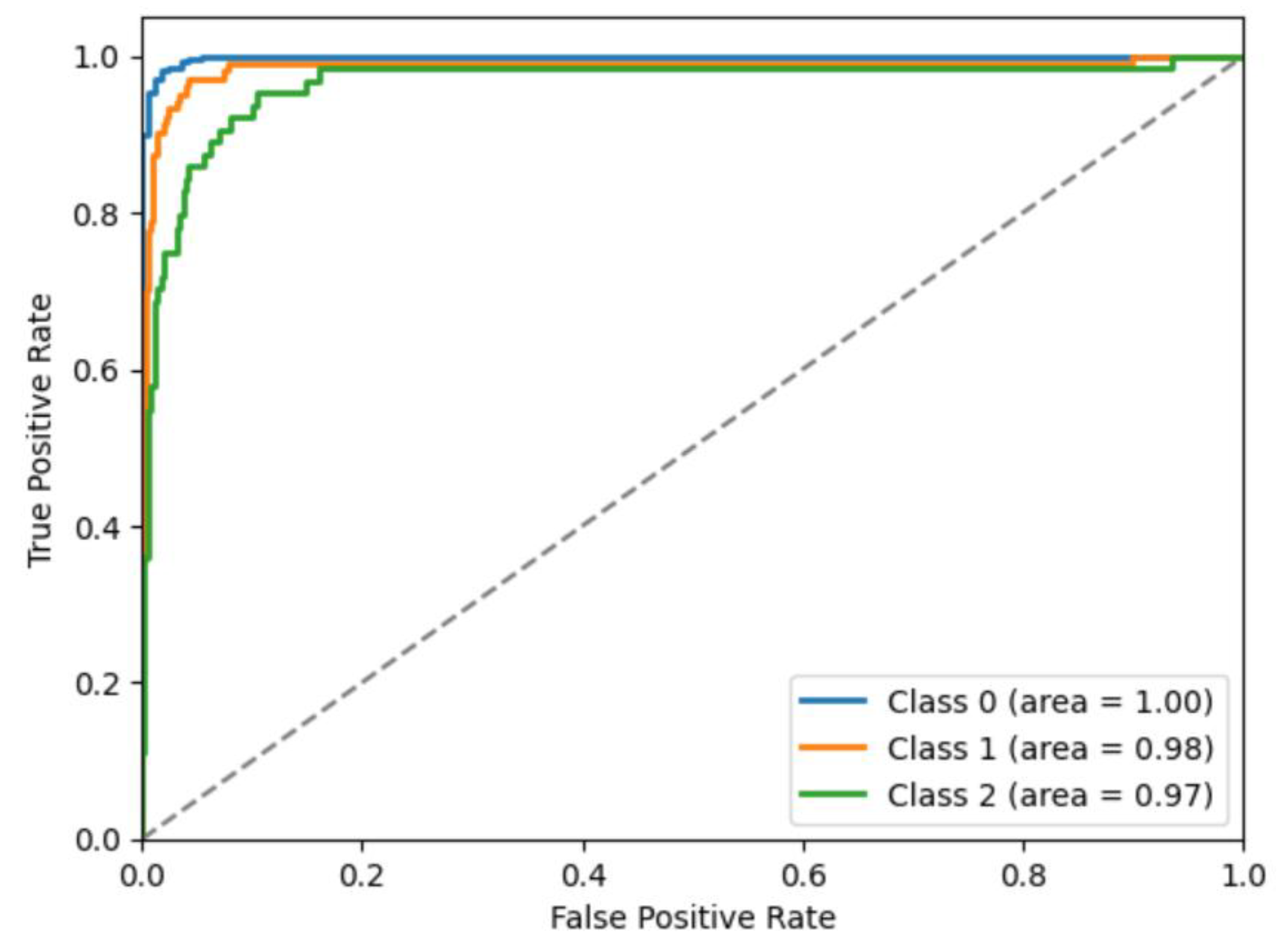

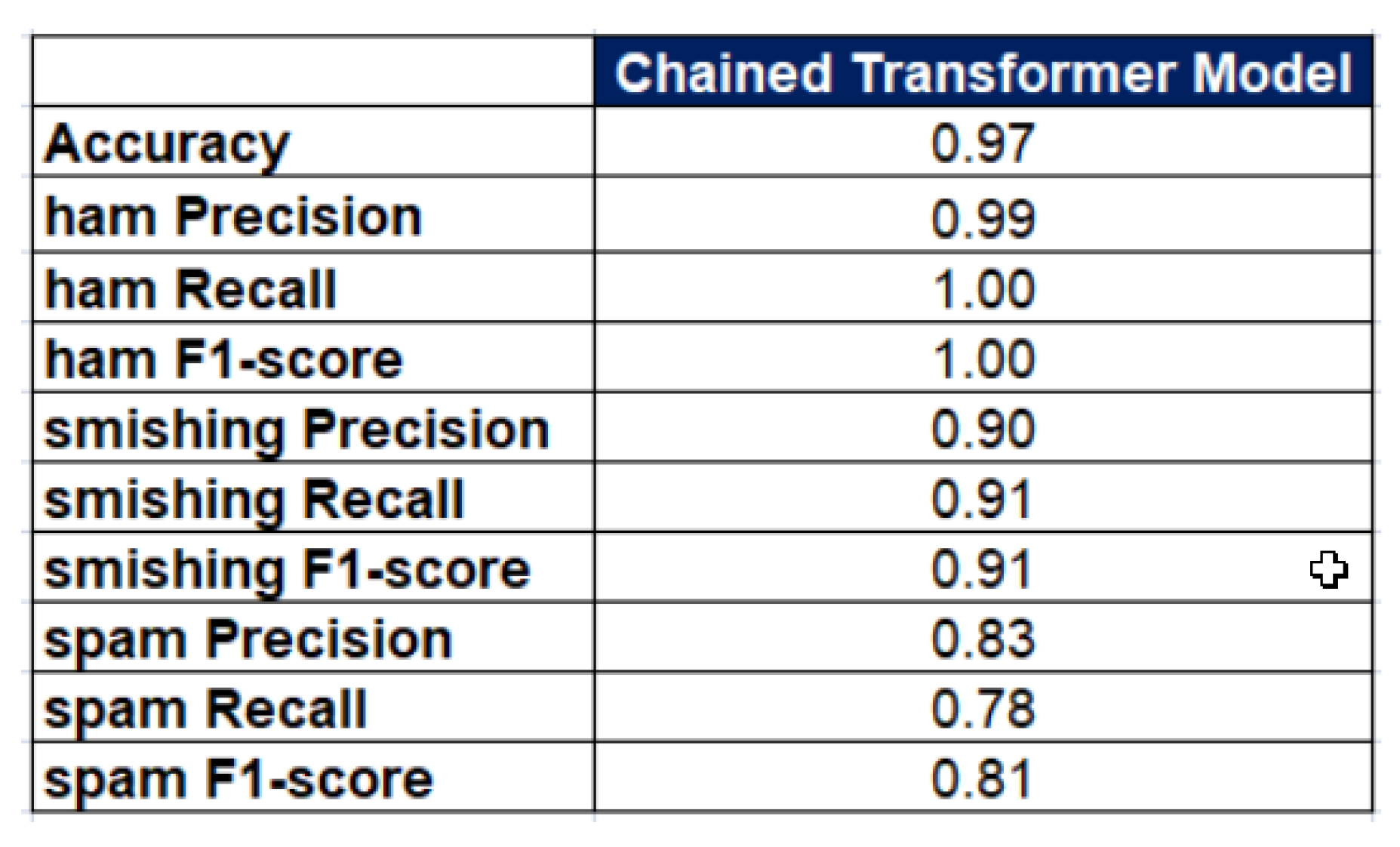

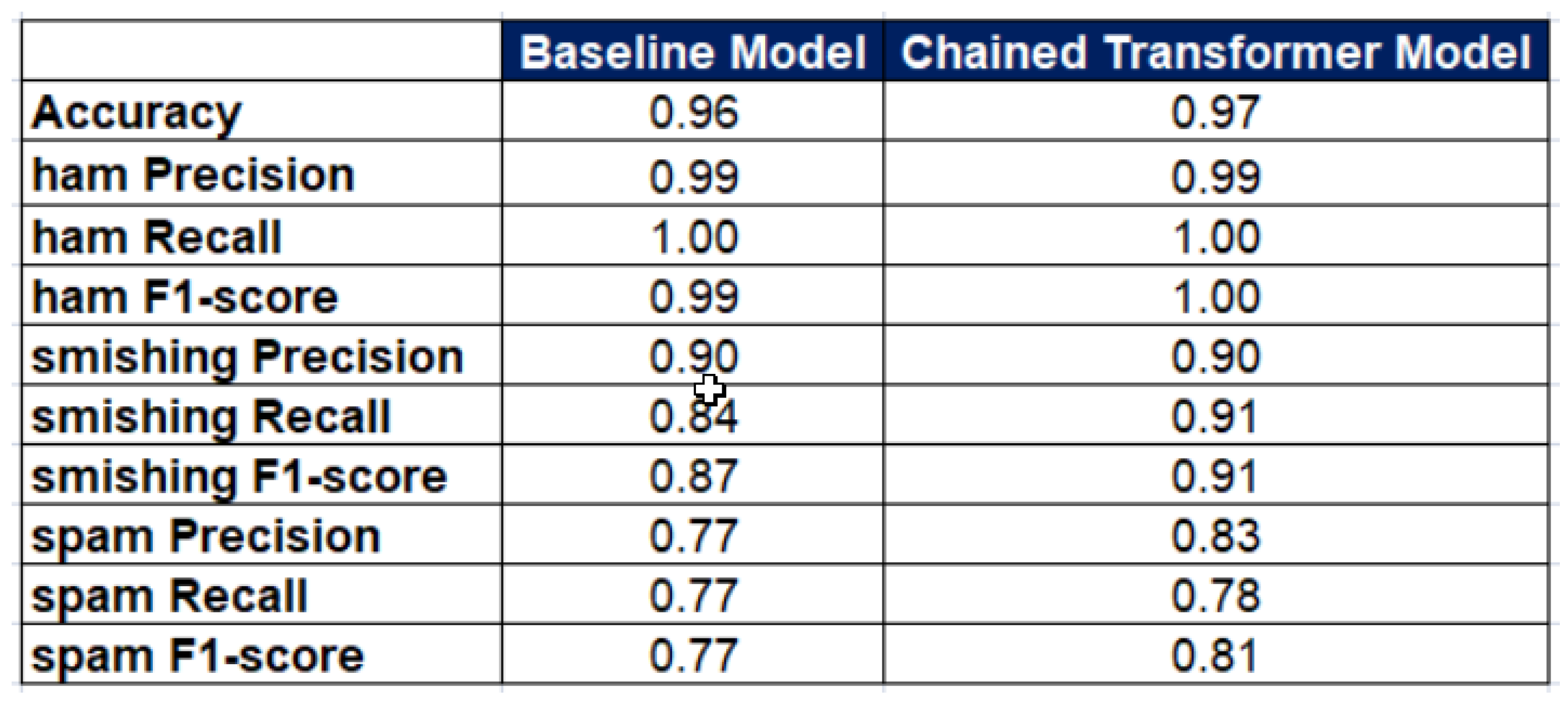

5. Results

6. Conclusion and Future Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| SMS | Short Message Service |
| GPT-2 | Generative Pre-trained Transformer 2 |
| BERT | Bidirectional Encoder Representations from Transformers |
| URL | Uniform Resource Locator |
| LSTM | Long Short-Term Memory |
| SMSPD | SMS Phishing Dataset for Machine Learning and Pattern Recognition |
| KDE | Kernel Density Estimation |
| EDA | Exploratory Data Analysis |
| SHAP | Shapley Additive Explanations |
| DistilBERT | Distilled BERT |
| ELECTRA | Efficiently Learning an Encoder that Classifies Token Replacements Accurately |
| ML | Machine Learning |
| SMOTE | Synthetic Minority Oversampling Technique |
| SVC | Support Vector Classifier |
| ROC | Receiver Operating Characteristic |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).