A. Datasets

In this study, we use the Super-NaturalInstructions dataset as the primary basis for investigating instruction tuning and multi-task generalization. The dataset covers a wide range of natural language processing tasks, including classification, generation, ranking, and question answering. Each task is accompanied by a natural language instruction and a set of input-output pairs, which makes the dataset well suited to research on instruction-driven unified modeling. Its scale and consistent structure support the study of model generalization and adaptability under diverse task instructions.

Each task in Super-NaturalInstructions contains multiple samples, each consisting of a task instruction, an input text, and an expected output. The tasks span diverse linguistic structures, semantic types, and depths of reasoning, which makes the dataset suitable for analyzing how models respond to semantic variation and distributional heterogeneity. All instructions are written in natural language, reflecting an interaction style that closely resembles real-world applications.
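To make the sample format concrete, the sketch below represents one sample as a small record and joins its instruction and input into a single prompt string. The field names, the `Sample` class, the `to_prompt` helper, and the prompt template are all hypothetical; they are neither the dataset's official schema nor the exact template used in our experiments.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    """One instruction-tuning sample: a task instruction, an input text, and the expected output."""
    task_name: str
    instruction: str
    input_text: str
    output: str


def to_prompt(sample: Sample) -> str:
    """Join the instruction and input into one model prompt (hypothetical template)."""
    return f"Instruction: {sample.instruction}\n\nInput: {sample.input_text}\nOutput:"


# A made-up sample for illustration only.
s = Sample(
    task_name="sentiment_example",
    instruction="Classify the sentiment of the review as positive or negative.",
    input_text="The battery lasts all day.",
    output="positive",
)
print(to_prompt(s))
```

During fine-tuning, the model would be trained to produce `s.output` as the continuation of this prompt.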

Another advantage of this dataset lies in its cross-task organization, which enables a unified framework for multi-task fine-tuning. By comparing task similarities and variations in instruction type, researchers can systematically evaluate the generalization capacity of instruction tuning. This organization also provides a foundation for exploring the theoretical boundaries of instruction tuning and the optimization strategies that address them.
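One way the cross-task organization supports generalization studies is by holding out whole task categories, so that evaluation tasks are unseen during fine-tuning. The following is a minimal sketch under that assumption; the `split_by_category` helper and the category labels are hypothetical and do not describe the exact split used in this paper.

```python
def split_by_category(task_categories, heldout_categories):
    """Partition task names into seen (training) and unseen (evaluation) lists.

    task_categories maps each task name to its category label. Every task whose
    category is in heldout_categories is held out entirely, so no instruction from
    an unseen category is observed during fine-tuning.
    """
    seen, unseen = [], []
    for task, category in sorted(task_categories.items()):
        (unseen if category in heldout_categories else seen).append(task)
    return seen, unseen


# Hypothetical task-to-category mapping.
tasks = {"task_a": "sentiment", "task_b": "sentiment", "task_c": "nli", "task_d": "qa"}
seen, unseen = split_by_category(tasks, {"qa"})
print(seen, unseen)
```

Splitting by category rather than by individual task keeps closely related tasks from leaking between the training and evaluation sets.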

B. Experimental Results

First, we compare our method with other instruction tuning methods. The experimental results are shown in Table 1.

The results in Table 1 show that instruction tuning methods differ substantially in multi-task generalization. UnifiedPrompt, the baseline, achieves an average task accuracy of 72.4%, the lowest among the methods discussed here, and it also has the least favorable Generalization Gap and Gradient Conflict Score. This indicates limited generalization to unseen tasks and evident conflicts in task optimization.
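This section does not restate how the Generalization Gap is computed. A common reading, assumed here rather than taken from the paper, is the mean accuracy on seen (training) tasks minus the mean accuracy on unseen tasks, in percentage points. The sketch below implements that assumed definition; the function name and the toy accuracies are illustrative and are not results from Table 1.

```python
from statistics import mean


def generalization_gap(seen_acc, unseen_acc):
    """Assumed definition: mean seen-task accuracy minus mean unseen-task accuracy.

    Both arguments map task names to accuracy in percent. A smaller gap means
    that performance transfers better to tasks not seen during fine-tuning.
    """
    return mean(seen_acc.values()) - mean(unseen_acc.values())


# Toy numbers for illustration only, not values from Table 1.
seen = {"t1": 80.0, "t2": 76.0}
unseen = {"t3": 72.0, "t4": 70.0}
print(generalization_gap(seen, unseen))
```

Under this definition, the toy example yields a gap of 7.0 points (78.0 seen versus 71.0 unseen).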

MTL-LoRA introduces a parameter-efficient tuning strategy that modestly raises task accuracy to 74.8% and reduces the Generalization Gap to 6.9%. This suggests better adaptation to the task distribution while preserving parameter efficiency. Its Gradient Conflict Score is also lower than that of UnifiedPrompt, indicating more coordinated gradient updates during multi-task training.
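For readers unfamiliar with parameter-efficient tuning, the sketch below shows the generic low-rank adaptation (LoRA) forward pass, h = Wx + (alpha / r) BAx, in which the pretrained weight W stays frozen and only the small matrices A and B are trained. This is plain LoRA written with Python lists for self-containment; it does not reproduce MTL-LoRA's multi-task extensions, and the helper names are hypothetical.

```python
def matvec(m, v):
    """Multiply matrix m (a list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_forward(W, A, B, x, alpha, r):
    """Generic LoRA forward pass: h = W x + (alpha / r) * B (A x).

    W (d_out x d_in) is frozen; only A (r x d_in) and B (d_out x r) are trained,
    so the trainable parameter count is r * (d_in + d_out) instead of d_in * d_out.
    """
    base = matvec(W, x)
    delta = matvec(B, matvec(A, x))
    scale = alpha / r
    return [b + scale * d for b, d in zip(base, delta)]


W = [[1.0, 0.0], [0.0, 1.0]]  # frozen pretrained weight (identity for illustration)
A = [[0.5, 0.5]]              # rank r = 1 down-projection
B = [[0.0], [0.0]]            # up-projection, zero-initialized as in standard LoRA
x = [2.0, 4.0]
print(lora_forward(W, A, B, x, alpha=1.0, r=1))  # [2.0, 4.0]: the adapter is a no-op at initialization
```

Zero-initializing B means fine-tuning starts exactly from the pretrained model's behavior.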

Prompt-aligned further adds a gradient alignment mechanism that effectively reduces interference among tasks. Its accuracy rises to 76.3%, the Generalization Gap decreases to 5.7%, and the Gradient Conflict Score drops substantially to 0.21. These results show that gradient alignment promotes generalization and more balanced training, making it an effective structural optimization technique.
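The Gradient Conflict Score is likewise not defined in this section. One plausible definition, assumed here for illustration only, averages the negative part of the cosine similarity between every pair of per-task gradients: aligned or orthogonal pairs contribute 0 and directly opposed pairs contribute 1, so lower is better, matching the direction of the reported scores. The function names below are hypothetical.

```python
import math
from itertools import combinations


def cosine(u, v):
    """Cosine similarity between two gradient vectors (0.0 if either has zero norm)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm > 0 else 0.0


def gradient_conflict_score(task_grads):
    """Assumed definition: mean of max(0, -cos(g_i, g_j)) over all task pairs.

    Requires at least two task gradients; the result lies in [0, 1].
    """
    pairs = list(combinations(task_grads, 2))
    return sum(max(0.0, -cosine(g_i, g_j)) for g_i, g_j in pairs) / len(pairs)


# Three toy task gradients: only the first and third are in direct conflict.
grads = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
print(gradient_conflict_score(grads))
```

In the toy example, one of three pairs is fully opposed, so the score is 1/3.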

Our method achieves the best results on all metrics. In particular, the Gradient Conflict Score falls to 0.13, indicating very little conflict among tasks, and the Generalization Gap is only 4.2%. These results validate the combined design of joint instruction representation modeling, task similarity regularization, and gradient projection. Together, these components substantially improve the robustness and generalization of multi-task fine-tuning, underscoring both the theoretical strengths and the practical value of our approach in cross-task settings.
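The exact gradient projection rule is not specified in this section. As an illustration of the general idea, the sketch below implements a PCGrad-style projection (Yu et al., 2020), a well-known technique that we substitute here as an assumption rather than present as our method: whenever a task gradient has a negative dot product with another task's gradient, its component along that gradient is removed. Unlike the original PCGrad, which visits the other tasks in random order, this sketch uses a fixed order so the result is deterministic.

```python
def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def project_conflicting(task_grads):
    """PCGrad-style projection with a fixed (not random) task order.

    For each task gradient g_i and every other task j with g_i . g_j < 0,
    set g_i <- g_i - (g_i . g_j / ||g_j||^2) g_j, using the original g_j.
    Returns the sum of the projected gradients as the shared update direction.
    """
    projected = []
    for i, g in enumerate(task_grads):
        g = list(g)
        for j, other in enumerate(task_grads):
            if i == j:
                continue
            d = dot(g, other)
            sq = dot(other, other)
            if d < 0 and sq > 0:
                g = [a - d / sq * b for a, b in zip(g, other)]
        projected.append(g)
    return [sum(col) for col in zip(*projected)]


# Two toy task gradients whose dot product is negative (-1.0).
grads = [[1.0, 1.0], [-1.0, 0.0]]
print(project_conflicting(grads))
```

Here the projected gradients become [0.0, 1.0] and [-0.5, 0.5], each orthogonal to the other task's original gradient, and their sum [-0.5, 1.5] is the update applied to the shared parameters.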

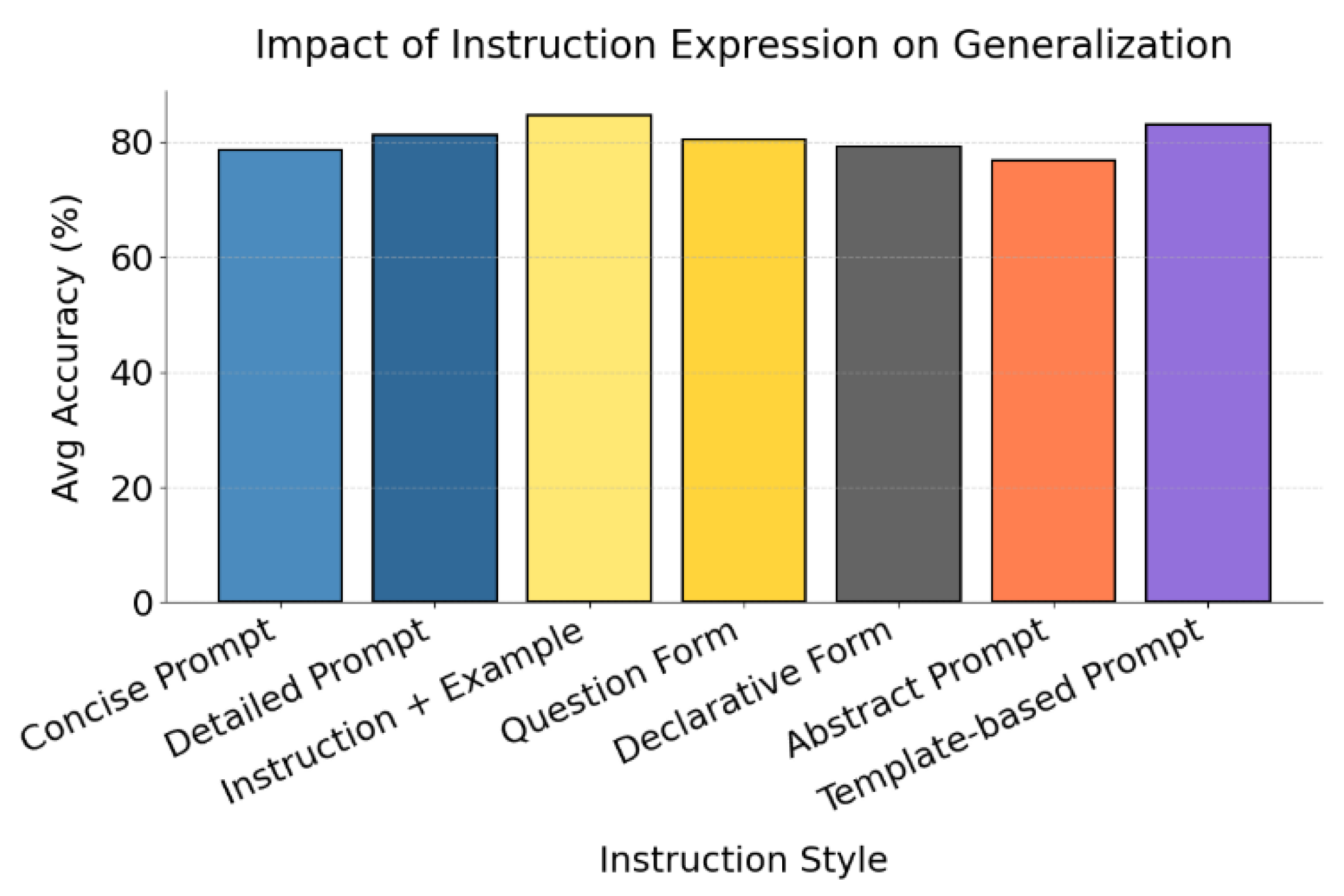

Furthermore, we analyze how different instruction formulations affect the model's generalization ability. The results are shown in Figure 2.

The figure shows that the model's performance fluctuates markedly across instruction formulations, which directly reflects the critical impact of instruction expression on multi-task generalization. In particular, the "Instruction + Example" and "Template-based Prompt" formats achieve clearly higher accuracy than the other formats. This indicates that structured instruction design and example guidance can significantly enhance the model's ability to understand and transfer task semantics.

In contrast, formats such as "Abstract Prompt" and "Declarative Form" perform relatively poorly. These expressions are more abstract or neutral in tone and often fail to state the task objective clearly. The results suggest that without explicit operational cues or task boundaries, the model has difficulty representing the target accurately. This finding underscores the importance of task alignment mechanisms in instruction tuning and supports the view that vague instructions can exacerbate conflicts in task representation.
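To illustrate what varying only the instruction formulation means in practice, the sketch below renders the same input under the four format names from Figure 2. The template strings and the `render` helper are hypothetical stand-ins, not the templates used in our experiments. Consistent with the description above, the "Declarative Form" and "Abstract Prompt" variants deliberately omit an explicit output cue.

```python
FORMATS = ("Instruction + Example", "Template-based Prompt", "Declarative Form", "Abstract Prompt")


def render(fmt, instruction, text, example=None):
    """Render one task input under a given instruction format (hypothetical templates)."""
    if fmt == "Instruction + Example":
        if example is None:
            raise ValueError("this format needs an (input, output) demonstration")
        ex_in, ex_out = example
        return (f"{instruction}\nExample input: {ex_in}\nExample output: {ex_out}\n"
                f"Input: {text}\nOutput:")
    if fmt == "Template-based Prompt":
        return f"Task: {instruction}\nInput: {text}\nAnswer:"
    if fmt == "Declarative Form":
        # Neutral statement with no explicit operation or output slot.
        return f"The following is a text sample. {text}"
    if fmt == "Abstract Prompt":
        # Omits the operational instruction entirely.
        return f"Consider the text: {text}"
    raise ValueError(f"unknown format: {fmt}")


instruction = "Classify the sentiment of the review as positive or negative."
for fmt in FORMATS:
    prompt = render(fmt, instruction, "The screen cracked in a week.",
                    example=("Great battery life.", "positive"))
    print(f"--- {fmt} ---\n{prompt}")
```

Holding the input and labels fixed while swapping only the template isolates the effect of instruction expression on accuracy.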

Instruction types with clear, specific, and even redundant cues tend to perform better. These features help the model reduce mapping errors between instruction expressions and task semantics. In our modeling framework, instruction embeddings are jointly modeled with input representations. Therefore, differences in instruction form directly influence the stability of representation learning and the generalization performance. This further validates our hypothesis about the model's sensitivity to instruction semantics.
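The reasoning above relies on instruction embeddings being modeled jointly with input representations. As a minimal sketch, assuming a simple concatenate-then-project fusion (a hypothetical choice; this section does not specify the fusion operator), the example below shows that changing only the instruction vector changes the joint representation of an identical input.

```python
def joint_representation(instr_emb, input_emb, W):
    """Fuse instruction and input embeddings by concatenation plus a linear projection.

    W must have len(instr_emb) + len(input_emb) columns; the output dimension is len(W).
    Because the instruction vector feeds every output unit, rewording the instruction
    alone changes the representation passed to later layers.
    """
    z = list(instr_emb) + list(input_emb)
    return [sum(w * v for w, v in zip(row, z)) for row in W]


# Hypothetical 2-d instruction and input embeddings, with a 2 x 4 projection.
W = [[1.0, 0.0, 1.0, 0.0],
     [0.0, 1.0, 0.0, 1.0]]
x = [0.2, 0.4]  # the same input embedding in both calls
h_a = joint_representation([1.0, 0.0], x, W)  # instruction phrasing A
h_b = joint_representation([0.0, 1.0], x, W)  # instruction phrasing B
print(h_a, h_b)
```

If two phrasings of the same task map to distant instruction embeddings, the joint representations also diverge, which is one mechanism by which instruction form can affect the stability of representation learning.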

In summary, the experimental results highlight the decisive role of instruction design in multi-task learning. They also empirically support our exploration of the theoretical boundaries of instruction tuning. Future optimization processes may benefit from structured prompt generation or automatic instruction reformulation. These strategies could further improve generalization and robustness, enabling more stable adaptation across tasks.

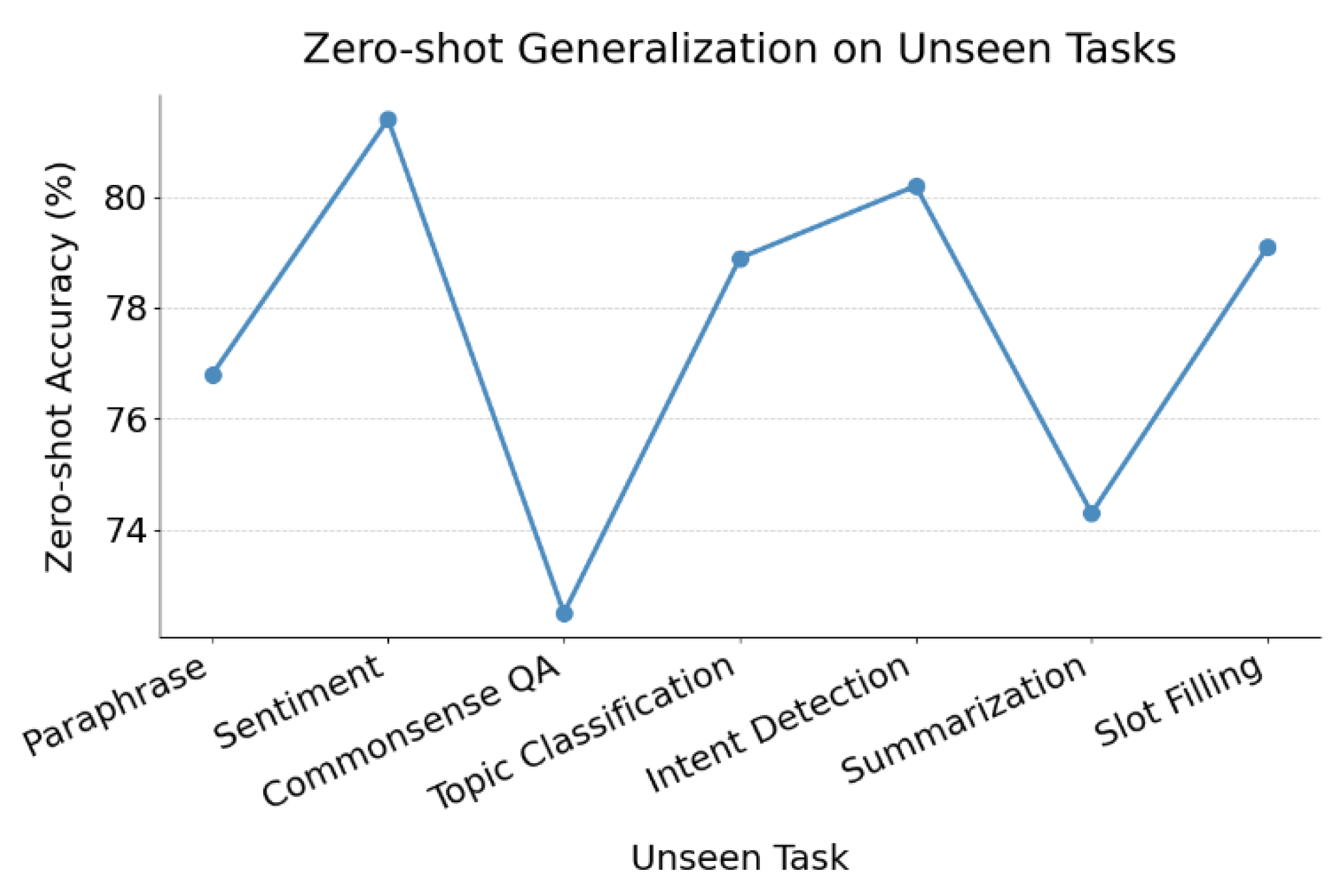

We also compare the zero-shot generalization capabilities of different fine-tuning strategies on unseen tasks, as shown in Figure 3.

Figure 3 shows that zero-shot accuracy on unseen tasks varies noticeably from task to task. This indicates that while the model has some cross-task transfer capability, its performance remains closely tied to task type. For example, it performs well on tasks such as "Sentiment" and "Intent Detection," with accuracy exceeding 80%. This suggests that the model transfers prior knowledge more readily to tasks with clear goals and well-defined semantic boundaries.

However, the model performs relatively poorly on tasks like "Topic Classification" and "Commonsense QA." This shows that when facing tasks with complex semantic distributions, abstract categories, or implicit reasoning requirements, the model struggles to generalize even when instruction formats remain consistent. These results confirm the importance of task characteristics in determining model adaptability during instruction tuning, especially under zero-shot conditions without explicit supervision.
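Per-task zero-shot comparisons like these reduce to grouping predictions by task and computing accuracy within each group. The sketch below assumes case-insensitive exact-match scoring, which may differ from the metric used for Figure 3; the helper names and the toy records are hypothetical and are not our evaluation data.

```python
from collections import defaultdict


def per_task_accuracy(records):
    """Exact-match accuracy (in percent) per task.

    records is an iterable of (task, prediction, gold) triples; predictions and gold
    labels are compared after stripping whitespace and lowercasing (an assumption).
    """
    correct, total = defaultdict(int), defaultdict(int)
    for task, pred, gold in records:
        total[task] += 1
        correct[task] += int(pred.strip().lower() == gold.strip().lower())
    return {t: 100.0 * correct[t] / total[t] for t in total}


def tasks_above(acc, threshold=80.0):
    """Names of tasks whose accuracy exceeds the threshold, sorted alphabetically."""
    return sorted(t for t, a in acc.items() if a > threshold)


# Toy records for illustration only.
records = [
    ("task_a", "positive", "positive"),
    ("task_a", "Negative", "negative"),
    ("task_b", "sports", "politics"),
    ("task_b", "politics", "politics"),
]
acc = per_task_accuracy(records)
print(acc, tasks_above(acc))
```

In this toy run, task_a scores 100.0 and task_b scores 50.0, so only task_a clears the 80% threshold.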

This performance gap also supports our hypothesis that instruction semantics influence representation learning paths. The model's success on unseen tasks depends on its ability to extract generalizable instruction-to-task mappings from prior instruction-input pairs. Tasks with limited generalization often involve a larger semantic gap or structural mismatch between the instruction and the actual task goal.

Overall, this experiment highlights the theoretical boundary issues faced by instruction tuning strategies when building unified multi-task representations. Although existing methods demonstrate some degree of zero-shot generalization, representation conflicts caused by task heterogeneity remain a key factor limiting model robustness. These findings further underline the need for gradient alignment mechanisms and similarity-based regularization to improve generalization performance.

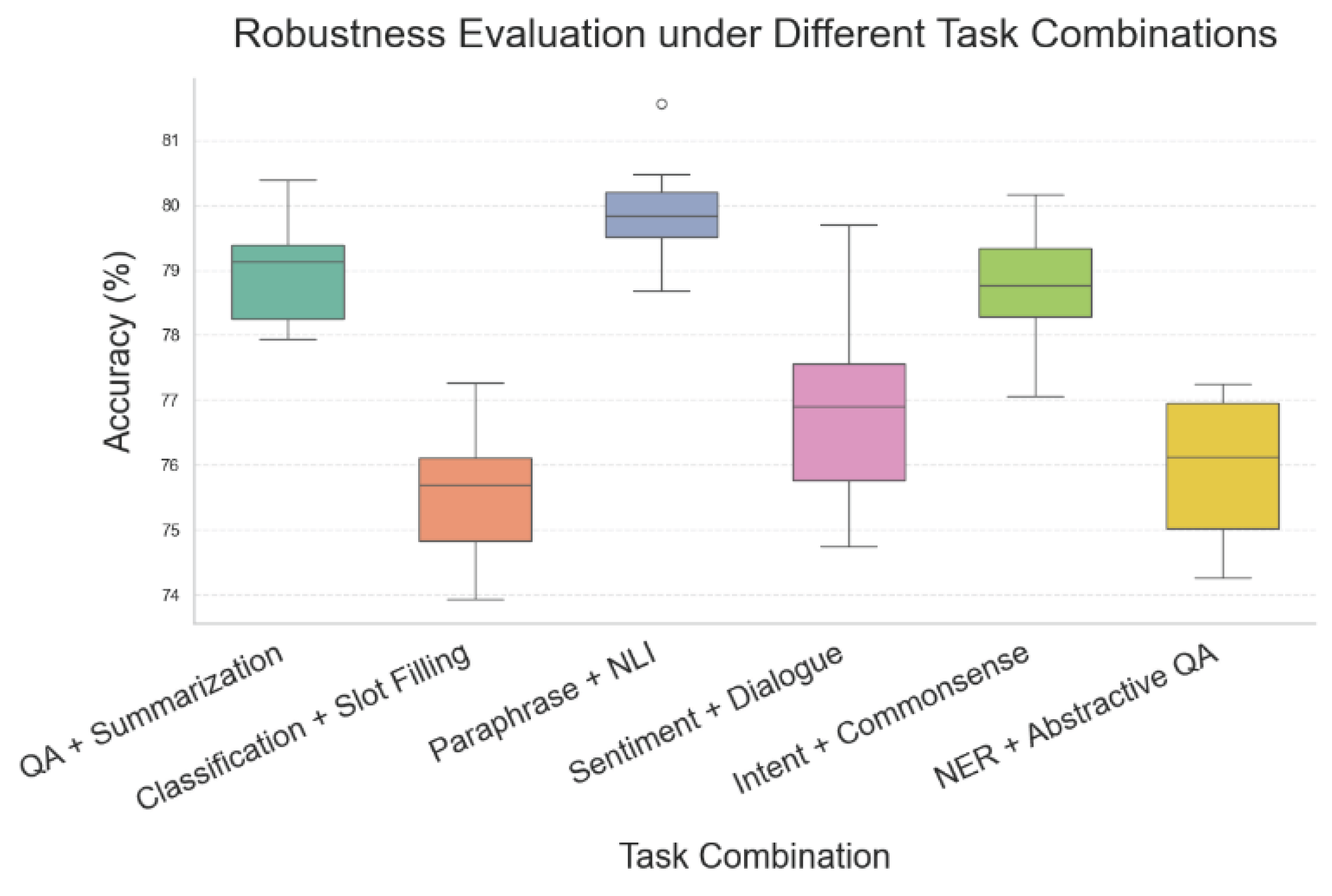

Finally, we evaluate the robustness of the instruction-tuned model under different task combinations. The experimental results are shown in Figure 4.

Figure 4 presents the robustness of instruction-tuned models under different task combinations. The box plots of accuracy distributions show how well the model adapts to task switching and how much its performance varies. Combinations with strong semantic relevance or logical consistency, such as "Paraphrase + NLI" and "Intent + Commonsense," perform stably: their accuracy values are tightly concentrated, with narrow upper and lower bounds. This suggests that when instruction information is sufficient and task paradigms are aligned, the model transfers effectively across tasks and remains robust.

In contrast, combinations such as "Classification + Slot Filling" and "NER + Abstractive QA" show more dispersed distributions, and some results fall to markedly low accuracy. This implies that when task structures differ greatly and information is expressed inconsistently, the model struggles to align instructions and generalize across tasks. This pattern is consistent with the theoretical robustness boundary proposed in this paper: task heterogeneity is a key factor affecting the stability of multi-task models, especially under a unified instruction paradigm.
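The stability comparison in Figure 4 is read from box-plot summaries: the median, the interquartile range (IQR), the whiskers, and any outliers below the lower whisker. The sketch below computes these summaries with the Python standard library, assuming the common Tukey convention of whiskers at 1.5 × IQR, since the figure's whisker rule is not stated. The `box_stats` name and the sample accuracies are illustrative only.

```python
from statistics import quantiles


def box_stats(acc):
    """Box-plot summary of a list of accuracies (needs at least two values).

    Quartiles use linear interpolation ('inclusive' method); whiskers extend to the
    most extreme values within 1.5 * IQR of the quartiles (Tukey convention).
    """
    q1, q2, q3 = quantiles(acc, n=4, method="inclusive")
    iqr = q3 - q1
    lo_fence, hi_fence = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    inside = [a for a in acc if lo_fence <= a <= hi_fence]
    return {
        "median": q2,
        "iqr": iqr,
        "whisker_low": min(inside),
        "whisker_high": max(inside),
        "outliers": sorted(a for a in acc if a < lo_fence or a > hi_fence),
    }


# Hypothetical accuracies from five runs of one task combination.
print(box_stats([78.0, 79.0, 80.0, 81.0, 60.0]))
```

A stable combination shows a small IQR and no low outliers, while a heterogeneous combination shows a wide IQR or isolated low values such as the 60.0 flagged here.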

Overall, these experimental results highlight the importance of considering semantic relevance and structural compatibility when designing instruction tuning systems. Introducing task similarity constraints and targeted optimization mechanisms can further improve generalization and robustness under complex task switches. This also provides theoretical support for future work on multi-task scheduling strategies and automatic instruction generation.
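As one concrete form a task similarity constraint could take, the sketch below penalizes differences between task-specific parameters in proportion to how similar the tasks' instruction embeddings are, so that similar tasks share structure while dissimilar tasks stay decoupled. This regularizer, its weight `lam`, and the helper names are hypothetical illustrations and not the exact formulation used in our method.

```python
import math
from itertools import combinations


def cosine(u, v):
    """Cosine similarity between two vectors (0.0 if either has zero norm)."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def similarity_penalty(task_embs, task_params, lam=0.1):
    """Hypothetical regularizer: lam * sum_{i<j} s_ij * ||theta_i - theta_j||^2.

    s_ij = max(0, cos(e_i, e_j)) is the similarity of the two tasks' instruction
    embeddings. Similar tasks are pulled toward shared parameters; dissimilar
    tasks (s_ij = 0) are left free, limiting negative transfer.
    """
    total = 0.0
    for i, j in combinations(range(len(task_embs)), 2):
        s_ij = max(0.0, cosine(task_embs[i], task_embs[j]))
        dist_sq = sum((a - b) ** 2 for a, b in zip(task_params[i], task_params[j]))
        total += s_ij * dist_sq
    return lam * total


# Tasks 0 and 1 share an instruction direction; task 2 is orthogonal to both.
embs = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
params = [[0.0], [1.0], [5.0]]
print(similarity_penalty(embs, params))
```

Only the similar pair (tasks 0 and 1) contributes to the penalty here, giving 0.1 × 1.0; the large parameter difference involving the orthogonal task 2 is not penalized. In training, this term would be added to the summed task losses.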