The HB thermodynamic formalism allows us to define a probability density, see (10), which is related to the microcanonical one but is "projected" onto the configuration space. Instead of prescribing the interaction between the elementary units of the system, all the information about the system is encoded in its potential energy u, which depends on multiple parameters, and in the energy e. So we are using a formalism which, loosely speaking, lies between statistical mechanics and catastrophe theory, and a probability density which does not belong to an exponential family. Nevertheless, see (16) and (32), an analogous relation still holds for multidimensional HB thermodynamics (compare with (38)).

We want to investigate whether the divergence of elements of the Fisher matrix at particular values of the parameters can detect qualitative changes in the dynamics of mechanical systems described by a potential energy u.
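For reference, the standard definition of the Fisher matrix of a parametric density is recalled below. We assume that (33) takes this form; θ = (θ_1, ..., θ_k) is our own generic name for the parameters, which include the energy e, and I(e) is our notation for the one-parameter case.

```latex
g_{ij}(\theta) = \int p(x;\theta)\,
  \frac{\partial \log p(x;\theta)}{\partial \theta_i}\,
  \frac{\partial \log p(x;\theta)}{\partial \theta_j}\,dx ,
\qquad
I(e) = \int p(x;e)\left(\frac{\partial \log p(x;e)}{\partial e}\right)^{2} dx .
```

Since v^T g v is the expected square of the combination Σ_i v_i ∂_i log p, the matrix g is singular whenever the score functions ∂_i log p are linearly dependent.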

5.1. Harmonic Potential Energy

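Let us consider the simplest potential energy with no external parameters, a quadratic function on ℝ^d with its minimum at the origin. This energy represents d independent one-dimensional harmonic oscillators. The region of configuration space accessible at energy e is then a ball in ℝ^d centred at the origin, whose radius grows with e. Computing the relevant integrals over this ball, which involve the Gamma function Γ, we obtain the entropy function.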
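The energy dependence of these integrals follows from homogeneity alone. As a hedged sketch, assume (our hypothesis, since the explicit formulas are not reproduced here) that u(x) = |x|²/2 and that the entropy has the volume form S(e) = log Φ(e), with Φ(e) the integral of (e − u(x))^β over {u ≤ e} for some exponent β > 0 (hypothetical notation). The substitution x = √e y then gives:

```latex
\begin{aligned}
\Phi(e) &= \int_{\{u\le e\}} \bigl(e-u(x)\bigr)^{\beta}\,dx
         = e^{\beta+d/2}\int_{\{u\le 1\}} \bigl(1-u(y)\bigr)^{\beta}\,dy
         = e^{\beta+d/2}\,\Phi(1),\\
S(e) &= \Bigl(\beta+\tfrac{d}{2}\Bigr)\log e + \log\Phi(1),\qquad
T = \Bigl(\frac{dS}{de}\Bigr)^{-1} = \frac{e}{\beta+d/2},\qquad
C = \frac{de}{dT} = \beta+\frac{d}{2}.
\end{aligned}
```

Under these assumptions the constant Φ(1) is where the Gamma function enters, and the specific heat does not depend on e. For instance, β = d/2 gives C = d, the Boltzmann-Gibbs value for d oscillators (k_B = 1).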

By direct computation we get the temperature, and from it the specific heat, which does not depend on the energy; this result also holds in Boltzmann-Gibbs statistical mechanics (see [19]). The one-dimensional Fisher matrix, also called the Fisher information, is given by (33), evaluated with the probability density (29) of this harmonic system. Computing the average explicitly (note that it exists only for a restricted range of the parameters), we see that the Fisher information diverges when the system energy e tends to the minimum of the potential energy u.
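The divergence can be checked on a toy model. The sketch below assumes, purely for illustration, that u(x) = |x|²/2 on ℝ^d and that the density is p(x; e) = (e − u(x))^α / Z(e) on {u ≤ e}, with a hypothetical exponent α > 1. One natural choice is α = d/2 − 1, the exponent obtained by projecting the microcanonical measure with d momentum components onto configuration space. This density need not coincide with (29). Rescaling x = √(2e) y shows that Z(e) is proportional to e^(α + d/2) and that v = |y|² follows a Beta(d/2, α + 1) law. This gives the closed form I(e) = d(α + d/2) / (2(α − 1)e²), which the code compares with a direct quadrature of E[(∂_e log p)²].

```python
# Toy model (assumption, not necessarily density (29)):
#   u(x) = |x|^2 / 2 on R^d,  p(x; e) = (e - u(x))**alpha / Z(e) on {u <= e}.
# Rescaling x = sqrt(2e) y gives Z(e) ~ e**(alpha + d/2), and v = |y|^2 ~ Beta(d/2, alpha + 1).


def fisher_closed_form(e: float, d: int, alpha: float) -> float:
    """I(e) = d*(alpha + d/2) / (2*(alpha - 1)*e**2), finite only for alpha > 1."""
    if alpha <= 1:
        raise ValueError("E[(d/de log p)^2] diverges for alpha <= 1 in this toy model")
    return d * (alpha + d / 2) / (2 * (alpha - 1) * e ** 2)


def fisher_quadrature(e: float, d: int, alpha: float, n: int = 200_000) -> float:
    """Midpoint-rule estimate of E[(d/de log p)^2] using the variable v = |x|^2/(2e)."""
    m = alpha + d / 2                            # Z(e) is proportional to e**m
    num = 0.0
    den = 0.0
    for i in range(n):
        v = (i + 0.5) / n                        # midpoint of the i-th cell of (0, 1)
        w = 1.0 - v                              # w = (e - u(x)) / e
        weight = v ** (d / 2 - 1) * w ** alpha   # unnormalised Beta(d/2, alpha + 1) density
        score = (alpha / w - m) / e              # d/de log p = alpha/(e - u) - m/e
        num += weight * score ** 2
        den += weight
    return num / den


if __name__ == "__main__":
    d = 10
    alpha = d / 2 - 1                            # projected-microcanonical exponent (assumption)
    for e in (1.0, 0.1, 0.01):
        print(e, fisher_closed_form(e, d, alpha), fisher_quadrature(e, d, alpha))
```

In this toy model I(e) grows like 1/e² as e → 0⁺. The average is finite only for α > 1, which for α = d/2 − 1 means d > 4.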

Remark. This quadratic potential energy is the prototype for the description of a mechanical system in the vicinity of a minimum of the potential energy. By a translation of the reference frame we can suppose that the minimum is at the origin and that the potential energy vanishes there. A Taylor expansion then compares u, near the minimum, with quadratic potentials determined by the smallest and greatest (real) eigenvalues of the symmetric Hessian matrix of u at the minimum. So we can conclude that the Fisher information diverges when the energy e approaches the minimum of the potential energy from above. This can be interpreted by recalling that the Fisher information measures the change in the "shape" of the probability distribution with respect to a change in the parameter e. When the energy becomes equal to the minimum of the potential energy, the probability density becomes a Dirac delta concentrated at the minimum. We can say that the divergence of the Fisher information at the minimum energy corresponds to the qualitative "phase transition" between a point orbit, when e equals the minimum, and a set of d closed orbits for the d oscillators, when e exceeds it.
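The Taylor argument can be written out as follows. We assume (our notation, not necessarily the document's) that u(0) = 0 and that the minimum is nondegenerate, i.e. the Hessian H = D²u(0) is positive definite with smallest and greatest eigenvalues μ_min > 0 and μ_max. Here B(0, r) denotes the ball of radius r centred at the origin.

```latex
\begin{aligned}
&u(x) = \tfrac12\, x^{\top} H x + o(|x|^{2}), \qquad
 \mu_{\min}|x|^{2} \le x^{\top} H x \le \mu_{\max}|x|^{2},\\
&\Rightarrow\ \forall\,\varepsilon\in(0,\mu_{\min})\ \exists\,\delta>0:\quad
 \tfrac12(\mu_{\min}-\varepsilon)|x|^{2} \le u(x) \le \tfrac12(\mu_{\max}+\varepsilon)|x|^{2}
 \quad (|x|<\delta),\\
&\Rightarrow\ B\!\Bigl(0,\sqrt{2e/(\mu_{\max}+\varepsilon)}\Bigr)\subseteq
 \{u\le e\}\cap B(0,\delta)\subseteq
 B\!\Bigl(0,\sqrt{2e/(\mu_{\min}-\varepsilon)}\Bigr).
\end{aligned}
```

The last line holds for e small enough that the inner ball lies in B(0, δ). Both radii are of order √e, so the accessible region near the minimum shrinks to the origin as e decreases to the minimum value. This is what makes the harmonic example the local prototype.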

5.2. The Elastic Chain

The following example shows that, for n-dimensional mechanical systems with convex potential energy, HB thermodynamics gives a sound description of the system's behavior (see also [7]).

Let us consider a chain of n + 1 point particles of mass m linked by linearly elastic springs and constrained to move on the real axis. Suppose that particle 0 is fixed at the origin and that the coordinate of the n-th particle is prescribed. The length of the chain, measured with respect to the equilibrium length of the n springs, is then a controlled parameter. Let us write the elongation of each spring in terms of the particle coordinates; the length constraint then prescribes the sum of the n elongations.

Due to the length constraint, the potential energy of the resulting (n-1)-dimensional system can be written in terms of the spring elongations. Therefore the accessible region is the intersection of the ball in ℝ^n of radius a with the (n-1)-dimensional hyperplane L defined by the length constraint. We compute the entropy by means of a change of variables; a standard computation for radial functions then gives it in closed form.
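The geometry behind this computation can be made explicit under simple assumptions, which are our hypotheses and notation. Each spring has stiffness k, and ξ = (ξ_1, ..., ξ_n) collects the spring elongations. The chain elongation is ℓ, so that the length constraint reads 𝟏·ξ = ℓ with 𝟏 = (1, ..., 1), and u(ξ) = (k/2)|ξ|². Splitting ξ into its component along 𝟏 and a component η orthogonal to it gives

```latex
\begin{aligned}
\xi &= \frac{\ell}{n}\,\mathbf 1 + \eta,\qquad \mathbf 1\cdot\eta = 0,\\
u(\xi) &= \frac{k}{2}\Bigl(\frac{\ell^{2}}{n} + |\eta|^{2}\Bigr)
        = \frac{k\ell^{2}}{2n} + \frac{k}{2}|\eta|^{2},\\
\{u\le e\}\cap L &= \Bigl\{\tfrac{\ell}{n}\mathbf 1+\eta:\
   |\eta|^{2}\le a^{2}-\tfrac{\ell^{2}}{n}\Bigr\},\qquad a^{2}=\frac{2e}{k}.
\end{aligned}
```

Under these assumptions the accessible set is an (n-1)-dimensional ball of radius √(a² − ℓ²/n) inside L, nonempty only for e ≥ kℓ²/(2n). The problem is therefore the harmonic one of Section 5.1 in dimension n-1, with e replaced by e − kℓ²/(2n).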

Let us compute the temperature of this elongated chain from the entropy; it coincides with the kinetic energy per degree of freedom. Let us then compute the pressure P corresponding to the controlled parameter, which is the elongation of the elastic chain. The resulting expression agrees with our physical intuition of the reaction force exerted by the chain on its controlled end. Finally, we compute the specific heat of the chain.
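The pressure can be checked numerically under assumptions that are ours rather than the document's. We take springs of stiffness k, a chain elongation ell, and an entropy of the shifted-harmonic form S(e, ell) = c·log(e − k·ell²/(2n)) + const. This is what a power-law volume in the n-1 transverse directions would give, with c > 0 a hypothetical constant. Temperature and pressure are taken as T = (∂S/∂e)⁻¹ and P = T·∂S/∂ell (assumed definitions), evaluated by central finite differences.

```python
import math


def entropy(e: float, ell: float, k: float, n: int, c: float) -> float:
    """Hypothetical S(e, ell) = c*log(e - k*ell**2/(2n)); requires e > k*ell**2/(2n)."""
    shifted = e - k * ell ** 2 / (2 * n)      # energy above the constrained minimum
    if shifted <= 0:
        raise ValueError("energy below the minimum allowed by the length constraint")
    return c * math.log(shifted)


def temperature_and_pressure(e, ell, k, n, c, h=1e-6):
    """T = 1/(dS/de) and P = T*dS/d(ell), both by central differences."""
    dS_de = (entropy(e + h, ell, k, n, c) - entropy(e - h, ell, k, n, c)) / (2 * h)
    dS_dl = (entropy(e, ell + h, k, n, c) - entropy(e, ell - h, k, n, c)) / (2 * h)
    T = 1.0 / dS_de
    return T, T * dS_dl


if __name__ == "__main__":
    k, n, ell, e, c = 2.0, 5, 1.5, 3.0, 2.0
    T, P = temperature_and_pressure(e, ell, k, n, c)
    # Analytically T = (e - k*ell**2/(2n))/c = 1.275 and P = -k*ell/n = -0.6:
    # the tension k*(ell/n) shared by the springs in series, pulling the end back.
    print(T, P)
```

In this sketch P does not depend on c, whereas T does. The reaction force −k·ell/n is the common tension of n springs in series, each stretched by ell/n.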

Remark. Note that the elastic chain model fails to define a statistical model because conditions 1) and 2) in Definition 1 are not met. Indeed, concerning condition 2), the score vectors with respect to the energy and to the elongation satisfy a linear relation, so they are not independent functions on the accessible region. Therefore we cannot compute the Fisher matrix for this mechanical system. If we consider only the energy parameter e, the system is similar to the previous example 1.
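The dependence of the scores can be seen under simple hypotheses for a quadratic chain. In our notation, k is the stiffness and ℓ the chain elongation. The coordinates η on the hyperplane L are measured from its point (ℓ/n)𝟏 closest to the origin, so that u = kℓ²/(2n) + (k/2)|η|². If the density on L is a normalised function of e − u, it depends on (e, ℓ) only through the shifted energy ε = e − kℓ²/(2n), say p(η; e, ℓ) = f(η; ε). The chain rule then gives, with I(ε) = E[(∂_ε log f)²],

```latex
\frac{\partial \log p}{\partial \ell}
  = -\frac{k\ell}{n}\,\frac{\partial \log f}{\partial \varepsilon}
  = -\frac{k\ell}{n}\,\frac{\partial \log p}{\partial e},
\qquad
g = I(\varepsilon)\begin{pmatrix} 1 & -k\ell/n\\[2pt] -k\ell/n & (k\ell/n)^{2}\end{pmatrix},
\qquad \det g = 0 .
```

So, in this setting, the Fisher matrix is singular, consistent with the statement that it cannot be used for this system.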

5.3. Two-Body System

In this example we consider an isolated system of two points of equal mass m subject to gravitational forces. If the positions of the two points are denoted by two vectors, the potential energy is the attractive Newtonian one, inversely proportional to their distance, and its coupling constant can be considered as a parameter. A system of points interacting via gravity requires a specific description in statistical mechanics. The long-range nature of the force prevents such systems from displaying the extensive character of the total energy. Also, it is known that gravitating systems exhibit the phenomenon of negative specific heat [24], a possibility which is excluded in the canonical description, see (36). Therefore, the correct statistical ensemble to adopt is the microcanonical one, see [25]. In [25] the above two-body system is modified to construct a toy model which exhibits all the features of a many-body self-gravitating system. This is obtained by imposing a short-range cutoff a on the inter-particle distance, so that the particles behave as hard spheres, and a long-range cutoff R. This modified system displays a phase transition between phases of positive and negative specific heat when the system energy is varied. We want to discuss this mechanical system using HB thermodynamics; see also [5] for a different analysis of a two-body system with HB thermodynamics. Therefore we compute the probability density (10) and the Fisher information for this system, and discuss the ability of the latter to describe phase transitions.

Using (12), and then (10) specialised to this system, we obtain integral expressions for the relevant quantities. To compute the integral appearing in the probability density it is useful to perform a change of variables in which s is the inter-particle vector. By using this change of variables and Fubini's theorem, the integral can be written as an iterated integral over the position x of one particle and over s.

The integral in x is infinite unless we assume that the particle x is confined in a bounded region of volume V. Under this assumption the x-integration simply contributes a factor V. Since the integrand is a radial function of s, the remaining integral in s is finite. The probability density (10) thus factorizes into the product of two densities, showing that the position x and the inter-particle vector s are independent random variables. Therefore we can forget about the x particle and perform all the computations in the s variable.
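The change of variables can be made concrete as follows. We assume, since the document's definitions are not reproduced here, that x = x_1 is the position of one particle, s = x_2 − x_1 is the inter-particle vector in ℝ³, the particle x is confined to a region 𝒱 of volume V, and F is any integrable function of the distance (hypothetical name). The linear map has unit Jacobian, so Fubini's theorem and spherical coordinates in s give

```latex
\begin{aligned}
&(x_1,x_2)\ \mapsto\ (x,s)=(x_1,\;x_2-x_1),\qquad
 \det\begin{pmatrix} I_3 & 0\\ -I_3 & I_3\end{pmatrix}=1,\\
&\int_{x_1\in\mathcal V}\int_{\mathbb R^{3}} F(|x_2-x_1|)\,dx_2\,dx_1
 =\int_{\mathcal V}dx\int_{\mathbb R^{3}}F(|s|)\,ds
 =4\pi V\int_{0}^{\infty}F(r)\,r^{2}\,dr .
\end{aligned}
```

The centre-of-mass choice x = (x_1 + x_2)/2 would also have unit Jacobian. With the cutoffs introduced below, the r-integral runs only over the admissible distances.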

If we let the energy tend to zero, so that the inter-particle distance s can be arbitrarily large, Z in (40), and hence the microcanonical entropy of the system, becomes infinite. The HB, or volume, entropy S is also given by an integral in s, and it is easy to see that this integral is infinite if we let the inter-particle distance s go to zero. So the entropy S is infinite when s tends to zero or to infinity, while Z (and thus the microcanonical entropy) is infinite only in the latter case.
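The different short-distance behaviour of the two entropies can be read off the radial integrands. This sketch makes the following assumptions: u(s) = −k/|s| with k > 0, the volume entropy involves (e − u)³ (from integrating out six momentum components), and the microcanonical one involves its e-derivative 3(e − u)². With r = |s| and the factor r² from spherical coordinates:

```latex
\begin{aligned}
r\to 0:&\quad (e+k/r)^{3}\,r^{2}=\frac{(er+k)^{3}}{r}\sim\frac{k^{3}}{r}\ \ (\text{not integrable}),
  \qquad 3(e+k/r)^{2}\,r^{2}=3(er+k)^{2}\to 3k^{2}\ \ (\text{integrable}),\\
e=0,\ r\to\infty:&\quad (k/r)^{3}\,r^{2}=\frac{k^{3}}{r},\qquad 3(k/r)^{2}\,r^{2}=3k^{2}\ \ (\text{neither integrable}).
\end{aligned}
```

Under these assumptions the volume integral diverges logarithmically at short distances, while both integrals diverge at large distances.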

Therefore it is necessary to assume, as in [25], that there are two cutoffs a < R, usually with a much smaller than R, so that the two-body system is confined in a bounded region of space. Moreover, the system displays two energy scales, given by the values of the potential energy at the two cutoffs. Also, it is stipulated in [25] that, if the energy lies between these two scales, the integration in the s variable is performed between the extrema a and the turning point at which the potential energy equals e. For energies above the upper scale, the integration is performed between the fixed extrema a and R. Due to the presence of the cutoff a, the system displays a negative specific heat phase (see again [25]).

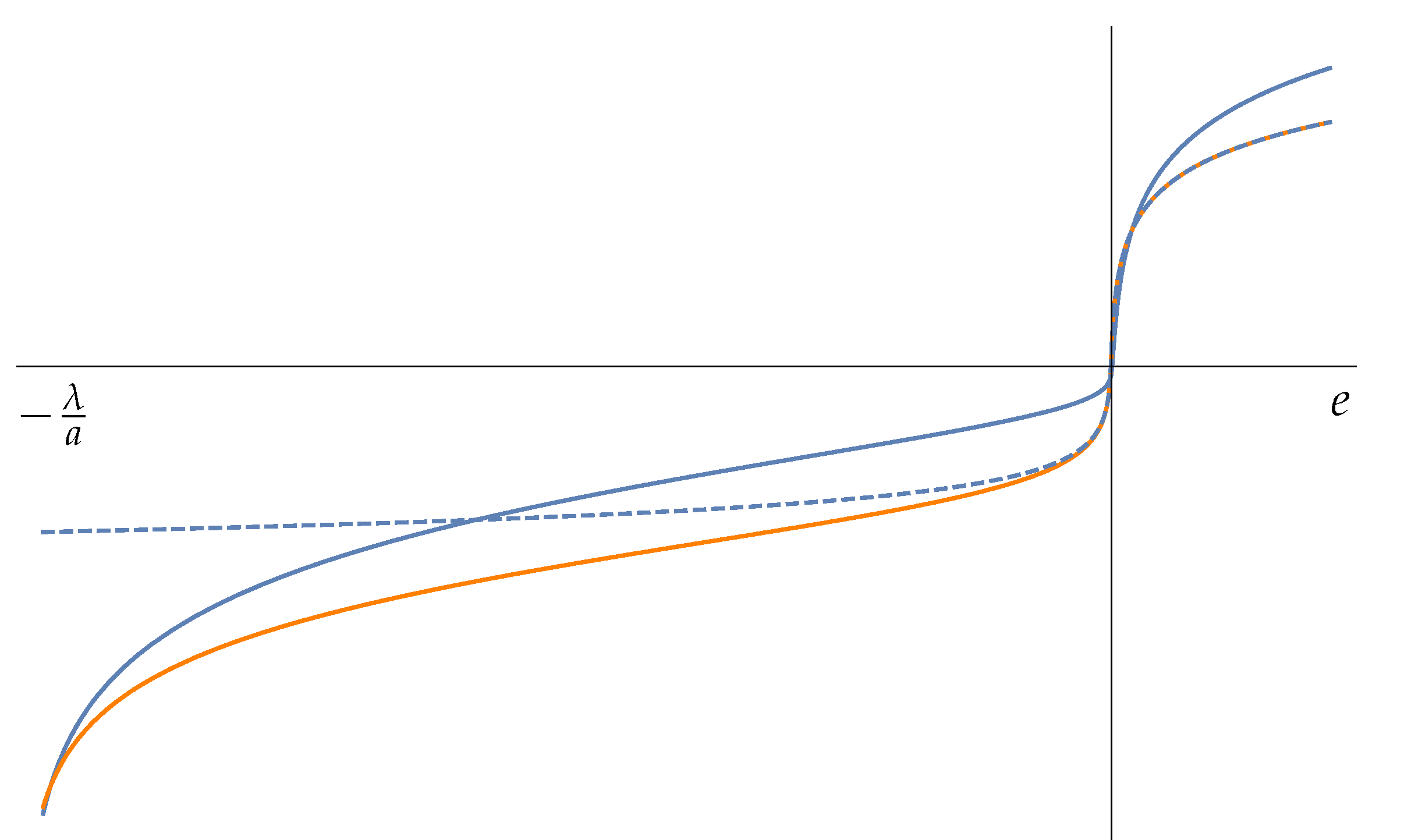

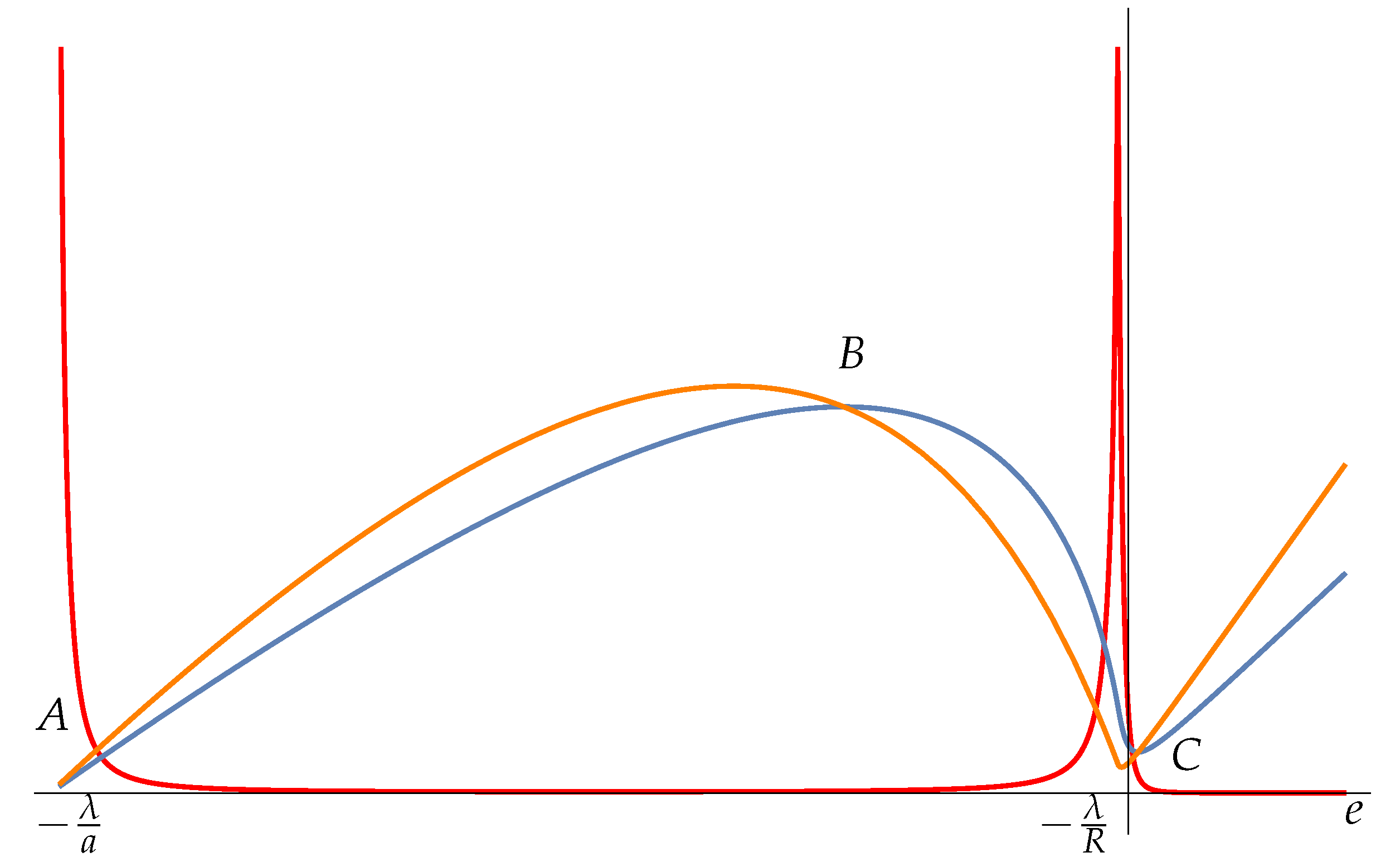

We can now compute the temperature T, the microcanonical temperature and the pressure P using (21) for our system in the two energy ranges. We then join the resulting curves, which match continuously at the energy separating the two ranges. See Appendix 1 for the computations. We also compute the Fisher information from (33). See Figure 1 for the plots of T, of the microcanonical temperature and of the Fisher information.

The temperature T (blue curve of Figure 1) presents a gentle maximum at a negative energy (point B) and a sharp minimum (point C) at a positive energy. At the lowest admissible energy, where the potential energy attains its minimum at the cutoff a, the temperature is zero, as in the previous example 1. The temperature curve of this simple mechanical system displays the same features as more complex and realistic models of gravitating many-particle systems. A phase (A to B) of positive specific heat is followed by a phase (B to C) of negative specific heat and again by a phase of positive specific heat (after C). The microcanonical temperature (orange curve) behaves similarly to T. The points where the two temperature curves cross each other are the points where the specific heat diverges; see (24). See [25] for a physical interpretation of these phases.
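The link between the crossing points and the divergence of the specific heat follows from a short computation. We assume (k_B = 1) that the HB entropy is S = log Φ, so that T = Φ/Φ′, and that the microcanonical entropy is log Φ′, so that its temperature is T_m = Φ′/Φ″ (T_m is our notation):

```latex
\frac{dT}{de}=\frac{\Phi'^{2}-\Phi\,\Phi''}{\Phi'^{2}}
            =1-\frac{\Phi}{\Phi'}\cdot\frac{\Phi''}{\Phi'}
            =1-\frac{T}{T_m},
\qquad
C=\Bigl(\frac{dT}{de}\Bigr)^{-1}=\frac{1}{1-T/T_m}.
```

Under these assumptions C diverges exactly where T = T_m and is negative wherever T > T_m.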

We see that the Fisher information

(red curve of

Figure 1) diverges for

and has a peak for

. The divergence in

is located at the minimum of the potential energy (due to the cutoff at

) and it is similar to the one found in the previous example 1. Here we are concerned with the assessment of the ability of Fisher information to locate the phase transitions at B and C. It seems that the phase transition in B is not detected, and the one in C is not exactly located. This is due to the presence of the cutoffs

(which are essential for the existence of the negative specific heat phase). As far as the phase transition in

B is concerned, the shape of the curve of temperature

T does not change with

a and in the limit

entropy

S becomes infinite. On the other hand, the microcanonical entropy

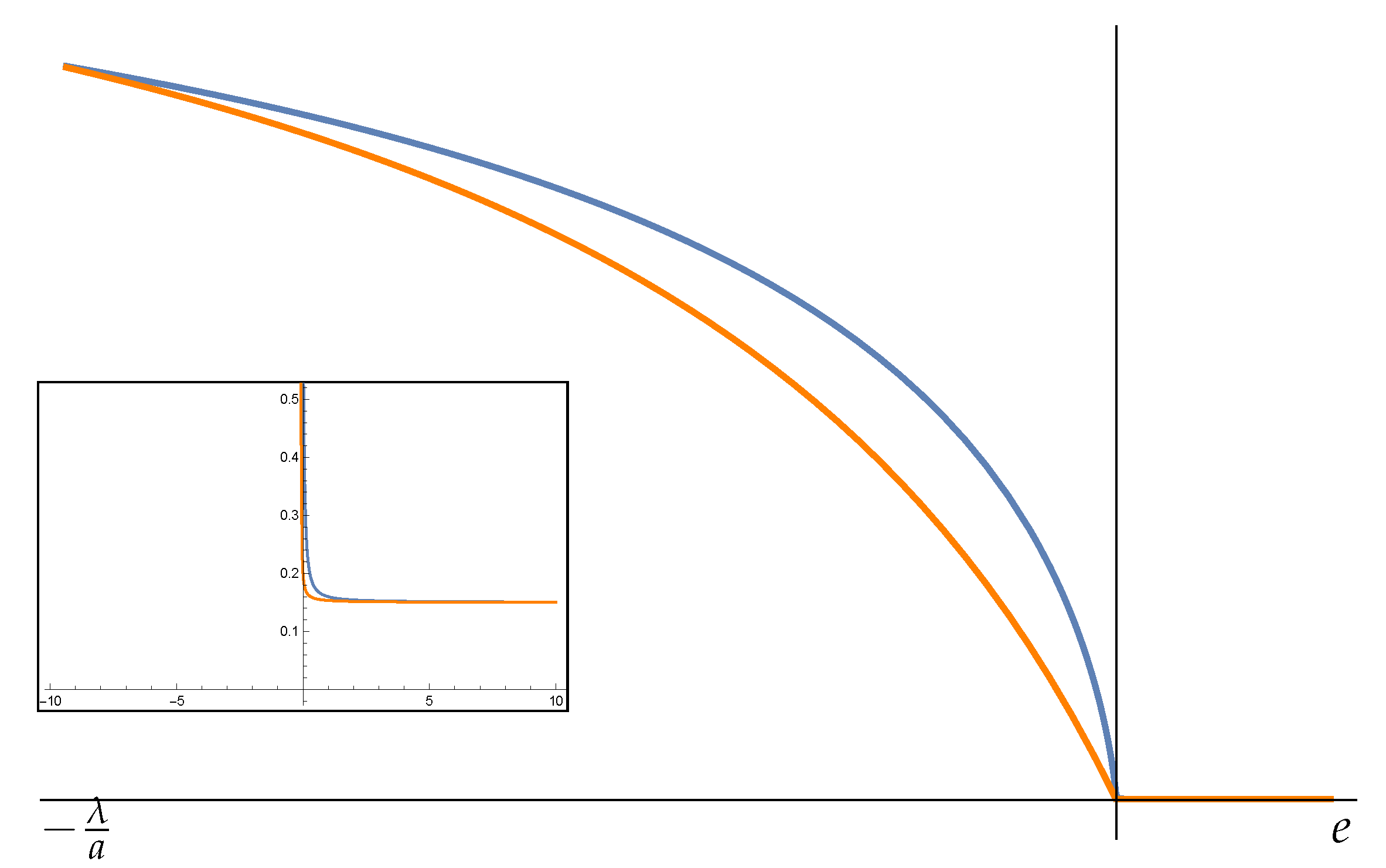

is defined for

and the curve of the microcanonical temperature computed for

(dashed line of

Figure 2) does not show the phase A to B of positive specific heat. Therefore, for

, the phase transition in B is removed in the microcanonical description of the system, while the remaining one is detected by the divergence of Fisher information.

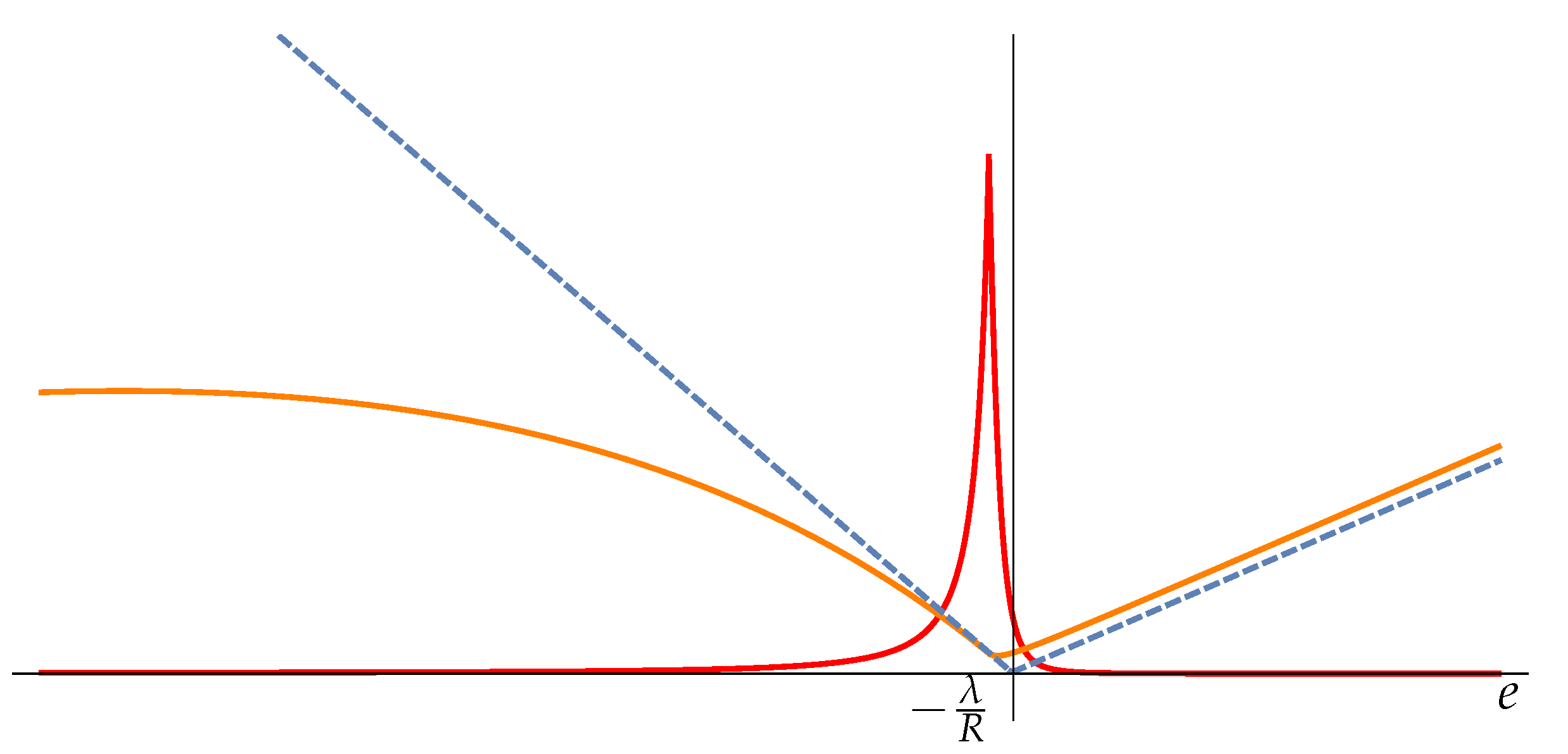

We will show that if the bounds are removed, letting a → 0 and R → ∞, the minimum C and the peak of the Fisher information coincide at e = 0. In fact, the energy corresponding to point C can be determined from the condition dT/de = 0. By computing this derivative at leading order in the appropriate small parameter, we deduce the location of the minimum C of Figure 1. In the same way we obtain the location and the height of the peak of the Fisher information. In the limit, both locations tend to 0. So we can say that the divergence of the Fisher information correctly detects the phase transition from negative to positive specific heat located at e = 0.