1. Introduction

Technological evolution has given rise to an innovative digital ecosystem in which virtual reality (VR), the metaverse (MV), digital twins (DT), and virtual events are profoundly transforming various industrial and social sectors. This article explores the intersection of these technologies, highlighting their applications, benefits, and challenges. VR enables immersive experiences that improve learning, entertainment, and remote collaboration. The MV, for its part, is emerging as a shared digital space in which human interactions are amplified through persistent three-dimensional environments. DTs, which are virtual representations of physical objects or systems, optimize processes and facilitate real-time, data-driven decision-making. Finally, virtual events open new possibilities in marketing, education, and remote work, offering attractive and globally accessible experiences.

The adoption and development of these technologies have acquired unprecedented relevance in contemporary society, redefining the way people interact with their environment, learn, and work. As these tools evolve, their implications for the economy, culture, and communication become increasingly significant. To better understand their impact, it is necessary to analyze their historical background and their development over time. Virtual reality has evolved since the 1960s into modern applications in video games, training simulations, and collaborative environments. Similarly, the concept of the metaverse, influenced by science fiction literature and the evolution of digital platforms, has given rise to an immersive ecosystem with virtual economies and advanced interaction models. It has been implemented in areas such as tourism [1], hospitality [2], fashion [3], and virtual museums [4], where it is increasing business revenue.

Virtual events, in turn, became especially relevant with the COVID-19 pandemic, when companies, educational institutions, and conference organizers adopted digital solutions to maintain connectivity on a global scale. Technologies such as live streaming, interactive 3D spaces, and artificial intelligence have improved the user experience in virtual environments, enabling open participation without geographical barriers. In Industry 4.0, DTs are applied to improve manufacturing efficiency, predictive maintenance, and the optimization of complex systems through virtual models that faithfully replicate their physical counterparts [5–8]. These digital representations are expected to be particularly effective in sectors such as health, engineering, and urban infrastructure management, where simulation and predictive analytics can reduce costs and improve decision-making [9–12].

In education, DTs are used to create risk-free environments for experimentation in science, engineering, and medicine [13,14]. They act as virtual models that can predict student performance while adapting academic content [15,16]; as replicas of machines and industrial processes that provide training in realistic settings within practical experimentation laboratories [17,18]; and as 3D recreations of historical environments for interactive exploration [19,20]. Beyond education, DTs play a key role in optimizing performance and operational efficiency in various industries. Among their many applications, their use in the health sector stands out [21], where they help optimize hospital processes, forecast demand for services, and manage resources. They also facilitate the planning and design of hospital infrastructure, improving the construction and renovation of facilities, and they allow patient status to be monitored and treatments to be personalized. In medical training, interactive simulations based on DTs help reduce errors in surgery and emergency care. Institutions such as Mayo Clinic, Cleveland Clinic, Johns Hopkins Medicine, and Partners HealthCare have integrated this technology to optimize their services. For example, Philips Healthcare’s digital twin functions as an accurate virtual replica of an organ or biological system, created from advanced medical imaging (MRI, CT, ultrasound), genetic testing, biometric sensors, and medical records. It allows health professionals to run simulations and predictions in order to prescribe more precise treatments more quickly. In addition, it streamlines predictive analysis of the patient’s response to different procedures and therapies, improving medical planning and reducing clinical risks. Dassault Systèmes’ Living Heart Project is an advanced application of digital twins in cardiology, in which highly detailed virtual models of the human heart are created to simulate and optimize treatments, evaluate the efficiency of medical devices, and plan surgeries without direct intervention in patients. It aims to improve the understanding and treatment of heart disease, providing precision tools for medical practice and innovation in cardiovascular health.

DTs are becoming fundamental in the transportation industry [22,23]. In the railway sector, Deutsche Bahn has implemented this technology to optimize the operation, maintenance, and management of its rail network, improving operational efficiency, safety, infrastructure planning, and transport sustainability. In aviation, Boeing uses DTs to simulate aircraft behavior, optimize design, improve safety and operational efficiency, and reduce costs [24]. Their application in the 787 Dreamliner series is notable: there, the technology has transformed the design, maintenance, and operation of the aircraft, promoting more efficient and sustainable aviation, and its impact is expected to grow across the aerospace industry. In the aerospace sector, Rolls-Royce uses DTs for real-time performance monitoring of engines such as the Trent XWB, which powers the Airbus A350, optimizing their life cycle and predictive maintenance [25]. In the automotive industry, BMW has integrated DTs into its Regensburg plant (see Figure 1), using assembly simulation to reduce production times [26]. Mercedes-Benz applies this technology at its Sindelfingen factory through the Internet of Things (IoT), AI, and big data, improving efficiency and sustainability, especially in the production of electric vehicles such as the EQS. Ford uses it to optimize the manufacture of batteries and electrical systems for models such as the Mustang Mach-E and the F-150 Lightning, ensuring quality and efficiency [27].

Tesla has developed a digital twin that virtually replicates each vehicle in real time, enabling performance monitoring, predictive maintenance, and remote software updates. This technology has become key to the evolution of its autonomous driving system, whose Autopilot performance is optimized through advanced simulations [28–30]. Siemens has implemented a digital twin in its Amberg factory in Germany, optimizing the production of industrial control systems through advanced automation, which improves efficiency and reduces costs. General Electric applies this technology in multiple sectors, from manufacturing to energy and healthcare. In renewable energy, DTs enable close monitoring of wind turbine performance, analyzing data in real time to predict failures and improve operational efficiency. Unilever has integrated a digital twin into its production and supply chain, notably at its Hamburg plant, improving operational efficiency, reducing costs, and promoting sustainability through energy optimization and waste reduction [31,32].

In urban planning, DTs are used to simulate urban infrastructure in order to monitor and improve mobility, traffic, and energy efficiency, contributing to the development of sustainable cities [33]. Singapore has developed a digital twin as part of its Smart Nation (SN) strategy, using advanced technologies to improve these areas and, thus, quality of life [34]. In Dubai, the Smart Dubai initiative has implemented a digital twin based on IoT, big data, and artificial intelligence that allows infrastructure to be simulated and optimized, with benefits similar to those of Singapore’s SN strategy. Shenzhen has developed a detailed virtual replica of the city that integrates real-time data from IoT sensors and monitoring systems to improve traffic, energy, public service, and security management, establishing itself as a benchmark among smart cities [35].

The MV has transformed event organization, with a notable impact on museums and art galleries. Sotheby’s Virtual Gallery is a digital platform that uses advanced technology to exhibit and sell art, antiques, and collectibles in an immersive virtual environment. The gallery uses high-resolution 3D renderings to represent works with great precision, allowing users to explore pieces in three dimensions and, in some cases, in VR. Its catalog, accessible worldwide over the internet, includes modern and contemporary art, works by Old Masters, non-fungible tokens (NFTs), jewelry, and other historical objects. Users can interact with the works, obtain detailed information, and make acquisitions directly or in online auctions. Sotheby’s also organizes temporary exhibitions and exclusive events, consolidating its role in the digitalization of the art market and democratizing access through technology. Art Basel Miami Beach, one of the most prestigious contemporary art fairs in the Americas, has recently incorporated virtual editions in response to recent global challenges. In 2020, it launched the Online Viewing Rooms, enabling the digital exhibition of works with the participation of 282 galleries from 35 countries and more than 230,000 visitors. Subsequently, in December of that year, it presented OVR: Miami Beach, with 255 galleries from 30 countries and nearly 2,500 works of art, integrating new features such as interactive videos and digital exploration tools. These initiatives consolidated a hybrid model of art dissemination and commerce that complements in-person fairs with digital platforms [36,37].

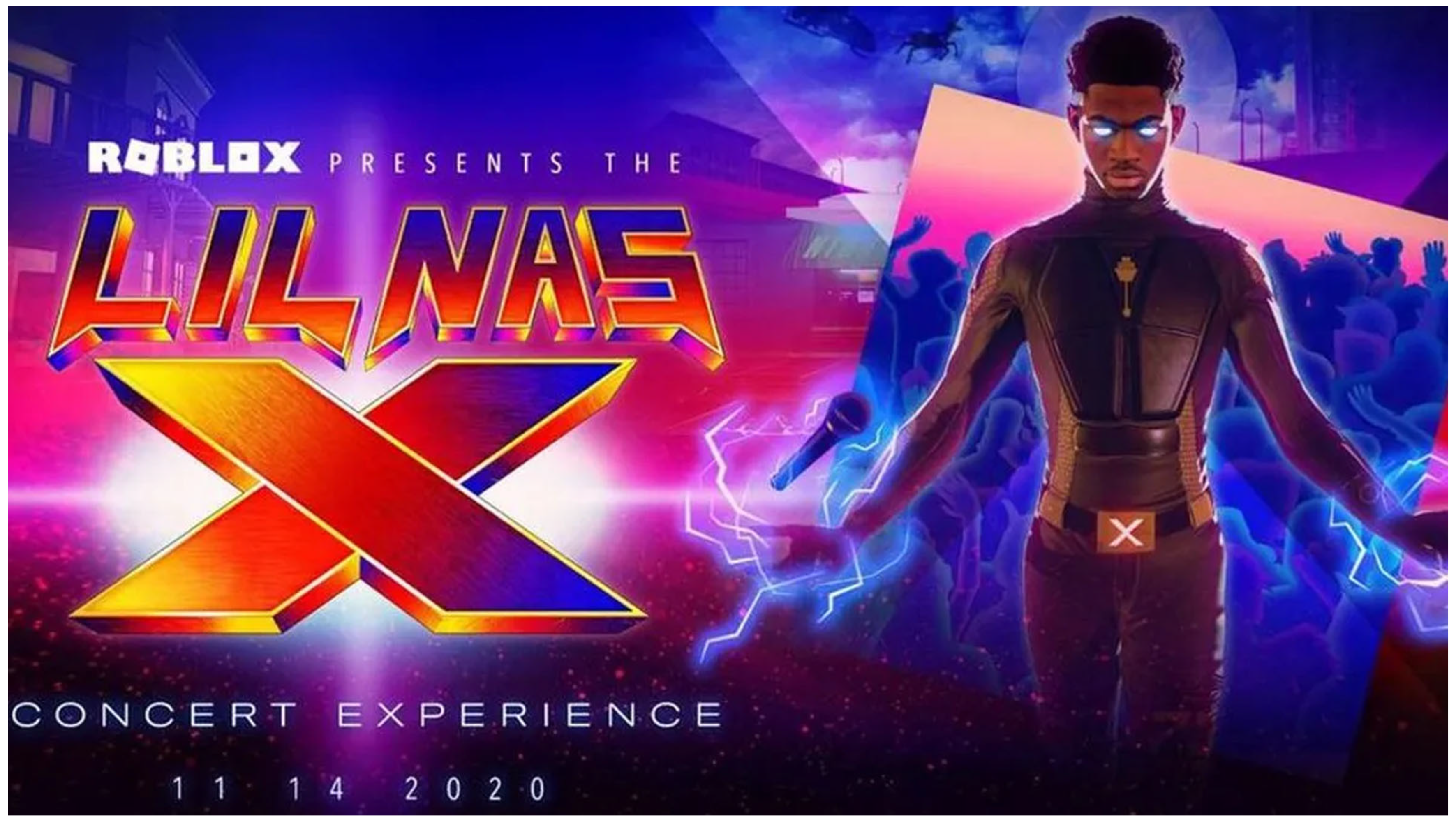

In music, the MV has transformed the concert experience through interactive events in video games [38,39]. The Fortnite Concert Series has redefined the connection between artists and audiences through immersive shows and dynamic virtual environments. The first major event, with Marshmello in 2019, gathered more than 10 million players; it was followed by Travis Scott (2020), with 27 million attendees, and Ariana Grande (2021), with interactive stages. Other artists, such as J Balvin, Eminem, and The Kid LAROI, have also participated, consolidating this format. Likewise, Roblox Virtual Concerts have extended the music industry into digital environments, offering interactive experiences with missions and collectible in-game items. Lil Nas X started this trend in 2020 (see Figure 2), with 33 million views, followed by Twenty One Pilots, Zara Larsson, and KSI in 2021. A few years later, David Guetta, The Chainsmokers, and Charli XCX helped establish these concerts as a new form of artistic expression and mass interaction in virtual spaces [40,41].

Sandbox Game Jams are video game development events in which programmers, designers, and other creatives collaborate to create games within a limited time frame (48–72 hours) on open development platforms such as Roblox, Minecraft, The Sandbox, and Garry’s Mod. Their goal is to encourage experimentation and innovation in game design, allowing participants to explore new mechanics and more creative, unrestricted concepts. These events usually revolve around specific themes and promote interdisciplinary teamwork. A recent example is the Discovery Game Jam, announced by The Sandbox on March 14, 2025, on its GMAE Show program, with the aim of further driving creativity in the MV ecosystem [42]. The Metaverse Summit is an international conference that brings together experts, companies, and developers to analyze the future of the MV. It addresses key topics such as VR, augmented reality (AR), blockchain, NFTs, and AI, exploring technological trends and opportunities. It has been held in cities such as Paris and New York, attracting thousands of participants and establishing itself as an essential event for the digital industry [43,44]. In the realm of digital assets, NFT.NYC is an important annual conference in the NFT sector that brings together artists, collectors, and industry leaders to discuss advances in digital art, blockchain, and commercial applications. The 2025 edition of NFT.NYC is scheduled for June 25–27 in Times Square, New York, and addresses topics such as art, AI, video games, legal aspects, and the digital community. Previous editions have featured influential figures from companies such as T-Mobile, Mastercard, and Lacoste, demonstrating the growing impact of NFTs across industries [45].

Zepeto, one of the most dynamic virtual social interaction platforms, organizes festivals and events, such as the Zepeto World Festival, to enhance the user experience. Highlights include the Zepetomoji Festival, held at Zepeto University, and the Zepeto School Festival 2024, which featured contests, challenges, avatar item giveaways, and live broadcasts with influencers. These festivals reflect Zepeto’s commitment to creating immersive experiences for its global community [46]. Animal Crossing: New Horizons, released by Nintendo in 2020, is beginning to be considered a metaverse because of its shared virtual world and social interaction capabilities: during the pandemic, it allowed players to create customized islands, fostering social connection. Although it shares certain characteristics with the MV, it is not a complete MV, as it lacks integration with multiple platforms and digital experiences [47,48]. In Highrise: Virtual Metaverse, events are fundamental to the user experience; users can participate in activities such as fashion contests, debates, karaoke nights, and competitions in labyrinthine spaces. These events, organized by both the developers and the users themselves, stimulate rich and creative social interaction within the platform [49,50]. Meta has promoted virtual events through Horizon World Events, a VR platform where users explore, interact, and create in a shared environment. Notable events include educational marathons, immersive concerts (such as those by Sabrina Carpenter and The Kid LAROI), and themed experiences such as "3D Ocean of Light - Dolphins in VR" and "Space Explorers." Additionally, Horizon Worlds has integrated "Venues," a space dedicated to broadcasting live events such as concerts and sports, expanding entertainment options [51,52].

BKOOL, a virtual cycling simulator, offers immersive training experiences and has hosted notable events such as the race between professional cyclists Chris Froome and Alberto Contador during the Virtual Giro d’Italia 2024 [53,54]. The Brooklyn Nets’ Netaverse, launched in 2022, uses digital twin and virtual reality technologies to create immersive experiences during NBA games, allowing fans to watch games from new perspectives and access real-time statistics [55,56]. Formula 1 has expanded into the MV through various initiatives that integrate NFTs, immersive experiences, virtual simulations, and video games. The most relevant include F1 Delta Time (2019–2022), the F1 Virtual Grand Prix (2020), the collaboration with Roblox on F1® Arcade and Virtual Paddock, and the Monaco Grand Prix in the MV (2023). These events reflect F1’s entry into the digital world and its commitment to innovation in the virtual realm. The AIXR XR Awards, formerly known as the Virtual Reality Awards, celebrate the most significant achievements in extended reality (XR), which encompasses VR, AR, and mixed reality (MR). These annual awards recognize technological innovations, immersive experiences, and applications that transform human interaction with technology.

Microsoft, for its part, has developed Microsoft Mesh, an MR collaboration platform that enables interaction in digital environments through avatars or holograms. It runs on devices such as HoloLens 2 and smartphones, facilitating immersive meetings, collaborative design, technical training, and the creation of virtual events. Built on Microsoft Azure, Mesh uses AI and spatial computing to create realistic and secure experiences, positioning itself as a key collaboration tool in the MV, with future integration into services such as Microsoft Teams [57]. These platforms reflect the growing diversification of immersive experiences in the MV, which offers user interaction, entertainment, and learning in advanced virtual environments [58,59]. Throughout this article, case studies are analyzed that illustrate the use of these technologies and how leading companies and government agencies have adopted them to maximize their potential. From a socio-economic perspective, these technologies present both challenges and opportunities. Although the MV and virtual events democratize access to digital experiences, they also raise questions about security, data privacy, and their impact on employment. The creation of digital economies within the MV could redefine business models and introduce new forms of monetization, although it also carries risks related to regulation and economic sustainability.

This study focuses on Alcoi, an industrial city with a textile tradition that began in the 19th century, driven by hydraulic power. The city’s modernization accelerated with the arrival of steam power, creating a strong economic and social fabric. In 1828, the first industrial studies, derived from the Royal Cloth Factory, were established, and in the mid-19th century, the Elementary Industrial School was founded, financed with public and private funds. The first chemical expert qualification was awarded in 1869, and the first mechanical expert qualification in 1873. At the beginning of the 20th century, the Higher School of Industry was founded, offering specializations in various industrial branches. In 1972, the School became a campus of the Universitat Politècnica de València (UPV), expanding its educational offerings, which currently include seven degrees: Industrial Design, Computer Science, Business Administration, Electricity, Chemistry, Mechanics, and Robotics.

To commemorate the 50th anniversary of the integration of the University School of Industrial Technical Engineering into the UPV, a comprehensive design project was carried out. First, a DT of the UPV Alcoi campus was designed. Next, based on a set of photographs, the Ferrándiz and Carbonell buildings, which form the central part of the campus, were modeled in 3D using 3ds Max 2025. The brand image was then created by designing the logo. A series of canvases bearing the commemorative image were modeled in 3D and displayed on the facades of the Ferrándiz and Carbonell buildings, like a stage, just as they are installed in the real urban space. User interactions with the scene, such as triggering animations and videos, were configured with the Unity game engine and implemented in the Spatial.io Unity template, so that the digital twin could be deployed in the metaverse through the Spatial.io web portal. Spatial is a free application with its own social interface, in which users can customize their avatars and communicate with other users by text or voice chat.

Overall, the convergence of VR, the MV, DTs, and virtual events is causing a paradigm shift, especially in the way we interact with the digital world. Its impact will continue to expand in the coming years, revealing new opportunities for innovation and global connectivity. This article is structured as follows. Section 2 describes the development of the proposed digital twin. Section 3 provides information on the usability of the interface and on aspects of Unity and Spatial. Finally, Sections 4 and 5 present the discussion and concluding remarks.

2. Materials and Methods

2.1. Methodology for Designing Digital Twins in the Metaverse for Events.

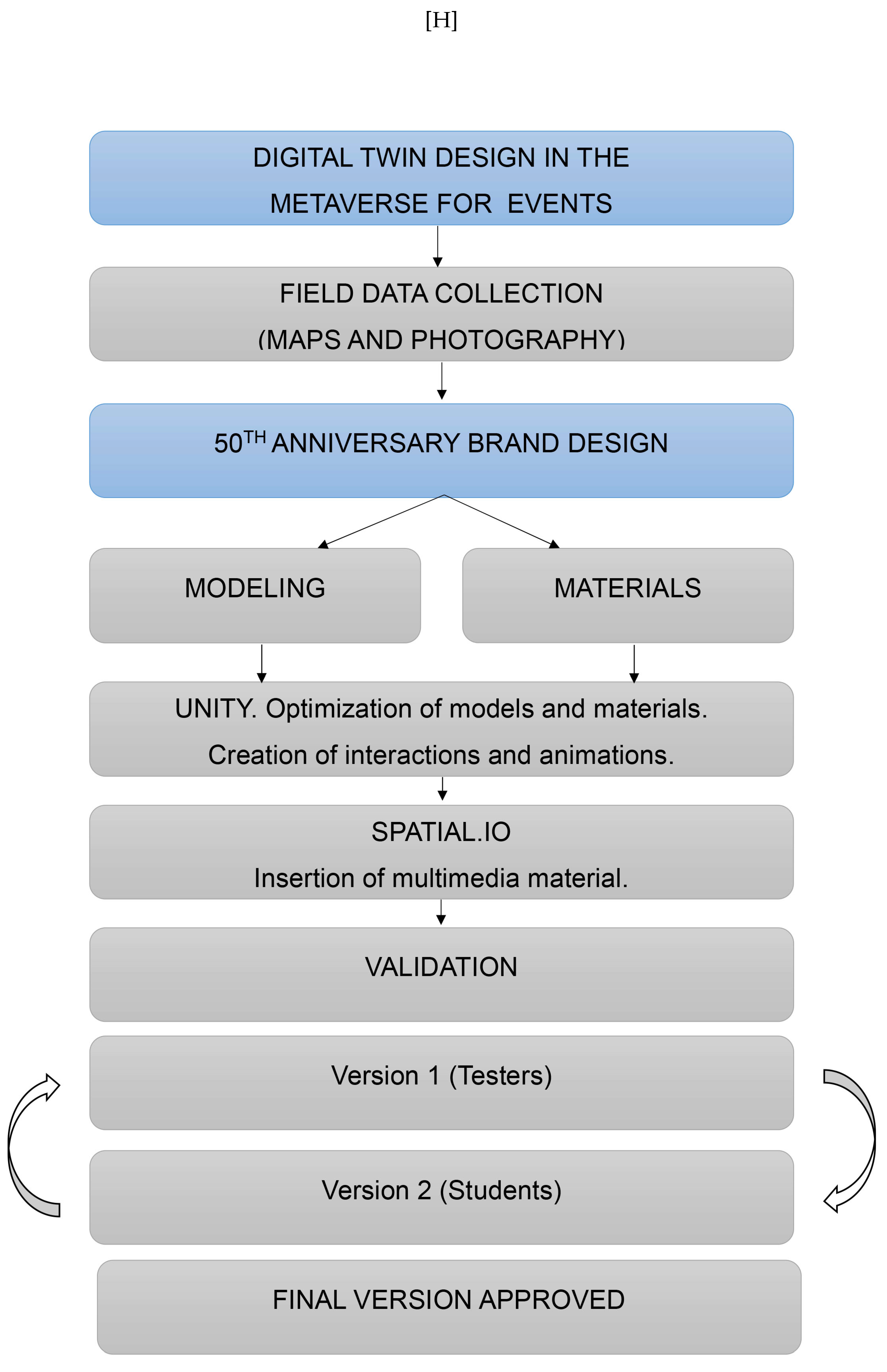

The methodology used to create the DT of this virtual event is illustrated in Figure 3. In the diagram, the first step is the interpretation of the CAD plans and the photography of the facades and decorative elements. The plans provided by the campus maintenance service contained only floor-plan dimensions. No elevation drawings of the facades of the Ferrándiz and Carbonell buildings appear to exist, so photographs taken beforehand had to be used as a reference. About 300 photographs were collected to capture all the important details of the buildings, as well as the vegetation, the background images, and all the textures. The next step was to define the brand image for the 50th anniversary of the union between the University School of Industrial Technical Engineering and the UPV. Once the logo was defined, the posters were designed, and a commemorative video was edited that includes an animated 3D morphing representing the transformation of the UPV logo into the one proposed for the 50th anniversary. This entire process is detailed in Section 2.2. Once the brand image had been defined, the 3D modeling phase of the Ferrándiz and Carbonell buildings began, using 3ds Max 2025. The plans were imported, and the building footprints were extruded to their height using the plan measurements as a reference. Photomontages of the facades had to be made with Adobe Photoshop version 25 to define the details along the height of the buildings (location of reliefs, windows, doors, etc.). Subsequently, auxiliary elements such as benches, litter bins, and trees were modeled at real scale, based on field photographs. Once the modeling was finished, the images were processed to obtain all the textures. This step is explained in detail in Section 2.3.

For the implementation of the DT in the MV, we used the free tool Spatial.io, which, as mentioned above, is a virtual reality and augmented reality platform that allows users to create and explore virtual 3D spaces. It is mainly used for remote collaboration, the design of immersive experiences, and exhibitions in the metaverse. The platform provides templates, based on the Unity game engine, for implementing 3D content and animations. Accordingly, the next step was to export the 3D model to Unity. Within Unity, the 3D models and textures were optimized so that navigation would be fluid for future users, and the animations and interactions of the scenario were defined. This is explained in detail in Section 2.4. The Unity template was then exported to Spatial.io, where all the multimedia material, namely videos and external links, was defined; this process is described in Section 2.5. Once the digital twin was defined on the Spatial platform, it was validated on campus. In a first phase, version 1 was evaluated by members of the Graphic Expression department acting as testers. Their suggestions for improvement were incorporated into version 2, which was presented to 265 students of the Bachelor’s Degree in Industrial Design enrolled in the subjects Computer Aided Design, Presentation Techniques, and Simulation. The students tested the virtual scenario in pairs and evaluated it with an assessment test. Finally, the recorded data were used to improve the final version of the virtual event, which was presented at the gala for the 50th anniversary of the incorporation of the University School of Industrial Technical Engineering into the UPV, held on April 7, 2022. A space equipped with four Meta Quest 3 headsets was set up so that gala attendees could try the digital twin and the personalization of the event.

The gala also included the screening of a documentary video about the foundation of the Industrial School and the 3D animation of the morphing between the current UPV logo and the new one designed for the commemorative event. The following sections describe each of these phases in more detail, to clarify the process of creating the digital twin of the Ferrándiz and Carbonell buildings for the celebration of the 50th anniversary of the Alcoi campus at the UPV (Figure 4).
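As a rough illustration of why texture optimization matters for fluid navigation, the following Python sketch estimates the memory of an uncompressed texture. It assumes 8-bit RGBA pixels (4 bytes each) and a full mipmap chain; the function name `texture_bytes` is hypothetical, the texture resolutions used in this project are not reported here, and Unity normally applies compressed formats, so real figures would be lower.

```python
def texture_bytes(width: int, height: int, bytes_per_pixel: int = 4,
                  mipmaps: bool = True) -> int:
    """Approximate memory of an uncompressed texture, optionally with a full mip chain.

    Hypothetical helper: assumes uncompressed pixels of `bytes_per_pixel` bytes.
    """
    total = 0
    w, h = width, height
    while True:
        total += w * h * bytes_per_pixel
        if not mipmaps or (w == 1 and h == 1):
            break
        # Each mip level halves both dimensions, down to 1 x 1.
        w, h = max(1, w // 2), max(1, h // 2)
    return total


if __name__ == "__main__":
    for size in (4096, 2048, 1024):
        mib = texture_bytes(size, size) / 2**20
        print(f"{size} x {size} RGBA8 with mipmaps: {mib:.1f} MiB")
```

Under these assumptions, a 2048 × 2048 texture occupies about 21 MiB with mipmaps, and halving its resolution reduces that figure roughly by four, which is why downscaling the textures of secondary or distant objects is an effective optimization.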

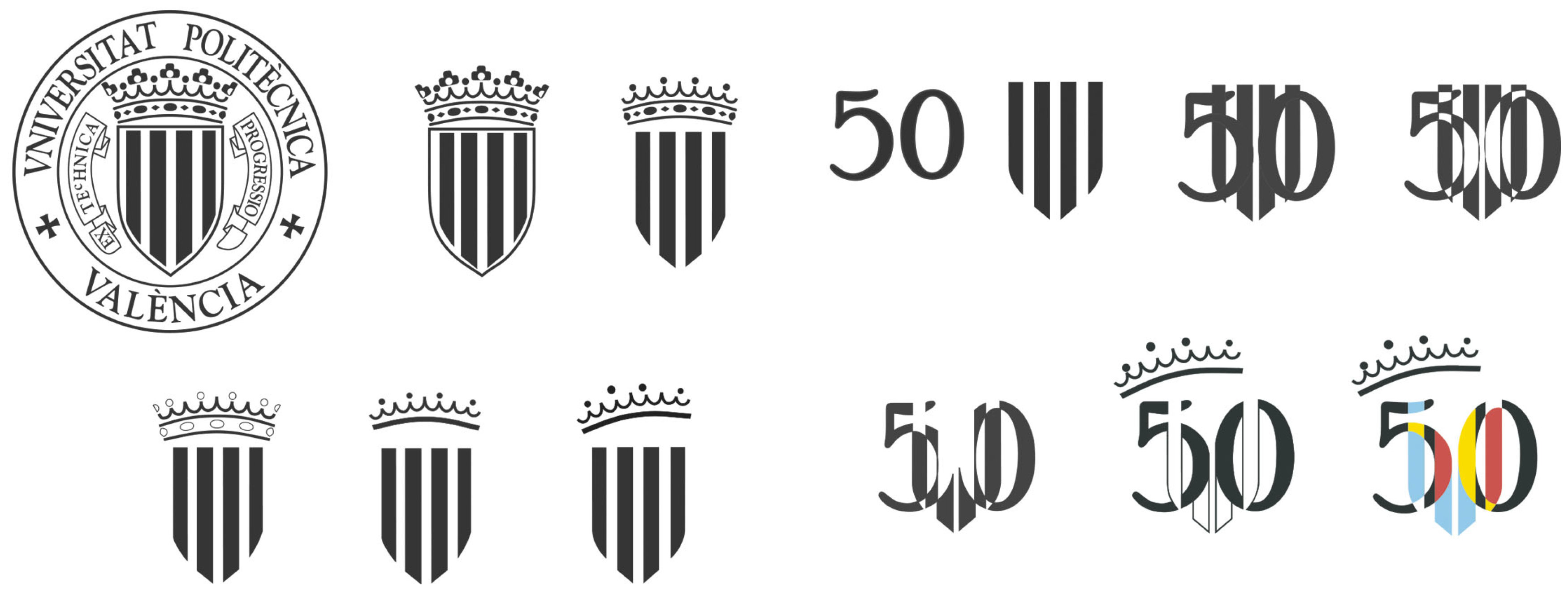

2.2. Study of the event’s graphic design.

As indicated, this project was carried out to celebrate two commemorative events in the recent history of the Alcoi campus of the UPV. The first marks, in 2022, 50 years since its integration into the UPV as a University School of Technical Engineering (Decree 1377/1972 of 10 May; BOE, 7 June 1972), and the second commemorates the 100th anniversary of the laying of the first stone of the Viaduct Building in the last century. The management of the school entrusted the artistic direction of the event to Silvia Sempere Ripoll; this involved creating the commemorative logo and all the related graphic elements that made up the graphic image of the Alcoi campus 1972/2022 UPV event (see Figure 5).

The evolutionary sequence of the graphic design of the commemorative logo is shown below. The starting point was the original UPV coat of arms, bearing the emblematic legend EXTECHNICA PROGRESSIO. All compositional elements, such as outlines, circles, and typography, were removed, while the crown and the four bars were retained. Using the four vertical parallel stripes of the four-barred flag, an interplay of overlapping forms is proposed, to which a selective subtraction is applied to integrate the number 50, the number of years being commemorated (see Figure 6).

The elements that make up the crown were simplified and its shape reinterpreted. First, its symmetry was eliminated to give it a more dynamic appearance; second, the four small spheres that top the crown were animated. The animation is designed so that each sphere moves at a different pace, mimicking the bounce of small balls. All of this was done without losing the essence of the central identifying element of the institution’s official emblem.

For the staging, several urban interventions were carried out in public spaces. One of them involved installing rigid structures to support nine color canvases digitally printed on PVC. The color range of the logo respects the official corporate colors: blue, red, yellow, and black (see Figure 7).
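The staggered bounce of the four crown spheres described above can be sketched as follows. This is a minimal Python illustration, not the animation setup actually used in the project: it assumes each sphere follows an ideal, undamped bounce, whose height over time is a repeating parabola, and the periods in `SPHERE_PERIODS` are hypothetical values chosen only so that the spheres never move in unison.

```python
from typing import List

# Hypothetical periods (seconds per bounce) for the four crown spheres; distinct
# values keep the spheres from ever bouncing in unison.
SPHERE_PERIODS = [0.60, 0.75, 0.90, 1.05]


def bounce_height(t: float, period: float, amplitude: float) -> float:
    """Height of an ideal, undamped bounce: the same parabola repeated every `period`."""
    u = (t / period) % 1.0                   # position within the current bounce, in [0, 1)
    return 4.0 * amplitude * u * (1.0 - u)   # 0 at each impact, `amplitude` at u = 0.5


def crown_frame(t: float, amplitude: float = 1.0) -> List[float]:
    """Heights of the four spheres at time t (one value per sphere)."""
    return [bounce_height(t, p, amplitude) for p in SPHERE_PERIODS]
```

Because the periods differ, the spheres drift in and out of phase, which produces the irregular rhythm described for the logo; a damping factor could be added if the bounce should decay over time.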

These large-format graphic elements were installed on the facades of the Ferrándiz, Carbonell, and Viaducto buildings, which are part of the Alcoi campus of the Polytechnic University of València (see Figure 8).

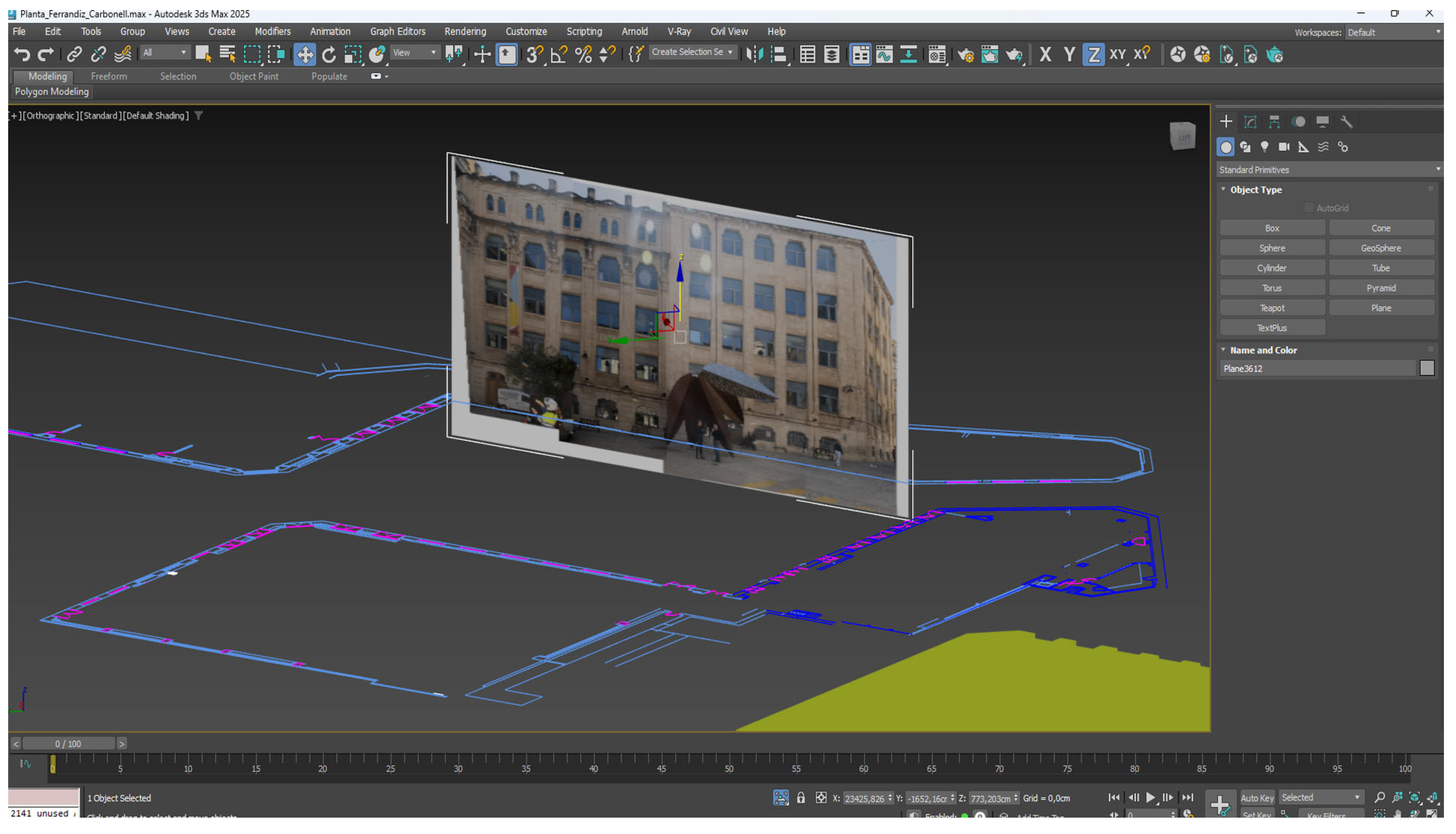

2.3. Modeling and Texturing of the Digital Twin.

The modeling of the DT began with a request to the Alcoi campus maintenance service for the building plans. The service only had floor plans of the buildings, not elevations of the facades. Therefore, the CAD plans were imported into 3ds Max to insert the photomontage of each facade and obtain the true dimensions of the building. The openings (doors and windows) dimensioned on the ground-floor plan provided real reference measurements from which the dimensions of the facades could be extracted (see Figure 9).
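The scaling step described above, in which dimensioned openings on the ground-floor plan provide real measurements for the facade photomontages, can be illustrated with the following Python sketch. It assumes that each photomontage has been rectified (perspective-corrected) so that a single scale applies across the facade plane; the class and function names and the numeric values are hypothetical, not measurements from the campus.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Reference:
    real_width_m: float  # width of an opening taken from the ground-floor CAD plan
    pixel_width: float   # the same opening measured on the rectified facade photo


def metres_per_pixel(refs: List[Reference]) -> float:
    """Average the scale over several reference openings to reduce measurement noise."""
    if not refs:
        raise ValueError("at least one reference opening is required")
    if any(r.pixel_width <= 0 for r in refs):
        raise ValueError("pixel widths must be positive")
    return sum(r.real_width_m / r.pixel_width for r in refs) / len(refs)


def to_metres(pixels: float, scale: float) -> float:
    """Convert a distance measured on the photo into metres."""
    return pixels * scale
```

For example, if a 1.2 m door spans 240 px and a 1.5 m window spans 300 px, the scale is 0.005 m/px, so a facade 2400 px tall measures 12 m. Averaging several openings reduces the effect of small errors when marking their edges on the photograph.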

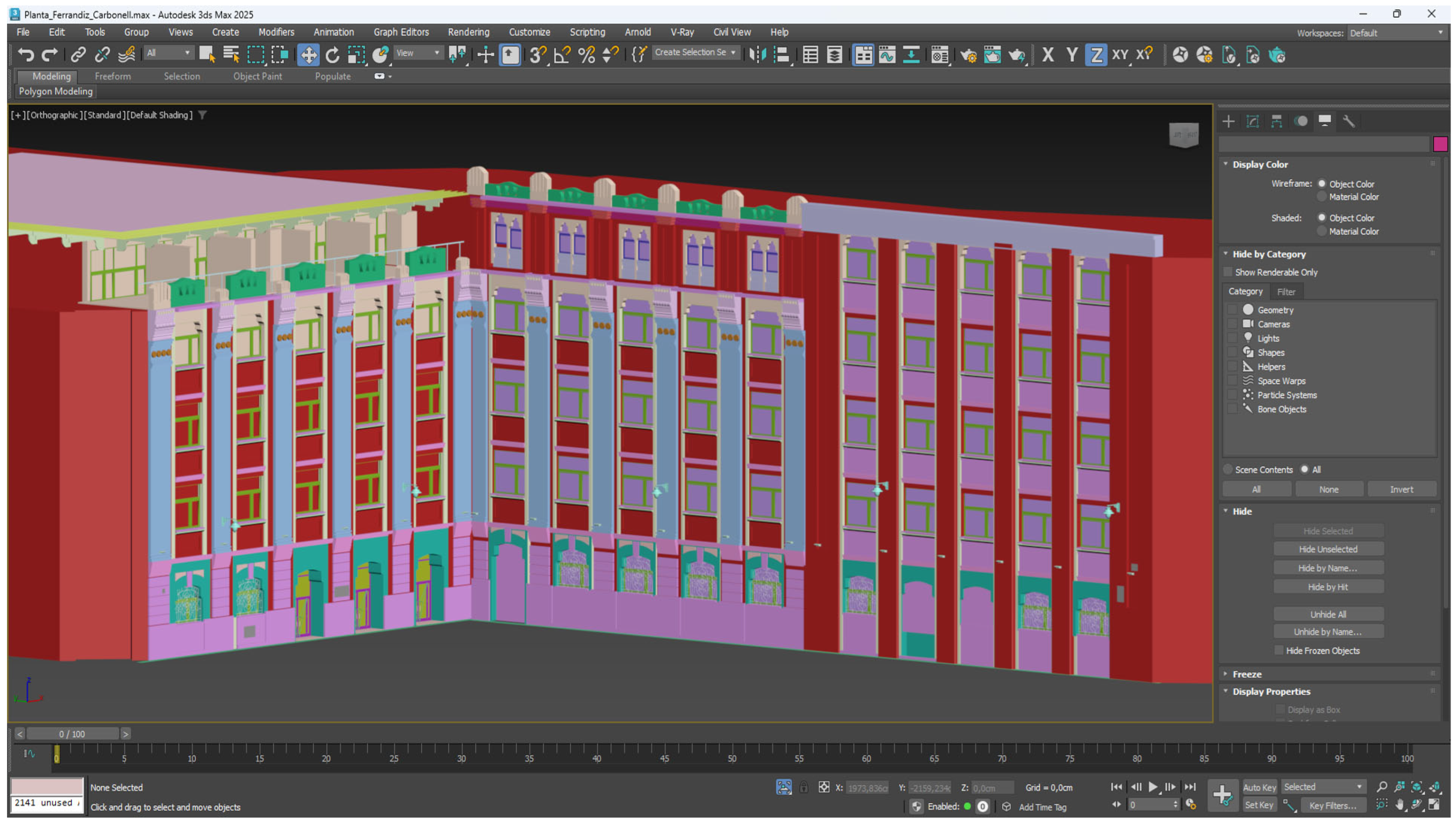

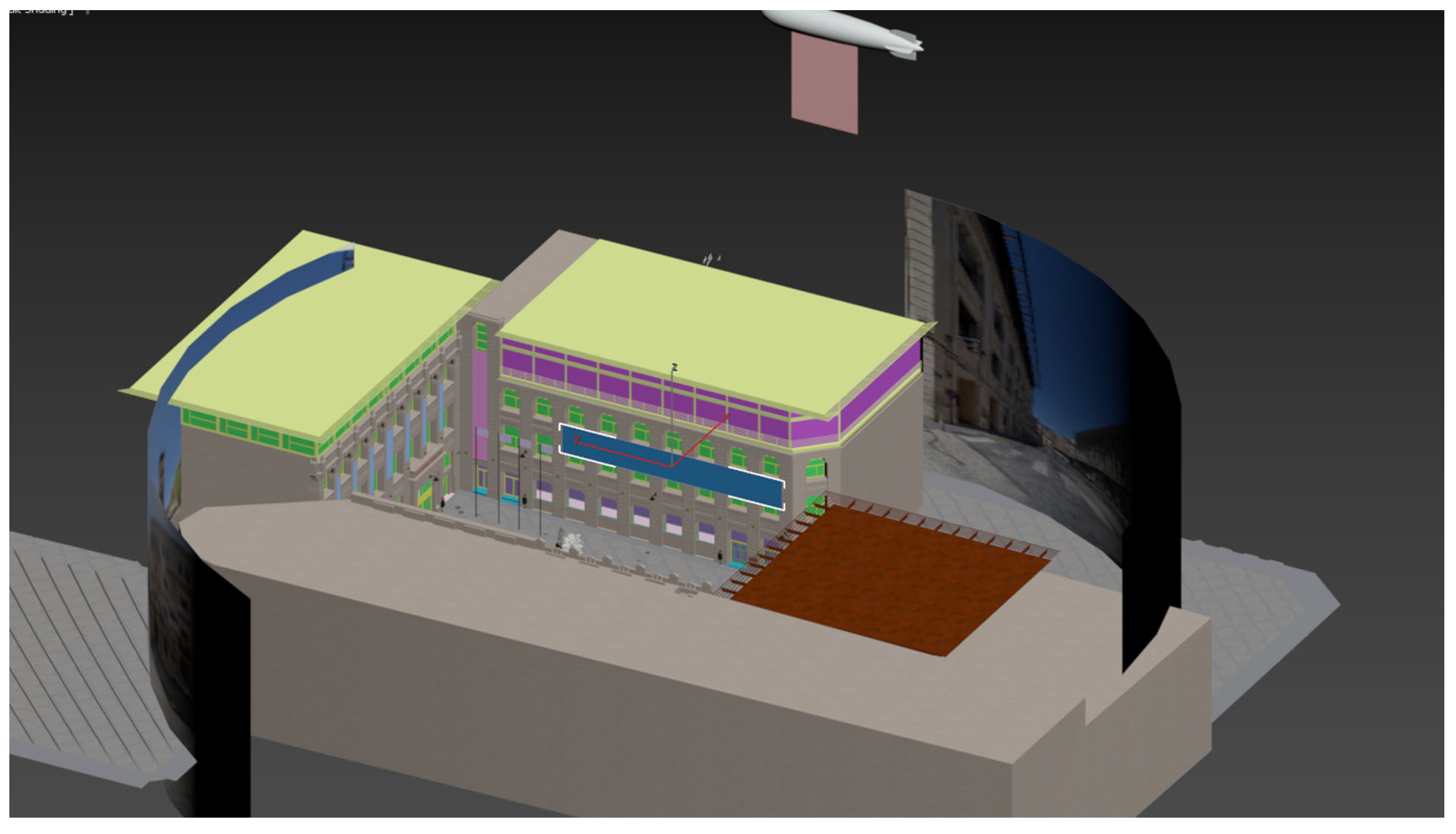

From this point on, nearly 300 photographs were used to define the details of the facades, windows, reliefs, grilles, etc. This allowed the volumes of the facades of the Ferrándiz and Carbonell buildings to be defined using extrusion, sweep, and Boolean 3D modeling operations (see Figure 10).
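Extrusion, the first of the modeling operations mentioned, turns a 2D footprint taken from the plan into a closed solid. The following Python sketch shows the idea for a simple polygon; it is a simplified illustration, not how 3ds Max implements its Extrude modifier, and it assumes the footprint is given counter-clockwise, without a repeated closing vertex, with Z as the up axis (as in 3ds Max).

```python
from typing import List, Tuple

Point2 = Tuple[float, float]
Point3 = Tuple[float, float, float]


def shoelace_area(footprint: List[Point2]) -> float:
    """Signed area of a simple polygon; positive when vertices run counter-clockwise."""
    n = len(footprint)
    return 0.5 * sum(
        footprint[i][0] * footprint[(i + 1) % n][1]
        - footprint[(i + 1) % n][0] * footprint[i][1]
        for i in range(n)
    )


def extrude(footprint: List[Point2], height: float) -> Tuple[List[Point3], List[List[int]]]:
    """Extrude a closed 2D footprint along +Z into a prism (vertices + polygon faces)."""
    n = len(footprint)
    if n < 3 or height <= 0:
        raise ValueError("need at least three vertices and a positive height")
    bottom = [(x, y, 0.0) for x, y in footprint]
    top = [(x, y, height) for x, y in footprint]
    vertices = bottom + top
    faces = [
        list(range(n - 1, -1, -1)),  # bottom cap, reversed so its normal points down
        list(range(n, 2 * n)),       # top cap, normal points up
    ]
    for i in range(n):
        j = (i + 1) % n
        faces.append([i, j, n + j, n + i])  # outward-facing side quad for edge i -> j
    return vertices, faces
```

For a 4 m × 3 m footprint extruded to 10 m, the sketch returns 8 vertices and 6 faces, and the footprint area (12 m²) multiplied by the height gives the 120 m³ volume of the resulting prism. Sweeps and Boolean operations would then refine this base volume with mouldings, reliefs, and openings.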

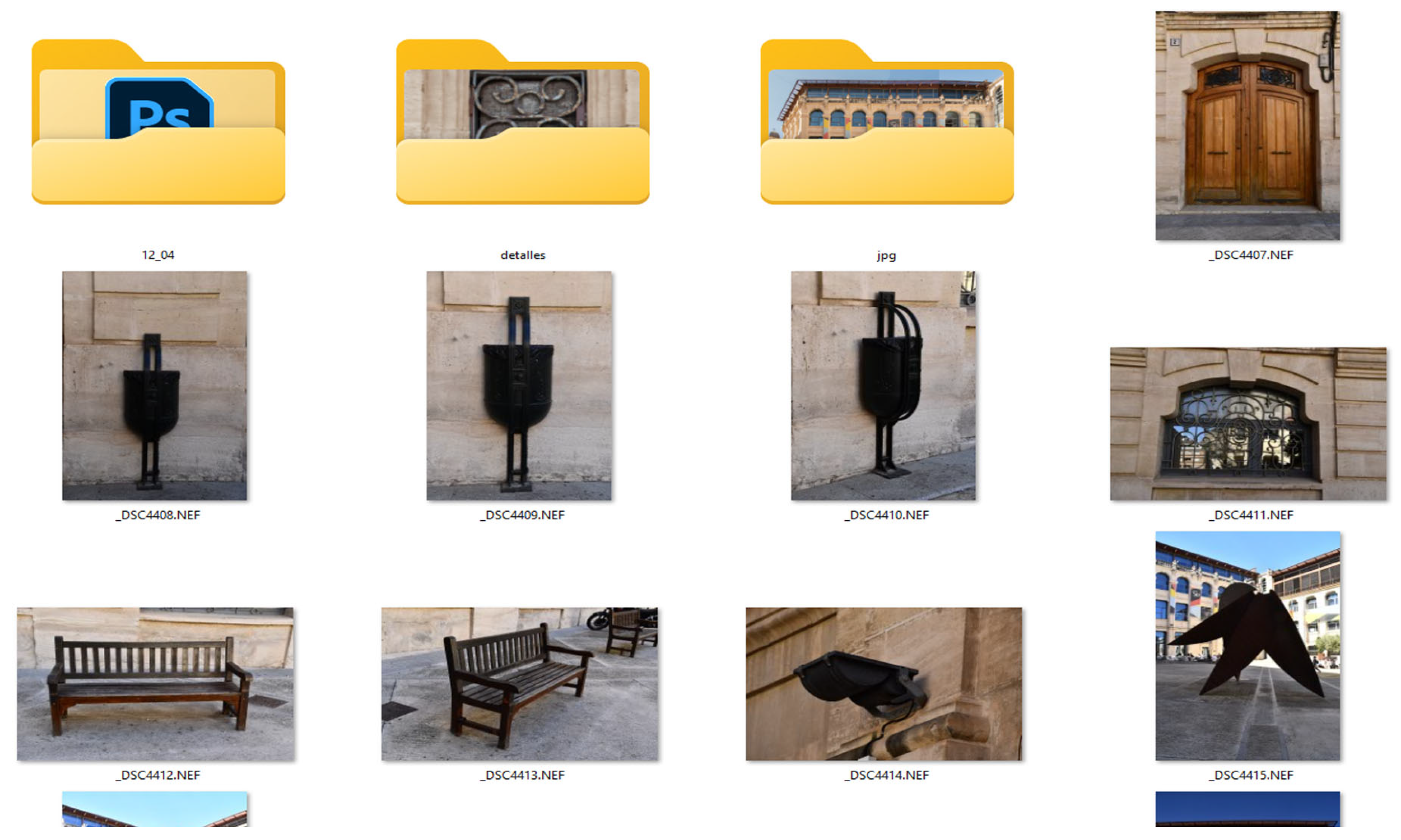

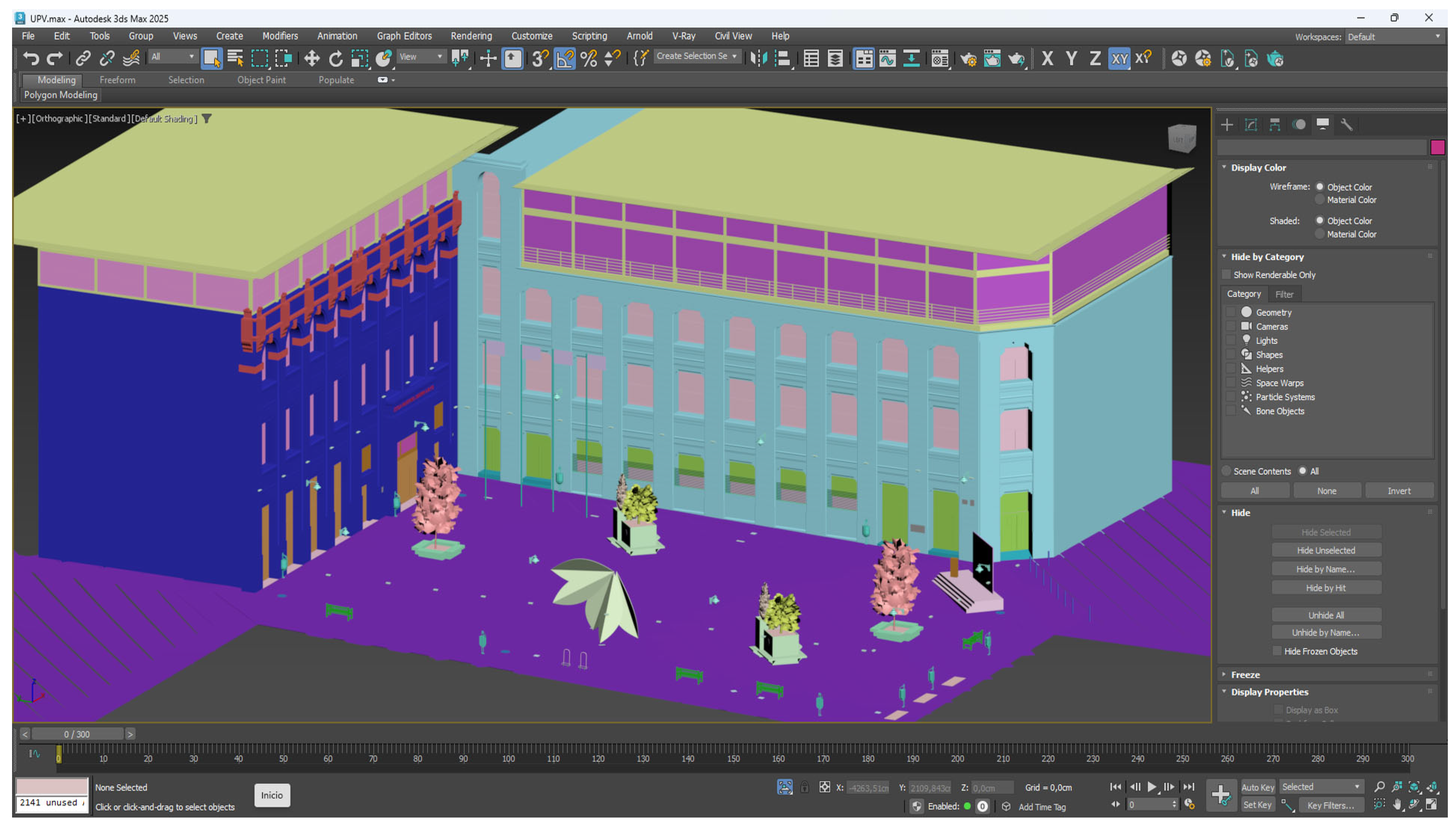

Once the volumes of the facades were defined, the photographs were used again to build the existing decorative objects: benches, trash cans, planters, sculptures, etc. As in the previous case, we relied on measurements of those elements that were accessible (see Figures 11 and 12).

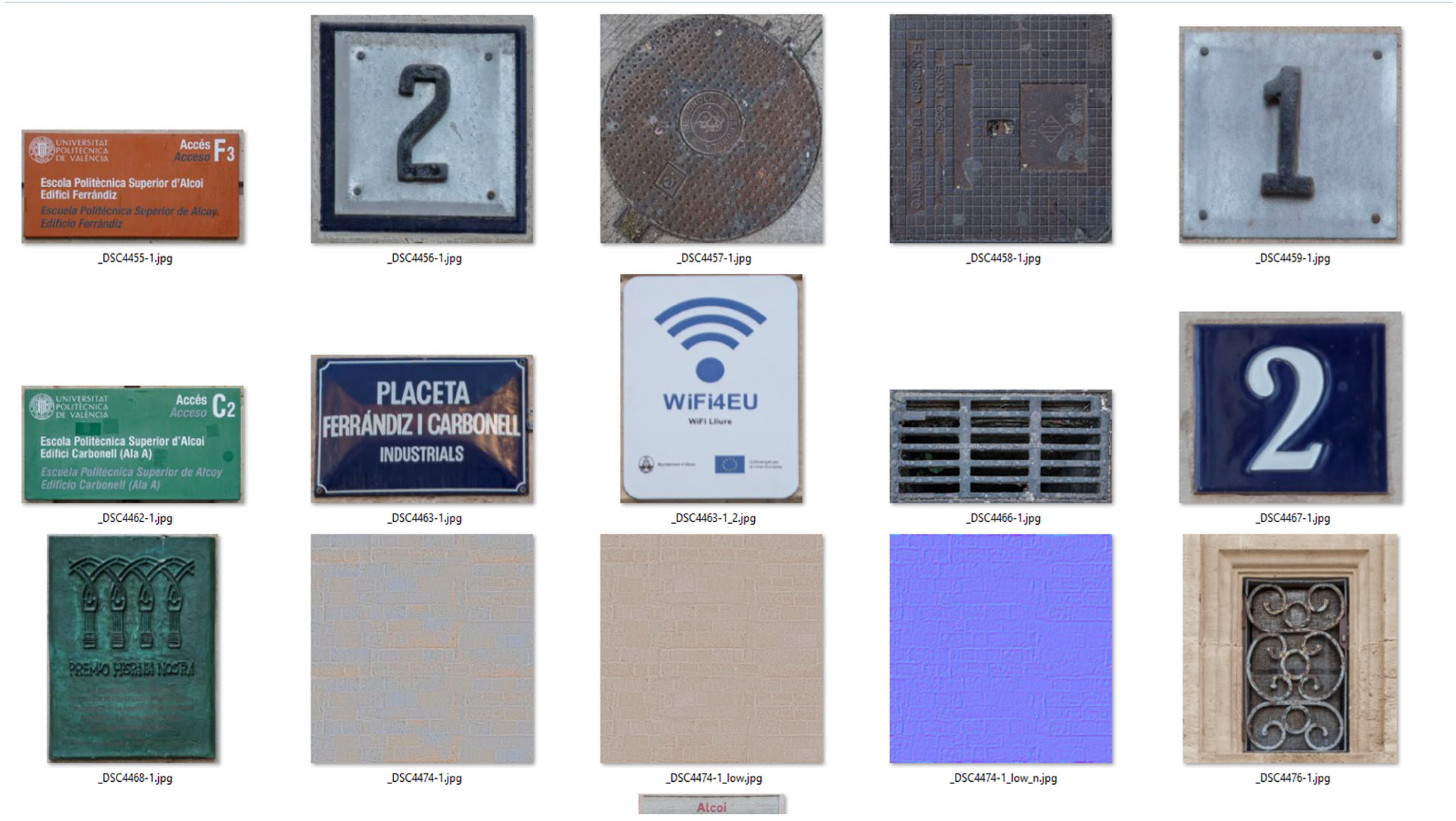

The DT modeling was completed with the flag poles, the spaces for the commemorative tarpaulins, and a pair of conference lecterns with a rear screen to show corporate videos of the 50th anniversary event and the Alcoi campus. To give it greater realism, a flock of pigeons and a zeppelin identified with the 50th anniversary logo were added, a nod to the Japanese series "Alice in borderlands". Once the modeling was finished, texturing began, cutting out images of posters, manholes, doors, etc., from the taken photographs. In this case, we had to use Adobe Photoshop version 25 to crop each texture part. The Alpha channels had to be worked on for those textures with transparent parts like backgrounds or grilles. Similarly, normal maps had to be generated to add more realism to those textures with roughness, such as the cement in the square. See

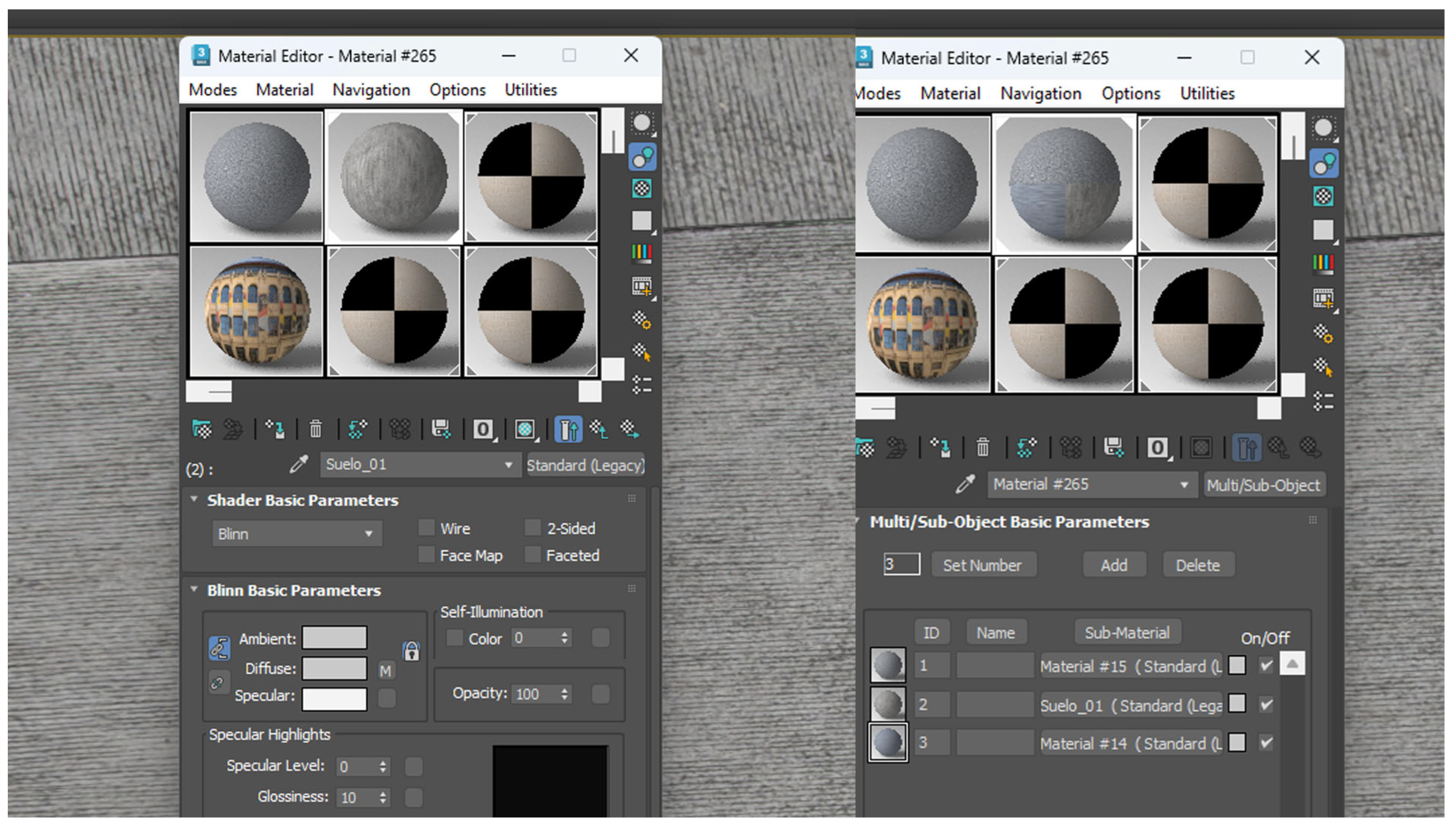

Figure 13. To texture the 3D models, the Standard (Legacy) material type was used to place the diffuse, opacity, and bump (normal) maps. In those 3D models with different textures, the Multi/Sub-Object material type was used to identify the parts of the model and their corresponding textures by ID. See

Figure 14.The trees (two elms and two olive trees) were imported from 3D model libraries and already had the opacity maps incorporated to define their leaves. To finish the texturing, 2 plans were created at both entrances to the square with applied photographs of the part of the IVAM CADA Alcoi building and the Salesian school, adjacent to the Ferrándiz and Carbonell buildings. These plans will delimit the visitable area of the immersive space. See

Figure 15. The tarpaulins are expected to be animated with the graphic image of the event, so they have been modeled in 3dsmax, but they will be textured in Unity.
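The normal maps mentioned above for rough surfaces, such as the cement in the square, encode surface slope as color. The following dependency-free Python sketch derives one from a grayscale height map using central differences; it illustrates the general technique and is not the tool actually used in this project. It assumes that row 0 is the top of the image and, by default, a green-up (OpenGL-style) convention, which is the one Unity expects; `strength` and `green_up` are hypothetical parameters.

```python
import math
from typing import List, Tuple

Pixel = Tuple[int, int, int]


def height_to_normal_map(height: List[List[float]], strength: float = 1.0,
                         green_up: bool = True) -> List[List[Pixel]]:
    """Convert a height map (rows of floats in [0, 1]) into RGB normal-map pixels (0..255).

    Slopes are estimated with central differences; edge pixels reuse their nearest
    neighbour (clamped sampling).
    """
    rows, cols = len(height), len(height[0])
    out: List[List[Pixel]] = []
    for y in range(rows):
        row: List[Pixel] = []
        for x in range(cols):
            left = height[y][max(x - 1, 0)]
            right = height[y][min(x + 1, cols - 1)]
            up = height[max(y - 1, 0)][x]
            down = height[min(y + 1, rows - 1)][x]
            dx = (right - left) * 0.5 * strength   # slope along image columns
            dy = (down - up) * 0.5 * strength      # slope along image rows (downwards)
            # Normal of the surface z = h(x, y); with row 0 at the top, green-up maps
            # use +dy, while DirectX-style maps invert the green channel.
            nx, ny, nz = -dx, (dy if green_up else -dy), 1.0
            length = math.sqrt(nx * nx + ny * ny + nz * nz)
            # Map each component from [-1, 1] to [0, 255].
            row.append(tuple(round((c / length * 0.5 + 0.5) * 255) for c in (nx, ny, nz)))
        out.append(row)
    return out
```

A flat height map yields the neutral color (128, 128, 255), while slopes tilt the encoded normal; setting `green_up=False` inverts the green channel, as required by DirectX-style normal maps.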

2.4. Optimization of models and materials with Unity. Creation of interactions and animations.

Unity is a real-time rendering engine widely used in the interactive software development industry, which stands out for its versatility and ability to generate highly immersive three-dimensional environments. Its relevance in the construction of MV-oriented applications lies in its architecture optimized for the creation and management of interactive virtual spaces, allowing the implementation of immersive experiences on multiple platforms. Unity technology enables the development of dynamic virtual worlds, positioning it as an essential tool in the production of content for video games, VR, AR, and MR, fundamental disciplines in the configuration of the MV. Likewise, its graphics engine and advanced scripting systems allow for the customization of digital experiences with a high degree of interaction and adaptability to various devices and computational environments. In the context of developing immersive experiences and three-dimensional environments, Unity maintains a close relationship with Spatial.io, although both platforms perform different functions within the digital content generation ecosystem. Spatial.io employs Unity as the technological infrastructure for building its virtual environments, leveraging its capability for real-time rendering and simulation of complex interactions. Thanks to this integration, the environments generated in Spatial.io present a high degree of visual realism and optimized interaction capabilities for social, collaborative, and commercial applications within the MV. While Unity provides the computational foundation for the generation and manipulation of these three-dimensional environments, Spatial.io facilitates their accessibility and use within a multiuser interaction context.

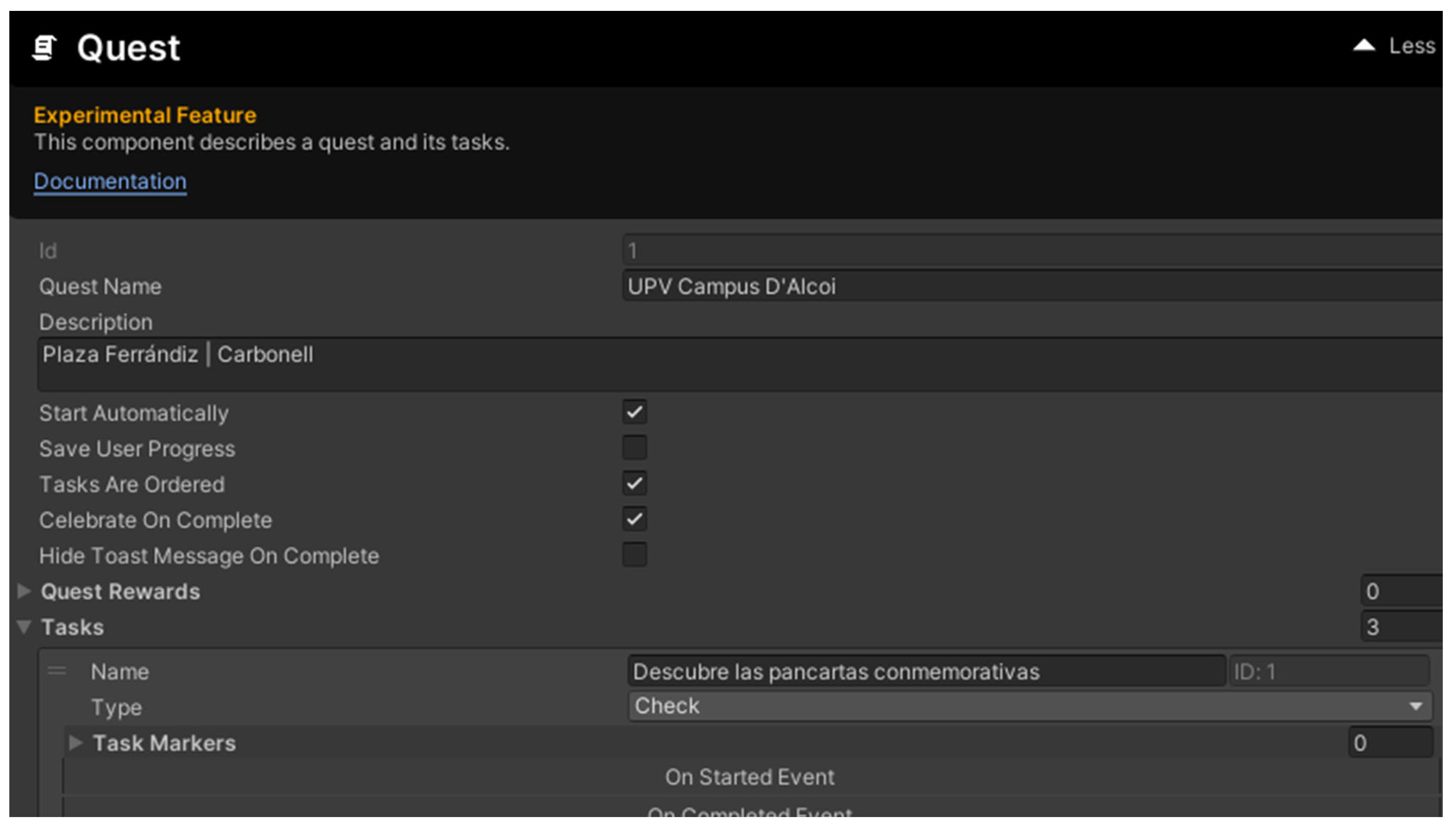

Version 2021.3.21f1 of Unity was used to implement the digital twin of the platform. To configure the environment correctly, the UI Menu Spatial package must be imported into the project. This package provides a series of pre-configured assets that can serve as a reference when building the virtual environment. It is recommended to open the corresponding level and transfer all the elements of its hierarchy to the development project, using them as a structural template for implementing the final design. This direct integration of Spatial with Unity is the reason Unity was chosen over other well-known engines and platforms such as Unreal or Nvidia Omniverse. To enhance user interactivity with the space, a component developed by Spatial was used: the Quest component, which allows a mission system to be created with as many objectives as needed. In our case, the mission system was used to prompt the user to act and to encourage complete exploration of the scenario. See Figure 16.

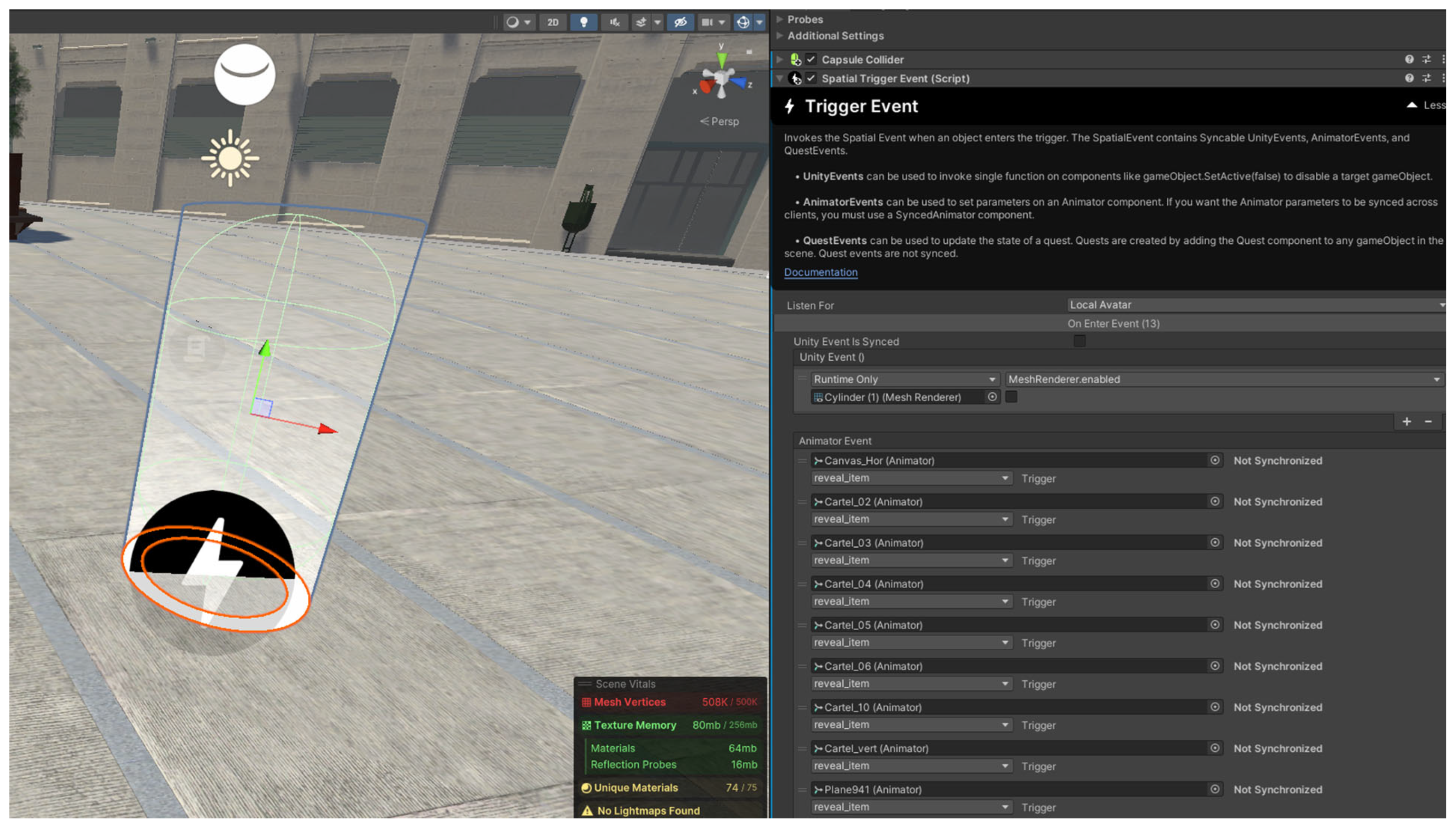

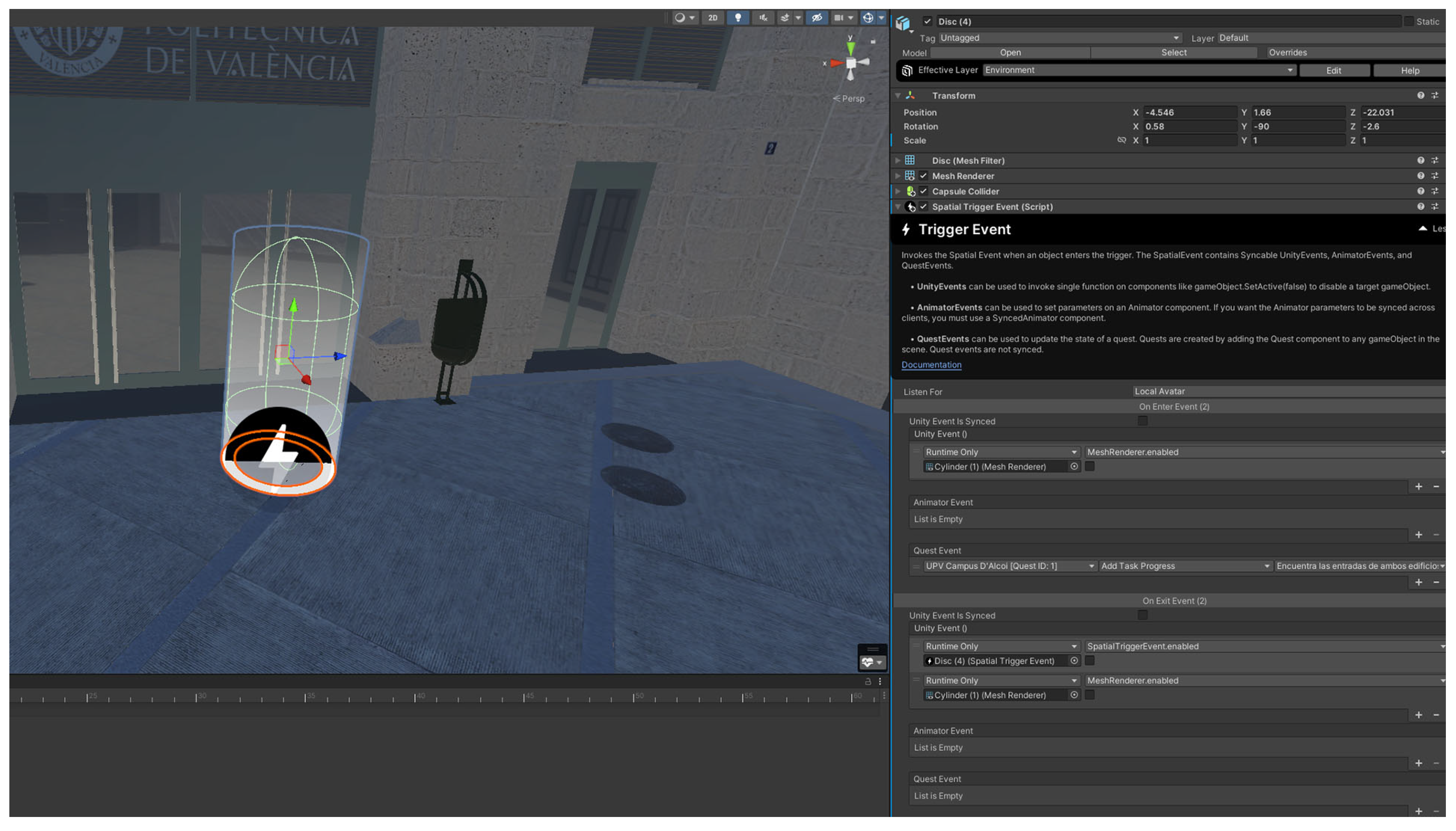

Mission 1: The user must pass through the illuminated cylinder to discover the commemorative banners of the 50th anniversary event. To do this, they must activate the collision trigger defined in the scene. Upon collision, the banner animation starts and the mission system is notified that the objective has been completed, which unlocks the next mission. See Figure 17.

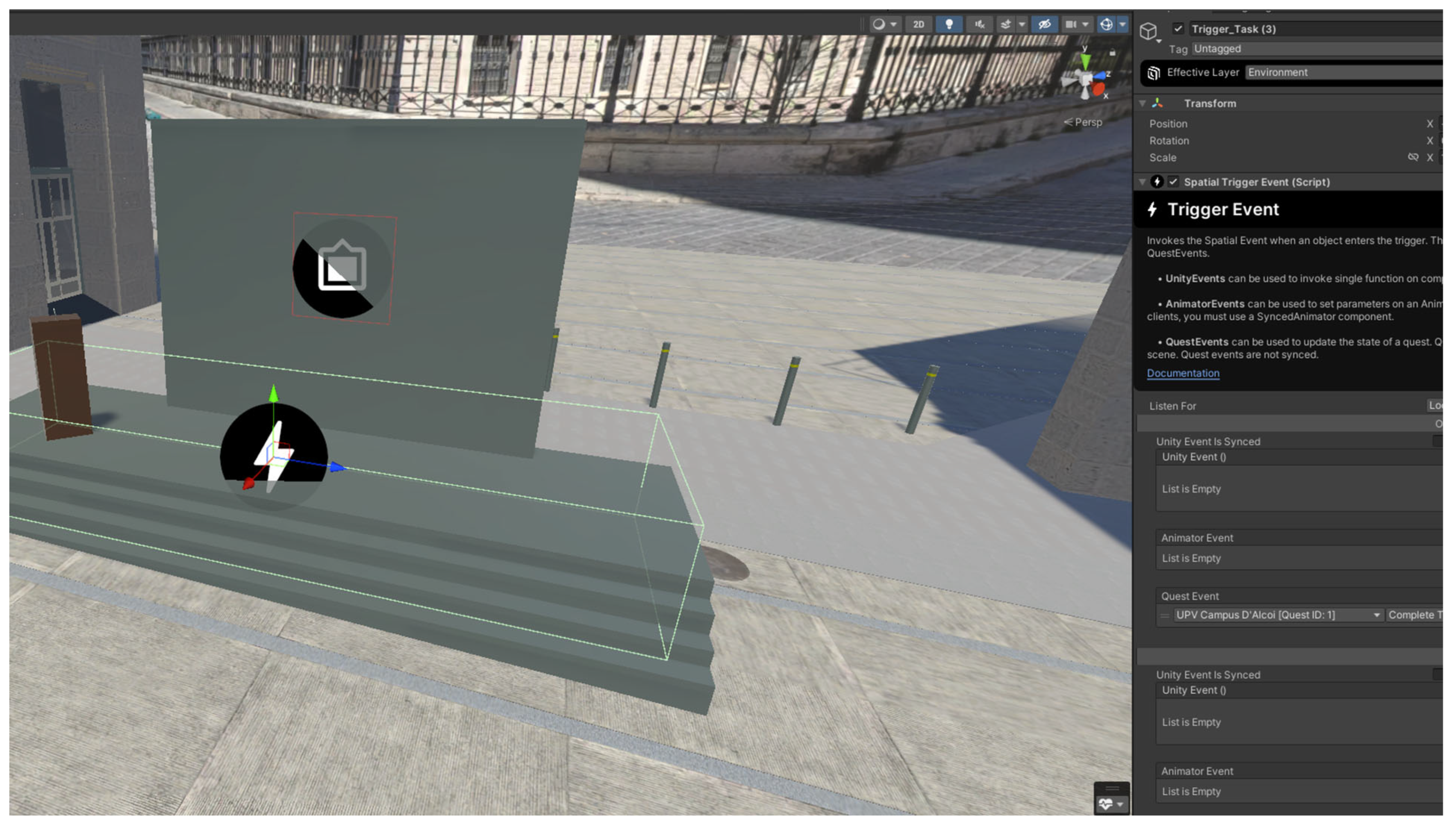

Mission 2: The user must find the three entrances to the buildings of the Alcoi campus (one in the Ferrándiz building and two in the Carbonell building). An element similar to the previous one (a Trigger Event) is reused to register each collision and increase the mission log counter. See Figure 18.

Mission 3: The user must get on stage to complete the final mission. In this case, a Trigger Event collision zone has been set up on the stage to activate the event that checks whether the user has completed the objective. Upon completion, a celebration message appears. See Figure 19.
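The mission logic described above (sequential missions, collision triggers, a counter for the three entrances, and a final celebration) can be summarized as a small state machine. The Python sketch below is only a conceptual model under our own assumptions: it is not Spatial's Quest component or its scripting API, and the class names, trigger identifiers, and the `celebrated` flag are hypothetical stand-ins for the Quest, Trigger Event, and Celebrate On Complete settings described in the text.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Objective:
    """One mission, completed once `required` distinct trigger zones have been entered."""
    name: str
    trigger_ids: set[str]  # hypothetical identifiers of the trigger zones for this mission
    required: int = 1
    hits: set[str] = field(default_factory=set)

    @property
    def done(self) -> bool:
        return len(self.hits) >= self.required


@dataclass
class Quest:
    """Missions are played strictly in order; only the active one reacts to triggers."""
    objectives: list[Objective]
    current: int = 0          # index of the active mission
    celebrated: bool = False  # stands in for a "celebrate on complete" step

    @property
    def finished(self) -> bool:
        return self.current >= len(self.objectives)

    def on_trigger_enter(self, trigger_id: str) -> None:
        """Called when the avatar enters a trigger zone (e.g. the cylinder or a door)."""
        if self.finished:
            return
        active = self.objectives[self.current]
        if trigger_id not in active.trigger_ids:
            return  # zone belongs to a locked or already completed mission
        active.hits.add(trigger_id)  # a set, so re-entering the same door is not counted twice
        if active.done:
            self.current += 1  # unlock the next mission
            if self.finished:
                self.celebrated = True


if __name__ == "__main__":
    quest = Quest([
        Objective("Discover the banners", {"cylinder"}),
        Objective("Find the three entrances",
                  {"ferrandiz_door", "carbonell_door_1", "carbonell_door_2"}, required=3),
        Objective("Get on stage", {"stage"}),
    ])
    for zone in ["stage", "cylinder", "ferrandiz_door", "ferrandiz_door",
                 "carbonell_door_1", "carbonell_door_2", "stage"]:
        quest.on_trigger_enter(zone)
    print(quest.finished, quest.celebrated)  # True True
```

Using a set of visited zones for Mission 2 mirrors the intent of a counter that should advance once per entrance rather than once per collision.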

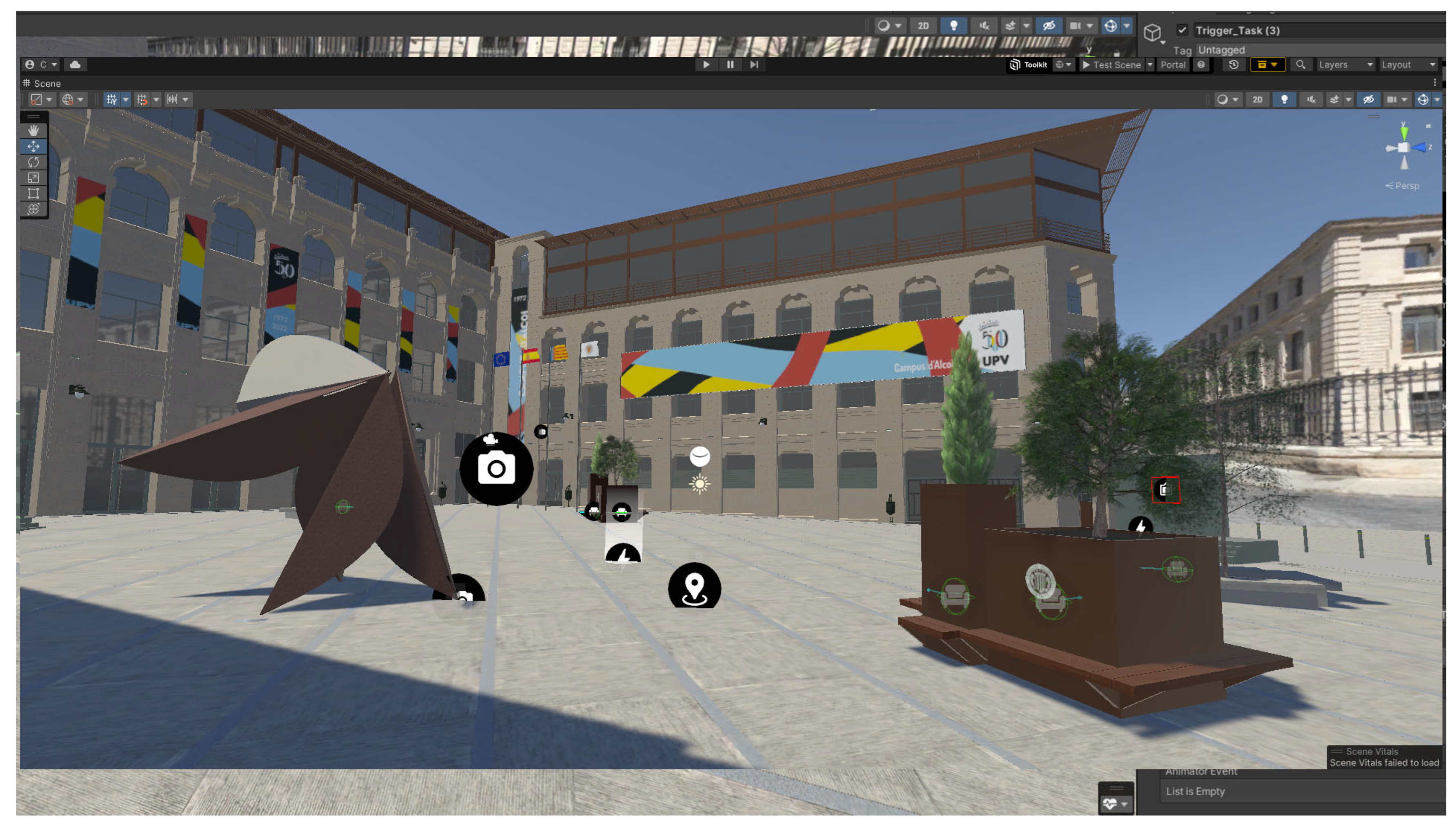

To optimize navigability, all textures used in the scenario were converted to power-of-two (base 2) textures of 1024 × 1024 pixels. Both modeling and texturing must follow video game optimization criteria to avoid performance problems later, during gameplay in the metaverse. To add interactivity to the scene, seating areas have been included on benches and planters using the Seat HotSpot component. This adds realism to the scene and allows people to remain seated during talks or training sessions on the virtual stage. See Figure 20.
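To make the power-of-two constraint concrete, the following Python sketch checks texture dimensions and estimates texture memory. It assumes uncompressed 32-bit RGBA (4 bytes per pixel) and a full mipmap chain; in practice Unity usually stores textures in compressed, platform-specific formats, so real memory use is lower. The function names are illustrative and not part of any Unity API.

```python
def is_power_of_two(n: int) -> bool:
    """True for 1, 2, 4, 8, ...; a power of two has exactly one set bit."""
    return n > 0 and (n & (n - 1)) == 0


def next_power_of_two(n: int) -> int:
    """Smallest power of two >= n (e.g. 1000 -> 1024)."""
    return 1 if n <= 1 else 1 << (n - 1).bit_length()


def texture_bytes(width: int, height: int, bytes_per_pixel: int = 4, mipmaps: bool = True) -> int:
    """Uncompressed size in bytes, optionally including the full mipmap chain down to 1x1."""
    if not (is_power_of_two(width) and is_power_of_two(height)):
        raise ValueError("expected power-of-two dimensions")
    total, w, h = 0, width, height
    while True:
        total += w * h * bytes_per_pixel
        if not mipmaps or (w == 1 and h == 1):
            return total
        w, h = max(1, w // 2), max(1, h // 2)  # each mip level halves both sides


if __name__ == "__main__":
    print(next_power_of_two(1000))                   # 1024
    print(texture_bytes(1024, 1024, mipmaps=False))  # 4194304 (4 MiB)
    print(texture_bytes(1024, 1024))                 # 5592404 (about 5.3 MiB)
```

Under these assumptions, a 1024 × 1024 texture takes 4 MiB without mipmaps and about one third more with them, so the number and size of textures directly affect how smoothly the scene runs on headsets and phones.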

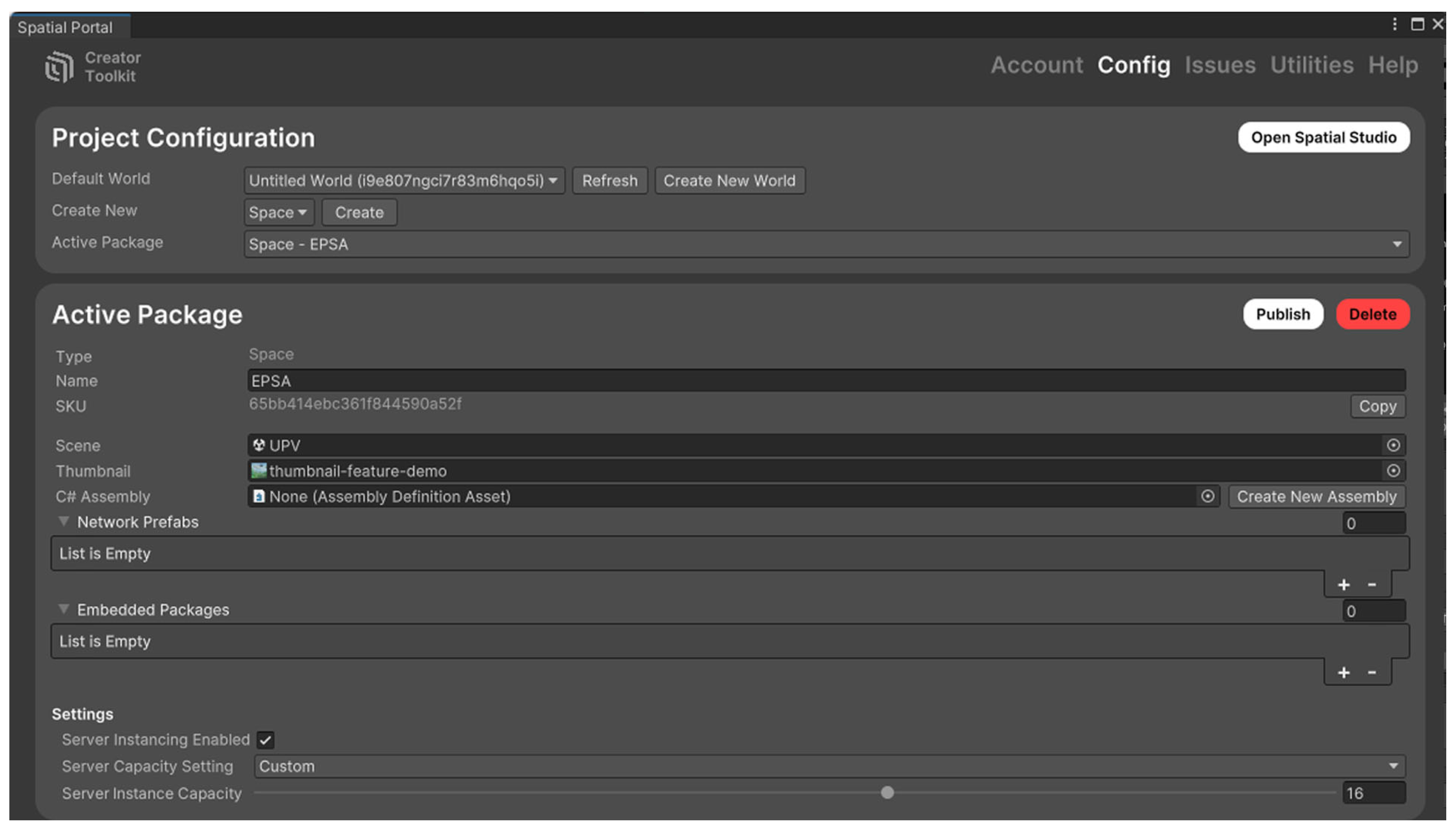

2.5. Spatial.io. Insertion of multimedia material.

In the final phase of the creation process, the scene is exported from Unity to Spatial. From the Spatial Portal tab in Unity, we access the Creator Toolkit menu, where we can configure the name of the scene and the thumbnail displayed when it is launched in Spatial. Beforehand, we must sign in with our Spatial account details. Clicking the Publish button starts the publication process. A confirmation email indicating that the scenario has been published normally arrives after 20 to 30 minutes. See Figure 21.

Spatial.io is an advanced platform that redefines virtual collaboration by creating immersive 3D spaces where users can meet, work, and interact. Drawing on AR and VR, it offers an intuitive environment that enhances communication and productivity. Users can design personalized virtual spaces tailored to their needs, such as galleries to showcase art, virtual offices for team collaboration, or interactive educational spaces. The platform supports importing 3D models and assets from various design programs, making it easier for artists, designers, and architects to bring their creations into the virtual environment. Spatial.io also supports the simultaneous collaboration of multiple users in the same virtual space, which is ideal for brainstorming sessions, joint projects, or collaborative design reviews. Users can build immersive presentations and demonstrations for proposals, educational seminars, or product exhibitions, offering more engaging experiences. Every aspect of the virtual space is customizable, from layout and design to specific features and functionalities, so that the environment adapts to the needs of each project. Spatial.io integrates with various tools and platforms, allowing documents, videos, and interactive widgets to be incorporated into virtual spaces, which enhances the functionality and interactivity of the environment. The platform can host events, workshops, and experiences within virtual spaces, with event management features such as spatial audio and attendee management. Virtual spaces on Spatial.io can be shared via links, making it simple to invite collaborators, clients, or audiences to explore and interact in the space without complex setup.
Additionally, Spatial.io offers a wide range of premium templates that require no coding, allowing creators to design interactive spaces easily. The platform is compatible with VR, AR, web, and mobile devices, ensuring a smooth and accessible experience from any device.

Spatial was chosen over other platforms such as Roblox or Meta Horizon because it is specifically designed to create professional and collaborative environments in the metaverse, making it well suited to virtual meetings, digital art galleries, exhibitions, and business events. It supports importing high-quality 3D models and uses advanced rendering that provides more realistic graphics than Roblox's more cartoonish and simplified style. In addition, it is optimized for VR and AR experiences, which makes it a good choice for immersive presentations, virtual tours, and more advanced interactive experiences. Spatial.io lets users create experiences without coding, thanks to no-code building tools and pre-built templates. Roblox, by contrast, requires knowledge of Lua (in Roblox Studio) to develop more advanced experiences, which is a barrier for users without programming experience. Spatial.io is accessible through web browsers, VR headsets (Meta Quest, HTC Vive), and mobile devices without installing additional software, whereas Roblox requires installing a dedicated application on each platform and does not offer direct browser support for advanced experiences. In short, we chose Spatial.io because it is the best option for building our platform for virtual events, collaborative work, exhibitions, and high-quality immersive environments. For video games or monetization, however, Roblox would have been the better option. A low-angle view of the starting point of the scenario can be seen in Figure 22.

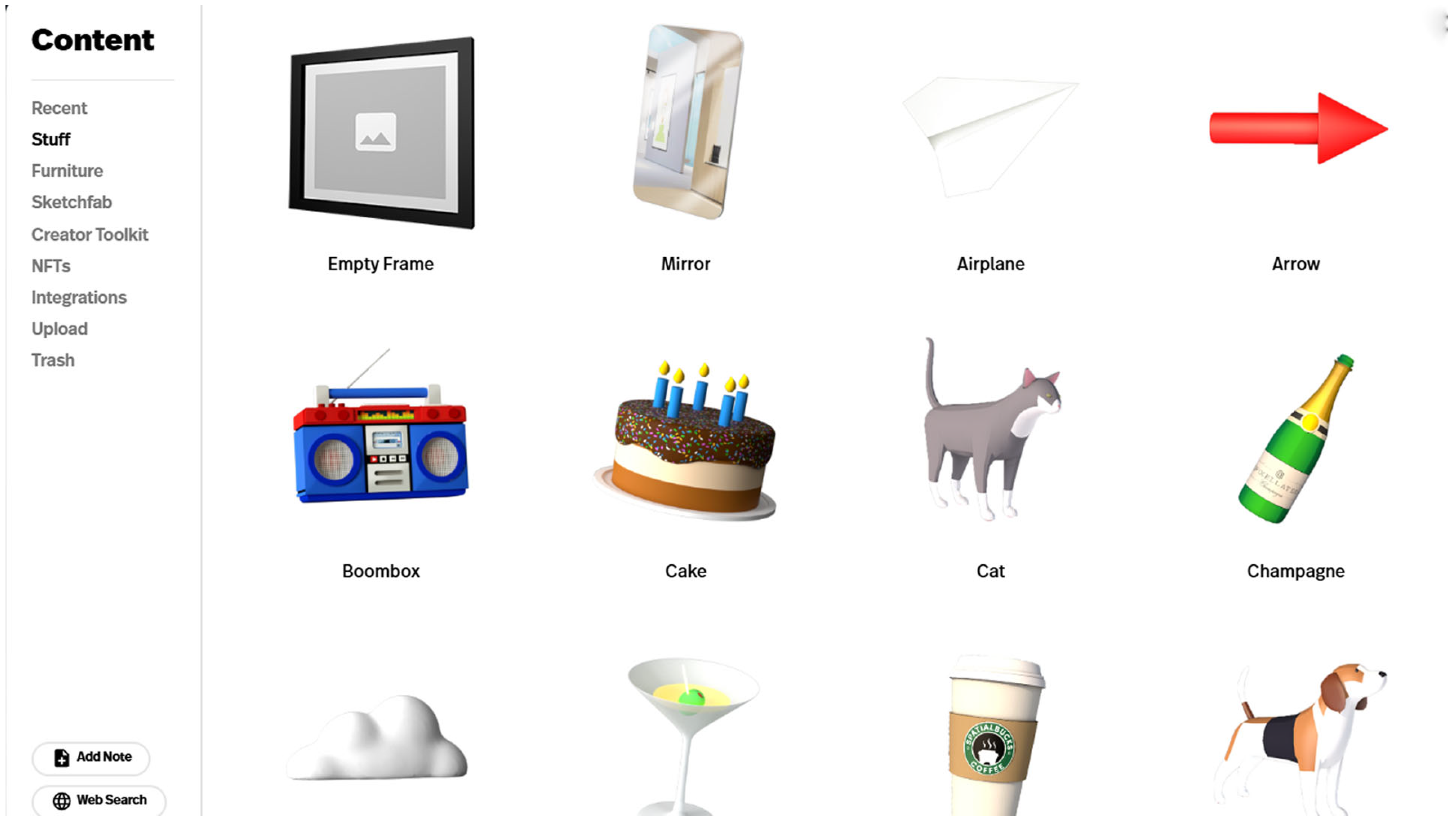

In this final step, we upload all the multimedia content online; in our case, the videos for the two stages, one presenting the Alcoi campus and the other showing the commemorative video of the 50th anniversary. A drawback of Spatial is that this content cannot be inserted from Unity. Instead, a component called Empty Frame must be placed wherever images, PowerPoint presentations, PDFs, or videos are to appear, and the content is then inserted online once the scene has been published. The advantage is that this content can be edited without republishing the scenario. See Figure 23.

One of the main advantages of Spatial.io is its MV-adapted interface, with socialization tools such as text and voice chat, emoticons, and dance animations for the avatar. Its Share icon lets us invite the entire community by email or social media to announce an event. As discussed in the Introduction, many companies have built their MV with a highly vertical structure and high implementation and maintenance costs. We believe that Spatial.io is a more horizontal tool, accessible to all types of audiences, that allows any business, entity, or organization to create its own space in the MV and share it with the community.

2.6. Research Design.

To validate the versions of the digital twin in this research, Meta Quest 3 glasses, a PC, and an iPhone X were used. Before each test with the VR glasses, a technician gave a short tutorial explaining the purpose of the test. The technician explained how to put on the glasses and use the controllers and their buttons, as well as how to navigate through the space and its menus. Specific tasks were established to evaluate the experience, and the tools for socializing, creating avatars, and capturing images and video were described. Users were warned that they might feel dizzy and were advised to move slowly and gently. During the test, the technician accompanied each user virtually with their own avatar, to prevent them from getting lost and to ensure they used the Spatial tools correctly. Once users felt comfortable, they were allowed to navigate freely through the environment without any time limit. The test therefore comprised three phases: a first phase with directed tasks, in which specific actions were assigned to evaluate key functionalities; a second phase, in which users navigated freely through the environment without restrictions; and a third phase with real-time feedback, in which reactions were observed and verbal comments were recorded. The test ended when the user asked to leave the scenario. The same process was followed for the tests with the laptop and the iPhone. These users entered the same scenario, where they coexisted with the person who was simultaneously testing with the Meta Quest 3, although each test was conducted individually on its own platform. The glasses and the mobile phone used the campus Wi-Fi network at 500 Mb/s, and the PC used the 1 Gb/s LAN.
In the first evaluation stage (Tester Version), the digital twin was evaluated by 10 colleagues from the Department of Graphic Expression. Based on their feedback, a second version (Student Version) was created and evaluated by 265 students from the Industrial Design Degree.

2.7. Data Analysis.

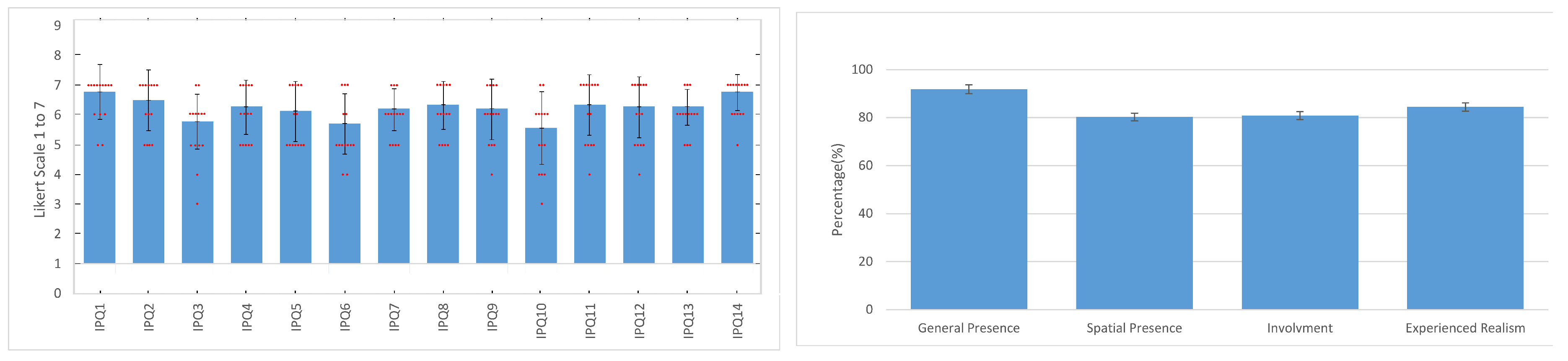

To validate the methodology employed in the trials, interviews were conducted with the students, and tests were carried out to evaluate their satisfaction with the immersive experience using VR glasses (Case 1), the PC (Case 2), and the iPhone X (Case 3). Each user completed two standard questionnaires: the Igroup Presence Questionnaire (IPQ) and the System Usability Scale (SUS). The IPQ is designed to measure people's sense of presence in virtual environments such as VR. Presence refers to the subjective sensation of truly "being" in a virtual environment, beyond simply interacting with a digital simulation. The questionnaire was developed to evaluate this immersive experience and is widely used in studies of VR and interactive digital environments. The IPQ consists of 14 questions that evaluate different aspects of the presence experience: (1) Spatial Presence, which evaluates the perception of "being physically in another place" while in the virtual environment; (2) Immersive Presence, which measures the level of concentration on and interest in the virtual environment, where greater involvement suggests that the user has emotionally "immersed" themselves in the experience; (3) Presence Realism, which captures the perceived realism of the virtual environment, that is, whether users find the environment "believable" or similar to the real world; and (4) General Presence, a more general measure that complements the other dimensions and provides a global view of the presence experience. The IPQ is used to evaluate how effectively virtual environments generate an immersive experience, to compare VR or AR technologies in terms of the sense of presence they generate, and to improve the design of immersive experiences in video games, training simulations, exposure therapy, and educational applications. The 14 questions of the IPQ are detailed in Table 1. Responses are given on a 7-point Likert scale. In this article, the variables of each test are analyzed by computing the mean score of the questions in each test and its standard deviation.
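The per-test analysis described above (mean and standard deviation of the item scores, grouped by dimension) could be sketched in Python as follows. Two assumptions are ours and should be checked against the official IPQ scoring instructions before reuse: the assignment of the 14 items of Table 1 to the four dimensions (item 1 general, items 2 to 6 spatial, 7 to 10 immersion, 11 to 14 realism), and the set of reverse-keyed items, which here is a placeholder based only on negative wording. Answers are taken on a 1 to 7 scale.

```python
from __future__ import annotations

from statistics import mean, stdev

# Assumed item-to-subscale mapping, following the order of the items in Table 1.
SUBSCALES = {
    "General Presence": [1],
    "Spatial Presence": [2, 3, 4, 5, 6],
    "Immersive Presence": [7, 8, 9, 10],
    "Presence Realism": [11, 12, 13, 14],
}

# Placeholder set of reverse-keyed items, chosen only from the negative wording in Table 1.
REVERSED = {3, 4, 9}


def recode(item: int, value: int, scale_max: int = 7) -> int:
    """Flip a 1..scale_max answer for reverse-keyed items so higher always means more presence."""
    return scale_max + 1 - value if item in REVERSED else value


def subscale_scores(answers: dict[int, int]) -> dict[str, float]:
    """Mean (recoded) answer per subscale for one participant; maps item number -> 1..7."""
    return {name: mean(recode(i, answers[i]) for i in items) for name, items in SUBSCALES.items()}


def summarize(participants: list[dict[int, int]]) -> dict[str, tuple[float, float]]:
    """Mean and sample standard deviation of each subscale across participants (needs >= 2)."""
    per_person = [subscale_scores(p) for p in participants]
    return {
        name: (mean(s[name] for s in per_person), stdev(s[name] for s in per_person))
        for name in SUBSCALES
    }
```

Keeping the mapping and the reverse-keyed set as module-level constants makes both assumptions easy to inspect and replace.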

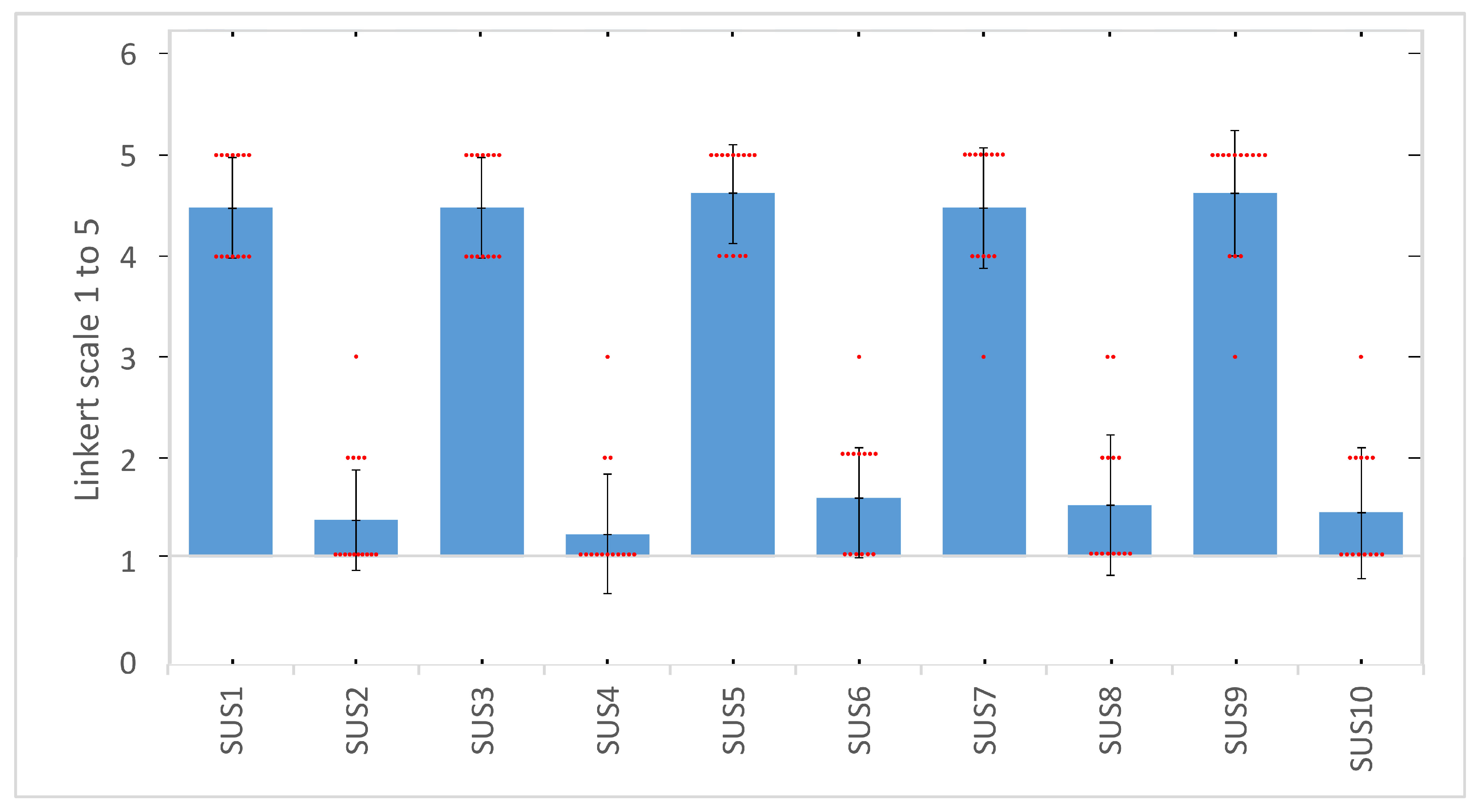

The SUS, in turn, is a 10-question questionnaire designed to evaluate the usability of systems and technological products such as websites, applications, and devices. It was developed in 1986 by John Brooke and is widely used because it is quick, easy to administer, and effective for obtaining a general measure of usability. The 10 questions are detailed in Table 2, and users answer them after interacting with the system or product. The questions alternate between positive and negative statements to reduce bias, and they cover aspects of both system usability and learnability. Each statement is rated on a 5-point Likert scale, from 1 (strongly disagree) to 5 (strongly agree). The SUS yields a score from 0 to 100. This score is not a percentage; rather, it allows the relative usability of different systems to be compared and indicates whether a system is easy or difficult to use (see Figure 24). The formula can be expressed mathematically as follows:

$$
\mathrm{SUS} = 2.5 \left[ \sum_{i \in \{1,3,5,7,9\}} \left(Q_i - 1\right) + \sum_{i \in \{2,4,6,8,10\}} \left(5 - Q_i\right) \right]
$$

In this formula, the variables $Q_1$ to $Q_{10}$ are the answers to the 10 questions. To evaluate the responses of all the tests, the mean and standard deviation are calculated. See Table 2.
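The SUS scoring rule (Figure 24) translates directly into code: odd-numbered, positively worded items contribute $Q_i - 1$, even-numbered items contribute $5 - Q_i$, and the sum is multiplied by 2.5. The following Python sketch computes the score for one participant and then the mean and standard deviation across participants, as described in the text; the function names are illustrative, and the use of the sample standard deviation (`statistics.stdev`) is our assumption, since the text does not say which variant was used.

```python
from __future__ import annotations

from statistics import mean, stdev


def sus_score(answers: list[int]) -> float:
    """SUS score (0-100) from the 10 answers SUS1..SUS10, each on a 1-5 scale."""
    if len(answers) != 10 or any(not 1 <= a <= 5 for a in answers):
        raise ValueError("expected 10 answers in the range 1-5")
    positive = sum(a - 1 for a in answers[0::2])  # SUS1, SUS3, SUS5, SUS7, SUS9
    negative = sum(5 - a for a in answers[1::2])  # SUS2, SUS4, SUS6, SUS8, SUS10
    return 2.5 * (positive + negative)


def summarize_sus(all_answers: list[list[int]]) -> tuple[float, float]:
    """Mean and sample standard deviation of the SUS scores of several participants."""
    scores = [sus_score(a) for a in all_answers]
    return mean(scores), stdev(scores)


if __name__ == "__main__":
    print(sus_score([3] * 10))      # 50.0: neutral answers everywhere
    print(sus_score([5, 1] * 5))    # 100.0: best possible answers
```

A participant who answers 3 (neutral) to every item scores 50, which illustrates why the SUS should not be read as a percentage.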

4. Discussion

The public presentation of the alpha version of the Digital Twin took place on 7 April 2022, at the 50th Anniversary Gala of the union between the University School of Industrial Technical Engineering and the UPV. A small stand was set up with a PC and Meta Quest 3 glasses, both connected to the Alcoi campus Wi-Fi network. The two devices were logged in to the same Spatial account, and the PC's video output was connected to a 50-inch television screen so that the audience at the gala could see the same virtual space as the people testing the application. See Figure 32.

An experienced technician explained to each participant how the glasses and their controllers worked. He entered the space with the users, using a mobile device (iPhone X), and acted as a guide to explain how the DT application functions. Once the technician judged that a user was navigating the interface with ease, the user was given complete freedom to test each of the functions. The Alcoi campus DT application was presented as a base platform through which each department can disseminate knowledge in the virtual space. Conferences, workshops, and virtual events held there make this knowledge accessible to any user who connects online to the Spatial.io application. After the experience, attendees were given a technical questionnaire with five qualitative and two quantitative questions. See Table 3. Forty randomly selected users aged 16 to 70 years completed this survey, which aimed to measure their level of satisfaction during the experience and their assessment of the quality of the DT.

Users could respond on a scale from 1 ("Very Unsatisfactory") to 5 ("Very Satisfactory"). The average score was 4.5, with a standard deviation of 0.7, indicating that users were satisfied with the experience. Only six respondents rated any question with a value of 1, as they had difficulty navigating with the VR glasses. In total, 85% of the users expressed satisfaction with the use and navigation of the digital twin and its missions, and only two had difficulty completing the missions. These data will be used both to improve the explanatory tutorial and to add visual aids within the virtual environment that help users manage the application's internal tools. Overall, the experience was satisfactory for most users, but in the discussions that followed, they suggested improvements such as voice-over and interactive aids, a virtual tour explaining the application's functionalities, and graphical enhancements. The first comments reflected users' surprise on entering the application and seeing a highly detailed replica of a place they knew in reality. As they explored the space, they pointed out possible improvements: "It is very fun and useful to visualize the square and the campus buildings in real time, many activities can be organized here, but it makes you a bit dizzy." "Can this type of application be used for other events like concerts or video games?" "I was a bit lost at first, but then I felt completely integrated into the square. It is a very useful tool." These valuable responses were used for subsequent improvements, especially to the introductory tutorial, from which explanatory text was removed and more graphic information was added. Explanatory signs were also inserted to show the user the functions of the keyboard. Some examples of the new explanatory signs can be seen in Figure 33.
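The summary figures reported above (mean, standard deviation, share of satisfied users, and number of ratings of 1) can be reproduced with a few lines of Python. This is a sketch: the cut-off of 4 for "satisfied" is our assumption, since the text does not state how satisfaction was defined, the sample standard deviation is also assumed, and the ratings in the test are invented for illustration, not the study's data. As a quick arithmetic check, 85% of the 40 respondents corresponds to 34 users.

```python
from __future__ import annotations

from statistics import mean, stdev


def satisfaction_summary(ratings: list[int], satisfied_from: int = 4) -> dict[str, float]:
    """Summary of 1-5 ratings; 'satisfied' means rating >= satisfied_from (an assumed cut-off)."""
    if len(ratings) < 2:
        raise ValueError("need at least two ratings for a sample standard deviation")
    return {
        "n": len(ratings),
        "mean": mean(ratings),
        "sd": stdev(ratings),
        "share_satisfied": sum(r >= satisfied_from for r in ratings) / len(ratings),
        "ratings_of_1": ratings.count(1),
    }
```

Reporting the share of satisfied users alongside the mean helps, because a high mean can hide a small group of very low ratings such as the six ratings of 1 mentioned above.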

These changes aim to optimize navigation and the user experience within the immersive environment. In the future, we plan to increase the number and diversity of users, as well as the use of VR devices, in order to analyze the interaction between multiple individuals and the communicative dynamics that may arise through chat, voice, or other means. Likewise, updates and improvements to the Spatial Creator Toolkit will be evaluated and, where relevant, incorporated into the corresponding applications. The applications developed in this study have high potential in the educational field, as they enable knowledge to be disseminated in an interactive and innovative manner [60]. Future lines of research include the industrial application of these DTs [61,62], their use in sporting events within the University [63,65], applications in the field of health, such as coordinating medical leave for students and professors [66,67], and expansion to other campus buildings [68,71].

It is also essential to consider user privacy and data protection. In the current version of the application, the tests were conducted anonymously; in the future, however, clients may require the collection of user data, which will make compliance with current data protection regulations essential. Although this technology promotes global connectivity, accessibility, and inclusion, it will not completely replace human interaction. In addition, the environmental impact and sustainability of display devices must be considered. These devices should evolve towards more eco-friendly and recyclable materials, following a trend similar to that observed in the mobile phone industry, where more compact devices with sustainable materials have been developed. In this regard, Meta has developed a prototype of AR glasses, called Orion (see Figure 34), which features a lighter, more efficient, and more sustainable design than the current Meta Quest 3. Although their initial cost is high, such glasses are expected to eventually become everyday devices that integrate multiple functionalities, replacing devices such as mobile phones, smartwatches, and headphones with the incorporation of VR and AR experiences.

Figure 1.

BMW engineers testing their digital twin in Regensburg (Germany).

Figure 2.

Poster announcing Lil Nas X's concert in Roblox.

Figure 3.

Detail of the event celebrating the 50th anniversary of the incorporation of the University School of Industrial Technical Engineering of Alcoi to the UPV.

Figure 4.

Detail of the methodology used to create the digital twin of the Alcoi campus.

Figure 5.

Logo design: black and white version: negative, positive. Color version.

Figure 6.

Sequence of the transformation of the logo from the UPV coat of arms.

Figure 7.

Installation of structures using aerial platforms. Plaza of the Alcoi campus of the UPV.

Figure 8.

Lighting of graphic elements. Plaza of the Alcoi campus of the UPV.

Figure 9.

Detail of the integration of the photomontage of the facade with the CAD floor plans.

Figure 10.

Result of the 3D modeling of the Ferrándiz building.

Figure 11.

Examples of photographs taken of the decorative elements of the square.

Figure 12.

Result of the modeling of the Carbonell Building, along with the decorative elements.

Figure 13.

Detail of the cropped images used to texture the 3D models.

Figure 14.

Diagram of maps and materials used: Standard (Legacy) and Multi/Sub-Object.

Figure 15.

Final view of the modeling of part of the architectural complex of the Alcoi campus. The two delimitation planes, the zeppelin, and the spaces for the tarpaulins bearing the graphic image of the 50th anniversary can be distinguished.

Figure 16.

Detail of the Quest component. It has been configured to start the missions automatically when the user enters the scene and to play them in the correct order, and a celebration has been set up for when the missions are completed (Celebrate On Complete).

Figure 17.

Trigger collision element located inside the illuminated cylinder. When the avatar enters the cylinder, the trigger starts the animation in which the commemorative tarpaulins unfold on the facades.

Figure 18.

Detail of one of the Trigger Events located at one of the doors of the Carbonell building. Once the objective of discovering the 3 doors has been completed, the final mission is unlocked.

Figure 19.

Detail of the stage with the lectern and the rear screen. The user must get on stage to complete the mission.

Figure 20.

Final result of the scene in Unity, with all components inserted. In the planter, the Seat Hotspot component can be seen, represented by an armchair.

Figure 21.

Spatial’s Creator Toolkit menu in Unity, with the configuration sections and the publish icon.

Figure 22.

View of the avatar’s starting point on the stage. You can see the detail of the zeppelin with the 50th anniversary logo, as well as the illuminated cylinder that activates the appearance of the flags.

Figure 23.

Detail of the online content creation menu in Spatial, where we can select our Empty Frame.

Figure 24.

The equation that defines the SUS score.

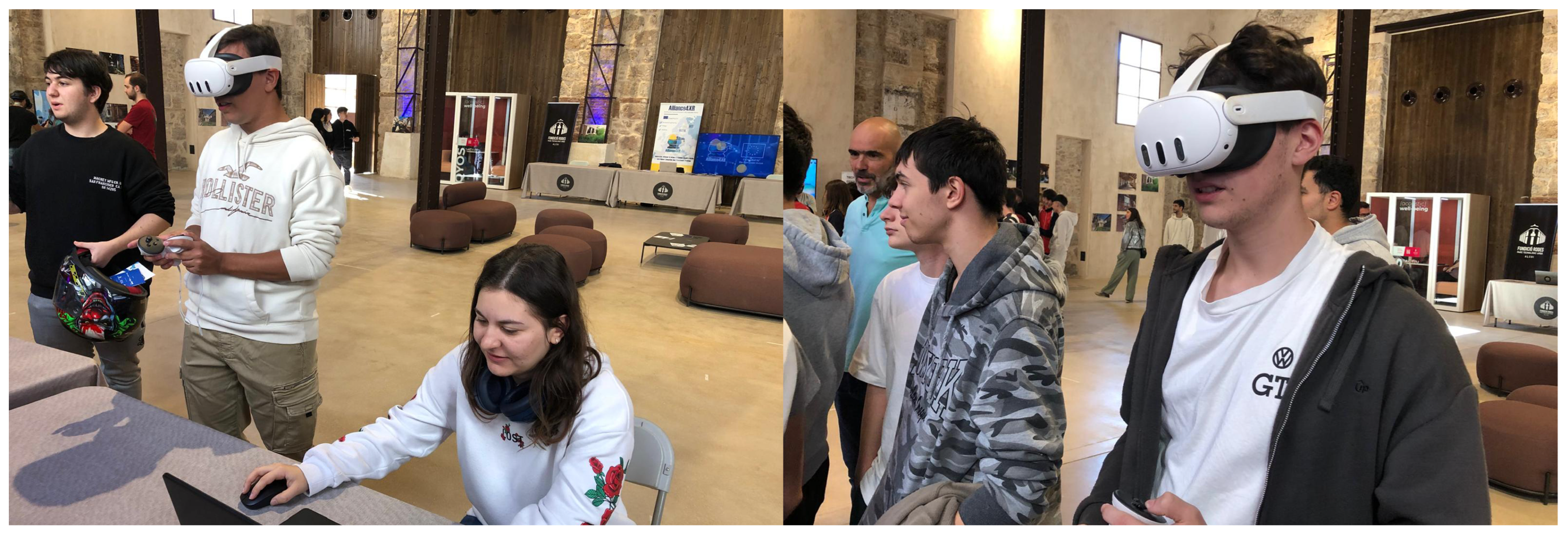

Figure 25.

Design Degree students testing the designed application.

Figure 26.

View of the digital twin from the door of the Carbonell Building.

Figure 27.

Detail of how the avatar can sit and contemplate the entire square.

Figure 28.

At this point, the avatar completes the first mission and the tarpaulins are deployed on the facades.

Figure 29.

Detail of the celebration when the avatar completes the 3 missions.

Figure 30.

Results of the Igroup Presence Questionnaire. In the left graph, the obtained data are represented with red dots, the blue bars represent the mean, and the standard deviation is represented with vertical black lines. In the graph on the right, the results of the subscales, their mean, and their standard deviation are represented.

Figure 31.

Results obtained in the SUS questionnaire: data (red dots), mean (blue bars), and standard deviation (vertical black lines).

Figure 32.

Students, teachers, and attendees testing and viewing the application.

Figure 33.

Detail of the graphics introduced to help the user better understand the operation of the controls, to improve navigability.

Figure 34.

Appearance of Meta’s new AR glasses. You can see the size reduction compared to the Meta Quest 3.

Table 1.

Description of the 14 IPQ items.

| Number | Question |
|---|---|
| IPQ1 | In the computer generated world I had a sense of “being there”. |
| IPQ2 | Somehow I felt that the virtual world surrounded me. |
| IPQ3 | I felt like I was just perceiving pictures. |
| IPQ4 | I did not feel present in the virtual space. |
| IPQ5 | I had a sense of acting in the virtual space, rather than operating something from outside. |
| IPQ6 | I felt present in the virtual space. |
| IPQ7 | How aware were you of the real world surrounding while navigating in the virtual world (i.e., sounds, room temperature, other people, etc.)? |
| IPQ8 | I was not aware of my real environment. |
| IPQ9 | I still paid attention to the real environment. |
| IPQ10 | I was completely captivated by the virtual world. |
| IPQ11 | How real did the virtual environment seem to you? |
| IPQ12 | How much did your experience in the virtual environment seem consistent with your real world experience? |
| IPQ13 | How real did the virtual world seem to you? |
| IPQ14 | The virtual world seemed more realistic than the real world. |

Table 2.

Description of the SUS test questions.

| Number | Question |
|---|---|
| SUS1 | I think that I would like to use this system frequently. |
| SUS2 | I found the system unnecessarily complex. |
| SUS3 | I thought the system was easy to use. |
| SUS4 | I think that I would need the support of a technical person to be able to use this system. |
| SUS5 | I found the various functions in this system were well integrated. |
| SUS6 | I thought there was too much inconsistency in this system. |
| SUS7 | I would imagine that most people would learn to use this system very quickly. |
| SUS8 | I found the system very cumbersome to use. |
| SUS9 | I felt very confident using the system. |
| SUS10 | I needed to learn a lot of things before I could get going with this system. |

Table 3.

Questionnaire presented to the attendees of the 50th anniversary gala.

| Number | Type | Description of the question |
|---|---|---|
| 1 | Qualitative | How has your navigation and interaction experience been? |
| 2 | Qualitative | Did you find it easy to complete the missions? |
| 3 | Qualitative | Has the help from the guide and the ability to connect with other users been useful to you? |
| 4 | Qualitative | Were you able to complete the missions without external help? |
| 5 | Qualitative | What did you think of the application of the Digital Twin? |
| 6 | Quantitative | Did you find the experience useful? |
| 7 | Quantitative | Would you recommend the experience? |