Submitted: 15 December 2025

Posted: 17 December 2025


Abstract

Keywords:

1. Introduction

- RQ1: What are the key technological advances that have driven progress in the aforementioned video research fields?

- RQ2: What are the current challenges and best practices in evaluating T2V models, and how do benchmark datasets support the development of robust text-to-video generators?

- RQ3: What are the primary technical challenges and future research directions in leveraging Generative AI and LLMs for text-to-video generation?

2. Background

2.1. Large Language Models and Generative Architectures

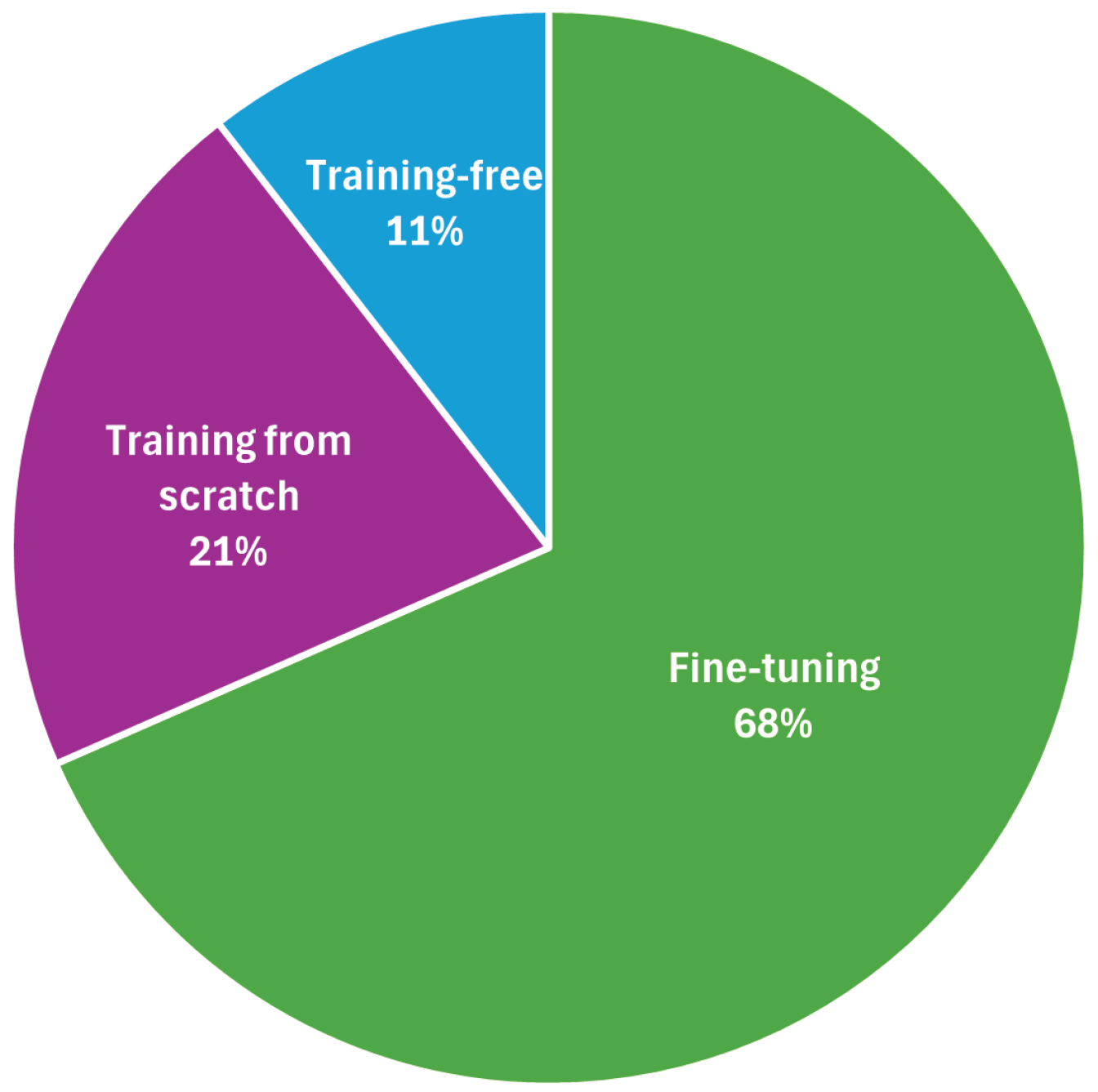

2.2. Pre-training and Transfer Learning Paradigms

- Training from Scratch: The model weights are randomly initialized, and all spatial and temporal patterns are learned entirely from large-scale paired video–text datasets. This method enables full flexibility when designing model architectures (e.g., spatiotemporal transformers or 3D convolutional networks) [14].

- Fine-Tuning: In this paradigm, the model is initialized with pre-trained weights and then adapted to the video domain through further training on paired video–text datasets. Fine-tuning involves updating the entire model or selectively updating specific modules (e.g., temporal transformers or attention layers). Techniques such as lightweight adapters, Low-Rank Adaptation (LoRA) layers [15], or partial-freeze schedules [16] (e.g., freezing the text encoder and early spatial layers while updating temporal transformer blocks) are often used to reduce computational cost.
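The cost difference between the two paradigms can be made concrete by counting trainable parameters. The sketch below is illustrative only: the module names, layer sizes, frozen prefixes, and LoRA rank are hypothetical and are not taken from any surveyed model. It assumes the usual LoRA parameterization, in which a frozen weight matrix of shape (d_out, d_in) receives a trainable update B·A with A of shape (r, d_in) and B of shape (d_out, r).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Linear:
    """Stand-in for a dense layer; only its shape matters here."""
    name: str
    d_in: int
    d_out: int

    @property
    def n_params(self) -> int:
        # Weight matrix only; biases are ignored for simplicity.
        return self.d_in * self.d_out


def lora_params(layer: Linear, rank: int) -> int:
    """Trainable parameters added by a rank-r LoRA update B @ A."""
    # A has shape (rank, d_in) and B has shape (d_out, rank).
    return rank * (layer.d_in + layer.d_out)


def trainable_params(layers, frozen_prefixes, lora_rank=None):
    """Count trainable parameters under a partial-freeze schedule.

    Layers whose name starts with a frozen prefix contribute nothing.
    The remaining layers are fully trained, or, when lora_rank is given,
    keep their base weights frozen and train only the LoRA factors.
    """
    total = 0
    for layer in layers:
        if layer.name.startswith(tuple(frozen_prefixes)):
            continue
        total += lora_params(layer, lora_rank) if lora_rank else layer.n_params
    return total


# Hypothetical module inventory; names and sizes are illustrative only.
model = [
    Linear("text_encoder.proj", 768, 768),
    Linear("spatial.early.attn", 320, 320),
    Linear("temporal.block0.attn", 320, 320),
    Linear("temporal.block1.attn", 640, 640),
]
frozen = ["text_encoder.", "spatial.early."]

full = trainable_params(model, frozen_prefixes=[])
partial = trainable_params(model, frozen_prefixes=frozen)
lora = trainable_params(model, frozen_prefixes=frozen, lora_rank=4)
print(full, partial, lora)  # 1204224 512000 7680
```

In this toy inventory, freezing the text encoder and early spatial layer removes more than half of the trainable weights, and a rank-4 LoRA on the temporal blocks reduces the count by roughly two further orders of magnitude.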

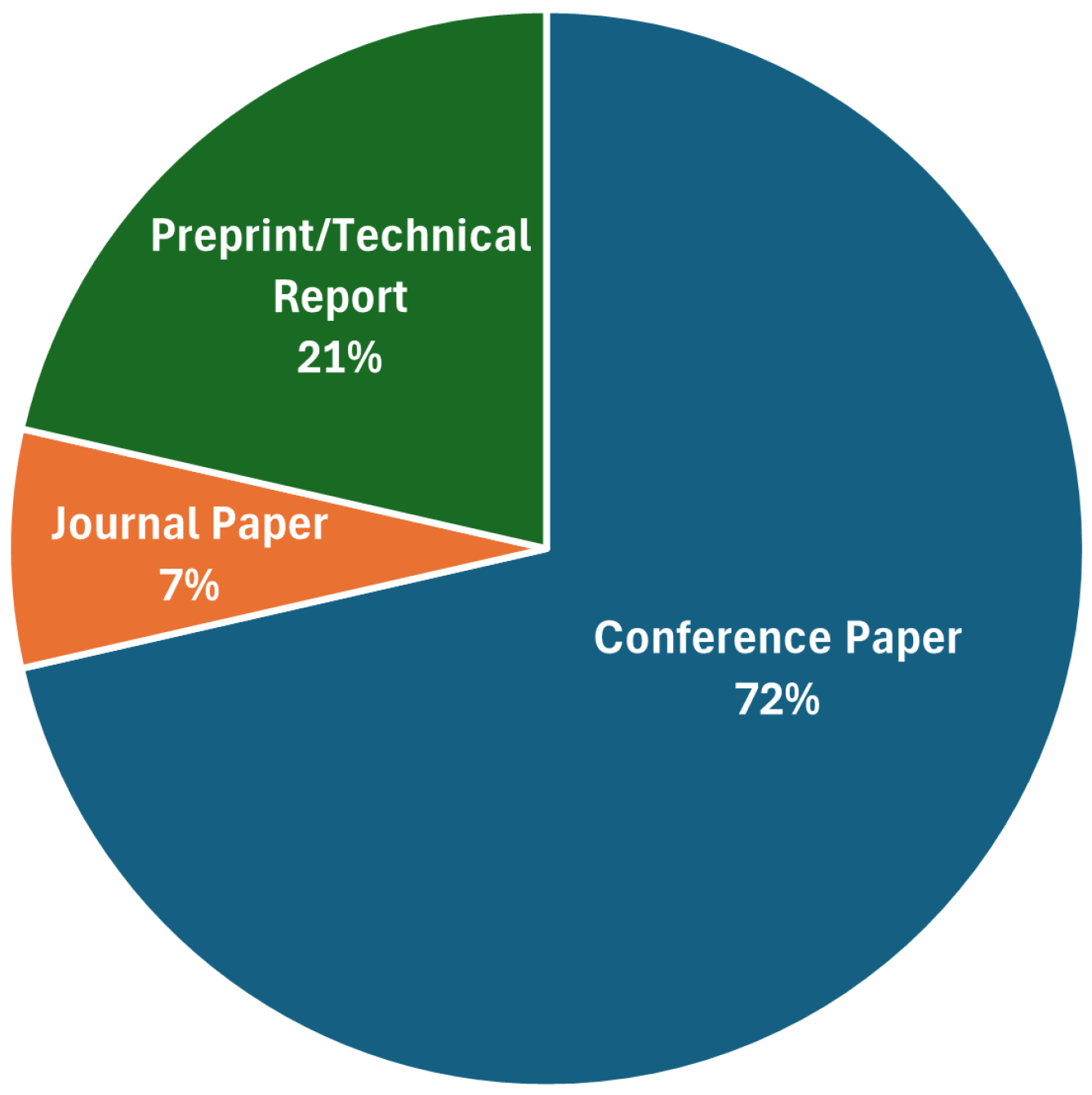

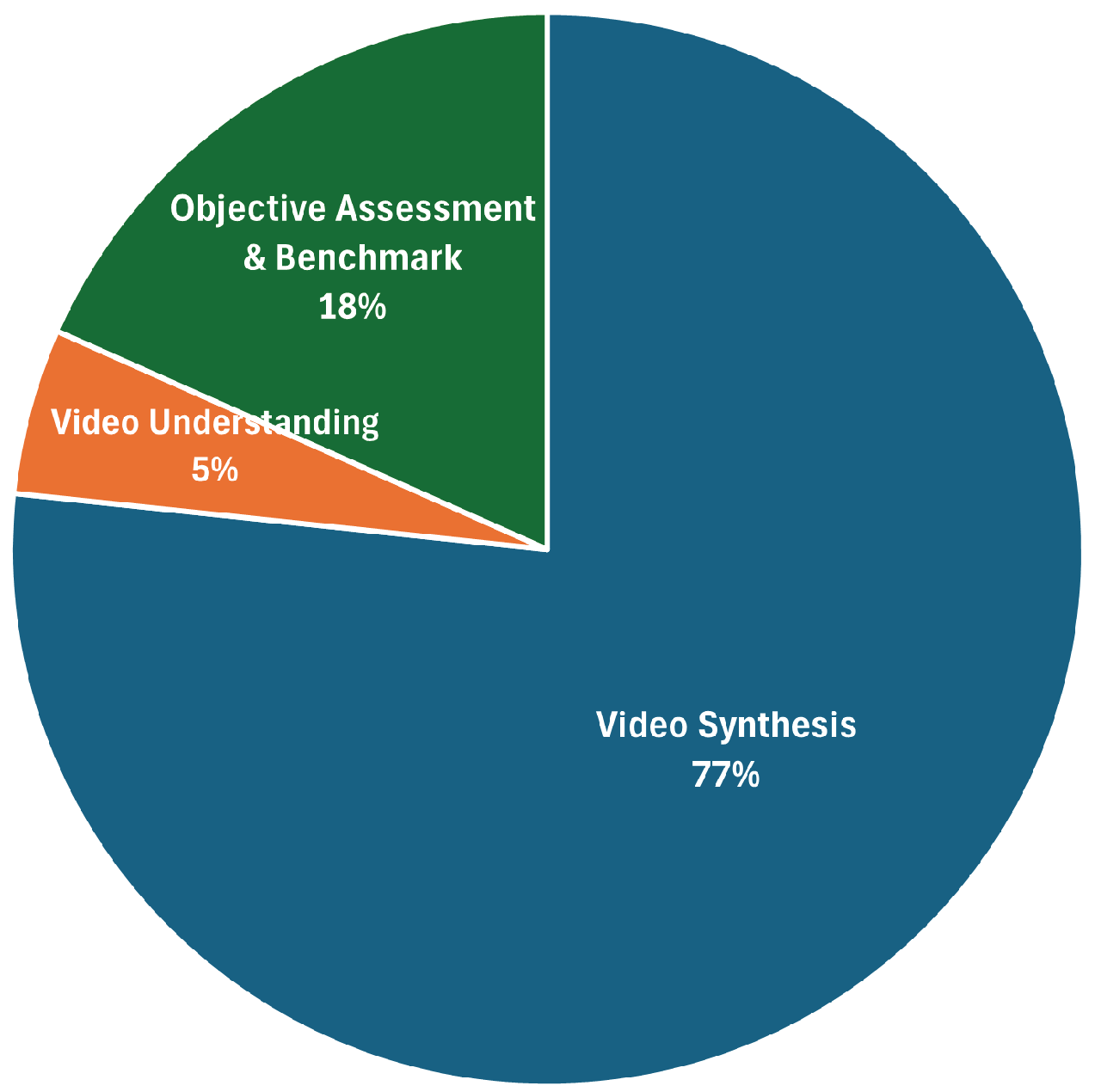

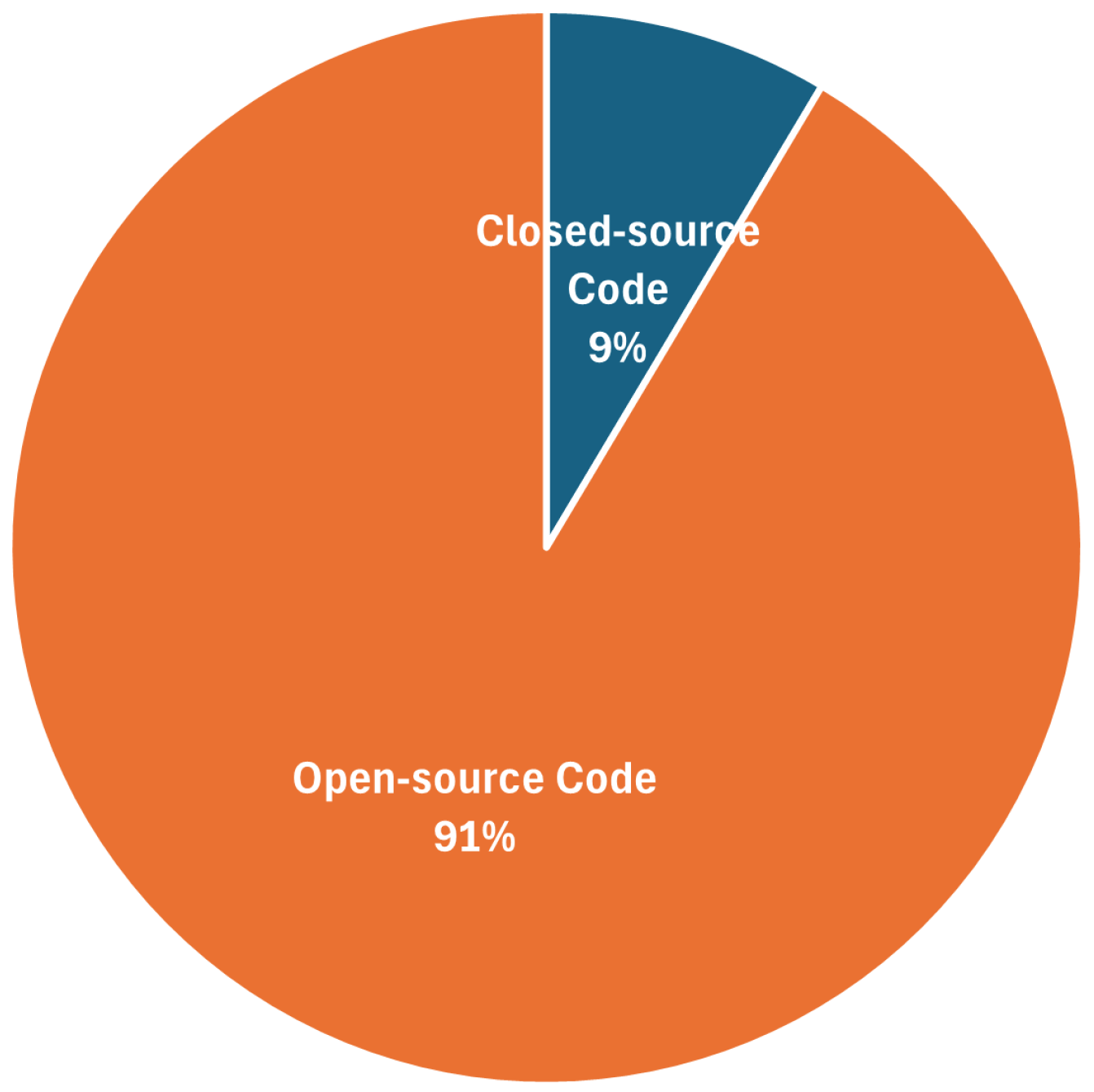

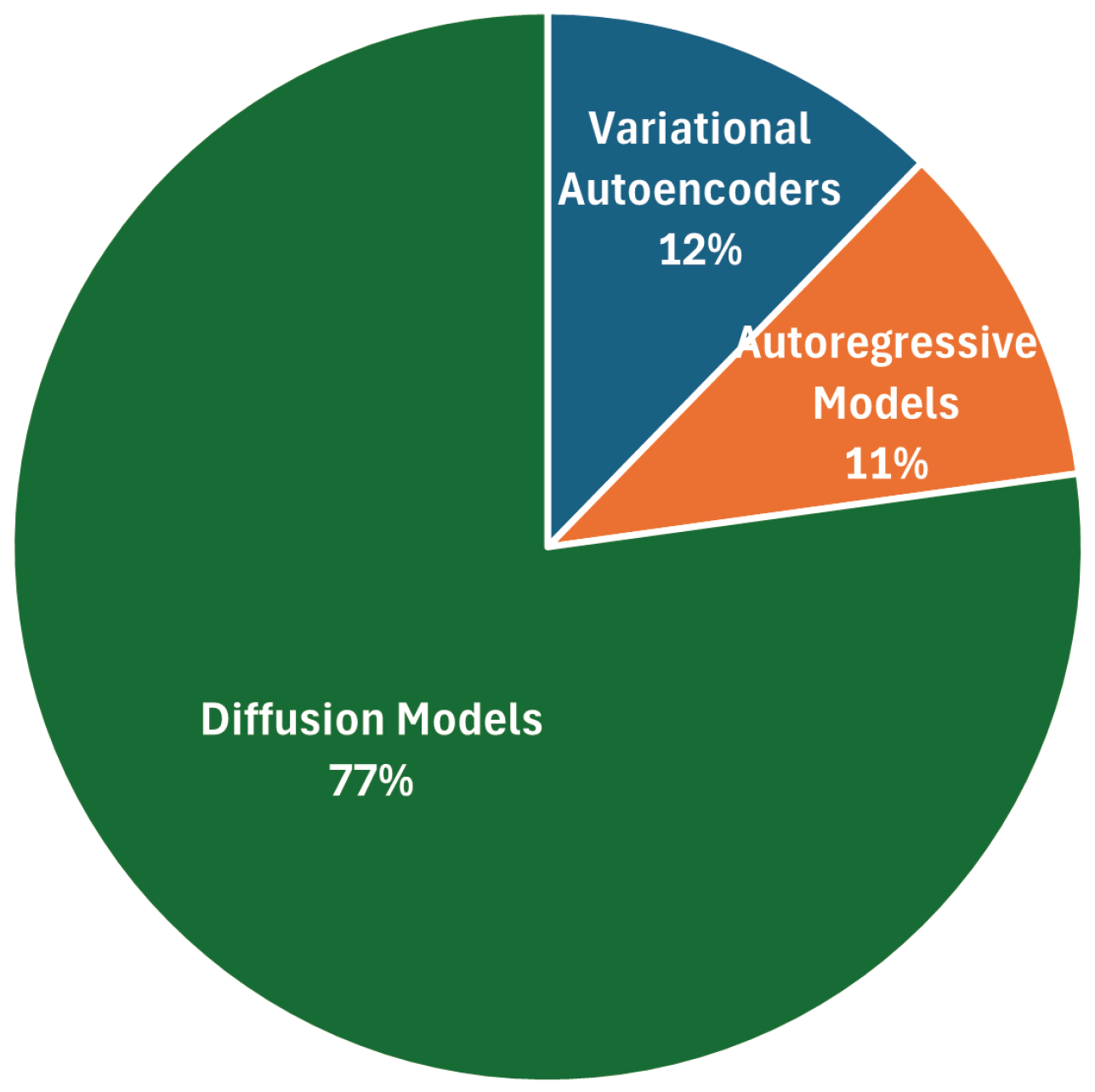

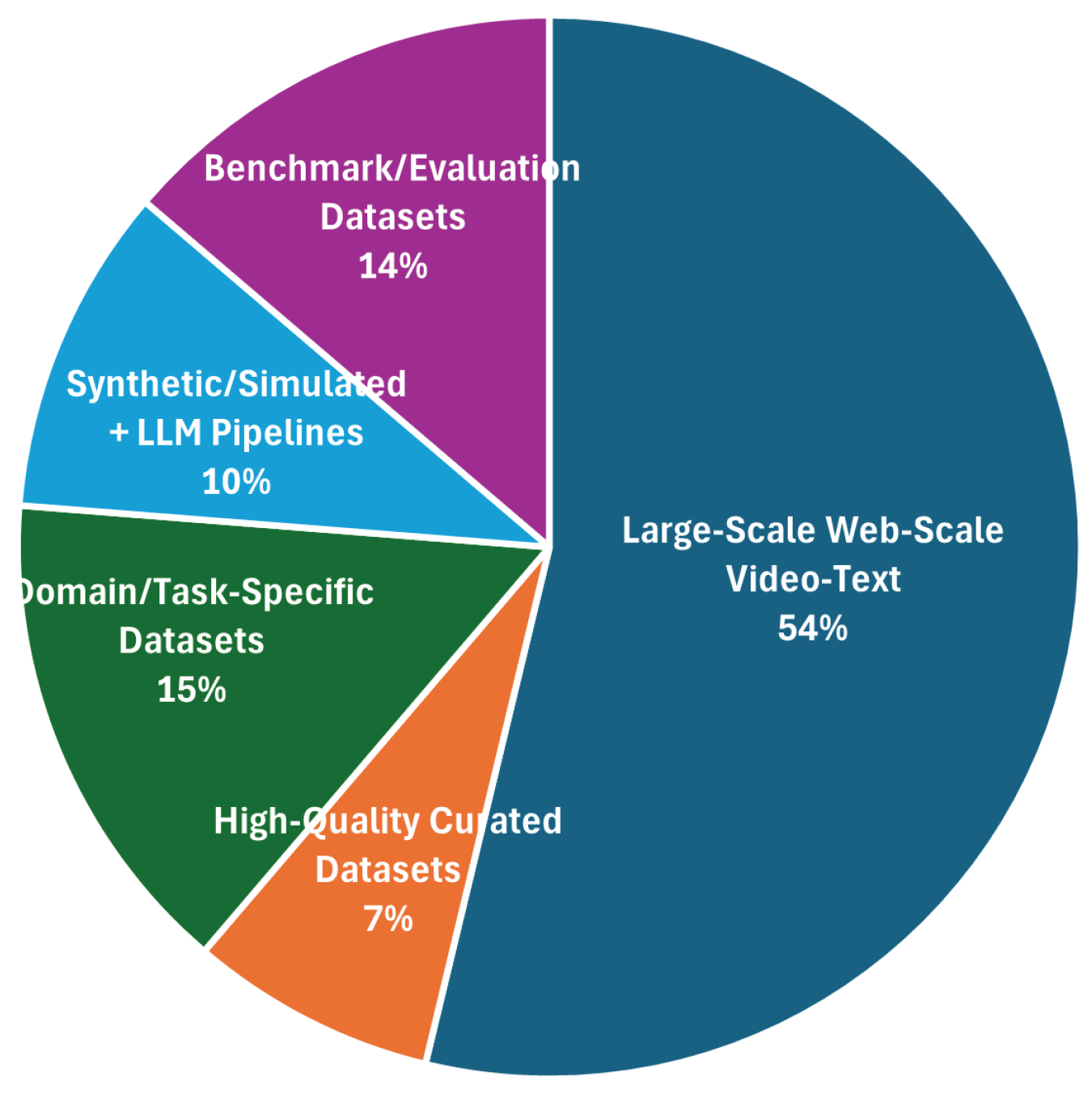

3. Bibliometric Analysis

4. Literature Review

4.1. Video Synthesis

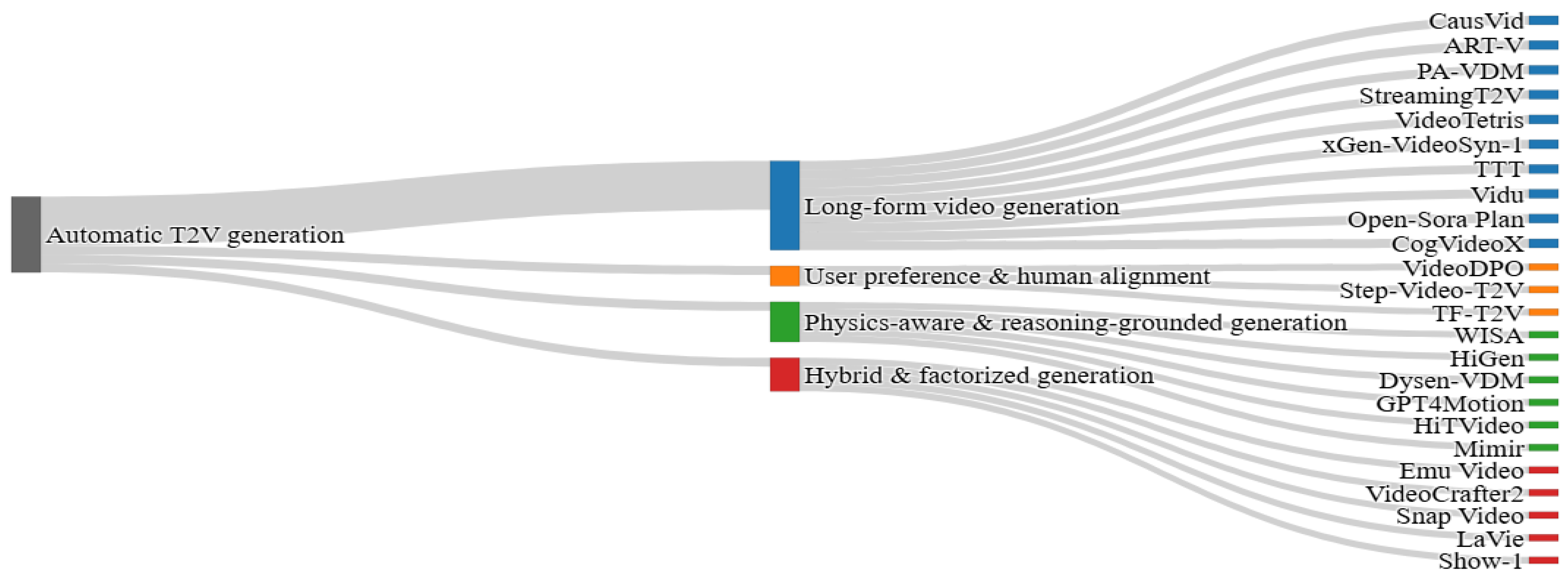

4.1.1. Automatic T2V Generation

- a) Long-form video generation

- b) User preference & human alignment

- c) Physics-aware & reasoning-grounded generation

- d) Hybrid & factorized generation

| Reference | Model Architecture | Methods | Training Strategy | Training Dataset | Project Code |
|---|---|---|---|---|---|
| Girdhar et al. | Two-stage factorized generation: frozen T2I image + latent video diffusion with temporal layers & image conditioning | Factorized conditioning + tuned noise schedules + classifier-free guidance with separate image/text weights | Frozen T2I init + multi-stage training: 256px image-conditioned → 512px zero terminal-SNR → high-motion subset fine-tune (1.6K clips) | 34M licensed text-video pairs (unnamed) | https://emu-video.metademolab.com |
| Chen et al. | Stable Diffusion backbone + factorized 3D U-Net temporal modules + separate spatial & temporal modules | Disentangled spatial/temporal training + partial temporal tuning + LoRA fine-tuning + frame rate conditioning | Temporal modules trained on low-quality videos → spatial modules trained on high-quality images → separate fine-tuning | WebVid-10M (low-quality videos) + JDB (Midjourney-synthesized high-quality images) | https://github.com/AILab-CVC/VideoCrafter |
| Menapace et al. | Spatiotemporal transformer (FIT) + extended EDM diffusion for video | Joint modeling of spatial and temporal redundancies | Two-stage training: pre-training on lower-res videos → fine-tuning on high-res videos | Large-scale video-text datasets (unnamed) | https://snap-research.github.io/snapvideo |
| Wang et al. | Cascaded latent diffusion models + temporal self-attention + rotary positional encoding | Latent diffusion + temporal self-attention for frame coherence + temporal interpolation in latent space | Joint image-video fine-tuning + cascaded training | Curated Vimeo25M dataset with 25 million text-video pairs | https://github.com/Vchitect/LaVie |
| Zhang et al. | Two-stage hybrid: pixel-based diffusion for low-res generation + latent diffusion for high-res upscaling | Hybrid pixel-latent diffusion pipeline + expert translation module for super-resolution | Multi-stage training (keyframe generation → frame interpolation → SR → expert fine-tuning) | WebVid-10M | https://github.com/showlab/Show-1 |
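The table lists classifier-free guidance with separate image and text weights but does not spell out the combination rule. One common two-condition form, shown here as an assumption rather than as the exact formulation of any model in the table, extrapolates first from the unconditional prediction toward the image-conditioned one, and then from the image-conditioned prediction toward the fully conditioned one. The function and argument names are hypothetical.

```python
def guided_noise(eps_uncond, eps_image, eps_full, w_image, w_text):
    """Combine three noise predictions using separate guidance weights.

    eps_uncond: prediction with neither image nor text condition
    eps_image:  prediction with the image condition only
    eps_full:   prediction with both image and text conditions
    All three are flat sequences of floats of equal length.
    """
    return [
        u + w_image * (i - u) + w_text * (f - i)
        for u, i, f in zip(eps_uncond, eps_image, eps_full)
    ]


# Toy predictions for a two-element latent (values chosen for illustration).
eps_u = [0.0, 1.0]
eps_i = [0.5, 1.5]
eps_f = [1.0, 1.0]

# With both weights at 1 the result equals the fully conditioned prediction.
print(guided_noise(eps_u, eps_i, eps_f, 1.0, 1.0))  # [1.0, 1.0]
# Larger weights push further along each conditioning direction.
print(guided_noise(eps_u, eps_i, eps_f, 2.0, 4.0))  # [3.0, 0.0]
```

Keeping the two weights separate lets the strength of image conditioning be tuned independently of prompt adherence.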

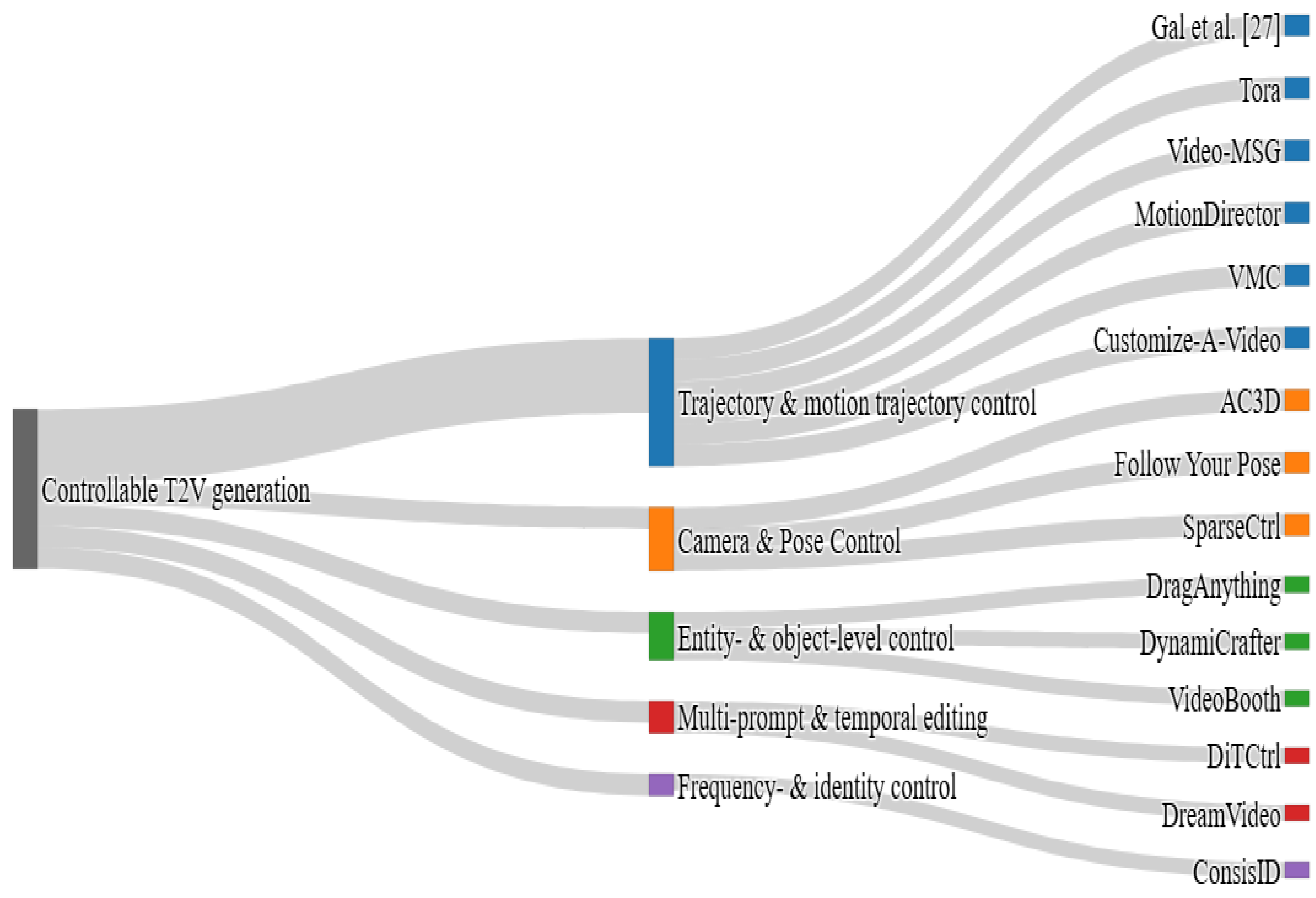

4.1.2. Controllable T2V Generation

- a) Trajectory & motion control

- b) Camera & pose control

- c) Entity- & object-level control

- d) Multi-prompt & temporal editing

- e) Frequency- & identity control

| Reference | Model Architecture | Methods | Training Strategy | Training Dataset | Project Code |
|---|---|---|---|---|---|
| Gal et al. | Lightweight network controlling sketch strokes + pretrained T2V motion prior with local deformation and global affine components | Score distillation sampling (SDS) loss + vector Bézier curve sketch representation | Optimization-based, no training or fine-tuning | None | https://github.com/yael-vinker/live_sketch |
| Zhang et al. | DiT backbone (OpenSora) with: Trajectory Extractor (3D VAE) + Spatial-Temporal DiT blocks + Motion-guidance Fuser | Trajectory-conditioned video generation + MGF hierarchical fusion + diffusion with text/visual conditions + alt. spatial-temporal attention | Training of 3D VAE on flow maps + Joint training of diffusion transformer and MGF on trajectory-annotated video-text data | 630,000 videos from: Panda-70M + Mixkit + Pexels + Internal sources | https://github.com/alibaba/Tora |
| Li et al. | Pretrained T2V diffusion model + pipeline of multimodal LLM planner | Background planning, foreground layout & trajectory planning + structured noise init. + MLLM/vision models | No training or fine-tuning | None | https://github.com/jialuli-luka/Video-MSG |
| Zhao et al. | Pretrained 3D U-Net T2V diffusion backbone + dual-path spatial & temporal LoRA modules | Motion customization via decoupled LoRA tuning + appearance-debiased temporal loss + Temporal Attention Purification | Fine-tune spatial LoRAs on single frames + temporal LoRAs on multiple frames + backbone frozen | UCF Sports Action | https://github.com/showlab/MotionDirector |
| Jeong et al. | Pretrained cascaded VDM backbone + adapted temporal attention layers | Motion distillation via residual latent frame vectors + appearance-invariant prompt transformation | Parameter-efficient fine-tuning on temporal attention layers only + motion distillation loss + frozen backbone | Few, short videos | https://github.com/HyeonHo99/Video-Motion-Customization |
| Ren et al. | Pretrained T2V diffusion backbone + LoRA modules on temporal attention layers + appearance absorbers | One-shot motion customization from single video + appearance absorption before motion adaptation + LoRA tuning for temporal attention | Parameter-efficient LoRA fine-tuning + two-stage training (appearance absorber training → motion LoRA tuning) | Few, short videos | https://github.com/customize-a-video/customize-a-video |
| Bahmani et al. | Transformer-based diffusion backbone (VDiT/VD3D) + Plücker coordinate-based camera pose encoding + lightweight DiT-XS blocks | Motion spectral analysis + layer-specific camera knowledge probing + truncated normal noise schedule + feedback connections | Training with camera conditioning only in early transformer layers + standard diffusion denoising loss + truncated normal noise | Curated 20,000 video-text pairs from RealEstate10K | https://github.com/snap-research/ac3d |
| Ma et al. | Zero-initialized convolutional pose encoder + Pretrained text-to-image diffusion backbone + temporal & cross-frame self-attention blocks | Learnable temporal attention for motion coherence + preservation of pretrained T2I’s editing ability | Two-stage training: training on image-pose pairs → finetuning on pose-free videos + minimal tuning of pretrained backbone | Curated 20,000 video-text pairs from RealEstate10K | https://github.com/mayuelala/FollowYourPose |
| Guo et al. | Condition encoder (shared backbone + modality heads) + frozen diffusion T2V model (AnimateDiff) | Sparse temporal control with condition propagation + masking-based sparsity simulation + purging noised ControlNet inputs + multimodal control support | Training of encoder only + freezing T2V backbone | WebVid-10M | https://github.com/guoyww/AnimateDiff |
| Wu et al. | Stable Video Diffusion backbone (3D U-Net) + entity representation + conditional denoising autoencoder | Segmentation tool (SAM) + 2D Gaussian creation + user trajectory input + Co-Tracker for trajectories | Supervised training with MSE loss focused on entity regions | VIPSeg | https://github.com/showlab/DragAnything |
| Xing et al. | Pretrained T2V diffusion backbone + CLIP image encoder + query Transformer + gated fusion mechanism with image/text conditioning | Dual-stream image injection (text-aligned context + visual detail guidance) + generative frame interpolation + looping videos | Three-stage training: training the image context network → adapting with T2V → joint fine-tuning with VDG | WebVid-10M | https://github.com/Doubiiu/DynamiCrafter |
| Jiang et al. | Pretrained T2V diffusion backbone + CLIP image encoder + attention injection module + cross-frame and temporal attention layers | Hierarchical image prompt embedding + attention injection into cross-frame attention layers + conditioning on text and image jointly | Two-stage coarse-to-fine training: training MLP encoder → training attention injection module | WebVid-10M | https://github.com/Vchitect/VideoBooth |
| Cai et al. | Multi-Modal Diffusion Transformer (MM-DiT) backbone with 3D full attention | Mask-guided attention sharing + latent blending + prompt token reweighting | No training or fine-tuning | None | https://github.com/TencentARC/DiTCtrl |
| Wei et al. | Pretrained video diffusion backbone U-Net + image retention branch + convolutional image feature extractor | Image-to-video generation + low-level image feature concatenation + double-condition guidance | Two-stage training: training of the identity adapter → fine-tuning of the motion adapter | Pexels 300K | https://github.com/ali-vilab/Vgen |
| Yuan et al. | Diffusion Transformer (DiT) backbone + global facial extractor + local facial extractor | Frequency-aware identity control with LF/HF features + dynamic mask loss (face) + dynamic cross-face loss | Hierarchical frequency-aware training + joint optimization of facial extractors with DiT backbone | Large-scale human face video datasets (unnamed) | https://github.com/PKU-YuanGroup/ConsisID |
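The Plücker coordinate-based camera pose encoding mentioned in the table can be illustrated with the standard definition of a Plücker ray: for a ray with origin o (the camera center) and unit direction d, the 6-D embedding is (o × d, d). The sketch below assumes a pinhole camera and a camera-to-world rotation; the function names, argument layout, and the ordering of moment before direction are illustrative choices and may differ from the conventions of any specific surveyed method.

```python
import math


def cross(a, b):
    """Cross product of two 3-vectors."""
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def normalize(v):
    """Scale a 3-vector to unit length."""
    n = math.sqrt(sum(x * x for x in v))
    return tuple(x / n for x in v)


def plucker_ray(pixel, focal, principal, rotation, center):
    """Return the 6-D Plücker embedding (o x d, d) of one pixel's ray.

    pixel:     (u, v) pixel coordinates
    focal:     (fx, fy) focal lengths in pixels
    principal: (cx, cy) principal point in pixels
    rotation:  3x3 camera-to-world rotation as nested row-major lists
    center:    camera center o in world coordinates
    """
    u, v = pixel
    fx, fy = focal
    cx, cy = principal
    # Back-project the pixel to a direction in camera coordinates.
    d_cam = ((u - cx) / fx, (v - cy) / fy, 1.0)
    # Rotate the direction into world coordinates and normalize it.
    d_world = tuple(
        sum(rotation[r][c] * d_cam[c] for c in range(3)) for r in range(3)
    )
    d = normalize(d_world)
    # The moment o x d encodes where the ray sits in space.
    moment = cross(center, d)
    return moment + d


identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
emb = plucker_ray((32.0, 32.0), (50.0, 50.0), (32.0, 32.0), identity, (1.0, 0.0, 0.0))
print(emb)  # (0.0, -1.0, 0.0, 0.0, 0.0, 1.0)
```

Evaluating this function for every pixel of every frame yields a per-pixel, per-frame conditioning map with six channels, which is the kind of dense signal a camera-conditioned diffusion backbone can consume.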

4.1.3. Video Style Transfer & Editing

4.1.4. Video Quality & Inference Enhancement

4.2. Video Understanding

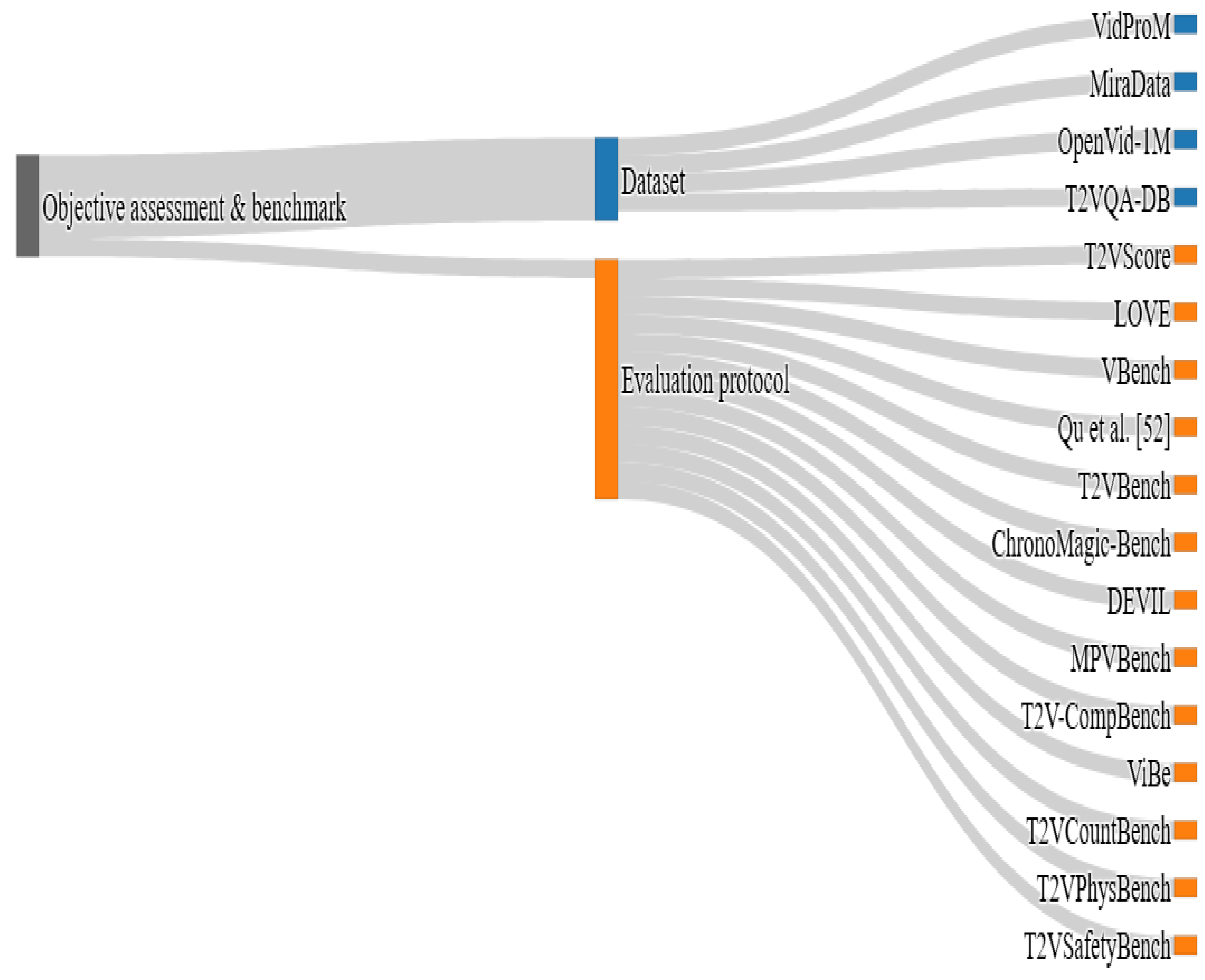

4.3. Objective Assessment & Benchmark

4.3.1. Dataset

4.3.2. Evaluation Protocol

5. Discussion

- RQ1: What are the key technological advances that have driven progress in the aforementioned video research fields?

- RQ2: What are the current challenges and best practices in evaluating T2V models, and how do benchmark datasets support the development of robust text-to-video generators?

- RQ3: What are the primary technical challenges and future research directions in leveraging Generative AI and LLMs for text-to-video generation?

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| T2V | Text-to-Video |
| DiT | Diffusion Transformer |
| VAE | Variational Autoencoders |
| SVG | Stochastic Video Generation |
| LLM | Large Language Models |
| LVLM | Large Video-Language Models |
| VDM | Video Diffusion Models |
| VPN | Video Pixel Networks |
| LSTM | Long Short-Term Memory |
| LoRA | Low-Rank Adaptation |
| U-ViT | U-shaped Vision Transformer |
| AdaLN | Adaptive LayerNorm |
| DPO | Direct Preference Optimization |
| FID | Fréchet Inception Distance |
| IS | Inception Score |

References

- Brooks, T.; Peebles, B.; Holmes, C.; DePue, W.; Guo, Y.; Jing, L.; Schnurr, D.; Taylor, J.; Luhman, T.; Luhman, E.; et al. Video Generation Models as World Simulators. 2024. Available online: https://openai.com/index/video-generation-models-as-world-simulators/.

- Kalchbrenner, N.; van den Oord, A.; Simonyan, K.; Danihelka, I.; Vinyals, O.; Graves, A.; Kavukcuoglu, K. Video Pixel Networks. In Proceedings of the 34th International Conference on Machine Learning (ICML). PMLR, 2017; Vol. 70, pp. 1771–1779.

- Denton, E.; Fergus, R. Stochastic Video Generation with a Learned Prior. In Proceedings of the 35th International Conference on Machine Learning (ICML). PMLR, 2018; Vol. 80, pp. 1174–1183.

- OpenAI. GPT-4 Technical Report. 2023.

- Touvron, H.; Lavril, T.; Izacard, G.; Martinet, X.; Lachaux, M.A.; Lacroix, T.; Rozière, B.; Goyal, N.; Hambro, E.; Azhar, F.; et al. LLaMA: Open and Efficient Foundation Language Models. arXiv 2023, arXiv:2302.13971.

- Touvron, H.; Martin, L.; Stone, K.; Albert, P.; Almahairi, A.; Babaei, Y.; Bashlykov, N.; Batra, S.; Bikel, D.; Blecher, L.; et al. Llama 2: Open Foundation and Fine-Tuned Chat Models. arXiv 2023, arXiv:2307.09288.

- Lin, B.; Zhu, B.; Ye, Y.; Ning, M.; Jin, P.; Yuan, L. Video-LLaVA: Learning United Visual Representation by Alignment Before Projection. arXiv 2023, arXiv:2311.10122.

- OpenAI. GPT-4o System Card. 2024. Available online: https://openai.com/index/gpt-4o-system-card/.

- Ho, J.; Salimans, T.; Gritsenko, A.; Chan, W.; Norouzi, M.; Fleet, D.J. Video Diffusion Models. arXiv 2022, arXiv:2204.03458.

- van den Oord, A.; Kalchbrenner, N.; Vinyals, O.; Espeholt, L.; Graves, A.; Kavukcuoglu, K. Conditional Image Generation with PixelCNN Decoders. arXiv 2016, arXiv:1606.05328.

- Schuhmann, C.; Beaumont, R.; Vencu, R.; Gordon, C.; Wightman, R.; Cherti, M.; Coombes, T.; Kaczmarczyk, R.; Schaeffer, K.; Shah, S.A.; et al. LAION-5B: An open large-scale dataset for training next generation image-text models. In Proceedings of the NeurIPS Datasets and Benchmarks Track, 2022.

- Chen, T.S.; Siarohin, A.; Menapace, W.; Deyneka, E.; Chao, H.W.; Jeon, B.E.; Fang, Y.; Lee, H.Y.; Ren, J.; Yang, M.H.; et al. Panda-70M: Captioning 70M Videos with Multiple Cross-Modality Teachers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2024; pp. 13320–13331.

- Bain, M.; Nagrani, A.; Varol, G.; Zisserman, A. Frozen in Time: A Joint Video and Image Encoder for End-to-End Retrieval. arXiv 2021, arXiv:2104.00650.

- Bain, M.; Nagrani, A.; Varol, G.; Zisserman, A. Frozen in Time: A Joint Video and Image Encoder for End-to-End Retrieval. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), 2021; pp. 1728–1738.

- Hu, E.J.; Shen, Y.; Wallis, P.; Allen-Zhu, Z.; Li, Y.; Wang, S.; Wang, L.; Chen, W. LoRA: Low-Rank Adaptation of Large Language Models. arXiv 2021, arXiv:2106.09685.

- Liu, Y.; Agarwal, S.; Venkataraman, S. AutoFreeze: Automatically Freezing Model Blocks to Accelerate Fine-tuning. arXiv 2021, arXiv:2102.01386.

- Singer, U.; Polyak, A.; Hayes, T.; Yin, X.; An, J.; Zhang, S.; Hu, Q.; Yang, H.; Ashual, O.; Gafni, O.; et al. Make-A-Video: Text-to-Video Generation without Text-Video Data. arXiv 2022, arXiv:2209.14792.

- Bahmani, S.; Skorokhodov, I.; Qian, G.; Siarohin, A.; Menapace, W.; Tagliasacchi, A.; Lindell, D.B.; Tulyakov, S. AC3D: Analyzing and Improving 3D Camera Control in Video Diffusion Transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2025.

- Bao, F.; Xiang, C.; Yue, G.; He, G.; Zhu, H.; Zheng, K.; Zhao, M.; Liu, S.; Wang, Y.; Jun, Z. Vidu: A Highly Consistent, Dynamic and Skilled Text-to-Video Generator with Diffusion Models. arXiv 2024, arXiv:2405.04233.

- Cai, M.; Cun, X.; Li, X.; Liu, W.; Zhang, Z.; Zhang, Y.; Shan, Y.; Yue, X. DiTCtrl: Exploring Attention Control in Multi-Modal Diffusion Transformer for Tuning-Free Multi-Prompt Longer Video Generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 2025; pp. 7763–7772.

- Chen, L.; Wei, X.; Li, J.; Dong, X.; Zhang, P.; Zang, Y.; Chen, Z.; Duan, H.; Lin, B.; Tang, Z.; et al. ShareGPT4Video: Improving Video Understanding and Generation with Better Captions. In Proceedings of the Advances in Neural Information Processing Systems 37 (NeurIPS 2024) Datasets and Benchmarks Track, 2024.

- Chen, H.; Zhang, Y.; Cun, X.; Xia, M.; Wang, X.; Weng, C.; Shan, Y. VideoCrafter2: Overcoming Data Limitations for High-Quality Video Diffusion Models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 2024; pp. 7310–7320.

- Choi, M.; Sharan, S.P.; Goel, H.; Shah, S.; Chinchali, S. We’ll Fix it in Post: Improving Text-to-Video Generation with Neuro-Symbolic Feedback. arXiv 2025, arXiv:2504.17180.

- Cuttano, C.; Trivigno, G.; Rosi, G.; Masone, C.; Averta, G. SAMWISE: Infusing Wisdom in SAM2 for Text-Driven Video Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2025.

- Dalal, K.; Koceja, D.; Hussein, G.; Xu, J.; Zhao, Y.; Song, Y.; Han, S.; Cheung, K.C.; Kautz, J.; Guestrin, C.; et al. One-Minute Video Generation with Test-Time Training. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2025.

- Fei, H.; Wu, S.; Ji, W.; Zhang, H.; Chua, T.S. Dysen-VDM: Empowering Dynamics-aware Text-to-Video Diffusion with LLMs. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 2024; pp. 7641–7653.

- Gal, R.; Vinker, Y.; Alaluf, Y.; Bermano, A.H.; Cohen-Or, D.; Shamir, A.; Chechik, G. Breathing Life Into Sketches Using Text-to-Video Priors. arXiv 2023, arXiv:2311.13608.

- Girdhar, R.; Singh, M.; Brown, A.; Duval, Q.; Azadi, S.; Rambhatla, S.S.; Shah, A.; Yin, X.; Parikh, D.; Misra, I. Factorizing Text-to-Video Generation by Explicit Image Conditioning. In Proceedings of the European Conference on Computer Vision (ECCV), 2024.

- Guo, Y.; Yang, C.; Rao, A.; Agrawala, M.; Lin, D.; Dai, B. SparseCtrl: Adding Sparse Controls to Text-to-Video Diffusion Models. In Proceedings of the European Conference on Computer Vision (ECCV), 2024.

- Guo, X.; Huang, Z.; Huo, J.; Liang, Y.; Shi, Z.; Song, Z.; Zhang, J. Can You Count to Nine? A Human Evaluation Benchmark for Counting Limits in Modern Text-to-Video Models. arXiv 2025, arXiv:2504.04051.

- Guo, X.; Huo, J.; Shi, Z.; Song, Z.; Zhang, J.; Zhao, J. T2VPhysBench: A First-Principles Benchmark for Physical Consistency in Text-to-Video Generation. arXiv 2025, arXiv:2505.00337.

- Henschel, R.; Khachatryan, L.; Poghosyan, H.; Hayrapetyan, D.; Tadevosyan, V.; Wang, Z.; Navasardyan, S.; Shi, H. StreamingT2V: Consistent, Dynamic, and Extendable Long Video Generation from Text. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2025.

- Huang, Z.; He, Y.; Yu, J.; Zhang, F.; Si, C.; Jiang, Y.; Zhang, Y.; Wu, T.; Jin, Q.; Chanpaisit, N.; et al. VBench: Comprehensive Benchmark Suite for Video Generative Models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 2024.

- Jeong, H.; Park, G.Y.; Ye, J.C. VMC: Video Motion Customization using Temporal Attention Adaption for Text-to-Video Diffusion Models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 2024; pp. 9212–9221.

- Jiang, Y.; Wu, T.; Yang, S.; Si, C.; Lin, D.; Qiao, Y.; Loy, C.C.; Liu, Z. VideoBooth: Diffusion-based Video Generation with Image Prompts. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 2024; pp. 6689–6699.

- Ju, X.; Gao, Y.; Zhang, Z.; Yuan, Z.; Wang, X.; Zeng, A.; Xiong, Y.; Xu, Q.; Shan, Y. MiraData: A Large-Scale Video Dataset with Long Durations and Structured Captions. arXiv 2024, arXiv:cs.

- Kou, T.; Liu, X.; Zhang, Z.; Li, C.; Wu, H.; Min, X.; Zhai, G.; Liu, N. Subjective-Aligned Dataset and Metric for Text-to-Video Quality Assessment. In Proceedings of the 2024 International Conference on Machine Learning (ICML) Workshop or similar venue, 2024.

- Li, J.; Yu, S.; Lin, H.; Cho, J.; Yoon, J.; Bansal, M. PhyT2V: LLM-Guided Iterative Self-Refinement for Physics-Grounded Text-to-Video Generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, 2024.

- Li, J.; Yu, S.; Lin, H.; Cho, J.; Yoon, J.; Bansal, M. Training-free Guidance in Text-to-Video Generation via Multimodal Planning and Structured Noise Initialization. arXiv 2025, arXiv:2504.08641.

- Liao, M.; Lu, H.; Zhang, X.; Wan, F.; Wang, T.; Zhao, Y.; Zuo, W.; Ye, Q.; Wang, J. Evaluation of Text-to-Video Generation Models: A Dynamics Perspective. In Proceedings of the Advances in Neural Information Processing Systems 37 (NeurIPS 2024), 2024.

- Lin, B.; Ge, Y.; Cheng, X.; Li, Z.; Zhu, B.; Wang, S.; He, X.; Ye, Y.; Yuan, S.; Chen, L.; et al. Open-Sora Plan: Open-Source Large Video Generation Model. arXiv 2024, arXiv:2412.00131.

- Liu, R.; Wu, H.; Zheng, Z.; Wei, C.; He, Y.; Pi, R.; Chen, Q. VideoDPO: Omni-Preference Alignment for Video Diffusion Generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2025.

- Liu, F.; Zhang, S.; Wang, X.; Wei, Y.; Qiu, H.; Zhao, Y.; Zhang, Y.; Ye, Q.; Wan, F. Timestep Embedding Tells: It’s Time to Cache for Video Diffusion Model. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2025.

- Lv, J.; Huang, Y.; Yan, M.; Huang, J.; Liu, J.; Liu, Y.; Wen, Y.; Chen, X.; Chen, S. GPT4Motion: Scripting Physical Motions in Text-to-Video Generation via Blender-Oriented GPT Planning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, 2024.

- Ma, Y.; He, Y.; Cun, X.; Wang, X.; Chen, S.; Shan, Y.; Li, X.; Chen, Q. Follow Your Pose: Pose-Guided Text-to-Video Generation Using Pose-Free Videos. In Proceedings of the AAAI Conference on Artificial Intelligence (AAAI), 2024; Vol. 38, pp. 4117–4125.

- Menapace, W.; Siarohin, A.; Skorokhodov, I.; Deyneka, E.; Chen, T.S.; Kag, A.; Fang, Y.; Stoliar, A.; Ricci, E.; Ren, J.; et al. Snap Video: Scaled Spatiotemporal Transformers for Text-to-Video Synthesis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2024; pp. 16820–16830.

- Miao, Y.; Zhu, Y.; Dong, Y.; Yu, L.; Zhu, J.; Gao, X.S. T2VSafetyBench: Evaluating the Safety of Text-to-Video Generative Models. In Proceedings of the Advances in Neural Information Processing Systems 37 (NeurIPS 2024) Datasets and Benchmarks Track, 2024.

- Mohamed, A.A.; Lucke-Wold, B. Text-to-video generative artificial intelligence: Sora in neurosurgery. Neurosurgical Review 2024, 47, 272.

- Nan, K.; Xie, R.; Zhou, P.; Fan, T.; Yang, Z.; Chen, Z.; Li, X.; Yang, J.; Tai, Y. OpenVid-1M: A Large-Scale High-Quality Dataset for Text-to-Video Generation. In Proceedings of the International Conference on Learning Representations (ICLR), 2025.

- Qin, C.; Xia, C.; Ramakrishnan, K.; Ryoo, M.S.; Tu, L.; Feng, Y.; Shu, M.; Zhou, H.; Awadalla, A.; Wang, J.; et al. xGen-VideoSyn-1: High-Fidelity Text-to-Video Synthesis with Compressed Representations. arXiv 2024, arXiv:2408.12590.

- Qing, Z.; Zhang, S.; Wang, J.; Wang, X.; Wei, Y.; Zhang, Y.; Gao, C.; Sang, N. Hierarchical Spatio-temporal Decoupling for Text-to-Video Generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2024; pp. 6334–6344.

- Qu, B.; Liang, X.; Sun, S.; Gao, W. Exploring AIGC Video Quality: A Focus on Visual Harmony, Video-Text Consistency and Domain Distribution Gap. arXiv 2024, arXiv:2404.13573.

- Rawte, V.; Jain, S.; Sinha, A.; Kaushik, G.; Bansal, A.; Vishwanath, P.R.; Jain, S.R.; Reganti, A.N.; Jain, V.; Chadha, A.; et al. ViBe: A Text-to-Video Benchmark for Evaluating Hallucination in Large Multimodal Models. arXiv 2024, arXiv:2411.10867.

- Ren, Y.; Zhou, Y.; Yang, J.; Shi, J.; Liu, D.; Liu, F.; Kwon, M.; Shrivastava, A. Customize-A-Video: One-Shot Motion Customization of Text-to-Video Diffusion Models. In Proceedings of the European Conference on Computer Vision (ECCV), 2024.

- Sharan, S.P.; Choi, M.; Shah, S.; Goel, H.; Omama, M.; Chinchali, S. Neuro-Symbolic Evaluation of Text-to-Video Models using Formal Verification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2025.

- Si, C.; Huang, Z.; Jiang, Y.; Liu, Z. FreeU: Free Lunch in Diffusion U-Net. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2024; pp. 184–193.

- Tan, S.; Gong, B.; Feng, Y.; Zheng, K.; Zheng, D.; Shi, S.; Shen, Y.; Chen, J.; Yang, M. Mimir: Improving Video Diffusion Models for Precise Text Understanding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 2025; pp. 23978–23988.

- Tian, Y.; Yang, L.; Yang, H.; Gao, Y.; Deng, Y.; Chen, J.; Wang, X.; Yu, Z.; Tao, X.; Wan, P.; et al. VideoTetris: Towards Compositional Text-to-Video Generation. In Proceedings of the Advances in Neural Information Processing Systems 37 (NeurIPS 2024), 2024.

- Wang, X.; Zhang, S.; Yuan, H.; Qing, Z.; Gong, B.; Zhang, Y.; Shen, Y.; Gao, C.; Sang, N. A Recipe for Scaling up Text-to-Video Generation with Text-free Videos. arXiv 2023, arXiv:2312.15770.

- Wang, Y.; Chen, X.; Ma, X.; Zhou, S.; Huang, Z.; Wang, Y.; Yang, C.; He, Y.; Yu, J.; Yang, P.; et al. LaVie: High-Quality Video Generation with Cascaded Latent Diffusion Models. arXiv 2023, arXiv:2309.15103.

- Wang, W.; Yang, Y. VidProM: A Million-scale Real Prompt-Gallery Dataset for Text-to-Video Diffusion Models. In Proceedings of the Advances in Neural Information Processing Systems 37 (NeurIPS 2024) Datasets and Benchmarks Track, 2024.

- Sun, K.; Huang, K.; Liu, X.; Wu, Y.; Xu, Z.; Li, Z.; Liu, X. T2V-CompBench: A Comprehensive Benchmark for Compositional Text-to-Video Generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, 2025.

- Wang, J.; Duan, H.; Jia, Z.; Zhao, Y.; Yang, W.Y.; Zhang, Z.; Chen, Z.; Wang, J.; Xing, Y.; Zhai, G.; et al. LOVE: Benchmarking and Evaluating Text-to-Video Generation and Video-to-Text Interpretation. arXiv 2025, arXiv:2505.12098.

- Wang, J.; Duan, H.; Jia, Z.; Zhao, Y.; Yang, W.Y.; Zhang, Z.; Chen, Z.; Wang, J.; Xing, Y.; Zhai, G.; et al. T2VBench: Benchmarking Temporal Dynamics for Text-to-Video Generation. arXiv 2024. arXiv:2505.12098.

- Wang, J.; Ma, A.; Cao, K.; Zheng, J.; Zhang, Z.; Feng, J.; Liu, S.; Ma, Y.; Cheng, B.; Leng, D.; et al. WISA: World Simulator Assistant for Physics-Aware Text-to-Video Generation. arXiv 2025, arXiv:2503.08153.

- Wei, Y.; Zhang, S.; Qing, Z.; Yuan, H.; Liu, Z.; Liu, Y.; Zhang, Y.; Zhou, J.; Shan, H. DreamVideo: Composing Your Dream Videos with Customized Subject and Motion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 2024; pp. 6537–6549.

- Weng, W.; Feng, R.; Wang, Y.; Dai, Q.; Wang, C.; Yin, D.; Zhao, Z.; Qiu, K.; Bao, J.; Yuan, Y.; et al. ART•V: Auto-Regressive Text-to-Video Generation with Diffusion Models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), 2024.

- Wu, J.Z.; Fang, G.; Wu, H.; Wang, X.; Ge, Y.; Cun, X.; Zhang, D.J.; Liu, J.W.; Gu, Y.; Zhao, R.; et al. Towards A Better Metric for Text-to-Video Generation. arXiv 2024, arXiv:2401.07781.

- Wu, W.; Li, Z.; Gu, Y.; Zhao, R.; He, Y.; Zhang, D.J.; Shou, M.Z.; Li, Y.; Gao, T.; Zhang, D. DragAnything: Motion Control for Anything Using Entity Representation. In Proceedings of the European Conference on Computer Vision (ECCV), 2024.

- Xie, D.; Xu, Z.; Hong, Y.; Tan, H.; Liu, D.; Liu, F.; Kaufman, A.; Zhou, Y. Progressive Autoregressive Video Diffusion Models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, 2025.

- Xing, J.; Xia, M.; Zhang, Y.; Chen, H.; Yu, W.; Liu, H.; Wang, X.; Wong, T.T.; Shan, Y. DynamiCrafter: Animating Open-Domain Images with Video Diffusion Priors. In Proceedings of the European Conference on Computer Vision (ECCV), 2024.

- Yang, Z.; Teng, J.; Zheng, W.; Ding, M.; Huang, S.; Xu, J.; Yang, Y.; Hong, W.; Zhang, X.; Feng, G.; et al. CogVideoX: Text-to-Video Diffusion Models with An Expert Transformer. arXiv 2024, arXiv:2408.06072.

- Yang, X.; Tan, Z.; Nie, X.; Li, H. IPO: Iterative Preference Optimization for Text-to-Video Generation. arXiv arXiv:2502.02088.

- Yin, T.; Zhang, Q.; Zhang, R.; Freeman, W.T.; Durand, F.; Shechtman, E.; Huang, X. From Slow Bidirectional to Fast Autoregressive Video Diffusion Models. In Proceedings of the Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2025. [Google Scholar]

- Yin, Y.; Feng, Y.; Yang, Y.; Tang, Z.; Zhang, Z.; Yang, Z.; Jiao, B.; Chen, J.; Li, J.; Zhou, S.; et al. Step-Video-T2V Technical Report: The Practice, Challenges, and Future of Video Foundation Model. arXiv arXiv:2502.10248.

- Yuan, S.; Huang, J.; Xu, Y.; Liu, Y.; Zhang, S.; Shi, Y.; Zhu, R.; Cheng, X.; Luo, J.; Yuan, L. ChronoMagic-Bench: A Benchmark for Metamorphic Evaluation of Text-to-Time-lapse Video Generation. In Proceedings of the Advances in Neural Information Processing Systems 37 (NeurIPS 2024) Datasets and Benchmarks Track, 2024. [Google Scholar]

- Yuan, S.; Huang, J.; Shi, Y.; Xu, Y.; Zhu, R.; Lin, B.; Cheng, X.; Yuan, L.; Luo, J. MagicTime: Time-lapse Video Generation Models as Metamorphic Simulators. In Proceedings of the Advances in Neural Information Processing Systems 37 (NeurIPS 2024) Datasets and Benchmarks Track, 2024. [Google Scholar]

- Yuan, X.; Baek, J.; Xu, K.; Tov, O.; Fei, H. Inflation With Diffusion: Efficient Temporal Adaptation for Text-to-Video Super-Resolution. In Proceedings of the Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV) Workshops, January 2024; pp. 489–496. [Google Scholar]

- Yuan, S.; Huang, J.; He, X.; Ge, Y.; Shi, Y.; Chen, L.; Luo, J.; Yuan, L. Identity-Preserving Text-to-Video Generation by Frequency Decomposition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2025.

- Zhang, G.; Zhang, T.; Niu, G.; Tan, Z.; Bai, Y.; Yang, Q. CAMEL: CAusal Motion Enhancement Tailored for Lifting Text-driven Video Editing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2024; pp. 12345–12354.

- Zhang, R.; Li, W.; Chen, H.; Wang, Y.; Liu, J. Style-A-Video: Agile Diffusion for Arbitrary Text-Based Video Style Transfer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, 2024.

- Zhang, Z.; Liao, J.; Li, M.; Dai, Z.; Qiu, B.; Zhu, S.; Qin, L.; Wang, W. Tora: Trajectory-oriented Diffusion Transformer for Video Generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2025.

- Zhang, D.J.; Wu, J.Z.; Liu, J.W.; et al. Show-1: Marrying Pixel and Latent Diffusion Models for Text-to-Video Generation. International Journal of Computer Vision 2025, 133, 1879–1893.

- Zhao, R.; Gu, Y.; Wu, J.Z.; Zhang, D.J.; Liu, J.W.; Wu, W.; Keppo, J.; Shou, M.Z. MotionDirector: Motion Customization of Text-to-Video Diffusion Models. In Proceedings of the European Conference on Computer Vision (ECCV), 2024.

- Zhou, Z.; Yang, Y.; Yang, Y.; He, T.; Peng, H.; Qiu, K.; Dai, Q.; Qiu, L.; Luo, C.; Liu, L. HiTVideo: Hierarchical Tokenizers for Enhancing Text-to-Video Generation with Autoregressive Large Language Models. arXiv 2025, arXiv:2503.11513.

- Zhu, Z.; Feng, X.; Chen, D.; Yuan, J.; Qiao, C.; Hua, G. Exploring Pre-trained Text-to-Video Diffusion Models for Referring Video Object Segmentation. arXiv 2024, arXiv:cs.

- Brooks, T.; Peebles, B.; Holmes, C.; DePue, W.; Guo, Y.; Jing, L.; Schnurr, D.; Taylor, J.; Luhman, T.; Luhman, E.; et al. Improving Image Generation with Better Captions. 2024. Available online: https://cdn.openai.com/papers/dall-e-3.pdf.

- Esser, P.; Rombach, R.; Ommer, B. Taming Transformers for High-Resolution Image Synthesis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2021; pp. 12873–12883.

- Schuhmann, C.; Köpf, A.; Coombes, T.; Vencu, R.; Trom, B.; Beaumont, R. LAION-COCO: 600M synthetic captions from LAION2B-en. 2022. Available online: https://laion.ai/blog/laion-coco/.

- Ravi, N.; Gabeur, V.; Hu, Y.T.; Hu, R.; Ryali, C.; Ma, T.; Khedr, H.; Rädle, R.; Rolland, C.; Gustafson, L.; et al. SAM 2: Segment Anything in Images and Videos. arXiv 2024, arXiv:2408.00714.

| No. | Authors | Publication title |

|---|---|---|

| 1 | Bahmani et al. [18] | AC3D: Analyzing and Improving 3D Camera Control in Video Diffusion Transformers. |

| 2 | Bao et al. [19] | Vidu: a Highly Consistent, Dynamic and Skilled Text-to-Video Generator with Diffusion Models. |

| 3 | Cai et al. [20] | DiTCtrl: Exploring Attention Control in Multi-Modal Diffusion Transformer for Tuning-Free Multi-Prompt Longer Video Generation. |

| 4 | Chen et al. [21] | ShareGPT4Video: Improving Video Understanding and Generation with Better Captions. |

| 5 | Chen et al. [22] | VideoCrafter2: Overcoming Data Limitations for High-Quality Video Diffusion Models. |

| 6 | Choi et al. [23] | We’ll Fix it in Post: Improving Text-to-Video Generation with Neuro-Symbolic Feedback. |

| 7 | Cuttano et al. [24] | SAMWISE: Infusing Wisdom in SAM2 for Text-Driven Video Segmentation. |

| 8 | Dalal et al. [25] | One-Minute Video Generation with Test-Time Training. |

| 9 | Fei et al. [26] | Dysen-VDM: Empowering Dynamics-aware Text-to-Video Diffusion with LLMs. |

| 10 | Gal et al. [27] | Breathing Life Into Sketches Using Text-to-Video Priors. |

| 11 | Girdhar et al. [28] | Factorizing Text-to-Video Generation by Explicit Image Conditioning. |

| 12 | Guo et al. [29] | SparseCtrl: Adding Sparse Controls to Text-to-Video Diffusion Models. |

| 13 | Guo et al. [30] | Can You Count to Nine? A Human Evaluation Benchmark for Counting Limits in Modern Text-to-Video Models. |

| 14 | Guo et al. [31] | T2VPhysBench: A First-Principles Benchmark for Physical Consistency in Text-to-Video Generation. |

| 15 | Henschel et al. [32] | StreamingT2V: Consistent, Dynamic, and Extendable Long Video Generation from Text. |

| 16 | Huang et al. [33] | VBench: Comprehensive Benchmark Suite for Video Generative Models. |

| 17 | Jeong et al. [34] | VMC: Video Motion Customization using Temporal Attention Adaption for Text-to-Video Diffusion Models. |

| 18 | Jiang et al. [35] | VideoBooth: Diffusion-based Video Generation with Image Prompts. |

| 19 | Ju et al. [36] | MiraData: A Large-Scale Video Dataset with Long Durations and Structured Captions. |

| 20 | Kou et al. [37] | Subjective-Aligned Dataset and Metric for Text-to-Video Quality Assessment. |

| 21 | Li et al. [38] | PhyT2V: LLM-Guided Iterative Self-Refinement for Physics-Grounded Text-to-Video Generation. |

| 22 | Li et al. [39] | Training-free Guidance in Text-to-Video Generation via Multimodal Planning and Structured Noise Initialization. |

| 23 | Liao et al. [40] | Evaluation of Text-to-Video Generation Models: A Dynamics Perspective. |

| 24 | Lin et al. [41] | Open-Sora Plan: Open-Source Large Video Generation Model. |

| 25 | Liu et al. [42] | VideoDPO: Omni-Preference Alignment for Video Diffusion Generation. |

| 26 | Liu et al. [43] | Timestep Embedding Tells: It’s Time to Cache for Video Diffusion Model. |

| 27 | Lv et al. [44] | GPT4Motion: Scripting Physical Motions in Text-to-Video Generation via Blender-Oriented GPT Planning. |

| 28 | Ma et al. [45] | Follow Your Pose: Pose-Guided Text-to-Video Generation Using Pose-Free Videos. |

| 29 | Menapace et al. [46] | Snap Video: Scaled Spatiotemporal Transformers for Text-to-Video Synthesis. |

| 30 | Miao et al. [47] | T2VSafetyBench: Evaluating the Safety of Text-to-Video Generative Models. |

| 31 | Mohamed and Lucke-Wold [48] | Text-to-Video Generative Artificial Intelligence: Sora in Neurosurgery. |

| 32 | Nan et al. [49] | OpenVid-1M: A Large-Scale High-Quality Dataset for Text-to-Video Generation. |

| 33 | Qin et al. [50] | xGen-VideoSyn-1: High-Fidelity Text-to-Video Synthesis with Compressed Representations. |

| 34 | Qing et al. [51] | Hierarchical Spatio-temporal Decoupling for Text-to-Video Generation. |

| 35 | Qu et al. [52] | Exploring AIGC Video Quality: A Focus on Visual Harmony, Video-Text Consistency and Domain Distribution Gap. |

| 36 | Rawte et al. [53] | ViBe: A Text-to-Video Benchmark for Evaluating Hallucination in Large Multimodal Models. |

| 37 | Ren et al. [54] | Customize-A-Video: One-Shot Motion Customization of Text-to-Video Diffusion Models. |

| 38 | Sharan et al. [55] | Neuro-Symbolic Evaluation of Text-to-Video Models using Formal Verification. |

| 39 | Si et al. [56] | FreeU: Free Lunch in Diffusion U-Net. |

| 40 | Tan et al. [57] | Mimir: Improving Video Diffusion Models for Precise Text Understanding. |

| 41 | Tian et al. [58] | VideoTetris: Towards Compositional Text-to-Video Generation. |

| 42 | Wang et al. [59] | A Recipe for Scaling up Text-to-Video Generation with Text-free Videos. |

| 43 | Wang et al. [60] | LaVie: High-Quality Video Generation with Cascaded Latent Diffusion Models. |

| 44 | Wang and Yang [61] | VidProM: A Million-scale Real Prompt-Gallery Dataset for Text-to-Video Diffusion Models. |

| 45 | Sun et al. [62] | T2V-CompBench: A Comprehensive Benchmark for Compositional Text-to-video Generation. |

| 46 | Wang et al. [63] | LOVE: Benchmarking and Evaluating Text-to-Video Generation and Video-to-Text Interpretation. |

| 47 | Wang et al. [64] | T2VBench: Benchmarking Temporal Dynamics for Text-to-Video Generation. |

| 48 | Wang et al. [65] | WISA: World Simulator Assistant for Physics-Aware Text-to-Video Generation. |

| 49 | Wei et al. [66] | DreamVideo: Composing Your Dream Videos with Customized Subject and Motion. |

| 50 | Weng et al. [67] | ART-V: Auto-Regressive Text-to-Video Generation with Diffusion Models. |

| 51 | Wu et al. [68] | Towards A Better Metric for Text-to-Video Generation. |

| 52 | Wu et al. [69] | DragAnything: Motion Control for Anything Using Entity Representation. |

| 53 | Xie et al. [70] | Progressive Autoregressive Video Diffusion Models. |

| 54 | Xing et al. [71] | DynamiCrafter: Animating Open-Domain Images with Video Diffusion Priors. |

| 55 | Yang et al. [72] | CogVideoX: Text-to-Video Diffusion Models with An Expert Transformer. |

| 56 | Yang et al. [73] | IPO: Iterative Preference Optimization for Text-to-Video Generation. |

| 57 | Yin et al. [74] | From Slow Bidirectional to Fast Autoregressive Video Diffusion Models. |

| 58 | Yin et al. [75] | Step-Video-T2V Technical Report: The Practice, Challenges, and Future of Video Foundation Model. |

| 59 | Yuan et al. [76] | ChronoMagic-Bench: A Benchmark for Metamorphic Evaluation of Text-to-Time-lapse Video Generation. |

| 60 | Yuan et al. [77] | MagicTime: Time-lapse Video Generation Models as Metamorphic Simulators. |

| 61 | Yuan et al. [78] | Inflation With Diffusion: Efficient Temporal Adaptation for Text-to-Video Super-Resolution. |

| 62 | Yuan et al. [79] | Identity-Preserving Text-to-Video Generation by Frequency Decomposition. |

| 63 | Zhang et al. [80] | CAMEL: CAusal Motion Enhancement Tailored for Lifting Text-driven Video Editing. |

| 64 | Zhang et al. [81] | Style-A-Video: Agile Diffusion for Arbitrary Text-Based Video Style Transfer. |

| 65 | Zhang et al. [82] | Tora: Trajectory-oriented Diffusion Transformer for Video Generation. |

| 66 | Zhang et al. [83] | Show-1: Marrying Pixel and Latent Diffusion Models for Text-to-Video Generation. |

| 67 | Zhao et al. [84] | MotionDirector: Motion Customization of Text-to-Video Diffusion Models. |

| 68 | Zhou et al. [85] | HiTVideo: Hierarchical Tokenizers for Enhancing Text-to-Video Generation with Autoregressive Large Language Models. |

| 69 | Zhu et al. [86] | Exploring Pre-trained Text-to-Video Diffusion Models for Referring Video Object Segmentation. |

| Reference | Model Architecture | Methods | Training Strategy | Training Dataset | Project Code |

|---|---|---|---|---|---|

| Yin et al. | Transformer-based diffusion model | Bidirectional to autoregressive transformer adaptation + Distribution matching distillation + Asymmetric distillation + KV caching | Teacher-student distillation with ODE-based initialization + Asymmetric supervision + Training on short clips | UCF-101 (~13,000 clips) | https://github.com/tianweiy/CausVid |

| Weng et al. | Pretrained image diffusion model (Stable Diffusion 2.1) + Lightweight T2I-Adapter + Masked diffusion mask prediction | Autoregressive generation + Masked diffusion to reduce drifting | Learn simple continual motions + Noise augmentation + Anchored conditioning on initial frame | WebVid-10M | https://github.com/WarranWeng/ART.V |

| Xie et al. | Transformer-based latent diffusion model | Progressive noise assignment to latent frames + Autoregressive video denoising with overlapping attention windows | Autoregressive training with progressively increasing noise levels on latent frames | Large-scale, filtered datasets with ~1 million videos and 2.3 billion images (unnamed) | https://github.com/desaixie/pa_vdm |

| Henschel et al. | Autoregressive diffusion with CAM for short-term and APM for long-term appearance consistency + Video enhancer | Autoregressive diffusion with CAM and APM + Randomized blending for video enhancement | Initialization (pretrained model) + Autoregressive chunk generation + Streaming refinement with enhancer | Large-scale video collection from public sources, resized to 720×720 (unnamed) | https://github.com/Picsart-AI-Research/StreamingT2V |

| Tian et al. | Spatio-temporal compositional diffusion + ControlNet-based auto-regressive diffusion + Reference frame attention | Compositional video generation with spatial-temporal region modeling + Consistency regularization + Enhanced data preprocessing | Train auto-regressive model on enhanced video-text dataset | Filtered Panda-70M | https://github.com/YangLing0818/VideoTetris |

| Qin et al. | Video Variational Autoencoder (VidVAE) + Diffusion Transformer (DiT) with spatial & temporal attention | Latent Diffusion Model with Video VAE compression + Divide-and-merge strategy + Spatial-temporal self-attention | Progressive three-stage training at video resolutions of 240p, 480p, and 720p | 13M+ curated video-text pairs (unnamed) | Not released |

| Dalal et al. | Pretrained Diffusion Transformer (e.g., CogVideoX) augmented with adaptive TTT layers | Test-Time Training (TTT) layers updating during inference + Segmented video generation + Gating between local attention and TTT layers | Progressive fine-tuning on segmented cartoon videos from 3-second clips up to 63-second concatenations | Curated ~7 hours of Tom & Jerry videos + Human-generated storyboards | https://github.com/test-time-training/ttt-video-dit |

| Bao et al. | Diffusion model + U-ViT transformer backbone + Video autoencoder for compression | Diffusion model with U-ViT backbone + Video autoencoder + Transformer processes 3D patches + Re-captioning text | Train video captioner to auto-annotate video-text pairs + Multi-length video training | Large-scale text-video datasets with auto-generated captions (unnamed) | https://www.shengshu.com/en |

| Lin et al. | Diffusion Transformer (DiT) based STDiT; PixArt-α pretrained T2I backbone + spatial-temporal attention + pretrained spatial VAE | Wavelet-Flow Variational Autoencoder (VAE) + Joint Image-Video Skiparse Denoiser + Conditional controller modules | Large-scale video pretraining on ~70M video clips, image pretraining from large image datasets, image-to-video finetuning | Curated ~70M video dataset from public sources; resized 256×256 | https://github.com/PKU-YuanGroup/Open-Sora-Plan |

| Yang et al. | Transformer-based diffusion with 3D full attention + 3D VAE encoder-decoder + Expert Transformer with adaptive LayerNorm + 3D rotary positional embeddings | Latent diffusion denoising conditioned on expert fused text-video embeddings + LLaMA2 for video caption generation | Progressive training with mask modeling and long sequence modeling, separate VAE training | ~35M curated video-text pairs + 2B images from LAION-5B and COYO-700M | https://github.com/THUDM/CogVideo |

| Reference | Model Architecture | Methods | Training Strategy | Training Dataset | Project Code |

|---|---|---|---|---|---|

| Liu et al. | Post-training adaptation of existing diffusion models; no architecture change | Direct Preference Optimization (DPO) + Omni-preference alignment + VideoDPO Loss | Multi-stage: T2V pretraining → DPO fine-tuning with re-weighted preference pairs | VidProM dataset with 10,000 human prompts + millions of videos for preference alignment | https://github.com/CIntellifusion/VideoDPO |

| Yin et al. | Video-VAE with causal Res3D blocks + DiT with 3D attention + bilingual text encoders + Video-DPO module | Latent video diffusion, Flow Matching training, multilingual text encoding, preference-based Direct Preference Optimization (Video-DPO) | Multi-stage training: Video-VAE pretraining → DiT diffusion model training → DPO finetuning | Large-scale, filtered video corpus (unnamed) | https://github.com/stepfun-ai/Step-Video-T2V |

| Wang et al. | Two-branch diffusion model: content branch (text-conditioned), motion branch (image-conditioned) with shared weights | Disentangled content-motion learning, temporal coherence loss | Joint optimization on video-text and text-free video data; semi-supervised approach | WebVid-10M video-text pairs + large-scale unlabeled videos from YouTube | https://github.com/ali-vilab/Vgen |

| Wang et al. | Enhanced T2V model with physics-aware modules | Decomposition of physical principles into textual, qualitative, and quantitative components; MoPA attention; Physical classifier | Joint training with generative loss and physical classifier supervision | WISA-32K: 32,000 videos on 17 physical laws (dynamics, thermodynamics, optics) | https://github.com/360CVGroup/WISA |

| Yuan et al. | Diffusion Transformer (DiT) backbone with MagicAdapter modules in temporal layers, enhanced text encoder | MagicAdapter for physics encoding; Dynamic Frame Extraction for adaptive sampling + Magic Text-Encoder for prompt understanding + auto-captioning for annotation | Fine-tuning pretrained T2V models on metamorphic time-lapse videos | Curated ChronoMagic dataset (~2,265 annotated metamorphic time-lapse videos) | https://github.com/PKU-YuanGroup/MagicTime |

| Qing et al. | Diffusion model with unified denoiser for spatial-temporal reasoning + motion and appearance cue extraction modules | Decoupled spatial-temporal diffusion + hierarchical conditioning on motion and appearance variations | Two-step training: spatial prior from text → temporal motion from spatial priors | MSR-VTT (10,000 video-text pairs) | https://github.com/ali-vilab/Vgen |

| Fei et al. | Latent video diffusion model + Dysen module (action extractor, DSG constructor, LLM-based enrichment, graph Transformer encoder) | Dynamic Scene Graphs (DSGs) + LLMs (ChatGPT) + Recurrent Graph Transformer (RGT) | Pre-training on large-scale video-text datasets → Fine-tuning Dysen module and RGT | 3M WebVid (pre-training) + UCF-101, MSR-VTT, ActivityNet (fine-tuning) | https://github.com/scofield7419/Dysen |

| Lv et al. | Pipeline of GPT-4 (planner) + Blender physics engine (simulator) + ControlNet-augmented Stable Diffusion | GPT-4 generates Blender scripts for simulation + Blender simulates motion + Stable Diffusion generates frames | Training-free framework; uses pretrained GPT-4 and Stable Diffusion; no fine-tuning | None | https://github.com/jiaxilv/GPT4Motion |

| Zhou et al. | 3D causal VAE with hierarchical discrete token layers + LLaMA 3B for token generation conditioned on frozen Flan-T5-XL embeddings | Hierarchical autoregressive token generation from coarse semantic to fine visual detail | Two-stage training: Training of the 3D causal VAE → Training of LLM autoregressively with text conditioning | Pexels Videos dataset | Not released |

| Tan et al. | Latent diffusion backbone + Dual-text encoding (ViT-style encoder + decoder-only LLM) + Token Fuser | Token fusion to harmonize encoder and LLM embeddings + Semantic stabilization | Joint training on large video-text datasets with supervised latent denoising conditioned on fused semantic embeddings | Large-scale video-text corpora (unnamed) | https://lucaria-academy.github.io/Mimir |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).